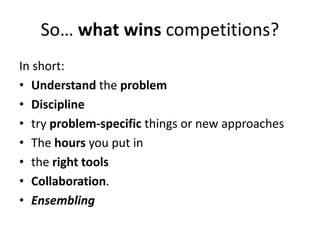

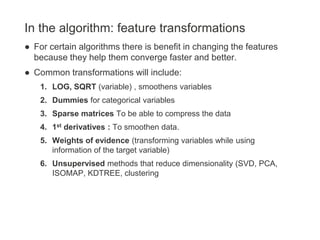

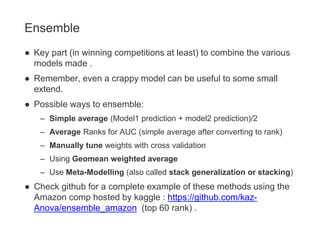

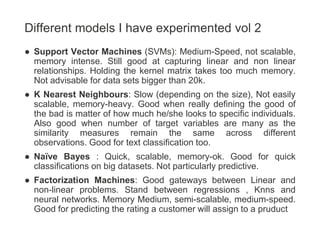

The document outlines strategies for succeeding in machine learning competitions, particularly on Kaggle: understanding the problem, collaborating effectively, and modeling iteratively. It covers methods for data preparation, feature engineering, model selection, and hyperparameter tuning, and stresses the importance of cross-validation and ensembling. The author also shares insights from participating in numerous competitions and suggests tools and resources for further learning.