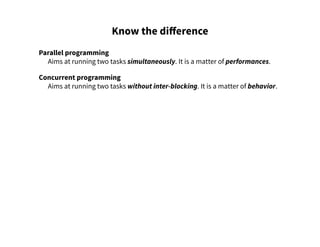

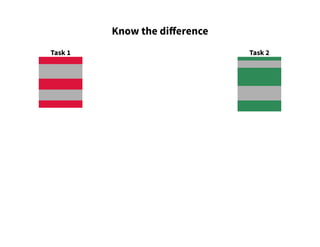

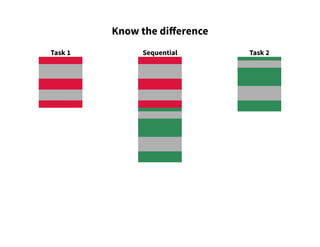

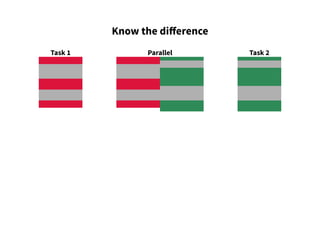

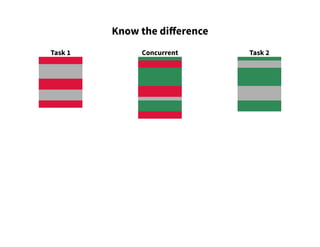

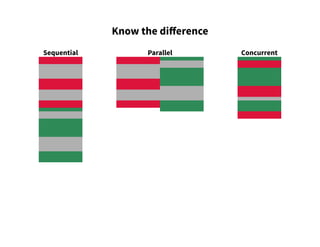

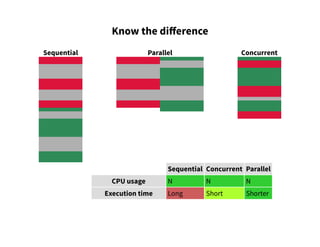

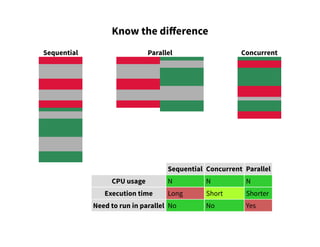

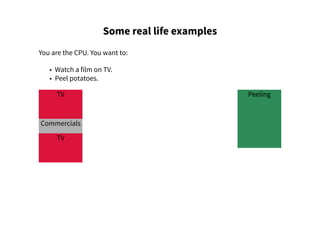

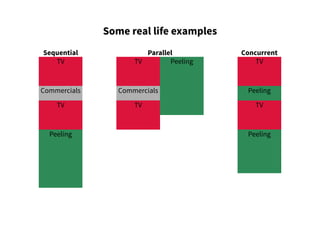

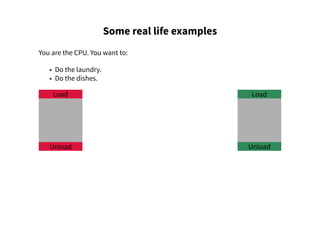

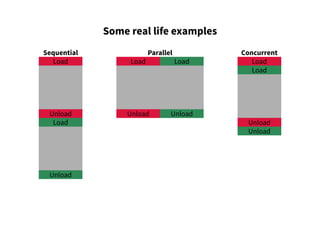

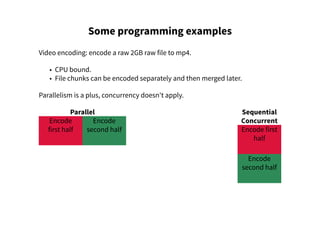

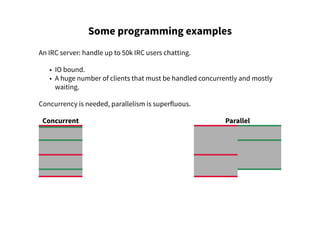

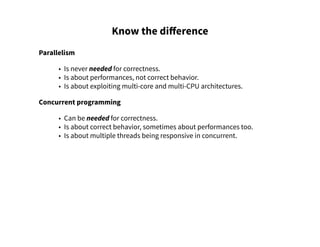

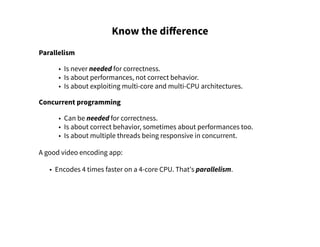

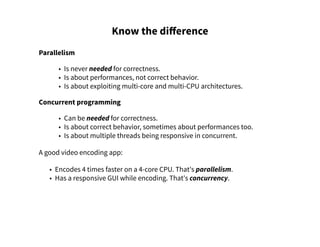

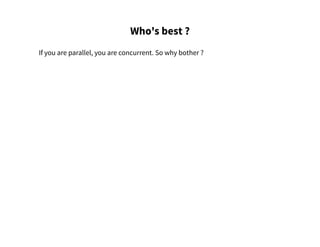

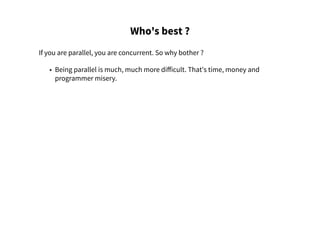

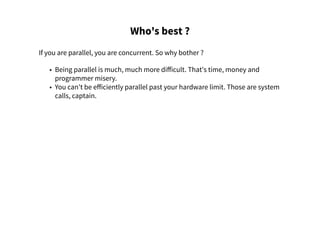

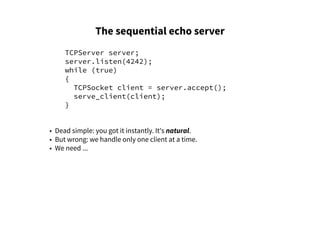

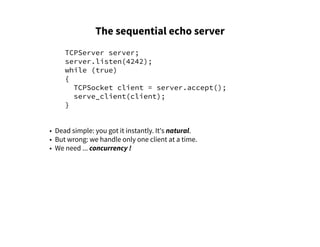

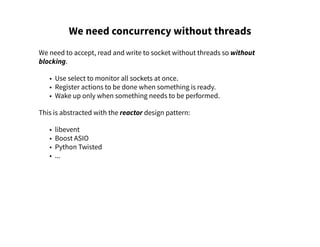

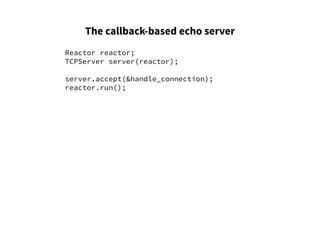

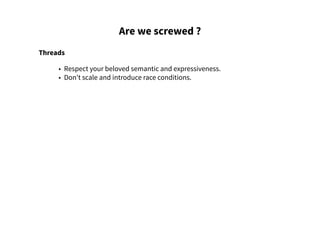

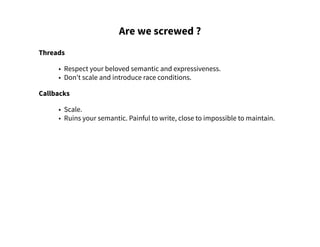

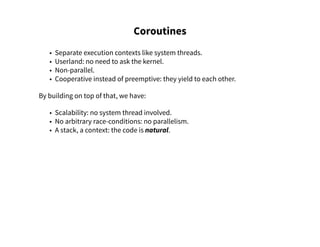

The document discusses the differences between concurrent and parallel programming, emphasizing their respective purposes in managing tasks and system resources. It provides practical examples of programming scenarios, such as video encoding and server design, to illustrate the concepts of concurrency and parallelism, as well as the challenges and complexities associated with each approach. The text concludes with a focus on coroutines as a solution for achieving concurrency without the complications of traditional threading.

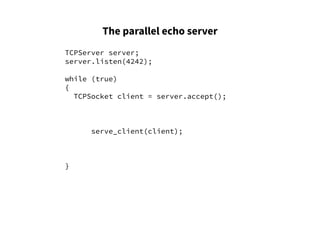

![The parallel echo server

TCPServer server;

server.listen(4242);

std::vector<std::thread> threads;

while (true)

{

TCPSocket client = server.accept();

// A std::thread starts running as soon as it is constructed.

// The socket is moved into the lambda so it outlives this loop iteration.

std::thread client_thread(

[client = std::move(client)]() mutable

{

serve_client(client);

});

threads.push_back(std::move(client_thread));

}

• Almost as simple and still natural.

• To add the concurrency property, we just added a concurrency construct to the existing code.](https://image.slidesharecdn.com/highly-concurrent-yet-natural-programming-141111025519-conversion-gate02-151114230925-lva1-app6891/85/Highly-concurrent-yet-natural-programming-36-320.jpg)

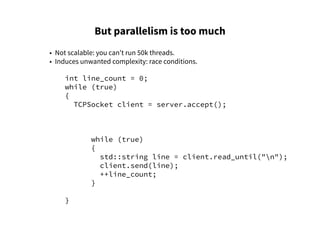

![But parallelism is too much

• Not scalable: you can't run 50k threads.

• Induces unwanted complexity: race conditions.

int line_count = 0;

while (true)

{

TCPSocket client = server.accept();

std::thread client_thread(

[&]

{

while (true)

{

std::string line = client.read_until("\n");

client.send(line);

++line_count;

}

});

}](https://image.slidesharecdn.com/highly-concurrent-yet-natural-programming-141111025519-conversion-gate02-151114230925-lva1-app6891/85/Highly-concurrent-yet-natural-programming-40-320.jpg)
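The race in the slide above comes from several OS threads incrementing a plain `int` at the same time. The following is a minimal sketch of one conventional fix using standard C++ threads and `std::atomic`; it is not taken from the talk. The function `count_lines` and its parameters are hypothetical stand-ins for the echo loop, with no real sockets involved.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// Hypothetical stand-in for the echo loop: each "client" thread handles
// `lines_per_client` lines and bumps the shared counter once per line.
int count_lines(int clients, int lines_per_client)
{
  std::atomic<int> line_count{0};  // atomic: concurrent ++ is well defined
  std::vector<std::thread> threads;
  for (int c = 0; c < clients; ++c)
    threads.emplace_back([&line_count, lines_per_client] {
      for (int i = 0; i < lines_per_client; ++i)
        ++line_count;  // atomic read-modify-write, no lost updates
    });
  for (auto& t : threads)
    t.join();  // wait for every client before reading the total
  return line_count.load();
}
```

With a plain `int` counter the total could come out lower than `clients * lines_per_client`; with the atomic counter, `count_lines(4, 10000)` returns 40000. This removes the data race but does nothing about the scalability limit of one OS thread per client.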

![Coroutines-based echo server

TCPServer server; server.listen(4242);

std::vector<Thread> threads;

int lines_count = 0;

while (true)

{

TCPSocket client = server.accept();

Thread t([&lines_count, client = std::move(client)]() mutable {

try

{

while (true)

{

++lines_count;

client.send(client.read_until("\n"));

}

}

catch (ConnectionClosed const&) {}

});

threads.push_back(std::move(t));

}](https://image.slidesharecdn.com/highly-concurrent-yet-natural-programming-141111025519-conversion-gate02-151114230925-lva1-app6891/85/Highly-concurrent-yet-natural-programming-69-320.jpg)

![Coroutine scheduling

reactor::Scheduler sched;

reactor::Thread t1(sched,

[&]

{

print("Hello 1");

reactor::yield();

print("Bye 1");

});

reactor::Thread t2(sched,

[&]

{

print("Hello 2");

reactor::yield();

print("Bye 2");

});

sched.run();

Hello 1

Hello 2

Bye 1

Bye 2](https://image.slidesharecdn.com/highly-concurrent-yet-natural-programming-141111025519-conversion-gate02-151114230925-lva1-app6891/85/Highly-concurrent-yet-natural-programming-73-320.jpg)
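To make the interleaving above concrete, here is a small model of cooperative round-robin scheduling in plain C++. It is only a sketch under a simplifying assumption: instead of real coroutines, each task is a list of steps, and the boundary between two steps plays the role of `reactor::yield()`. The names `RoundRobin`, `Step`, `Task` and `scheduling_demo` are hypothetical and not part of the reactor library.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// A task is modelled as a list of steps; each boundary between two steps
// stands in for a yield point.
using Step = std::function<void()>;
using Task = std::vector<Step>;

class RoundRobin
{
public:
  void spawn(Task task)
  {
    if (!task.empty())
      _ready.push_back({std::move(task), 0});
  }

  // Run one step of the front task, then requeue it behind the others.
  void run()
  {
    while (!_ready.empty())
    {
      Entry e = std::move(_ready.front());
      _ready.pop_front();
      e.task[e.next++]();
      if (e.next < e.task.size())
        _ready.push_back(std::move(e));
    }
  }

private:
  struct Entry { Task task; std::size_t next; };
  std::deque<Entry> _ready;
};

// Mirrors the two tasks of the slide, collecting output instead of printing.
std::vector<std::string> scheduling_demo()
{
  std::vector<std::string> out;
  auto print = [&out](char const* s) { out.push_back(s); };
  RoundRobin sched;
  sched.spawn({[&] { print("Hello 1"); }, [&] { print("Bye 1"); }});
  sched.spawn({[&] { print("Hello 2"); }, [&] { print("Bye 2"); }});
  sched.run();
  return out;
}
```

`scheduling_demo()` returns the lines in the same order as the slide: "Hello 1", "Hello 2", "Bye 1", "Bye 2". Only one step runs at a time, which is why code between two yield points cannot be interrupted by another task.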

![Sleeping and waiting

reactor::Thread t1(sched,

[&]

{

print("Hello 1");

reactor::sleep(500_ms);

print("Bye 1");

});

reactor::Thread t2(sched,

[&]

{

print("Hello 2");

reactor::yield();

print("World 2");

reactor::yield();

print("Bye 2");

});

Hello 1

Hello 2

World 2

Bye 2

Bye 1](https://image.slidesharecdn.com/highly-concurrent-yet-natural-programming-141111025519-conversion-gate02-151114230925-lva1-app6891/85/Highly-concurrent-yet-natural-programming-76-320.jpg)

![Sleeping and waiting

reactor::Thread t1(sched,

[&]

{

print("Hello 1");

reactor::sleep(500_ms);

print("Bye 1");

});

reactor::Thread t2(sched,

[&]

{

print("Hello 2");

reactor::yield();

print("World 2");

reactor::wait(t1); // Wait

print("Bye 2");

});

Hello 1

Hello 2

World 2

Bye 1

Bye 2](https://image.slidesharecdn.com/highly-concurrent-yet-natural-programming-141111025519-conversion-gate02-151114230925-lva1-app6891/85/Highly-concurrent-yet-natural-programming-79-320.jpg)
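The two orderings shown in the "Sleeping and waiting" slides (yielding versus waiting on `t1`) can be reproduced with a small model. This is a sketch under stated assumptions, not the reactor implementation: tasks are lists of steps, each step returns what it wants next (yield, sleep, or wait on another task), and time is a virtual clock that only advances when nothing is runnable. `VirtualScheduler`, `Action` and `sleeping_demo` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// What a step asks for once it returns (ignored for a task's last step).
struct Action
{
  enum Kind { Yield, Sleep, Wait, Done };
  Kind kind;
  int arg;  // Sleep: duration in ms; Wait: id of the task to wait for
};
using Step = std::function<Action()>;

class VirtualScheduler
{
public:
  // Register a non-empty list of steps and return the task id.
  int spawn(std::vector<Step> steps)
  {
    assert(!steps.empty());
    int id = static_cast<int>(_tasks.size());
    _tasks.push_back(Task{std::move(steps), 0, false, {}});
    _ready.push_back(id);
    return id;
  }

  // Steps must not call spawn() in this sketch.
  void run()
  {
    while (!_ready.empty() || !_sleeping.empty())
    {
      if (_ready.empty())
      {
        // Nothing is runnable: jump the virtual clock to the next wake-up.
        auto first = _sleeping.begin();
        _now = first->first;
        _ready.push_back(first->second);
        _sleeping.erase(first);
        continue;
      }
      int id = _ready.front();
      _ready.pop_front();
      Task& task = _tasks[id];
      Action a = task.steps[task.next++]();
      if (task.next == task.steps.size())
      {
        task.done = true;  // last step ran: wake every task waiting on it
        for (int waiter : task.waiters)
          _ready.push_back(waiter);
        task.waiters.clear();
      }
      else if (a.kind == Action::Sleep)
        _sleeping.insert({_now + a.arg, id});
      else if (a.kind == Action::Wait && !_tasks[a.arg].done)
        _tasks[a.arg].waiters.push_back(id);
      else
        _ready.push_back(id);  // Yield, or Wait on a finished task
    }
  }

private:
  struct Task { std::vector<Step> steps; std::size_t next;
                bool done; std::vector<int> waiters; };
  std::vector<Task> _tasks;
  std::deque<int> _ready;
  std::multimap<int, int> _sleeping;  // wake-up time -> task id
  int _now = 0;
};

std::vector<std::string> sleeping_demo(bool t2_waits_for_t1)
{
  std::vector<std::string> out;
  auto print = [&out](char const* s) { out.push_back(s); };
  VirtualScheduler sched;
  int t1 = sched.spawn({
    [&]() -> Action { print("Hello 1"); return Action{Action::Sleep, 500}; },
    [&]() -> Action { print("Bye 1"); return Action{Action::Done, 0}; },
  });
  sched.spawn({
    [&]() -> Action { print("Hello 2"); return Action{Action::Yield, 0}; },
    [&, t1]() -> Action { print("World 2");
      return t2_waits_for_t1 ? Action{Action::Wait, t1}
                             : Action{Action::Yield, 0}; },
    [&]() -> Action { print("Bye 2"); return Action{Action::Done, 0}; },
  });
  sched.run();
  return out;
}
```

`sleeping_demo(false)` ends with "Bye 2" then "Bye 1", because the sleeping task only resumes once nothing else is runnable. `sleeping_demo(true)` ends with "Bye 1" then "Bye 2", because the second task is parked until the first one finishes.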

![Synchronization: signals

reactor::Signal task_available;

std::vector<Task> tasks;

reactor::Thread handler([&] {

while (true)

{

if (!tasks.empty()) // 1

{

std::vector<Task> mytasks = std::move(tasks);

for (auto& task: mytasks)

; // Handle task

}

else

reactor::wait(task_available); // 4

}

});

tasks.push_back(...); // 2

task_available.signal(); // 3](https://image.slidesharecdn.com/highly-concurrent-yet-natural-programming-141111025519-conversion-gate02-151114230925-lva1-app6891/85/Highly-concurrent-yet-natural-programming-82-320.jpg)

![Synchronization: channels

reactor::Channel<Task> tasks;

reactor::Thread handler([&] {

while (true)

{

Task t = tasks.get();

// Handle task

}

});

tasks.put(...);](https://image.slidesharecdn.com/highly-concurrent-yet-natural-programming-141111025519-conversion-gate02-151114230925-lva1-app6891/85/Highly-concurrent-yet-natural-programming-83-320.jpg)
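The talk does not show how `reactor::Channel` is built. As a rough analogue for OS threads (an assumption, not the reactor implementation), a blocking channel can be sketched with a mutex, a condition variable and a queue. Waiting with a predicate means a `put` that happens before `get` starts waiting is never missed, which is the hazard the numbered signal slide points at. `Channel` here and `channel_demo` are hypothetical names.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <queue>
#include <utility>
#include <vector>

template <typename T>
class Channel
{
public:
  // Enqueue a value and wake one waiting consumer, if any.
  void put(T value)
  {
    {
      std::lock_guard<std::mutex> lock(_mutex);
      _queue.push(std::move(value));
    }
    _available.notify_one();
  }

  // Block until a value is available, then dequeue it (FIFO order).
  T get()
  {
    std::unique_lock<std::mutex> lock(_mutex);
    // The predicate is checked before sleeping, so an earlier put() is seen.
    _available.wait(lock, [this] { return !_queue.empty(); });
    T value = std::move(_queue.front());
    _queue.pop();
    return value;
  }

private:
  std::mutex _mutex;
  std::condition_variable _available;
  std::queue<T> _queue;
};

// Single-threaded usage: values come out in the order they were put in.
std::vector<int> channel_demo()
{
  Channel<int> tasks;
  tasks.put(1);
  tasks.put(2);
  tasks.put(3);
  std::vector<int> handled;
  for (int i = 0; i < 3; ++i)
    handled.push_back(tasks.get());
  return handled;
}
```

In a real program the `get()` loop would run in its own thread, as the handler does in the slide; the demo stays single-threaded only to keep the behaviour deterministic.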

![Mutexes

But you said no race conditions! You lied again!

reactor::Thread t([&] {

while (true)

{

for (auto& socket: sockets)

socket.send("YO");

}

});

{

sockets.push_back(...);

}](https://image.slidesharecdn.com/highly-concurrent-yet-natural-programming-141111025519-conversion-gate02-151114230925-lva1-app6891/85/Highly-concurrent-yet-natural-programming-85-320.jpg)

![Mutexes

But you said no race conditions! You lied again!

reactor::Mutex mutex;

reactor::Thread t([&] {

while (true)

{

reactor::wait(mutex);

for (auto& socket: sockets)

socket.send("YO");

mutex.unlock();

}

});

{

reactor::wait(mutex);

sockets.push_back(...);

mutex.unlock();

}](https://image.slidesharecdn.com/highly-concurrent-yet-natural-programming-141111025519-conversion-gate02-151114230925-lva1-app6891/85/Highly-concurrent-yet-natural-programming-86-320.jpg)

![Mutexes

But you said no race conditions! You lied again!

reactor::Mutex mutex;

reactor::Thread t([&] {

while (true)

{

reactor::Lock lock(mutex);

for (auto& socket: sockets)

socket.send("YO");

}

});

{

reactor::Lock lock(mutex);

sockets.push_back(...);

}](https://image.slidesharecdn.com/highly-concurrent-yet-natural-programming-141111025519-conversion-gate02-151114230925-lva1-app6891/85/Highly-concurrent-yet-natural-programming-87-320.jpg)
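One likely reason to prefer the scoped `reactor::Lock` over the manual `wait`/`unlock` pair is exception safety: if `send` throws, a manual `unlock()` is skipped. The sketch below illustrates that with the standard `std::lock_guard` and a toy lock that merely counts calls. `CountingMutex`, `send_all` and both test functions are hypothetical; whether `reactor::Lock` behaves exactly like `std::lock_guard` is an assumption.

```cpp
#include <cassert>
#include <mutex>
#include <stdexcept>

// Toy lock that only counts calls, so the outcome is easy to inspect.
struct CountingMutex
{
  int locks = 0;
  int unlocks = 0;
  void lock() { ++locks; }
  void unlock() { ++unlocks; }
};

// Hypothetical stand-in for the send loop; fails on demand.
void send_all(bool fail)
{
  if (fail)
    throw std::runtime_error("send failed");
}

// Manual lock/unlock: the exception skips unlock(), leaving the lock held.
bool manual_version_leaks_lock()
{
  CountingMutex m;
  try
  {
    m.lock();
    send_all(true);
    m.unlock();  // never reached when send_all throws
  }
  catch (std::runtime_error const&) {}
  return m.locks != m.unlocks;
}

// Scoped guard: the destructor unlocks during stack unwinding.
bool guard_releases_on_throw()
{
  CountingMutex m;
  try
  {
    std::lock_guard<CountingMutex> lock(m);
    send_all(true);
  }
  catch (std::runtime_error const&) {}
  return m.locks == 1 && m.unlocks == 1;
}
```

Both functions return `true`: the manual version ends with one lock and no unlock, while the guarded version balances them even though the body threw.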

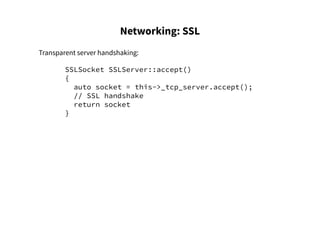

![Networking: SSL

Transparent server handshaking:

reactor::network::SSLServer server(certificate, key);

server.listen(4242);

while (true)

{

auto socket = server.accept();

reactor::Thread([&] { ... });

}](https://image.slidesharecdn.com/highly-concurrent-yet-natural-programming-141111025519-conversion-gate02-151114230925-lva1-app6891/85/Highly-concurrent-yet-natural-programming-91-320.jpg)

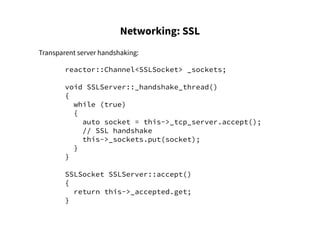

![Networking: SSL

Transparent server handshaking:

void SSLServer::_handshake_thread()

{

while (true)

{

auto socket = this->_tcp_server.accept();

reactor::Thread t(

[&]

{

// SSL handshake

this->_sockets.put(socket);

});

}

}](https://image.slidesharecdn.com/highly-concurrent-yet-natural-programming-141111025519-conversion-gate02-151114230925-lva1-app6891/85/Highly-concurrent-yet-natural-programming-94-320.jpg)