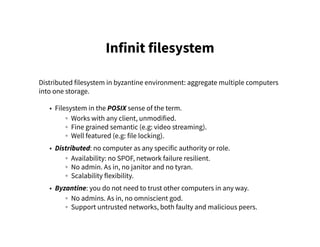

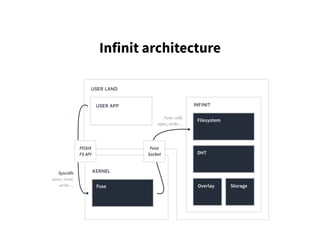

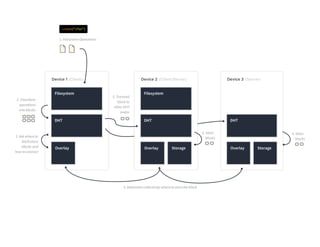

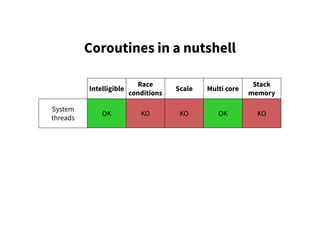

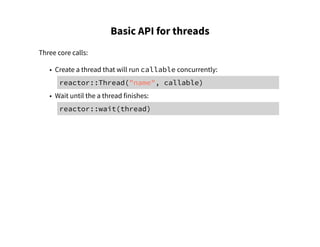

The document details the Infinit Filesystem, a distributed filesystem designed for Byzantine environments that aggregates multiple computers into a unified storage solution, emphasizing its lack of administrative authority and resilience to network failures. It also describes the Reactor library in C++, which supports coroutines and enables safe concurrent programming through a structured API for creating and managing threads. Additionally, it discusses various threading patterns, operations to exploit multicore systems, and background processing techniques for efficiency.

![Reactor in a nutshell

Reactor is a C++ library providing coroutines support, enabling simple and safe

imperative style concurrency.

while (true)

{

auto socket = tcp_server.accept();

new Thread([socket] {

try

{

while (true)

socket->write(socket->read_until("\n"));

}

catch (reactor::network::Error const&)

{}

});

}](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-15-320.jpg)

![Basic API for reactor

reactor::Scheduler sched;

reactor::Thread main(

sched, "main",

[]

{

});

// Will execute until all threads are done.

sched.run();](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-23-320.jpg)

![Basic API for reactor

reactor::Scheduler sched;

reactor::Thread main(

sched, "main",

[]

{

reactor::Thread t("t", [] { print("world"); });

print("hello");

});

// Will execute until all threads are done.

sched.run();](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-24-320.jpg)

![Basic API for reactor

reactor::Scheduler sched;

reactor::Thread main(

sched, "main",

[]

{

reactor::Thread t("t", [] { print("world"); });

print("hello");

reactor::wait(t);

});

// Will execute until all threads are done.

sched.run();](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-25-320.jpg)
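The spawn-then-wait shape of the slides above can be approximated with the standard library. This is only a sketch under stated assumptions: it uses `std::thread`, which is a preemptive OS thread rather than a Reactor coroutine, so the shared output needs a lock that cooperative coroutines would not require. The helper name `hello_world` is hypothetical.

```cpp
#include <cassert>
#include <mutex>
#include <string>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical helper mirroring the slide: spawn "t", do our own work,
// then wait for "t" before returning.
std::vector<std::string> hello_world()
{
  std::vector<std::string> printed;
  std::mutex mutex; // OS threads are preemptive, so guard the shared vector.
  auto print = [&] (std::string s)
  {
    std::lock_guard<std::mutex> lock(mutex);
    printed.push_back(std::move(s));
  };
  std::thread t([&] { print("world"); });
  print("hello");
  t.join(); // Plays the role of reactor::wait(t).
  assert(printed.size() == 2);
  return printed;
}
```

With OS threads the relative order of "hello" and "world" is not guaranteed; only the join guarantees that both have been recorded.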

![The detached thread

The detached thread is a global background operation whose lifetime is tied to

the program only.

E.g. uploading crash reports on startup.

new reactor::Thread(

[]

{

for (auto p: list_directory(reports_dir))

reactor::http::put("infinit.sh/reports",

ifstream(p));

});](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-28-320.jpg)

![The detached thread

The detached thread is a global background operation whose lifetime is tied to

the program only.

E.g. uploading crash reports on startup.

new reactor::Thread(

[]

{

for (auto p: list_directory(reports_dir))

reactor::http::put("infinit.sh/reports",

ifstream(p));

},

reactor::Thread::auto_dispose = true);](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-29-320.jpg)

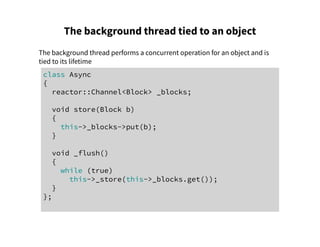

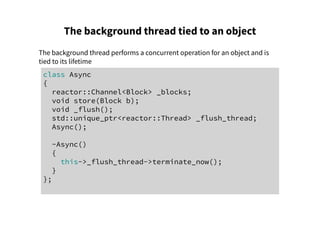

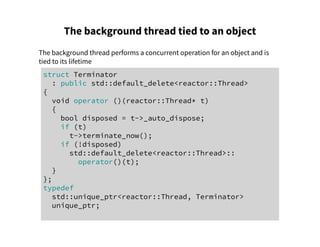

![The background thread tied to an object

The background thread performs a concurrent operation for an object and is

tied to its lifetime.

class Async

{

reactor::Channel<Block> _blocks;

void store(Block b);

void _flush();

std::unique_ptr<reactor::Thread> _flush_thread;

Async()

: _flush_thread(new reactor::Thread(

[this] { this->_flush(); }))

{}

};](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-32-320.jpg)
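To make the lifetime coupling concrete, here is a rough standard-library analogue of the `Async` class above. It is a sketch, not Reactor's implementation: `Block` is reduced to an `int`, the `sink` vector is a hypothetical stand-in for real storage, and a mutex plus condition variable replace `reactor::Channel`.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>
#include <vector>

using Block = int; // Placeholder payload; the real Block type is not shown.

class Async
{
public:
  // `sink` stands in for the real storage backend (an assumption).
  explicit Async(std::vector<Block>& sink)
    : _sink(sink)
    , _flush_thread([this] { this->_flush(); })
  {}

  // Ask the worker to stop and join it: it never outlives the object.
  ~Async()
  {
    {
      std::lock_guard<std::mutex> lock(_mutex);
      _done = true;
    }
    _ready.notify_one();
    _flush_thread.join();
    assert(_blocks.empty()); // The worker drains the queue before exiting.
  }

  // Queue a block; the background thread flushes it later.
  void store(Block b)
  {
    {
      std::lock_guard<std::mutex> lock(_mutex);
      _blocks.push(b);
    }
    _ready.notify_one();
  }

private:
  void _flush()
  {
    std::unique_lock<std::mutex> lock(_mutex);
    while (true)
    {
      _ready.wait(lock, [this] { return _done || !_blocks.empty(); });
      while (!_blocks.empty())
      {
        _sink.push_back(_blocks.front());
        _blocks.pop();
      }
      if (_done)
        return;
    }
  }

  std::vector<Block>& _sink;
  std::mutex _mutex;
  std::condition_variable _ready;
  std::queue<Block> _blocks;
  bool _done = false;
  std::thread _flush_thread; // Declared last: starts after the other members exist.
};
```

Declaring the thread as the last member matters here, since members are constructed in declaration order and the worker touches the mutex and queue immediately.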

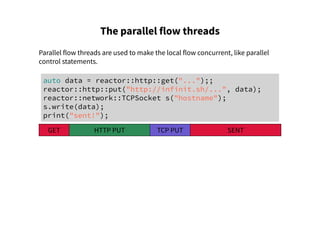

![The parallel flow threads

auto data = reactor::http::get("...");

reactor::Thread http_put(

[&]

{

reactor::http::put("http://infinit.sh/...", data);

});

reactor::Thread tcp_put(

[&]

{

reactor::network::TCPSocket s("hostname");

s.write(data);

});

reactor::wait({http_put, tcp_put});

print("sent!");

Diagram labels: GET, HTTP PUT, TCP PUT, SENT.](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-37-320.jpg)

![The parallel flow threads

auto data = reactor::http::get("...");

std::exception_ptr exn;

reactor::Thread http_put([&] {

try { reactor::http::put("http://...", data); }

catch (...) { exn = std::current_exception(); }

});

reactor::Thread tcp_put([&] {

try {

reactor::network::TCPSocket s("hostname");

s.write(data);

}

catch (...) { exn = std::current_exception(); }

});

reactor::wait({http_put, tcp_put});

if (exn)

std::rethrow_exception(exn);

print("sent!");](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-38-320.jpg)

![The parallel flow threads

auto data = reactor::http::get("...");

std::exception_ptr exn;

reactor::Thread http_put([&] {

try {

reactor::http::put("http://...", data);

} catch (reactor::Terminate const&) { throw; }

catch (...) { exn = std::current_exception(); }

});

reactor::Thread tcp_put([&] {

try {

reactor::network::TCPSocket s("hostname");

s.write(data);

} catch (reactor::Terminate const&) { throw; }

catch (...) { exn = std::current_exception(); }

});

reactor::wait({http_put, tcp_put});

if (exn) std::rethrow_exception(exn);

print("sent!");](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-39-320.jpg)

![The parallel flow threads

auto data = reactor::http::get("...");

reactor::Scope scope;

scope.run([&] {

reactor::http::put("http://infinit.sh/...", data);

});

scope.run([&] {

reactor::network::TCPSocket s("hostname");

s.write(data);

});

reactor::wait(scope);

print("sent!");

Diagram labels: GET, HTTP PUT, TCP PUT, SENT.](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-40-320.jpg)
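The manual `std::exception_ptr` bookkeeping from the earlier slides is exactly what a scope object can hide. The following is a minimal sketch of that idea on top of `std::thread`; the `Scope` class here is a hypothetical stand-in, not `reactor::Scope`, and it only records and rethrows the first error without cancelling sibling tasks.

```cpp
#include <cassert>
#include <exception>
#include <functional>
#include <mutex>
#include <stdexcept>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical stand-in for a scope of parallel tasks, built on OS threads.
class Scope
{
public:
  // Start a task; remember the first exception any task throws.
  void run(std::function<void ()> task)
  {
    _threads.emplace_back([this, task = std::move(task)] {
      try
      {
        task();
      }
      catch (...)
      {
        std::lock_guard<std::mutex> lock(_mutex);
        if (!_exn)
          _exn = std::current_exception();
      }
    });
  }

  // Join every task, then rethrow the first recorded exception, if any.
  void wait()
  {
    for (auto& t: _threads)
      t.join();
    _threads.clear();
    if (_exn)
    {
      auto exn = _exn;
      _exn = nullptr;
      std::rethrow_exception(exn);
    }
  }

  ~Scope()
  {
    for (auto& t: _threads)
      if (t.joinable())
        t.join();
  }

private:
  std::mutex _mutex;
  std::exception_ptr _exn;
  std::vector<std::thread> _threads;
};

// Usage sketch: one task succeeds, one fails; true if wait() rethrew.
bool failure_is_propagated()
{
  Scope scope;
  int sent = 0;
  scope.run([&] { sent = 1; });
  scope.run([] { throw std::runtime_error("put failed"); });
  try
  {
    scope.wait();
  }
  catch (std::runtime_error const&)
  {
    return sent == 1;
  }
  return false;
}
```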

![Futures

auto data = reactor::http::get("...");

reactor::Scope scope;

scope.run([&] {

reactor::http::Request r("http://infinit.sh/...");

r.write(data);

});

scope.run([&] {

reactor::network::TCPSocket s("hostname");

s.write(data);

});

reactor::wait(scope);

print("sent!");](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-41-320.jpg)

![Futures

elle::Buffer data;

reactor::Thread fetcher(

[&] { data = reactor::http::get("..."); });

reactor::Scope scope;

scope.run([&] {

reactor::http::Request r("http://infinit.sh/...");

reactor::wait(fetcher);

r.write(data);

});

scope.run([&] {

reactor::network::TCPSocket s("hostname");

reactor::wait(fetcher);

s.write(data);

});

reactor::wait(scope);

print("sent!");](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-42-320.jpg)

![Futures

reactor::Future<elle::Buffer> data(

[] { return reactor::http::get("..."); });

reactor::Scope scope;

scope.run([&] {

reactor::http::Request r("http://infinit.sh/...");

r.write(data.get());

});

scope.run([&] {

reactor::network::TCPSocket s("hostname");

s.write(data.get());

});

reactor::wait(scope);

print("sent!");](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-43-320.jpg)
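The same "start the fetch early, block only when the value is needed" pattern exists in the standard library as `std::shared_future`. The sketch below assumes a hypothetical `fetch` function in place of `reactor::http::get`, and returns strings instead of writing to sockets so that it stays self-contained.

```cpp
#include <cassert>
#include <future>
#include <string>
#include <utility>

// Hypothetical stand-in for reactor::http::get.
std::string fetch()
{
  return "payload";
}

// Start the fetch once, then let two consumers block on the same result.
std::pair<std::string, std::string> fetch_and_fan_out()
{
  std::shared_future<std::string> data =
    std::async(std::launch::async, fetch).share();
  // Each consumer can do its own setup first; get() blocks only if the
  // value is not ready yet.
  auto http_put = std::async(std::launch::async,
                             [data] { return "http:" + data.get(); });
  auto tcp_put = std::async(std::launch::async,
                            [data] { return "tcp:" + data.get(); });
  return {http_put.get(), tcp_put.get()};
}
```

A plain `std::future` can be read only once, which is why the value is shared via `share()` before being handed to both consumers.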

![Concurrent iteration

The final touch.

std::vector<Host> hosts;

reactor::Scope scope;

for (auto const& host: hosts)

scope.run([&, host] { host->send(block); });

reactor::wait(scope);](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-45-320.jpg)

![Concurrent iteration

The final touch.

std::vector<Host> hosts;

reactor::Scope scope;

for (auto const& host: hosts)

scope.run([&, host] { host->send(block); });

reactor::wait(scope);

Concurrent iteration:

std::vector<Host> hosts;

reactor::concurrent_for(

hosts, [] (Host h) { h->send(block); });](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-46-320.jpg)

![Concurrent iteration

The final touch.

std::vector<Host> hosts;

reactor::Scope scope;

for (auto const& host: hosts)

scope.run([&, host] { host->send(block); });

reactor::wait(scope);

Concurrent iteration:

std::vector<Host> hosts;

reactor::concurrent_for(

hosts, [] (Host h) { h->send(block); });

• Also available: reactor::concurrent_break().

• As for a concurrent continue, that's just return.](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-47-320.jpg)
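For readers without Reactor at hand, the shape of a concurrent loop can be sketched with `std::async`. This `concurrent_for` is a hypothetical helper written for illustration, not Reactor's function: it runs one task per element on OS threads and has no equivalent of `reactor::concurrent_break()`.

```cpp
#include <atomic>
#include <cassert>
#include <future>
#include <vector>

// Hypothetical helper: apply f to every element concurrently, wait for all.
// f is invoked from several threads at once, so it must be safe to call
// concurrently.
template <typename T, typename F>
void concurrent_for(std::vector<T> const& items, F f)
{
  std::vector<std::future<void>> tasks;
  tasks.reserve(items.size());
  for (auto const& item: items)
    tasks.push_back(
      std::async(std::launch::async, [&f, &item] { f(item); }));
  for (auto& task: tasks)
    task.get(); // Rethrows an exception thrown by the body, if any.
}

// Usage sketch: sum the elements with one task per element.
int sum_all(std::vector<int> const& values)
{
  std::atomic<int> sum{0};
  concurrent_for(values, [&sum] (int v) { sum += v; });
  return sum;
}
```

As on the slide, returning early from the body acts as a "continue" for that element only.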

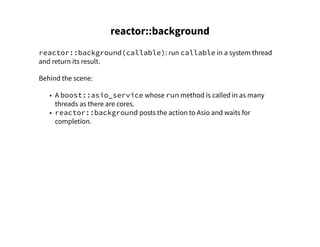

![reactor::background

reactor::background(callable): run callable in a system thread

and return its result.

Behind the scenes:

• A boost::asio::io_service whose run method is called in as many

threads as there are cores.

• reactor::background posts the action to Asio and waits for

completion.

void Block::seal(elle::Buffer data)

{

this->_data = reactor::background(

[data=std::move(data)] { return encrypt(data); });

}](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-53-320.jpg)

![reactor::background

No fiasco rules of thumb: be pure.

• No side effects.

• Take all bindings by value.

• Return any result by value.

std::vector<int> ints;

int i = 0;

// Do not:

reactor::background([&] {ints.push_back(i+1);});

reactor::background([&] {ints.push_back(i+2);});

// Do:

ints.push_back(reactor::background([i] {return i+1;}));

ints.push_back(reactor::background([i] {return i+2;}));](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-55-320.jpg)
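The "be pure" rule carries over unchanged to plain `std::async`, which makes for an easy way to try it out. In this sketch (the function name `pure_background_calls` is hypothetical) each task captures `i` by value and returns its result by value; only the calling thread ever touches the vector.

```cpp
#include <cassert>
#include <future>
#include <vector>

// Each task is pure: inputs captured by value, result returned by value.
std::vector<int> pure_background_calls()
{
  std::vector<int> ints;
  int i = 0;
  auto first = std::async(std::launch::async, [i] { return i + 1; });
  auto second = std::async(std::launch::async, [i] { return i + 2; });
  // The vector is only modified here, on the calling thread.
  ints.push_back(first.get());
  ints.push_back(second.get());
  return ints;
}
```

One difference from the slide: here both tasks are started before either result is collected, whereas each `reactor::background` call waits for its own completion.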

![Background pools

Infinit generates a lot of symmetric keys.

Let other syscalls go through during generation with reactor::background:

Block::Block()

: _block_keys(reactor::background(

[] { return generate_keys(); }))

{}](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-56-320.jpg)

![Background pools

Infinit generates a lot of symmetric keys.

Let other syscalls go through during generation with reactor::background:

Block::Block()

: _block_keys(reactor::background(

[] { return generate_keys(); }))

{}

Problem: because applications are written for the usual on-disk filesystems, operations are often sequential.

$ time for i in $(seq 128); do touch $i; done

The whole operation will still be delayed by 128 * generation_time.](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-57-320.jpg)

![Background pools

Solution: pregenerate keys in a background pool.

reactor::Channel<KeyPair> keys;

keys.max_size(64);

reactor::Thread keys_generator([&] {

reactor::Scope scope;

for (int i = 0; i < NCORES; ++i)

scope.run([&] {

while (true)

keys.put(reactor::background(

[] { return generate_keys(); }));

});

reactor::wait(scope);

});

Block::Block()

: _block_keys(keys.get())

{}](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-58-320.jpg)
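The pregeneration idea can be sketched without Reactor as a bounded queue fed by worker threads. Everything below is an assumption made for illustration: `Channel` is a hypothetical minimal bounded channel, `KeyPair` is reduced to an `int`, `generate_keys` just hands out increasing numbers, and `KeyPool` is an invented wrapper name.

```cpp
#include <atomic>
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical bounded channel: put() blocks when full, get() when empty.
template <typename T>
class Channel
{
public:
  explicit Channel(std::size_t max_size) : _max(max_size) {}

  // Returns false if the channel was closed before the value was queued.
  bool put(T v)
  {
    std::unique_lock<std::mutex> lock(_mutex);
    _not_full.wait(lock, [this] { return _closed || _queue.size() < _max; });
    if (_closed)
      return false;
    _queue.push(std::move(v));
    _not_empty.notify_one();
    return true;
  }

  T get()
  {
    std::unique_lock<std::mutex> lock(_mutex);
    _not_empty.wait(lock, [this] { return !_queue.empty(); });
    T v = std::move(_queue.front());
    _queue.pop();
    _not_full.notify_one();
    return v;
  }

  // Wake up and release every blocked producer.
  void close()
  {
    {
      std::lock_guard<std::mutex> lock(_mutex);
      _closed = true;
    }
    _not_full.notify_all();
  }

private:
  std::size_t _max;
  std::mutex _mutex;
  std::condition_variable _not_full;
  std::condition_variable _not_empty;
  std::queue<T> _queue;
  bool _closed = false;
};

using KeyPair = int; // Placeholder for a real key pair.

// Placeholder for the expensive generation: returns a fresh number.
KeyPair generate_keys()
{
  static std::atomic<int> next{0};
  return next++;
}

// Workers keep the channel topped up; get() usually returns immediately.
class KeyPool
{
public:
  KeyPool(std::size_t workers, std::size_t capacity)
    : _keys(capacity)
  {
    for (std::size_t i = 0; i < workers; ++i)
      _workers.emplace_back([this] {
        while (_keys.put(generate_keys()))
        {} // Loop until the channel is closed.
      });
  }

  ~KeyPool()
  {
    _keys.close();
    for (auto& w: _workers)
      w.join();
  }

  KeyPair get() { return _keys.get(); }

private:
  Channel<KeyPair> _keys;
  std::vector<std::thread> _workers;
};
```

The bounded capacity is what keeps the workers from generating keys without limit: once the channel is full they block in `put()` until a consumer takes one.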

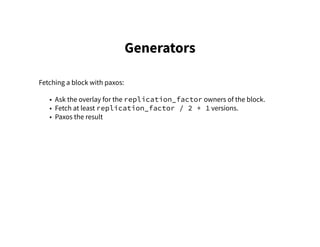

![Generators

Fetching a block with paxos:

DHT::fetch(Address addr)

{

auto hosts = this->_overlay->lookup(addr, 3);

std::vector<Block> blocks;

reactor::concurrent_for(

hosts,

[&] (Host host)

{

blocks.push_back(host->fetch(addr));

if (blocks.size() >= 2)

reactor::concurrent_break();

});

return some_paxos_magic(blocks);

}](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-60-320.jpg)

![Generators

reactor::Generator<Host>

Kelips::lookup(Address addr, factor f)

{

return reactor::Generator<Host>(

[=] (reactor::Generator<Host>::Yield const& yield) mutable

{

some_udp_socket.write(...);

while (f--)

yield(kelips_stuff(some_udp_socket.read()));

});

}](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-61-320.jpg)

![Generators

Behind the scenes:

template <typename T>

class Generator

{

reactor::Channel<T> _values;

Generator(Callable call)

: _thread([this, call] {

call([this] (T e) {this->_values.put(e);});

})

{}

// Iterator interface calling _values.get()

};

• Similar to Python generators

• Except generation starts right away and is not tied to iteration](https://image.slidesharecdn.com/parallelism-advanced-160401143829/85/Infinit-filesystem-Reactor-reloaded-62-320.jpg)
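The "channel plus thread" construction described above can be tried out with the standard library alone. The following is a sketch under stated assumptions, not Reactor's `Generator`: it uses an OS thread and an unbounded queue, exposes a hypothetical `next()` method instead of an iterator interface, and does not handle exceptions thrown by the body.

```cpp
#include <cassert>
#include <condition_variable>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

template <typename T>
class Generator
{
public:
  using Yield = std::function<void (T)>;

  // Generation starts right away, whether or not anyone iterates.
  explicit Generator(std::function<void (Yield const&)> body)
    : _thread([this, body = std::move(body)] {
        body([this] (T v) { this->_push(std::move(v)); });
        this->_finish();
      })
  {}

  ~Generator() { _thread.join(); }

  // Fetch the next value; false once the body returned and the queue is empty.
  bool next(T& out)
  {
    std::unique_lock<std::mutex> lock(_mutex);
    _ready.wait(lock, [this] { return _done || !_values.empty(); });
    if (_values.empty())
      return false;
    out = std::move(_values.front());
    _values.pop();
    return true;
  }

private:
  void _push(T v)
  {
    {
      std::lock_guard<std::mutex> lock(_mutex);
      _values.push(std::move(v));
    }
    _ready.notify_one();
  }

  void _finish()
  {
    {
      std::lock_guard<std::mutex> lock(_mutex);
      _done = true;
    }
    _ready.notify_one();
  }

  std::mutex _mutex;
  std::condition_variable _ready;
  std::queue<T> _values;
  bool _done = false;
  std::thread _thread; // Declared last: starts after the other members exist.
};

// Usage sketch: consume every value the body yields.
std::vector<int> first_squares(int n)
{
  Generator<int> gen([n] (Generator<int>::Yield const& yield) {
    for (int i = 1; i <= n; ++i)
      yield(i * i);
  });
  std::vector<int> out;
  int v = 0;
  while (gen.next(v))
    out.push_back(v);
  return out;
}
```

Because the queue is unbounded, the producer never blocks, which matches the "not tied to iteration" remark: values accumulate even if the consumer is slow.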