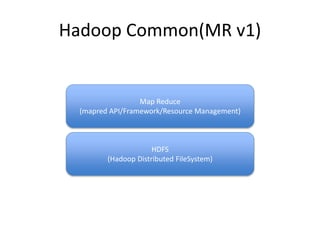

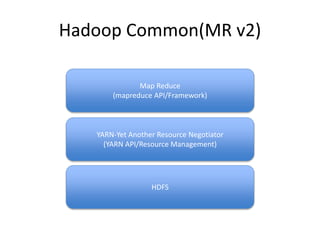

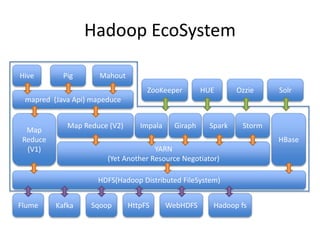

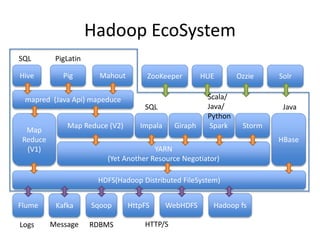

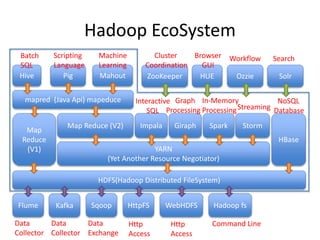

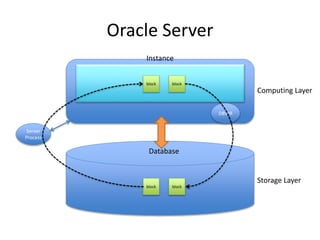

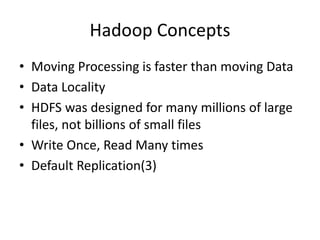

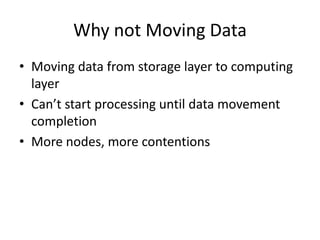

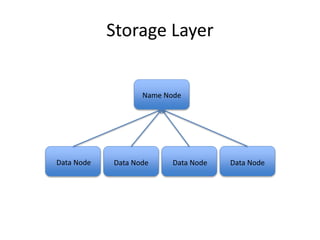

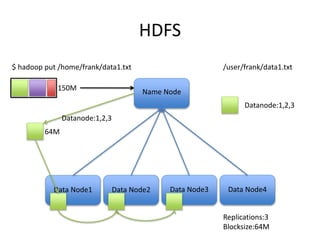

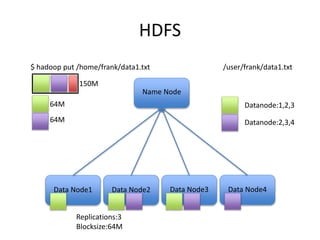

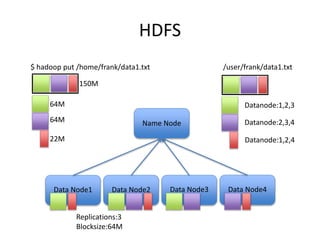

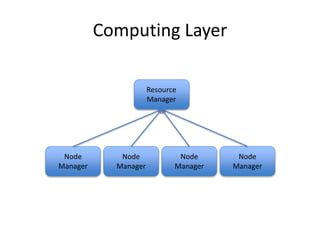

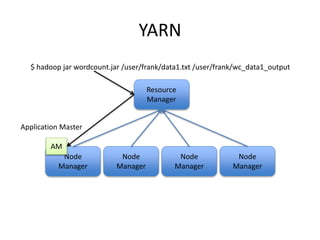

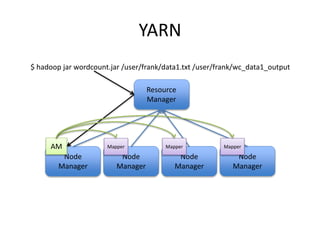

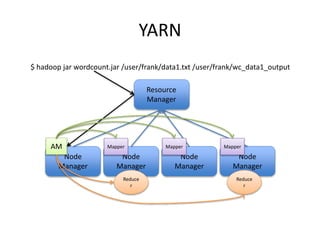

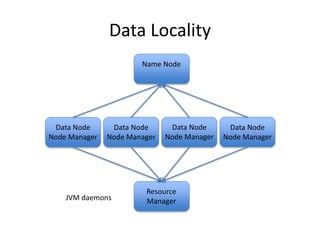

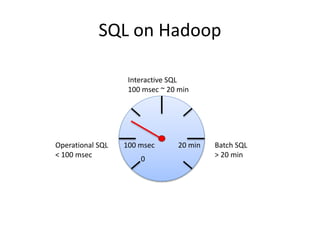

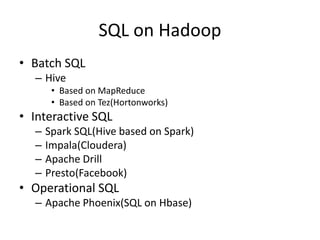

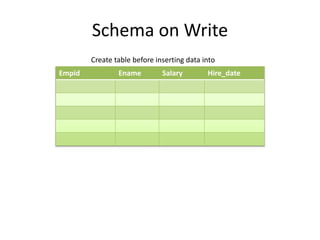

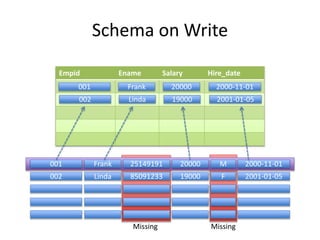

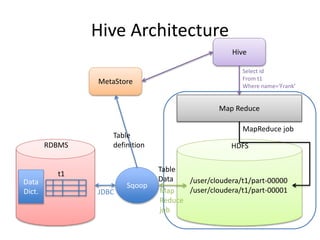

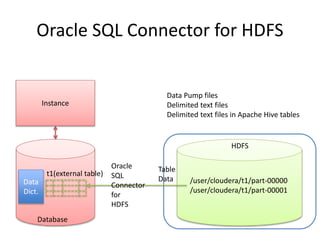

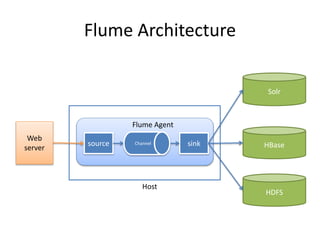

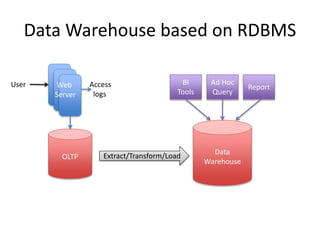

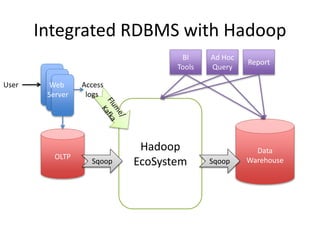

This document gives an overview of Hadoop and its ecosystem. It covers the core concepts of HDFS, MapReduce, YARN, and data locality, then summarizes SQL on Hadoop with Hive, Impala, and Spark SQL. It concludes with examples of using Sqoop and Flume to move data between relational databases and Hadoop.