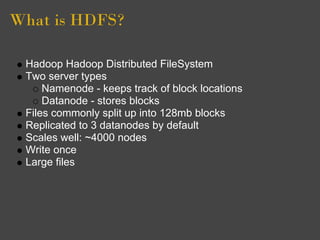

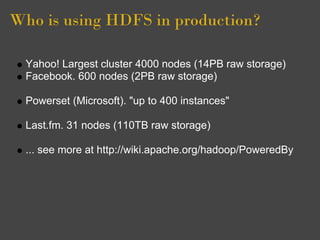

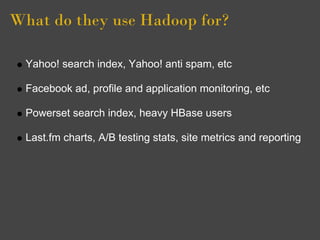

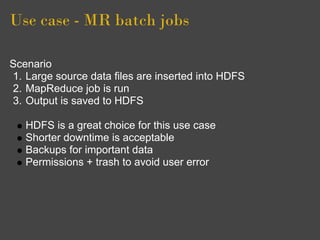

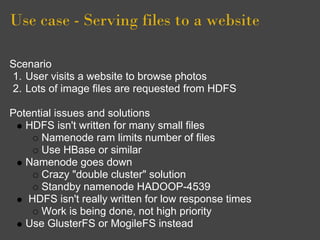

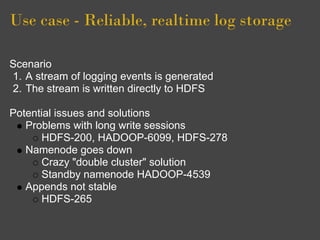

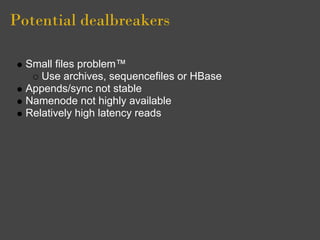

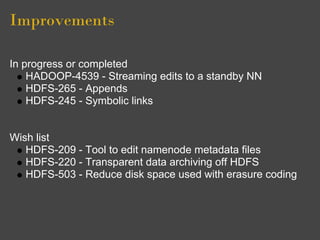

HDFS is a distributed file system used for large data sets in Hadoop. It scales well and can support thousands of nodes storing petabytes of data. Several large companies use HDFS in production including Yahoo, Facebook, and Last.fm. HDFS works well for batch jobs but may have issues for real-time logging or serving many small files to a website due to performance and high availability concerns. Improvements are being made to address issues with appends, availability, and reducing disk usage. Alternative solutions exist for low latency use cases.