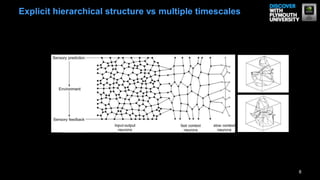

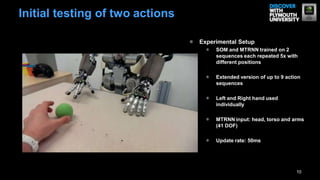

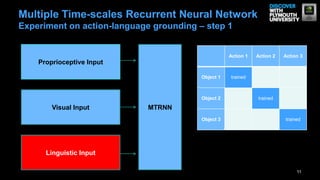

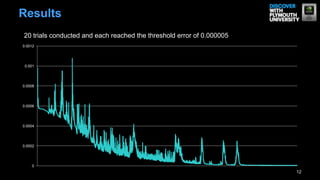

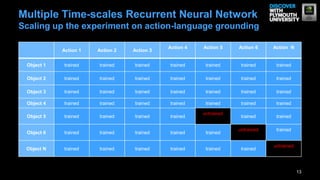

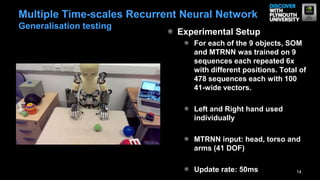

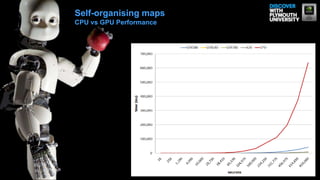

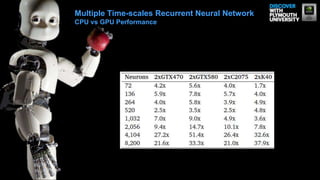

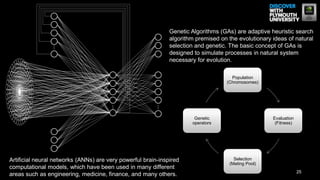

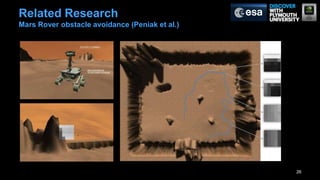

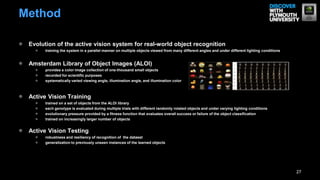

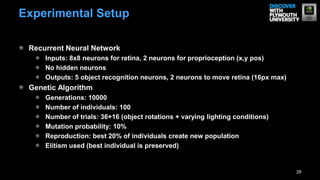

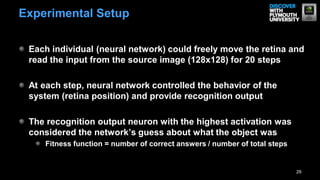

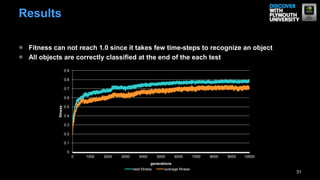

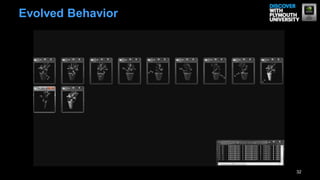

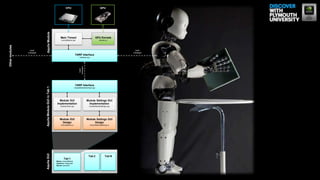

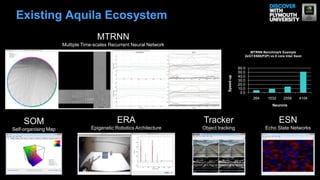

This document discusses advances in action and language acquisition in humanoid robots, focusing on biologically inspired active vision systems and their development through GPU computing. It highlights experiments with recurrent neural networks for action-language grounding, the use of evolutionary robotics, and a training method for real-world object recognition. The findings emphasize that active vision systems modeled on biological vision are more adaptable and effective than traditional computer vision techniques.