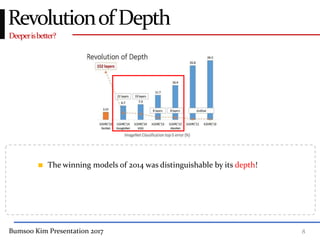

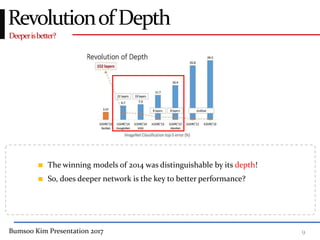

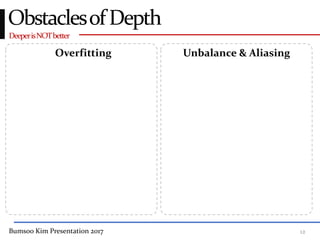

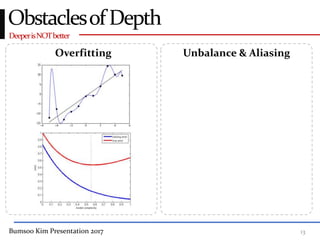

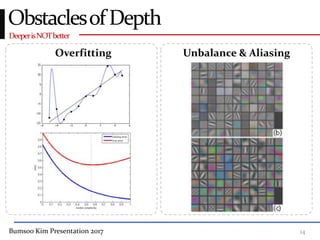

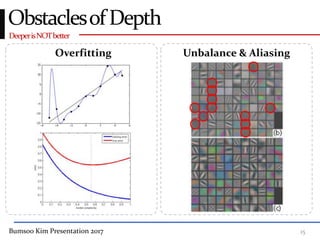

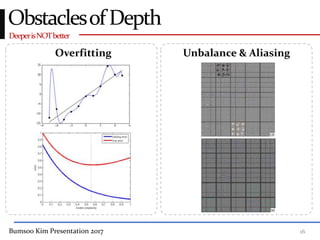

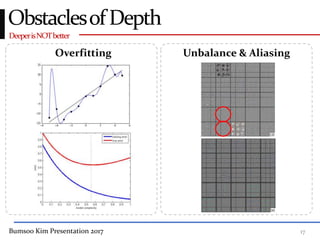

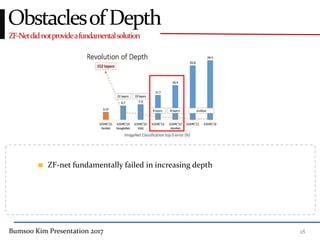

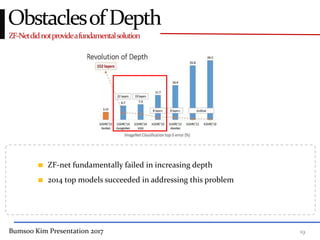

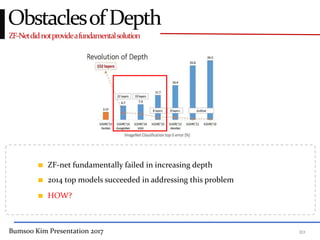

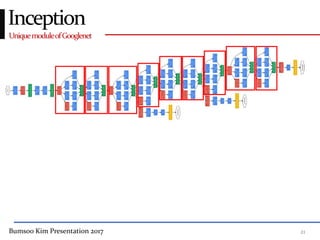

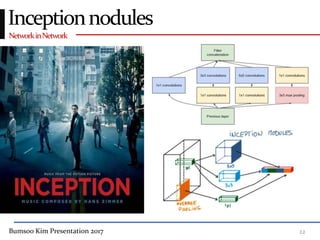

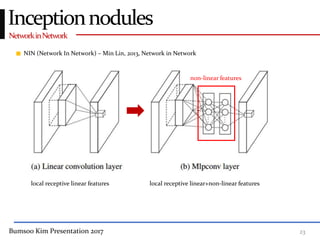

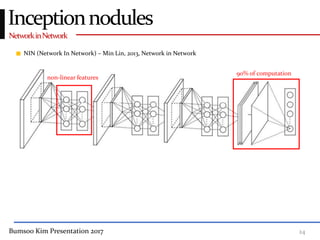

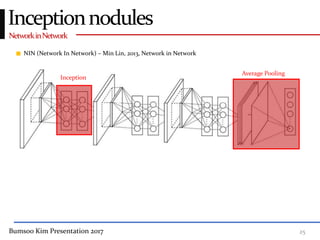

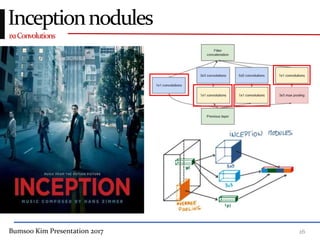

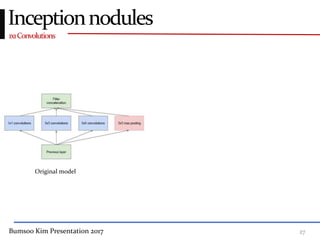

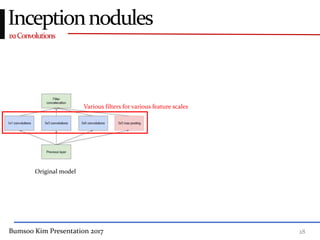

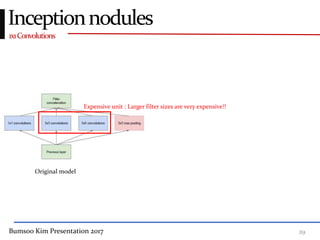

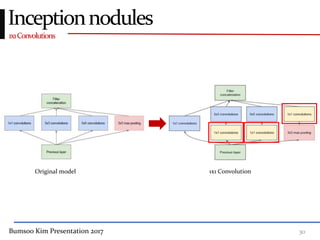

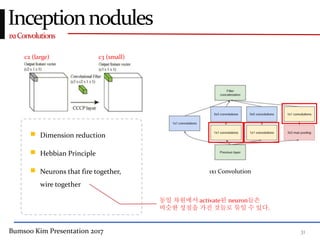

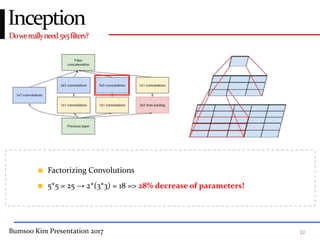

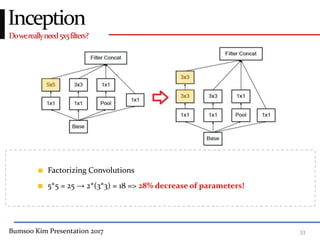

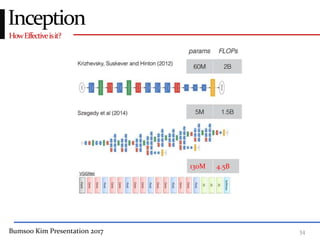

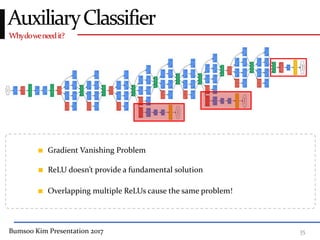

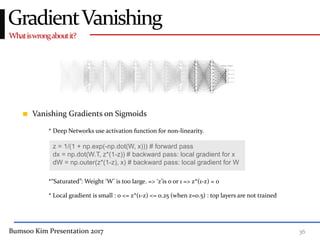

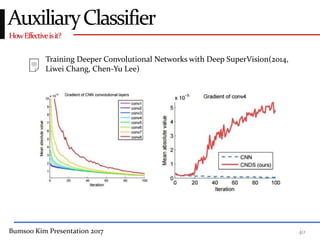

This presentation discusses GoogLeNet, the model that won the image classification task of ILSVRC 2014. GoogLeNet is an instance of the Inception architecture, which uses inception modules to make a convolutional network deeper while limiting problems such as overfitting. Inside each module, 1x1 convolutions reduce the number of channels before the more expensive 3x3 and 5x5 convolutions, which keeps the computational cost low enough for deeper networks to be trained. The network also attaches auxiliary classifiers to intermediate layers to counter the vanishing gradient problem and help train the earlier layers. The presentation details the architecture and training of GoogLeNet, which achieved a top-5 error rate of 6.7% and showed that depth gained from carefully designed modules, rather than from simply stacking more layers, can lead to better performance.
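
The effect of the 1x1 dimension reduction can be illustrated with simple weight-count arithmetic. The sketch below is not taken from the slides: the helper names are hypothetical, biases are ignored, and the channel counts (192 input channels; 64, 128 and 32 output channels for the 1x1, 3x3 and 5x5 branches; reductions to 96 and 16 channels; a 32-channel pooling projection) are assumed values chosen to resemble an early inception module.

```python
def conv_weights(kernel, c_in, c_out):
    """Number of weights in a kernel x kernel convolution, ignoring biases."""
    return kernel * kernel * c_in * c_out


def naive_module(c_in, n1, n3, n5):
    """Parallel 1x1, 3x3, 5x5 convolutions plus pooling, with no 1x1 reduction.

    The pooling branch has no weights and passes all c_in channels through.
    Returns (weight count, output channels after concatenation).
    """
    weights = (conv_weights(1, c_in, n1)
               + conv_weights(3, c_in, n3)
               + conv_weights(5, c_in, n5))
    return weights, n1 + n3 + n5 + c_in


def reduced_module(c_in, n1, r3, n3, r5, n5, pool_proj):
    """Same branches, but 1x1 convolutions shrink the channels first.

    r3 and r5 are the reduced channel counts fed to the 3x3 and 5x5
    convolutions; pool_proj is the 1x1 projection applied after pooling.
    """
    weights = (conv_weights(1, c_in, n1)
               + conv_weights(1, c_in, r3) + conv_weights(3, r3, n3)
               + conv_weights(1, c_in, r5) + conv_weights(5, r5, n5)
               + conv_weights(1, c_in, pool_proj))
    return weights, n1 + n3 + n5 + pool_proj


# Assumed, illustrative channel counts (see the lead-in above).
naive_w, naive_out = naive_module(192, 64, 128, 32)
reduced_w, reduced_out = reduced_module(192, 64, 96, 128, 16, 32, 32)

print(f"naive:   {naive_w} weights, {naive_out} output channels")
print(f"reduced: {reduced_w} weights, {reduced_out} output channels")
```

Under these assumptions the reduced module needs less than half the weights of the naive one, and its output is narrower, so the saving compounds as modules are stacked.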