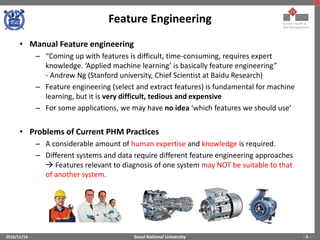

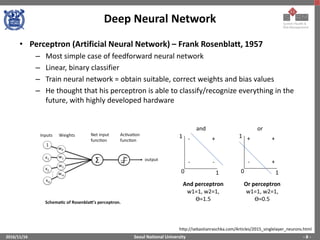

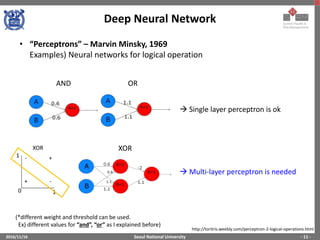

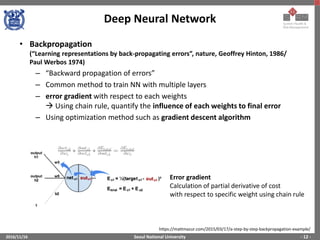

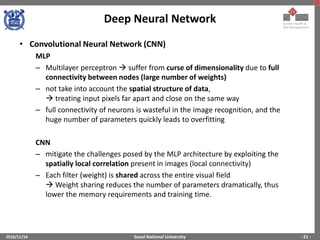

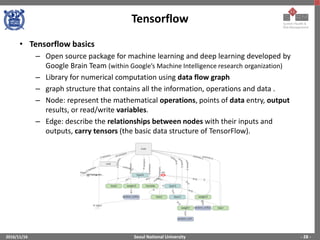

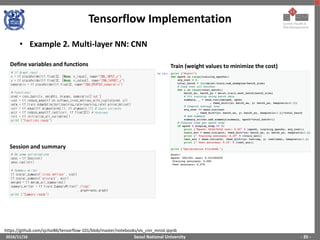

This document gives an overview of feature engineering and deep learning. It focuses on the challenges of implementing deep neural networks with TensorFlow and the methods used to address them. It covers why extracting features by hand is difficult and how deep learning learns features automatically instead. It also outlines key building blocks: convolutional neural networks, activation functions, and the training process. Finally, it introduces TensorFlow as a framework for implementing machine learning and deep learning models and illustrates its main functionality and applications.