This document discusses generative adversarial nets (GANs). GANs use an adversarial modeling framework where a generator and discriminator are trained against each other. The generator learns to generate fake samples from noise to match the real data distribution, while the discriminator learns to distinguish real from fake samples. They are trained together through a minimax game, with the generator trying to maximize the discriminator's errors. The document proves that the global minimum of the GAN's training criterion is achieved when the generator's distribution pg matches the real data distribution pdata, with the criterion value reaching -log4.

![.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

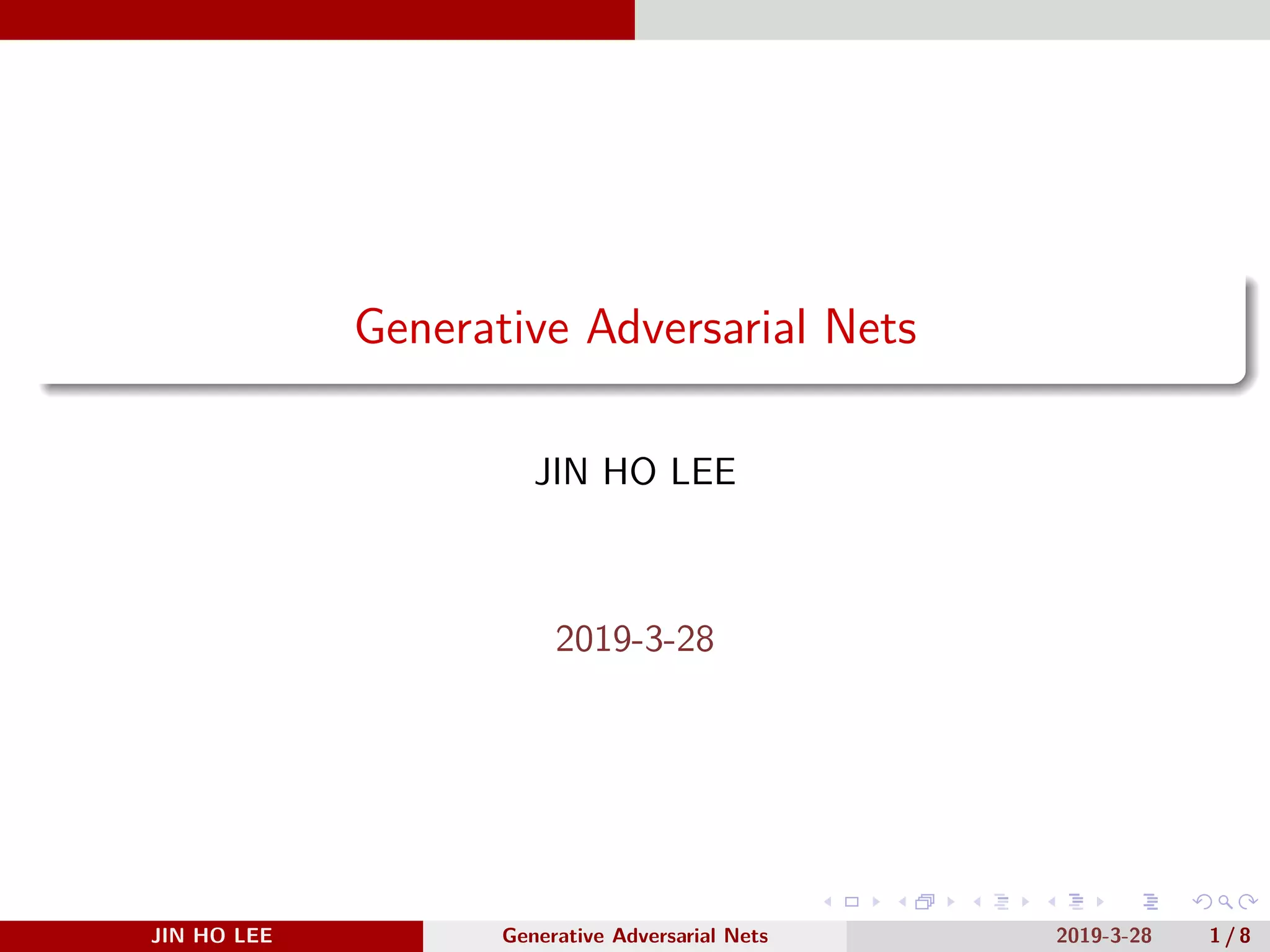

3. Adversarial nets

3. Adversarial nets

• Adversarial modeling framework 는 generator 와 discriminator 의

distribution 을 학습하게 된다.

• Generator’s distribution : input noise variables pz(z) 를 정의하고

거기에서 parameter θg 를 학습하여 G(z; θg) 를 업데이트 하는 방법으로

학습된다.

• Discriminator’s distribution : discriminator D(x; θd) 는 single scalar 의

값을 갖는 함수로 x 가 generator 의 distribution pz 에서 나오지 않았을 확률

(즉, input data 에서 나왔을 확률)을 의미한다.

• 이것을 한 번에 표시하면 아래의 min-max problem 으로 표시된 loss

function 을 통해 학습이 된다.

min

G

max

D

V(D, G) = Ex∼pdata

[log D(x)] + Ez∼pz(z)[log(1 − D(G(z)))].

JIN HO LEE Generative Adversarial Nets 2019-3-28 2 / 8](https://image.slidesharecdn.com/190328gan-190328124518/75/Generative-Adversarial-Nets-2-2048.jpg)

![.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

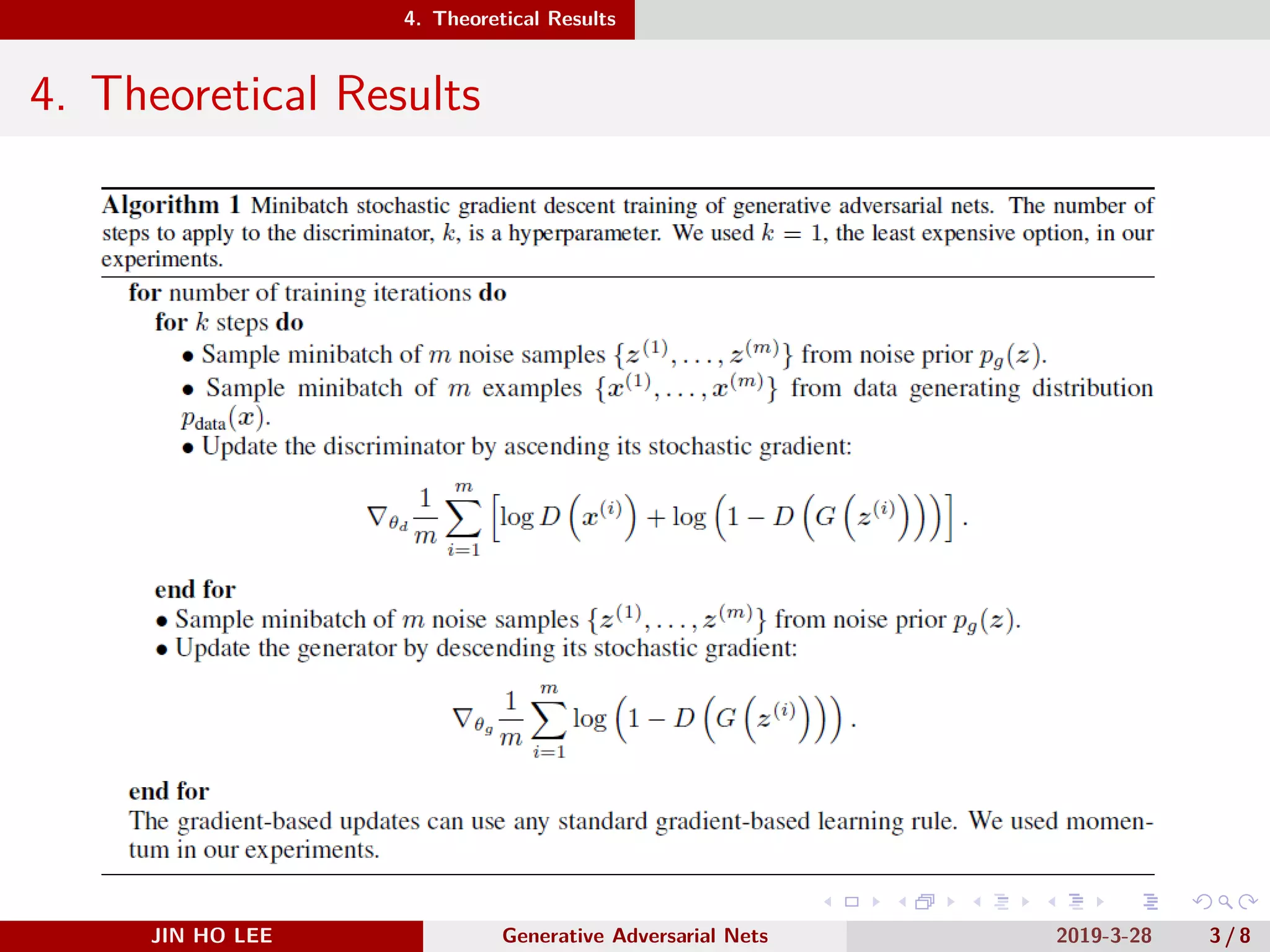

4. Theoretical Results 4.1 Global Optimality of pg = pdata

For the optimal discriminator D∗

G(x) = pdata(x)

pdata(x)+pg(x), we have

C(G) = max

D

V(C, D)

= Ex∼pdata

[log D∗

G(x)] + Ez∼pz [log(1 − D∗

G(G(z)))]

= Ex∼pdata

[log D∗

G(x)] + Ez∼pg [log(1 − D∗

G(x))]

= Ex∼pdata

[

log

pdata(x)

pdata(x) + pg(x)

]

+ Ex∼pg

[

log

(

1 −

pdata(x)

pdata(x) + pg(x)

)]

= Ex∼pdata

[

log

pdata(x)

pdata(x) + pg(x)

]

+ Ex∼pg

[

log

pg(x)

pdata(x) + pg(x)

]

JIN HO LEE Generative Adversarial Nets 2019-3-28 6 / 8](https://image.slidesharecdn.com/190328gan-190328124518/75/Generative-Adversarial-Nets-6-2048.jpg)

![.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

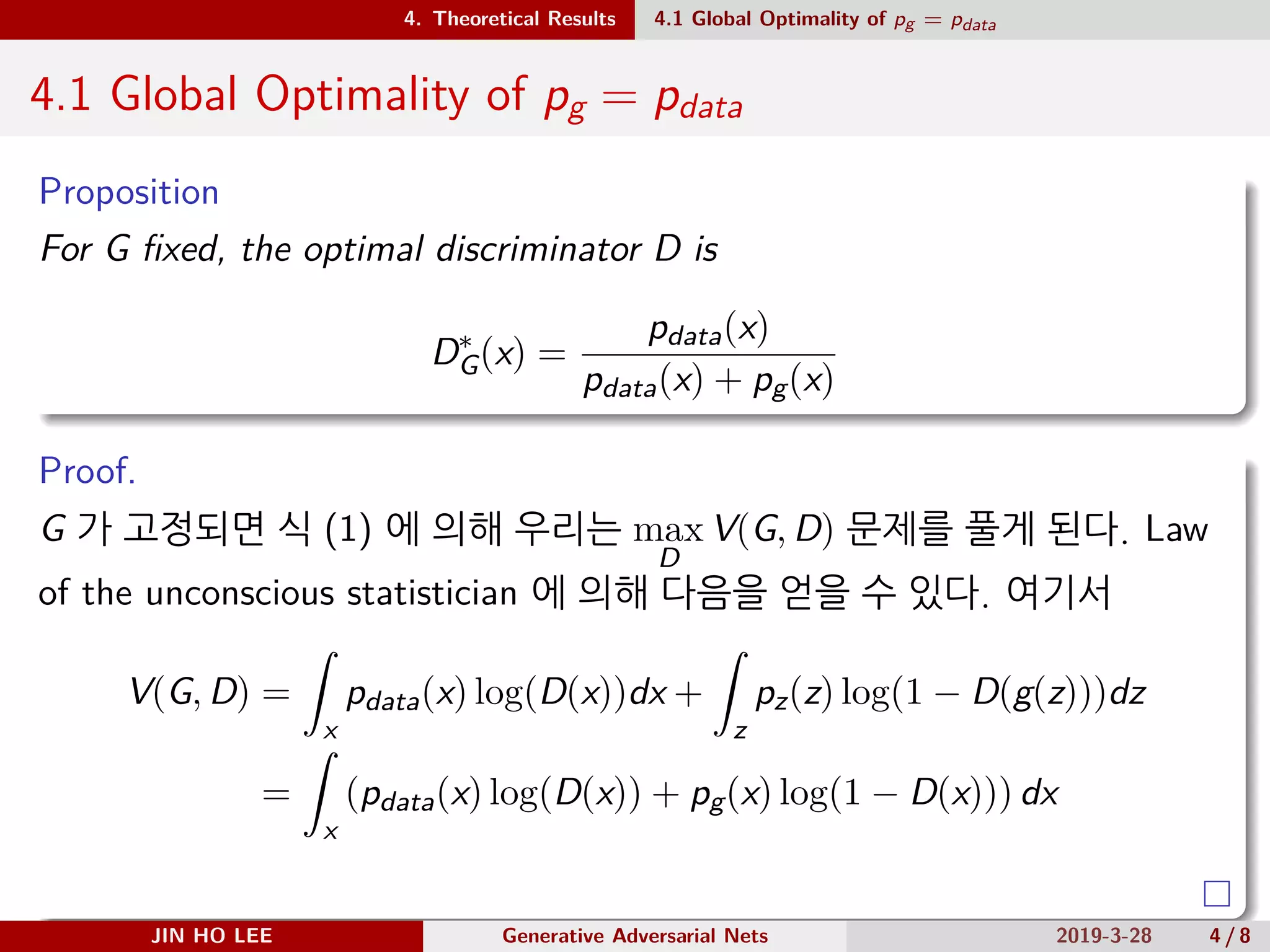

4. Theoretical Results 4.1 Global Optimality of pg = pdata

Theorem

The global minimum of the vortual training criterion C(G) is achieved iff

pg = pdata. At that point, C(G) achieves the value − log 4.

Proof.

If pg = pdata, then

D∗

G =

pdata(x)

pdata(x) + pg(x)

=

pg

pg + pg

=

1

2

.

C(G) = Ex∼pdata

[

log

pdata

pdata + pg

]

+ Ex∼pg

[

log

1

2

]

= log

1

2

+ log

1

2

= − log 4

JIN HO LEE Generative Adversarial Nets 2019-3-28 7 / 8](https://image.slidesharecdn.com/190328gan-190328124518/75/Generative-Adversarial-Nets-7-2048.jpg)

![.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

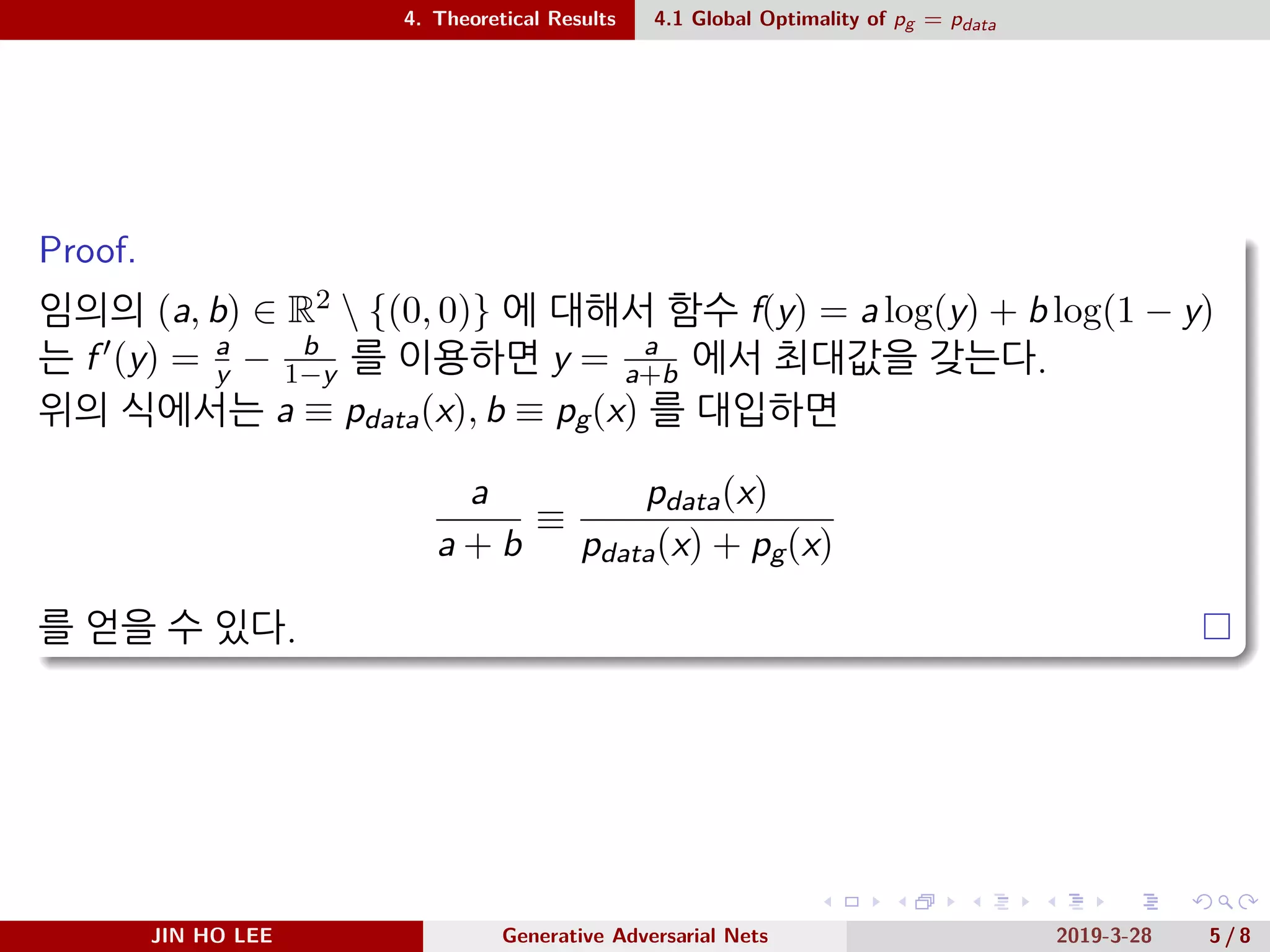

4. Theoretical Results 4.1 Global Optimality of pg = pdata

C(G) = Ex∼pdata

[

log

pdata

pdata + pg

]

+ Ex∼pg

[

pg

pdata + pg

]

= KL(pdata||pdata + pg) + KL(pg||pdata + pg)

= −2 log 2 + KL

(

pdata||

pdata + pg

2

)

+ KL

(

pg||

pdata + pg

2

)

= − log 4 + 2JSD(pdata||pg)

≥ − log 4

JIN HO LEE Generative Adversarial Nets 2019-3-28 8 / 8](https://image.slidesharecdn.com/190328gan-190328124518/75/Generative-Adversarial-Nets-8-2048.jpg)