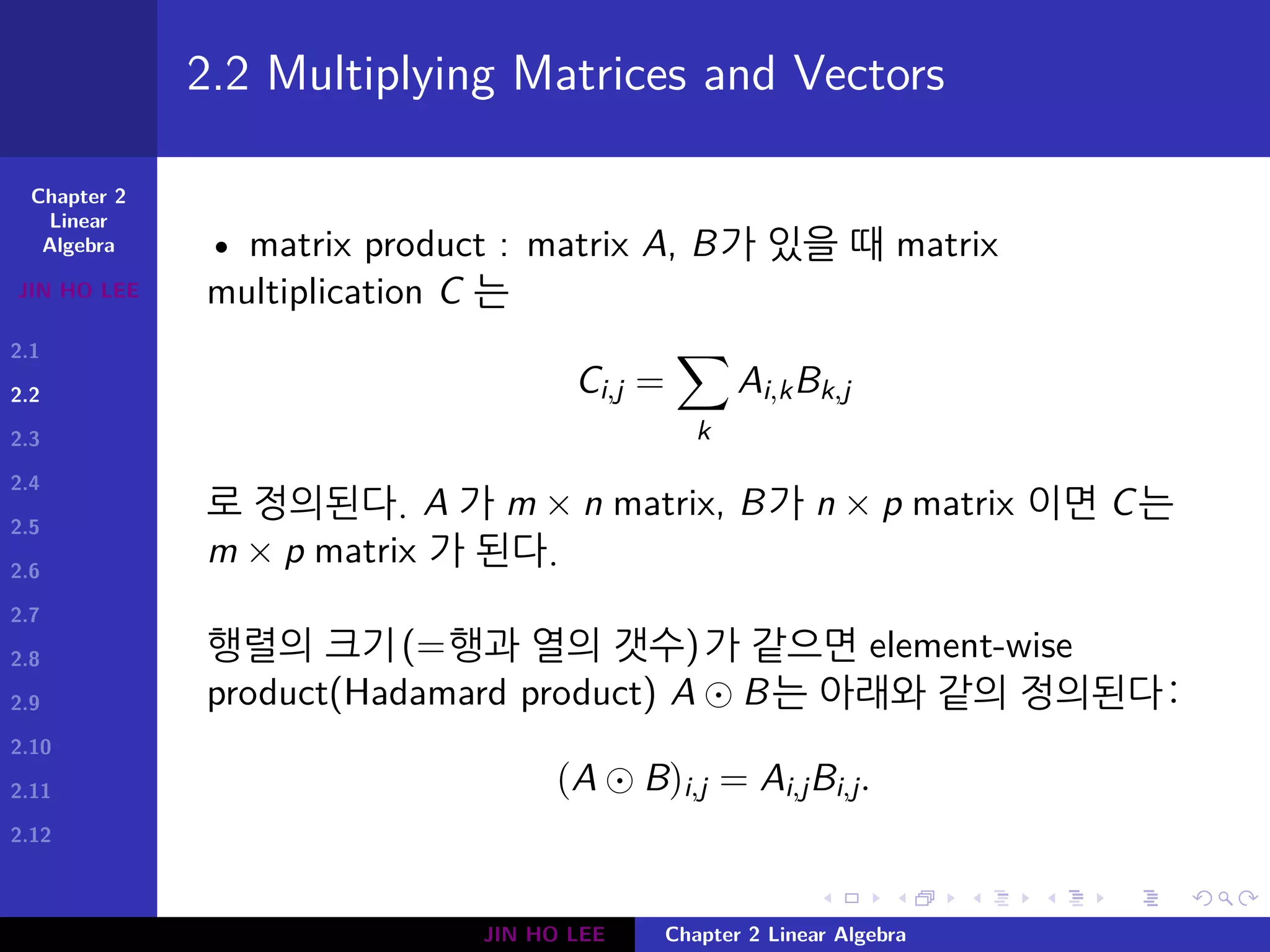

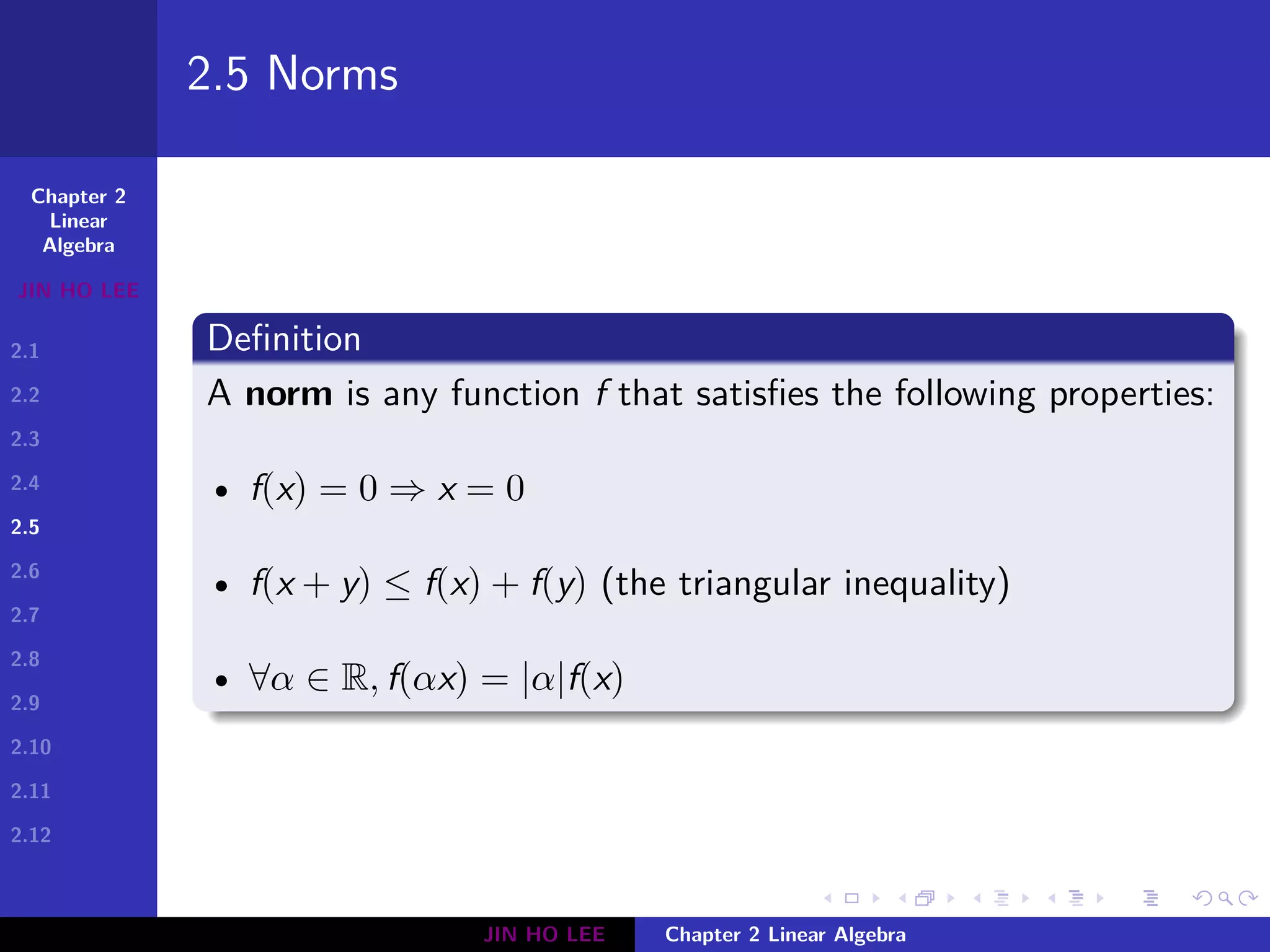

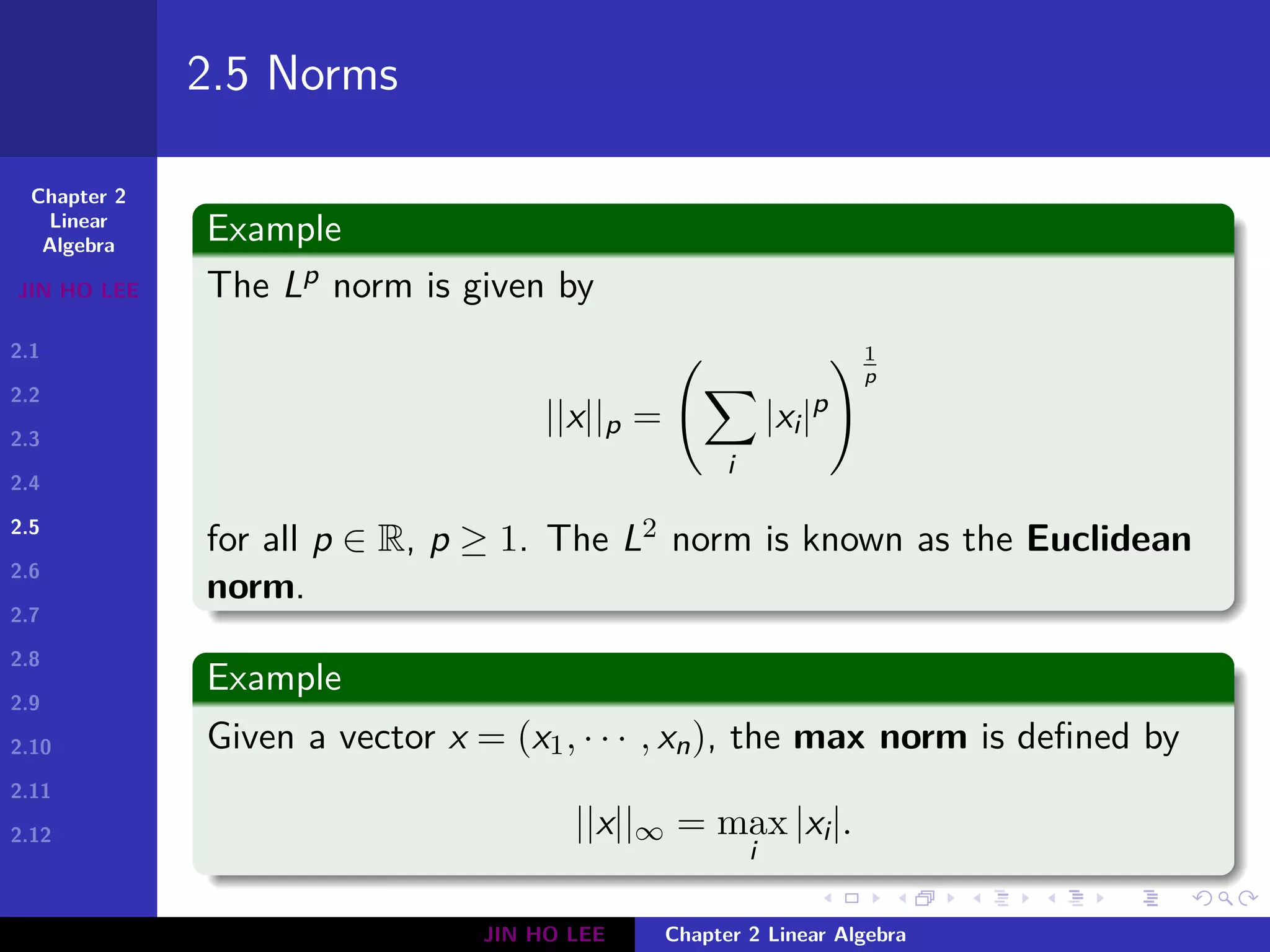

This document outlines the key topics covered in Chapter 2 of the book "Linear Algebra" by Jin Ho Lee. The chapter introduces fundamental concepts in linear algebra including scalars, vectors, matrices, and tensors. It describes operations on these objects such as matrix multiplication and vector dot products. Important matrix properties and special types of matrices like identity, inverse, diagonal, and symmetric matrices are defined. Linear dependence, spans, and vector spaces are discussed. Various vector and matrix norms are also introduced.

![Chapter 2

Linear

Algebra

JIN HO LEE

2.1

2.2

2.3

2.4

2.5

2.6

2.7

2.8

2.9

2.10

2.11

2.12

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

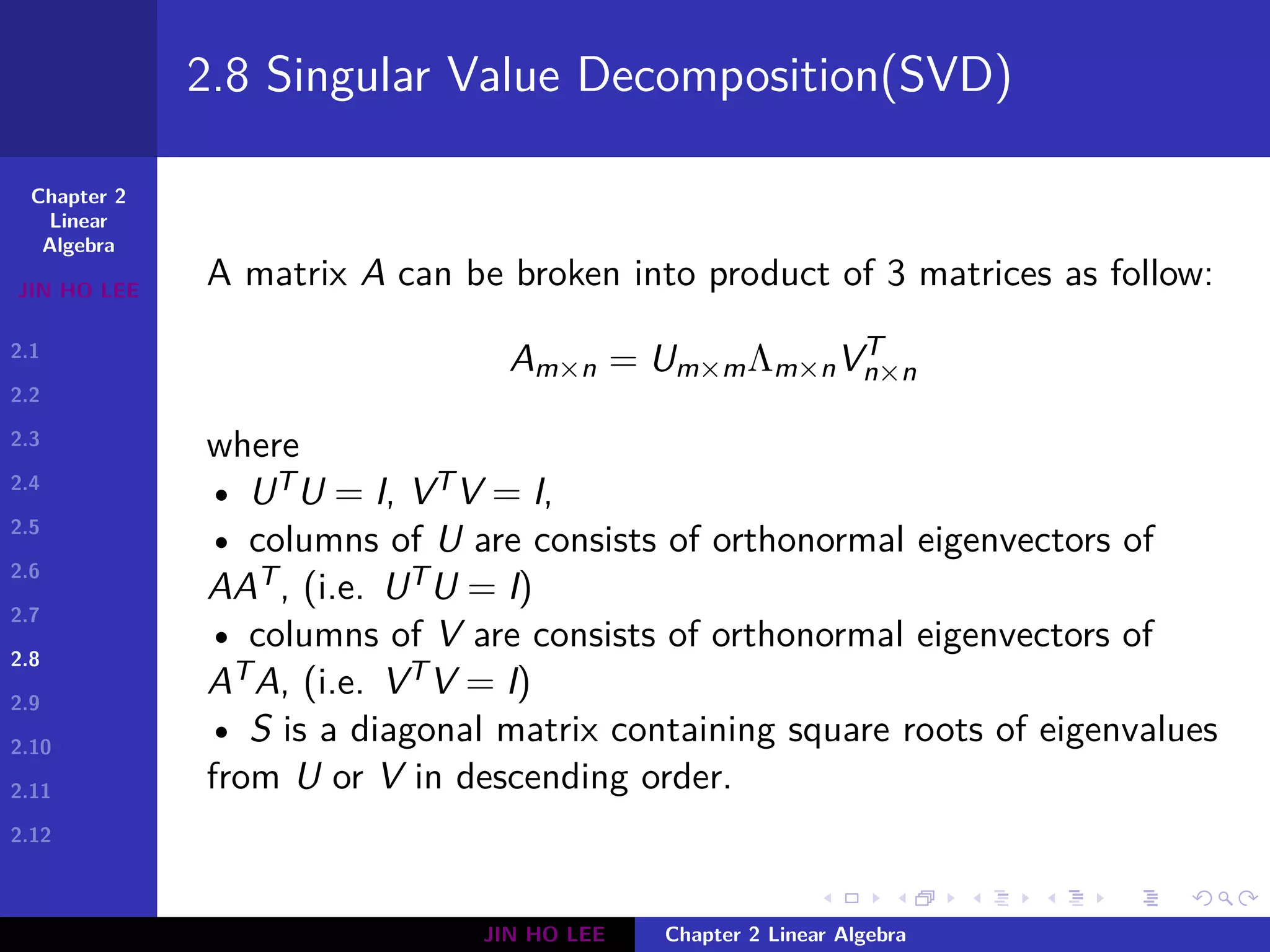

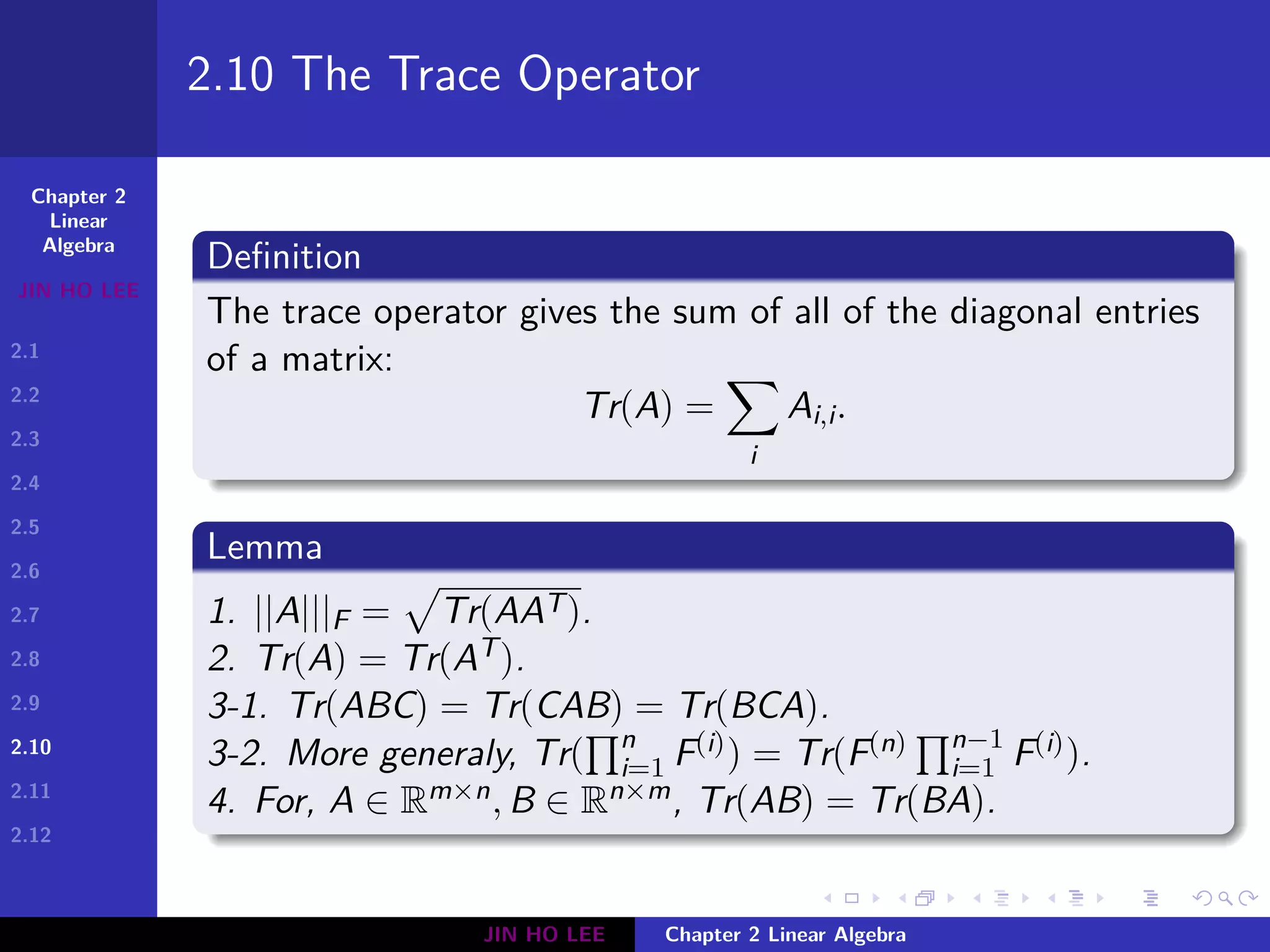

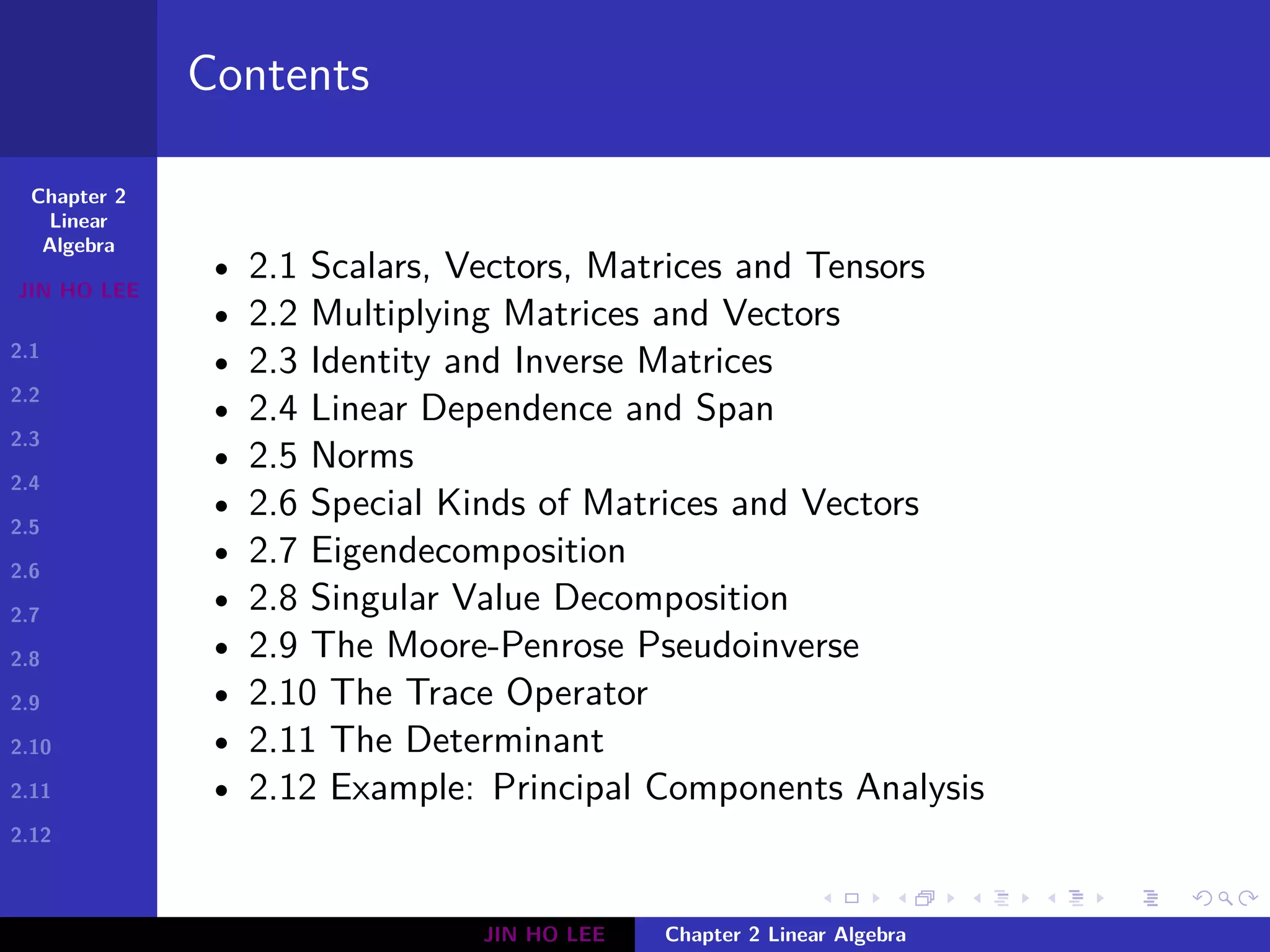

2.1 Scalars, Vectors, Matrices and Tensors

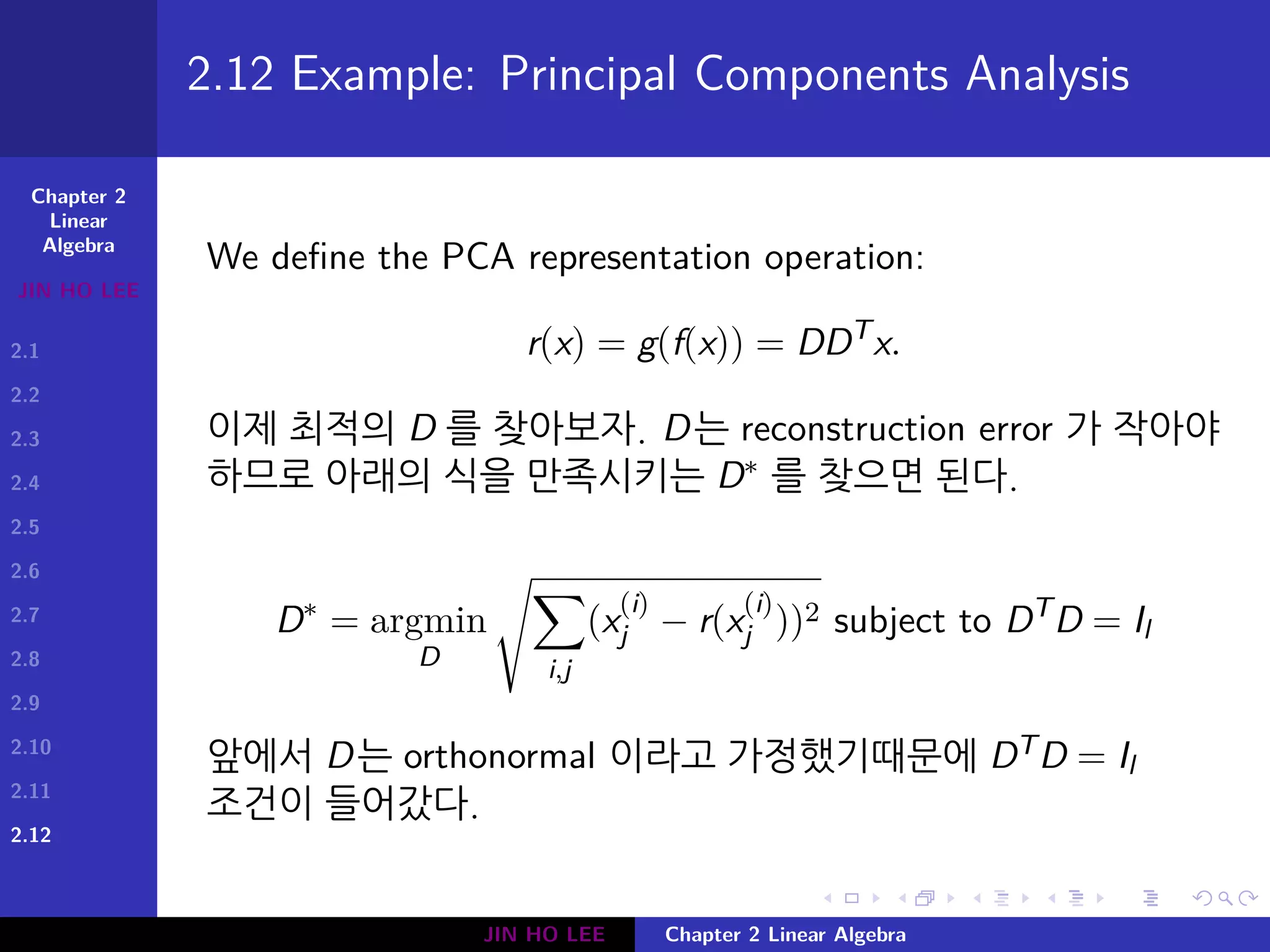

real valued function f 가 있을 때 matrix 에 적용할 수 있는데,

방법은 entry를 각각 f로 보내는 것이다. 예를 들어 f(x) = 2x

이고 A =

[

1 2

3 4

]

이면 f(A)i,j =

[

f(1) f(2)

f(3) f(4)

]

=

[

2 4

6 8

]

이다.

• Tensors : 3차원 이상의 숫자 배열을 tensor 라고 한다. A 의

(i, j, k) coordinate를 Ai,j,k 로 쓰자.

• Transpose : The transpose of a matrix AT is the mirror

image of the matrix across a diagonal line, called the main

diagonal, that is

(AT

)i,j = Aj,i.

JIN HO LEE Chapter 2 Linear Algebra](https://image.slidesharecdn.com/2-linearalgebra-180524152143/75/Ch-2-Linear-Algebra-6-2048.jpg)
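The entry-wise function application, tensor indexing, and transpose described on this slide can be sketched in a few lines. This is a minimal illustration that assumes NumPy, which the slides themselves do not mention; the tensor `T` and its shape are hypothetical values chosen only to show three-index access.

```python
import numpy as np


def f(x):
    """The example function f(x) = 2x from the slide."""
    return 2 * x


A = np.array([[1, 2],
              [3, 4]])

# Apply f to every entry: f(A)[i, j] = f(A[i, j]).
f_A = np.vectorize(f)(A)

# Transpose: (A^T)[i, j] = A[j, i].
A_T = A.T

# A tensor is an array with three or more axes (hypothetical 2 x 3 x 4 example).
T = np.arange(24).reshape(2, 3, 4)
entry = T[1, 2, 3]  # the entry at coordinates (i, j, k) = (1, 2, 3), zero-based

print(f_A)
print(A_T)
print(entry)
```

For a function as simple as `f`, writing `2 * A` gives the same result, because NumPy arithmetic is already entry-wise; `np.vectorize` is used here only to make the "apply f to each entry" rule explicit.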

![Chapter 2

Linear

Algebra

JIN HO LEE

2.1

2.2

2.3

2.4

2.5

2.6

2.7

2.8

2.9

2.10

2.11

2.12

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

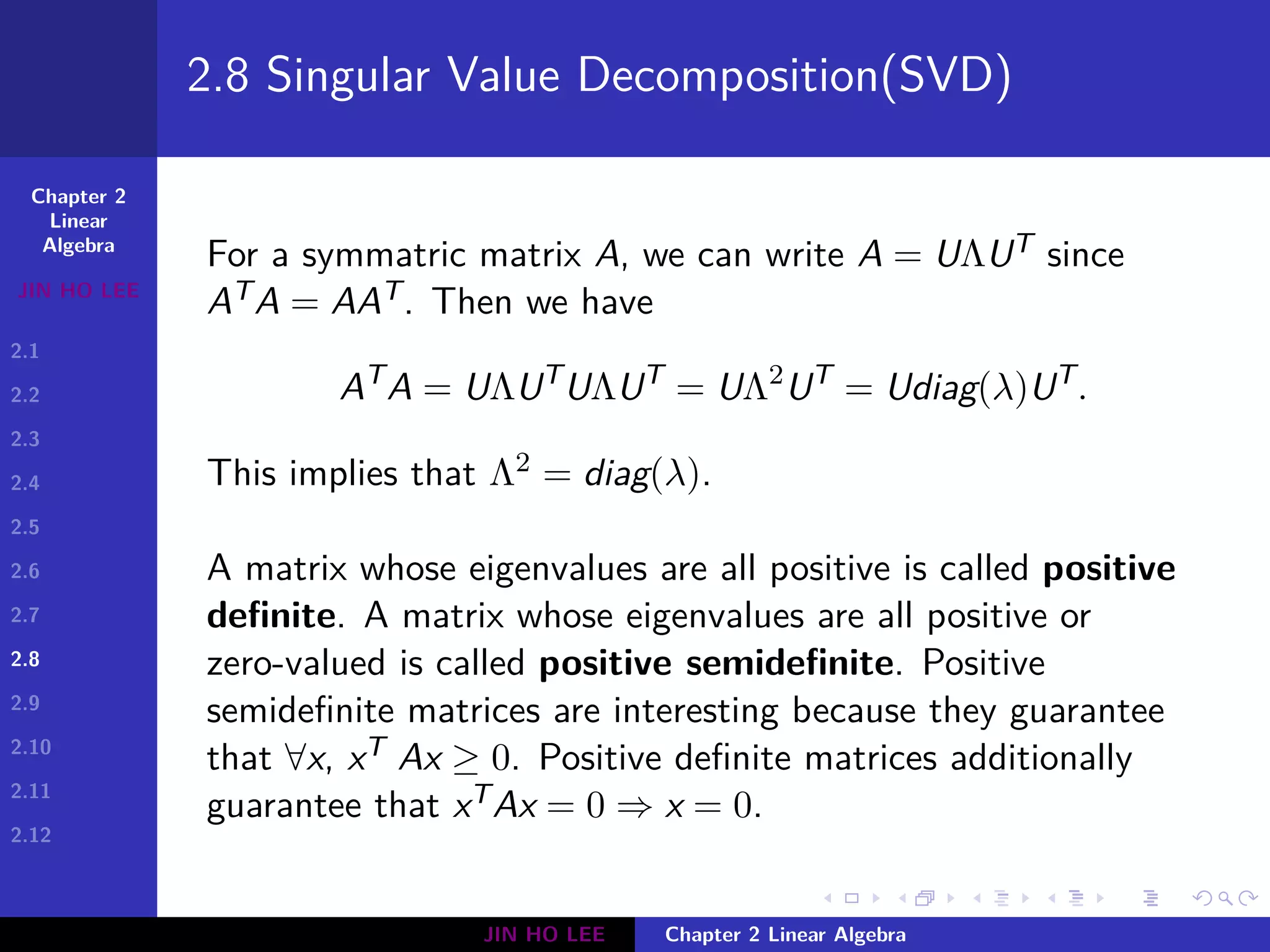

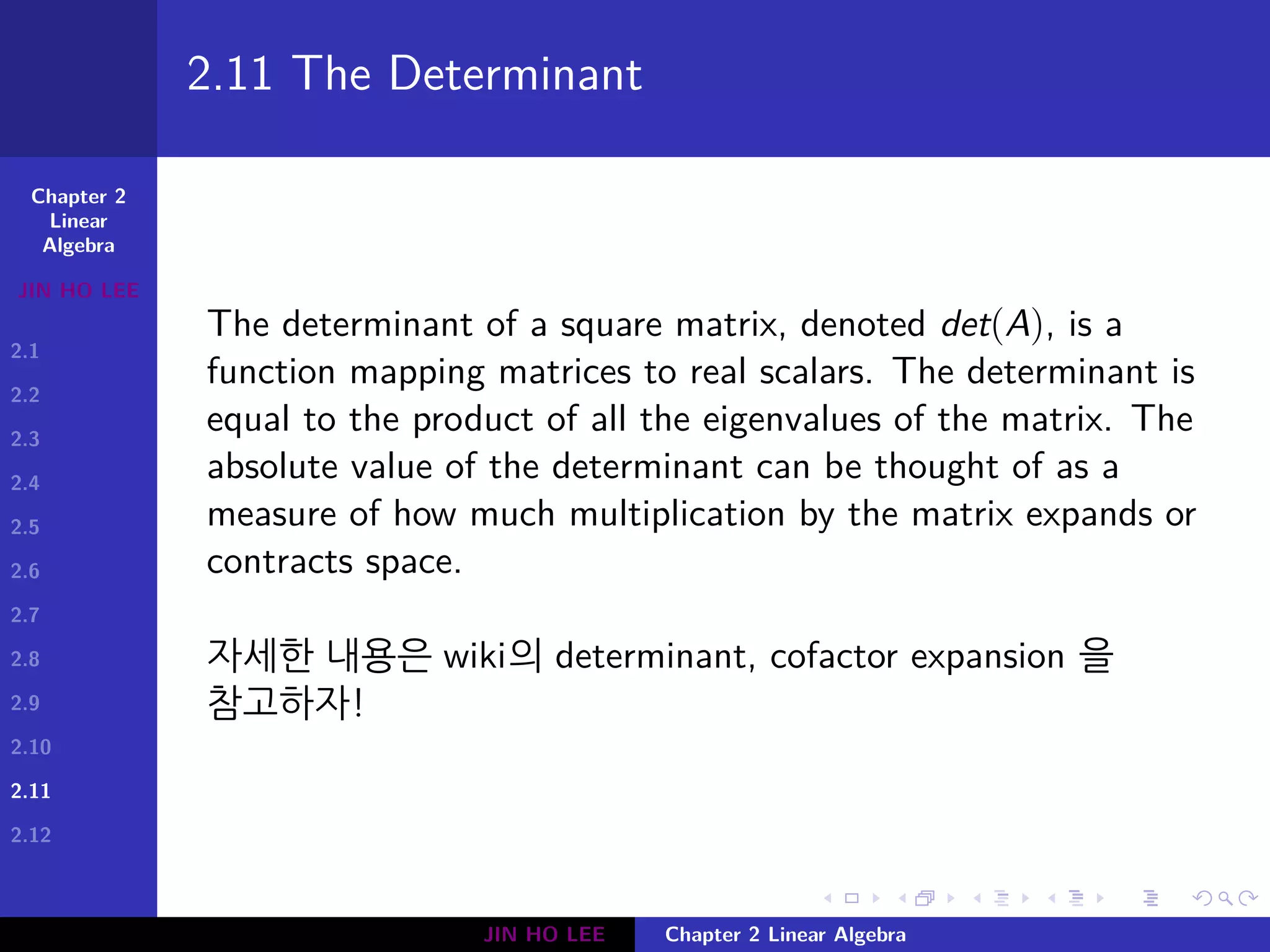

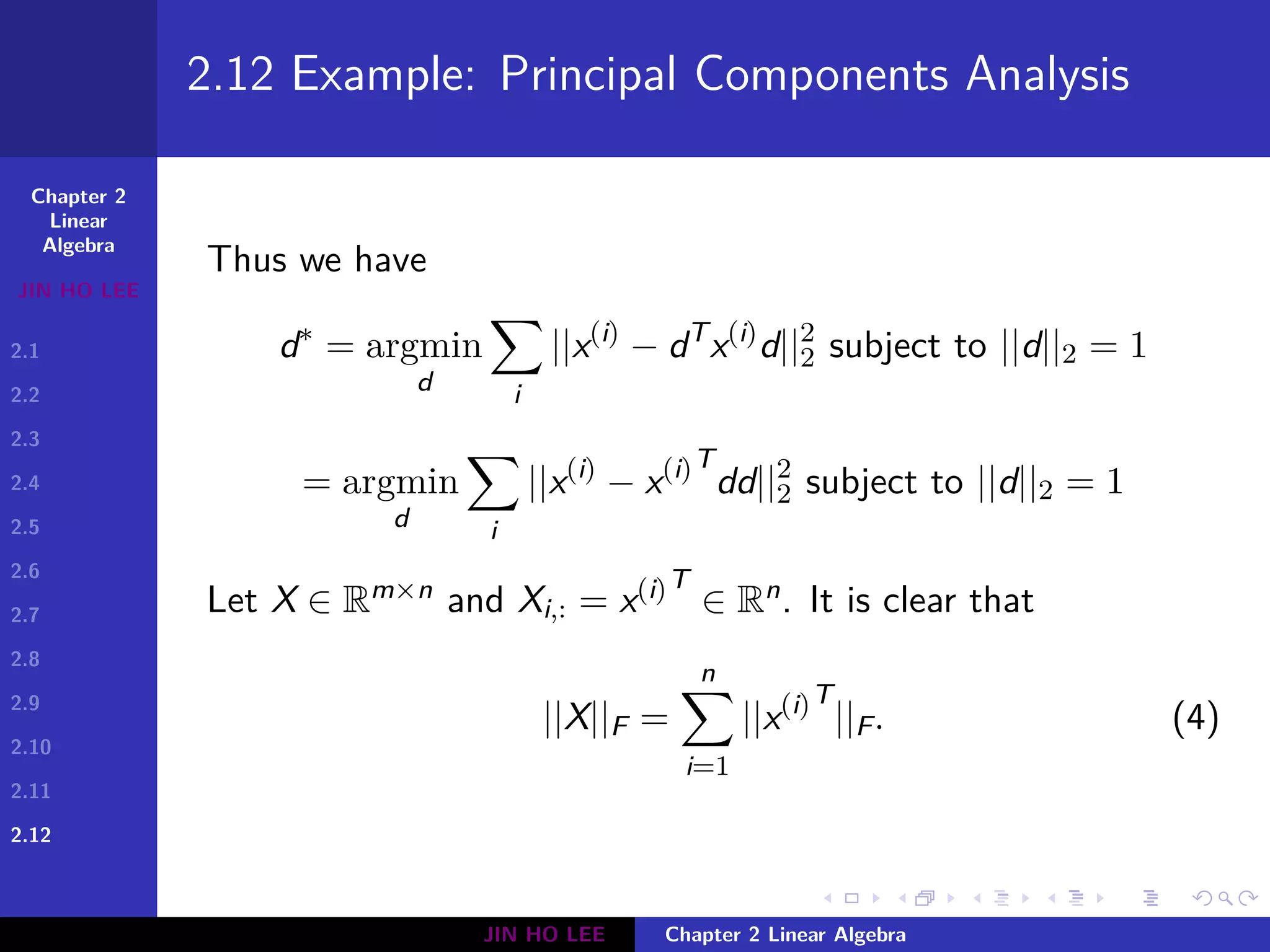

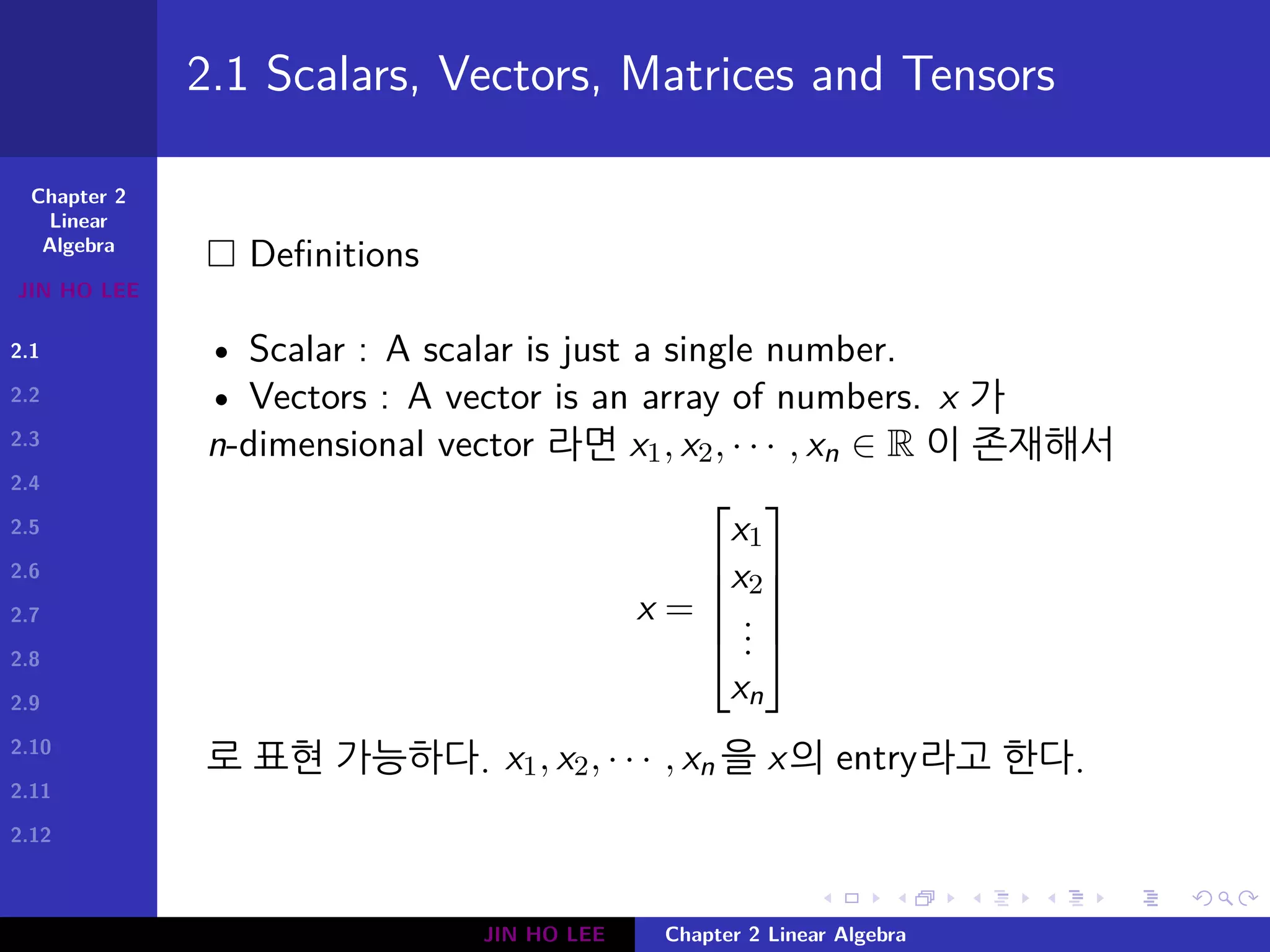

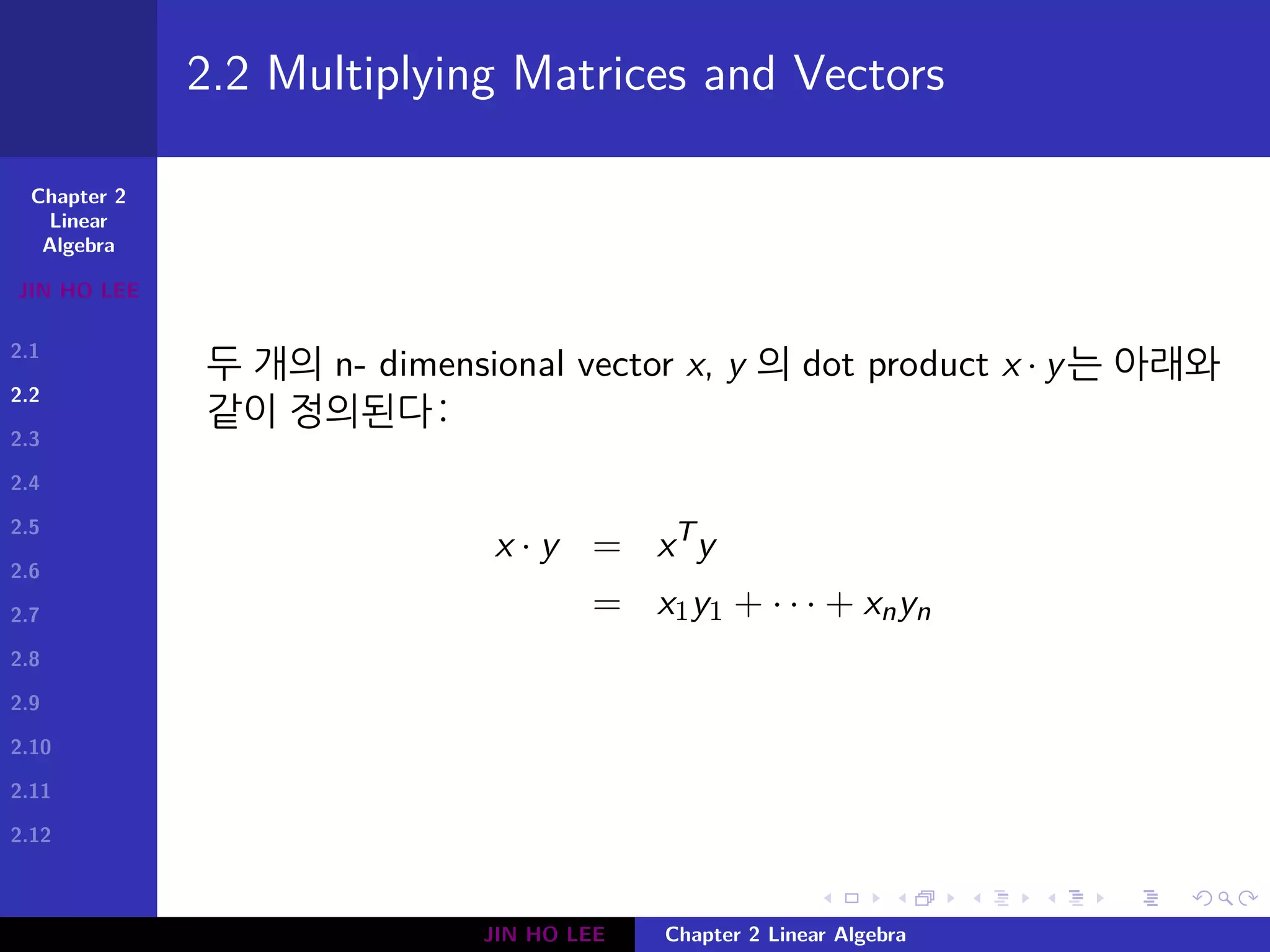

2.4 Linear Dependence and Span

scalar c1, · · · , cn 와 vector v(1), · · · , v(n) 가 있을 때

∑

i

civ(i)

= c1v(1)

+ · · · + ckv(n)

형태를 linear combination 이라고 한다.

vector 들의 집합 S = {v1, · · · , vn}가 있을 때, S가 span 하는

벡터공간 아래와 같이 정의된다:

{c1v1 + · · · + cnvn|c1, · · · , cn ∈ R}.

Example

v = [1, 2]T 일 때 {v1}가 span 하는 벡터공간은 아래와 같다:

{cv = [c, 2c]|c ∈ R}.

JIN HO LEE Chapter 2 Linear Algebra](https://image.slidesharecdn.com/2-linearalgebra-180524152143/75/Ch-2-Linear-Algebra-10-2048.jpg)
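The definitions of linear combination and span on this slide can be checked numerically. The sketch below assumes NumPy and uses a least-squares fit as the membership test, which is a technique chosen for illustration and not something the slides prescribe. The helper names `linear_combination` and `in_span`, the tolerance `tol`, and the test vectors are all hypothetical.

```python
import numpy as np


def linear_combination(coeffs, vectors):
    """Return c_1 v^(1) + ... + c_n v^(n)."""
    return sum(c * v for c, v in zip(coeffs, vectors))


def in_span(vectors, w, tol=1e-10):
    """Return True if w is (numerically) a linear combination of `vectors`.

    Finds the best coefficients by least squares and checks whether the
    remaining residual is negligible.
    """
    V = np.column_stack(vectors)  # one spanning vector per column
    c, *_ = np.linalg.lstsq(V, w, rcond=None)
    return bool(np.linalg.norm(V @ c - w) <= tol)


v = np.array([1.0, 2.0])  # the vector [1, 2]^T from the example

print(linear_combination([3.0], [v]))        # 3 * v, a point on the line {cv}
print(in_span([v], np.array([3.0, 6.0])))    # has the form [c, 2c]
print(in_span([v], np.array([1.0, 0.0])))    # does not have the form [c, 2c]
```

The tolerance is needed because floating-point arithmetic rarely produces a residual of exactly zero, even for vectors that lie in the span.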

![Chapter 2

Linear

Algebra

JIN HO LEE

2.1

2.2

2.3

2.4

2.5

2.6

2.7

2.8

2.9

2.10

2.11

2.12

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

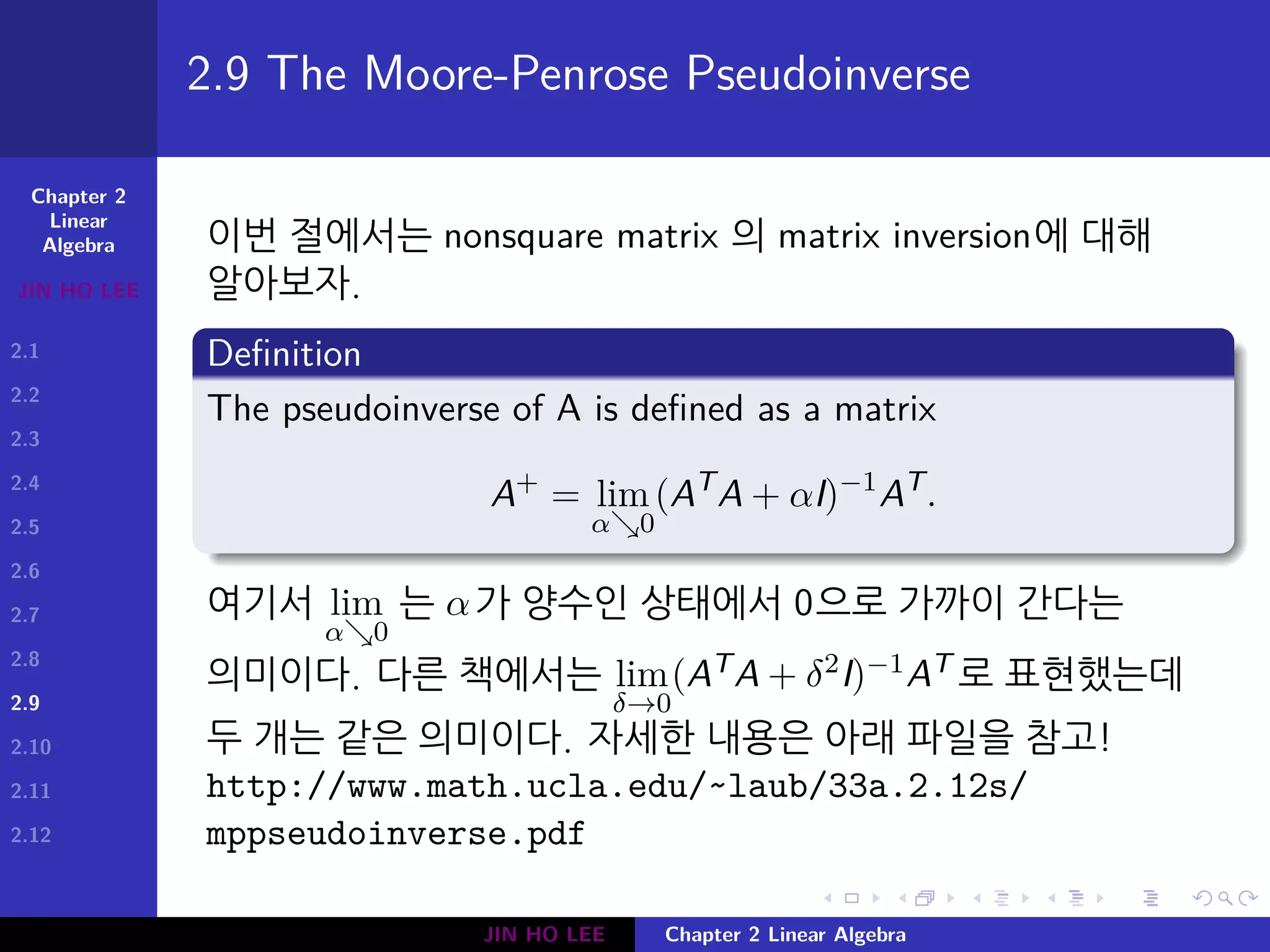

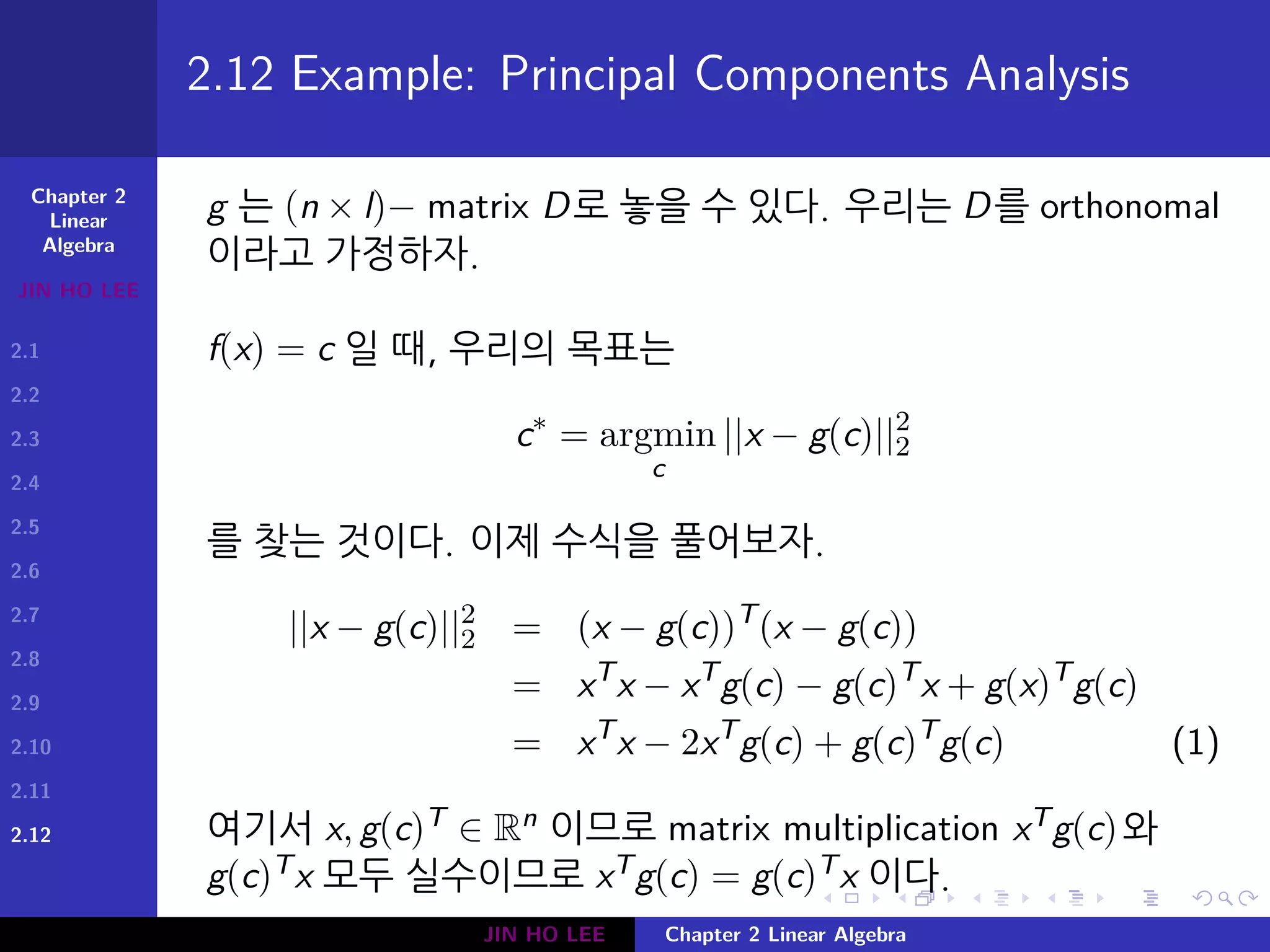

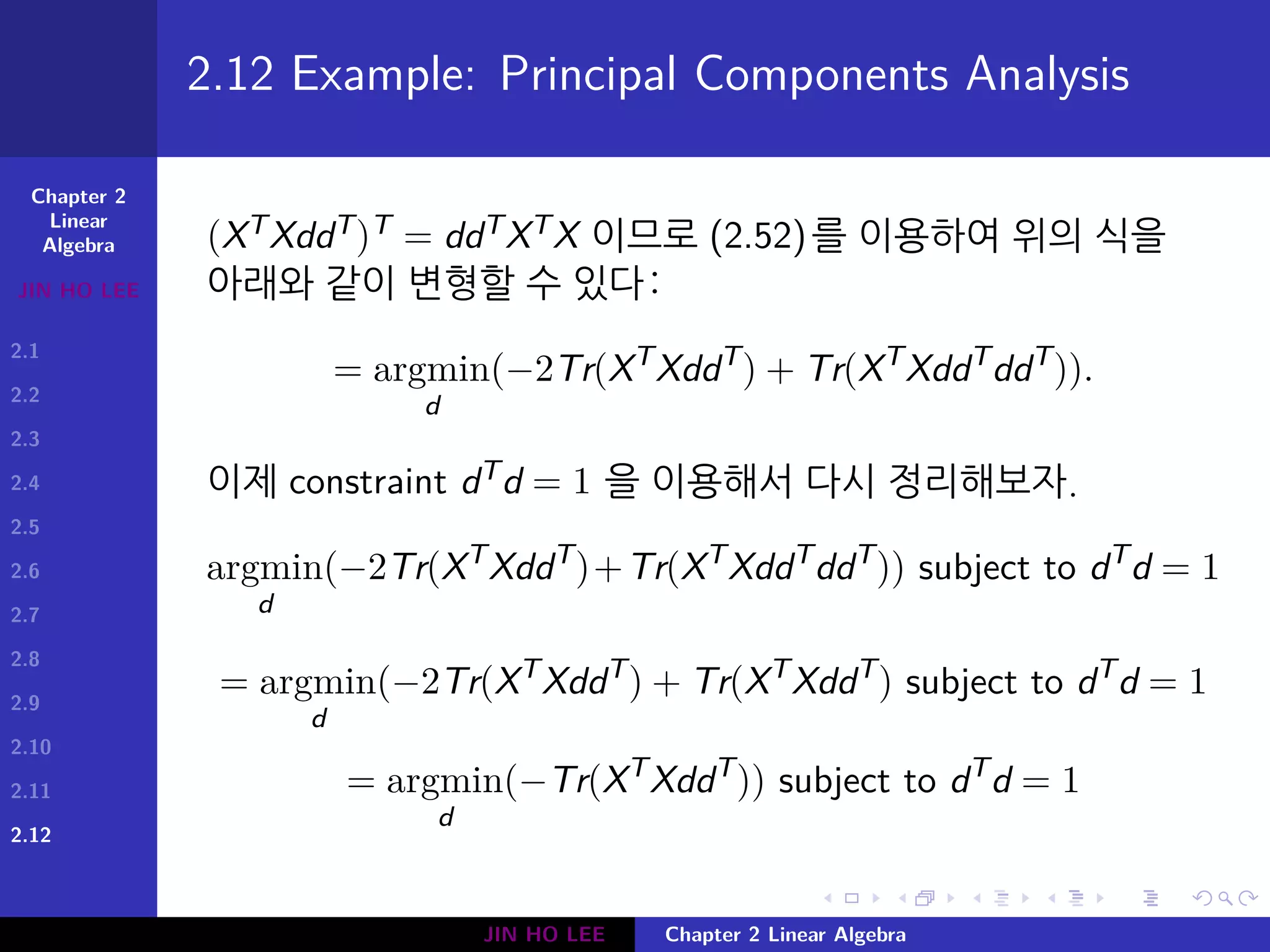

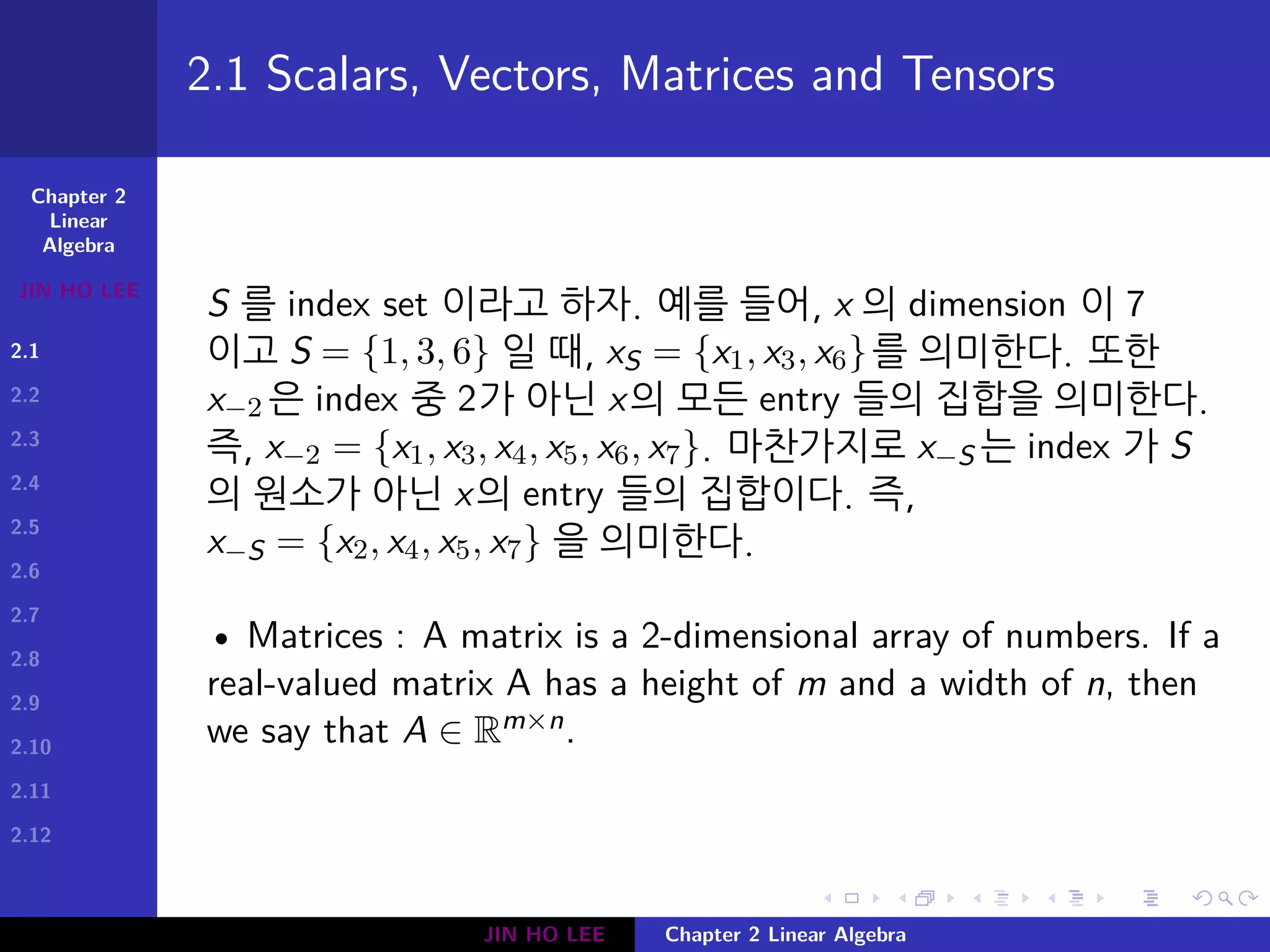

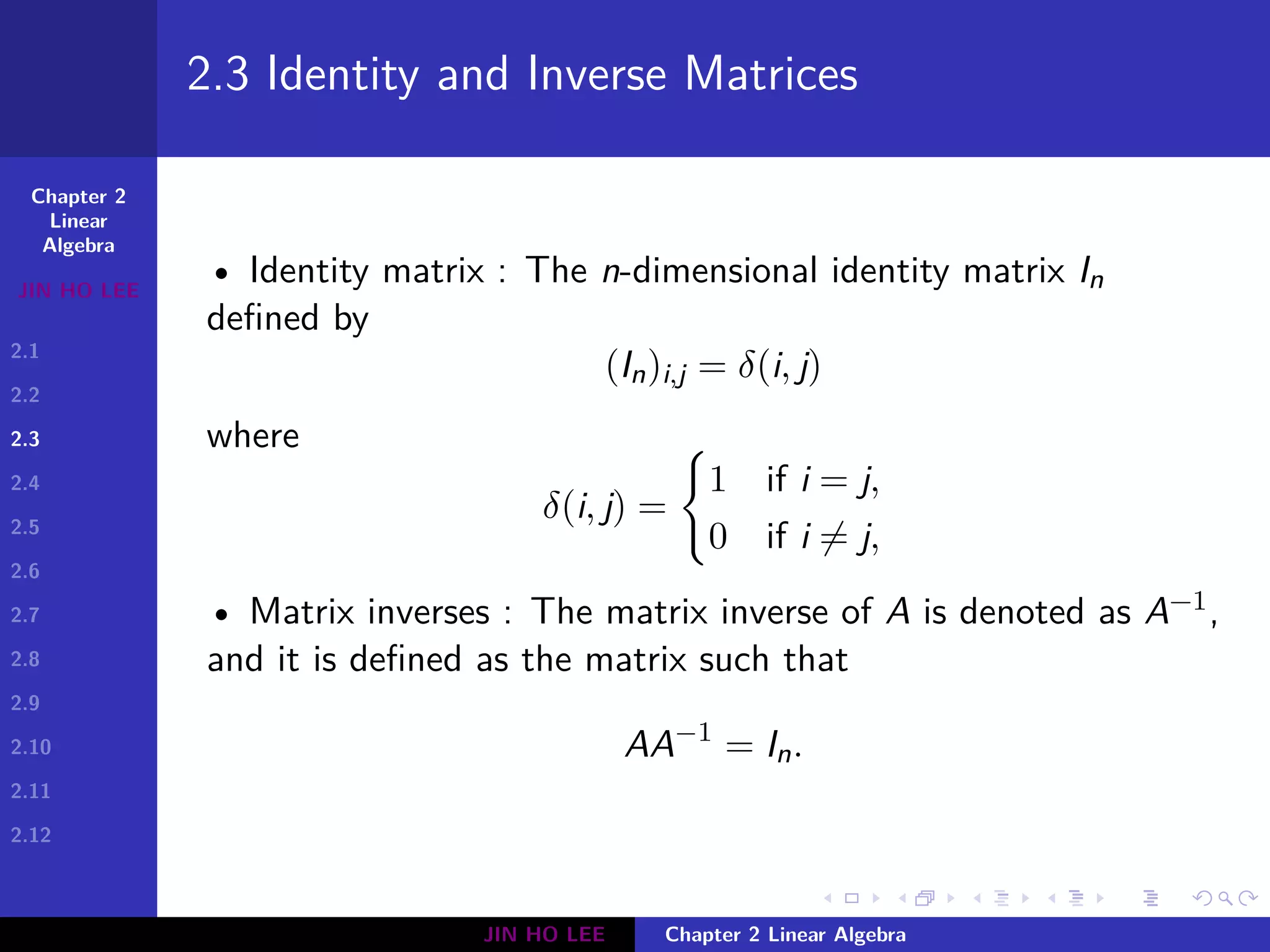

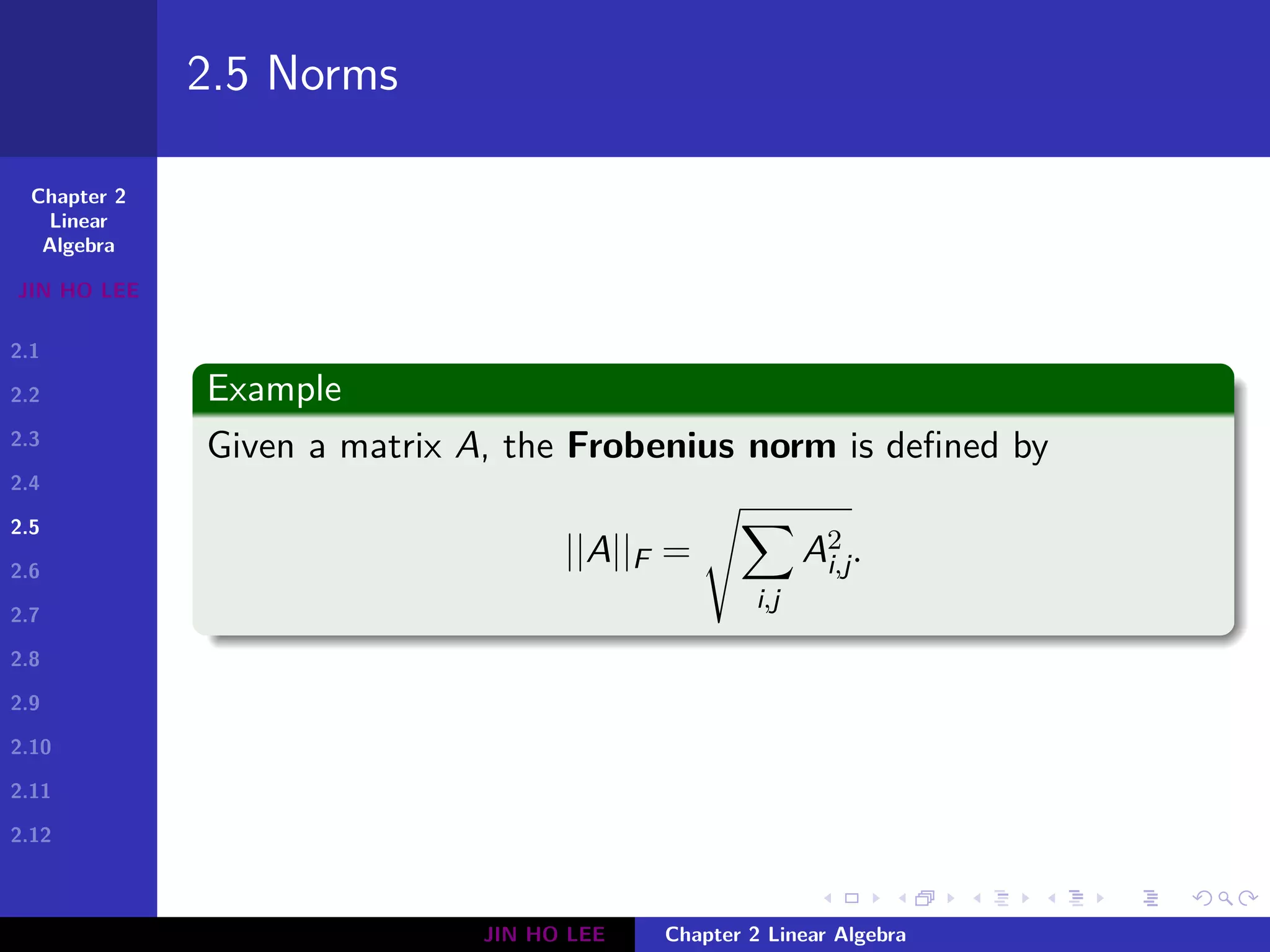

2.6 Special Kinds of Matrices and Vectors

Definition

A matrix D is diagonal if Di,j = 0 for i ̸= j.

Given a vector v = (v1, · · · , vn), we write diag(v) to denote a

square diagonal matrix whose diagonal entries are given by the

entries of the vector v. For a vector v = (1, 2), we have

diag(v) =

[

1 0

0 2

]

It is clear that diag(v)x = v ⊙ x for any vector x. If vi ̸= 0 for

any i = 1, · · · , n, we denote

diag(v)−1

= diag([1/v1, · · · , 1/vn]T

).

JIN HO LEE Chapter 2 Linear Algebra](https://image.slidesharecdn.com/2-linearalgebra-180524152143/75/Ch-2-Linear-Algebra-15-2048.jpg)
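The two identities on this slide are easy to verify numerically. The following is a small sketch assuming NumPy, using the slide's vector $v = (1, 2)$; the vector `x` is a hypothetical value picked only for the check.

```python
import numpy as np

v = np.array([1.0, 2.0])   # the vector from the slide
x = np.array([5.0, 7.0])   # hypothetical vector used for the check

D = np.diag(v)             # square matrix with v on the main diagonal

# diag(v) x equals the element-wise product v ⊙ x.
assert np.allclose(D @ x, v * x)

# Every v_i is nonzero, so the inverse is the diagonal matrix of reciprocals.
D_inv = np.diag(1.0 / v)
assert np.allclose(D_inv @ D, np.eye(len(v)))
assert np.allclose(D_inv, np.linalg.inv(D))

print(D)
print(D_inv)
```

Both identities make diagonal matrices cheap to work with: multiplying by $\mathrm{diag}(v)$ only scales each entry of $x$, and inverting it only takes $n$ reciprocals, so the full matrix never needs to be formed in practice.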

![Chapter 2

Linear

Algebra

JIN HO LEE

2.1

2.2

2.3

2.4

2.5

2.6

2.7

2.8

2.9

2.10

2.11

2.12

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

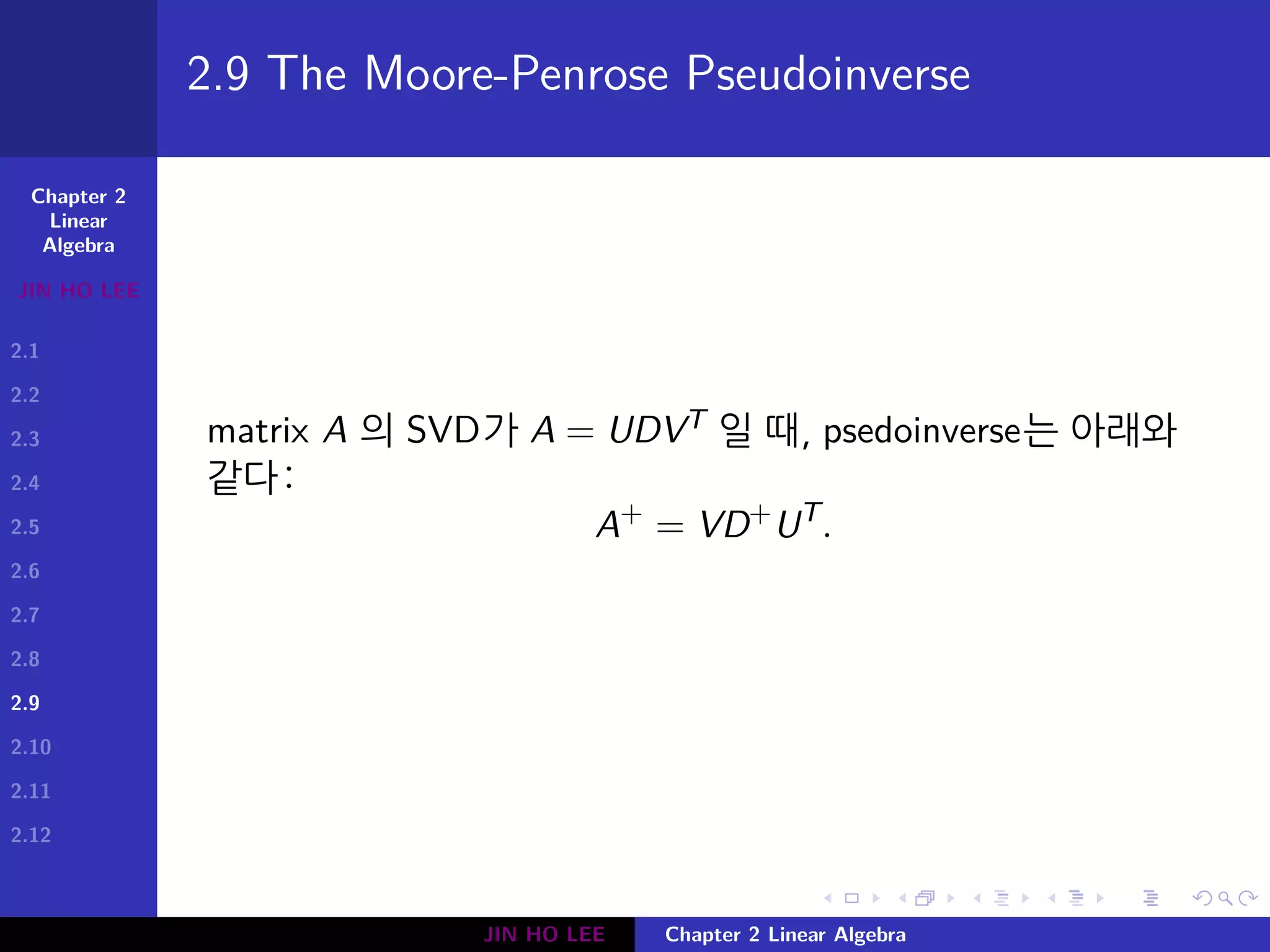

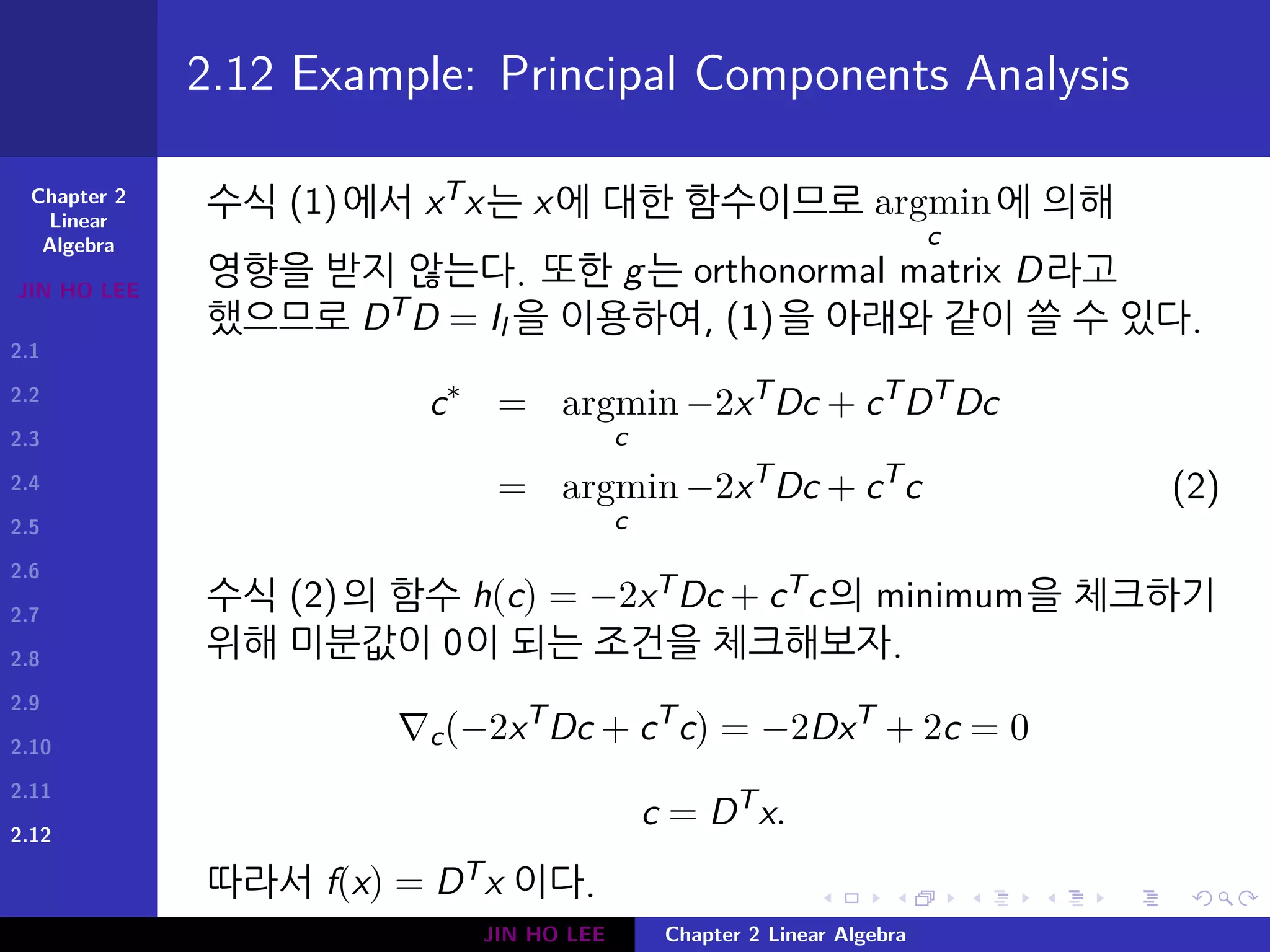

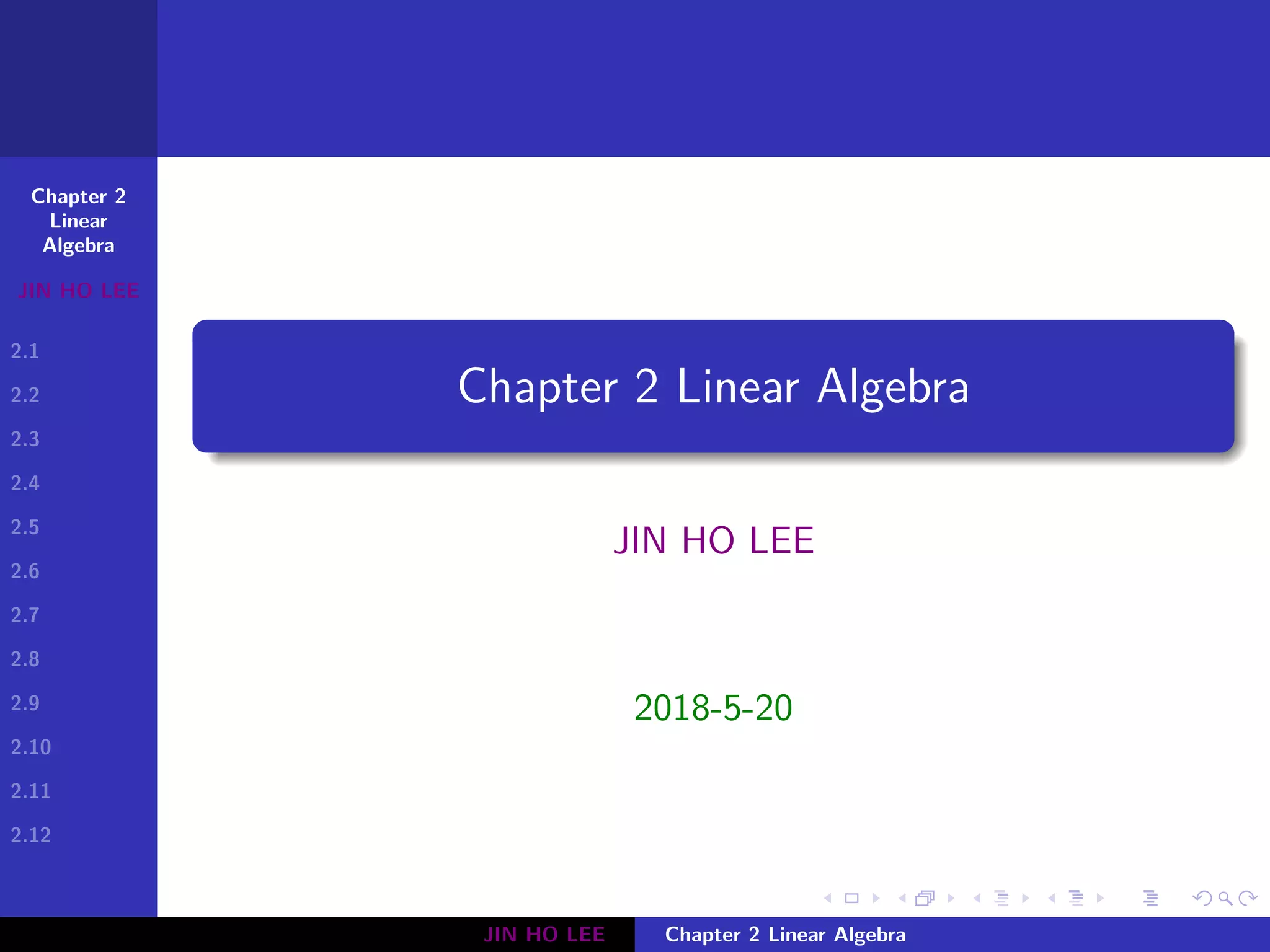

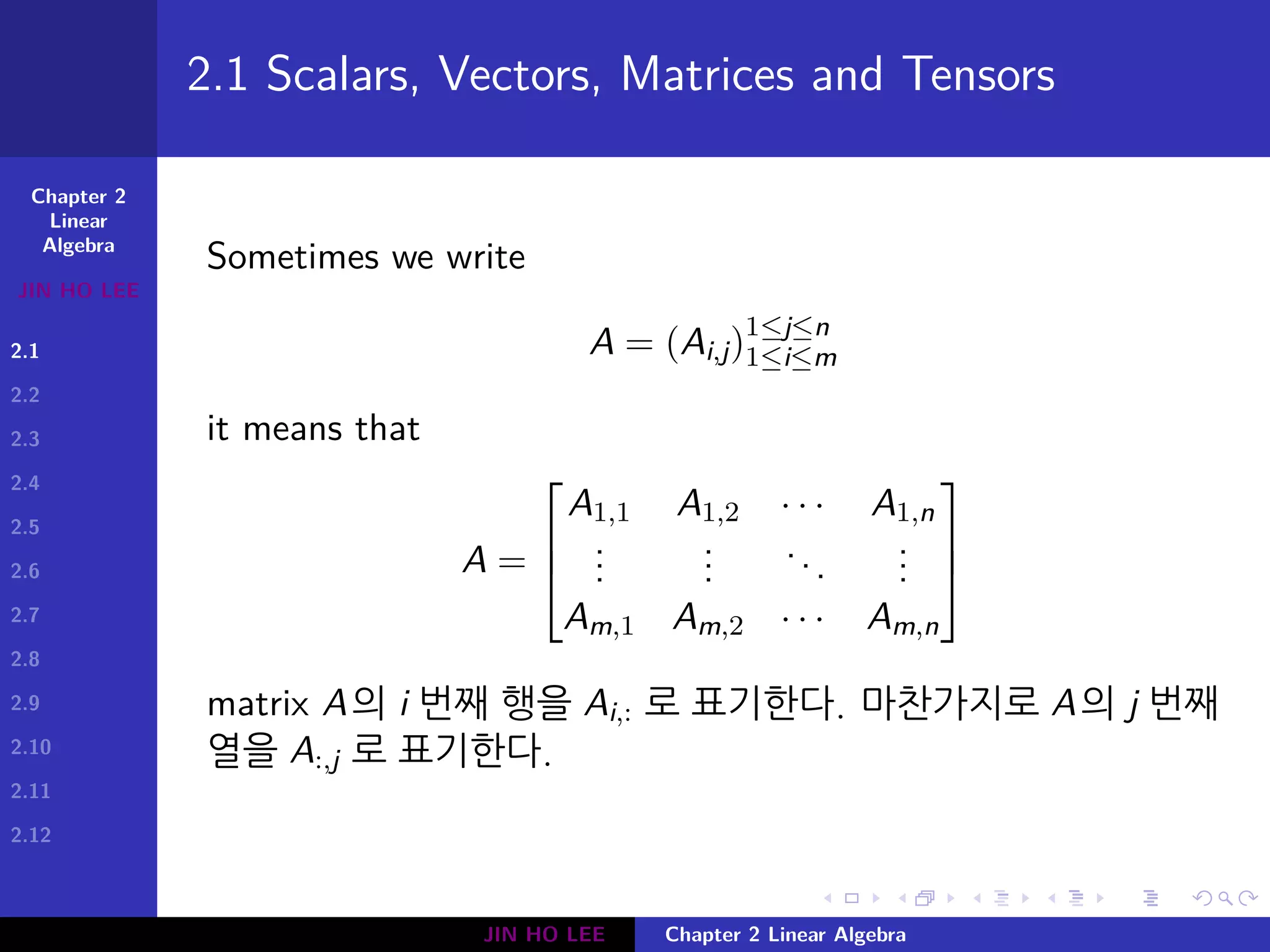

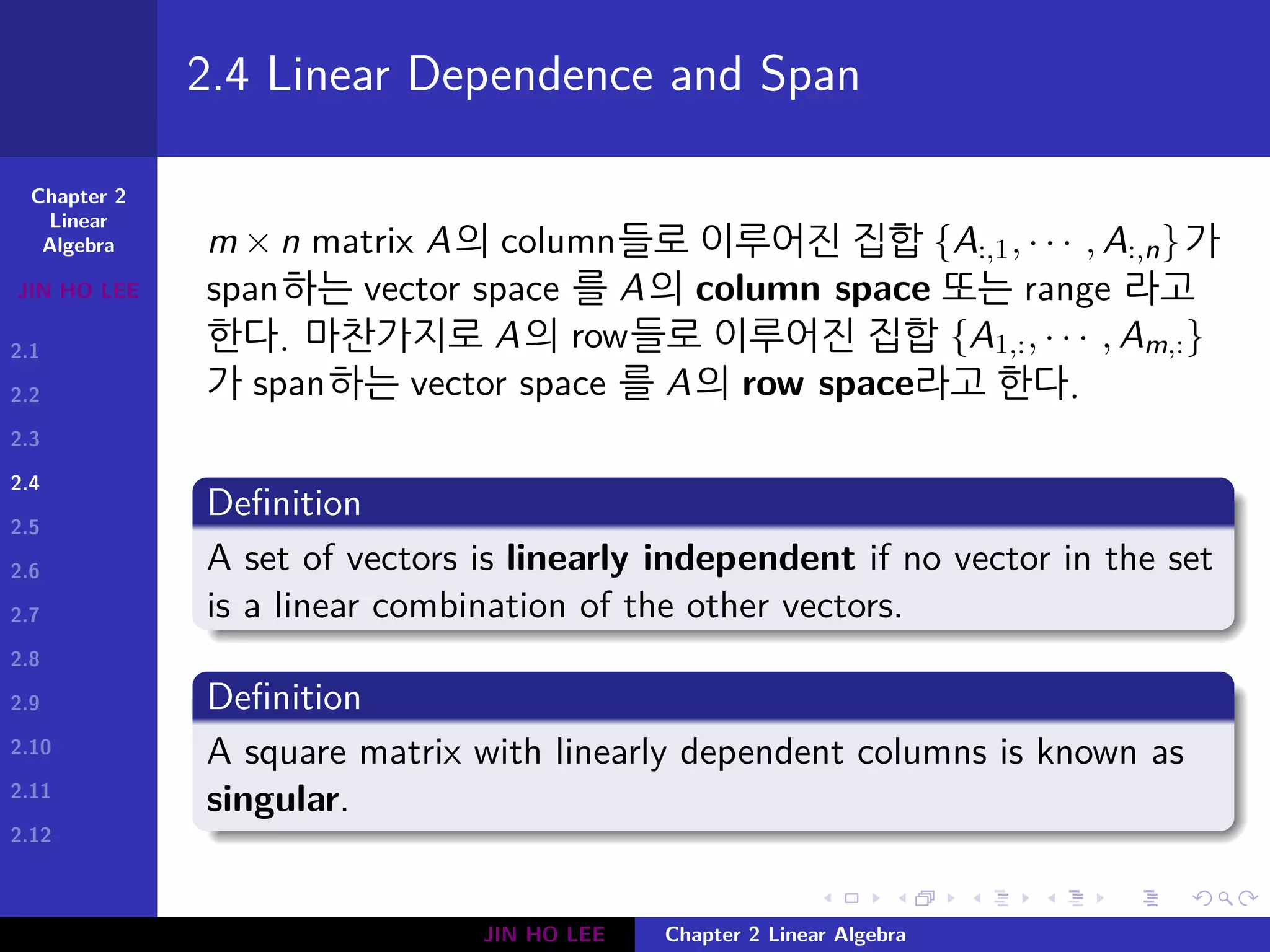

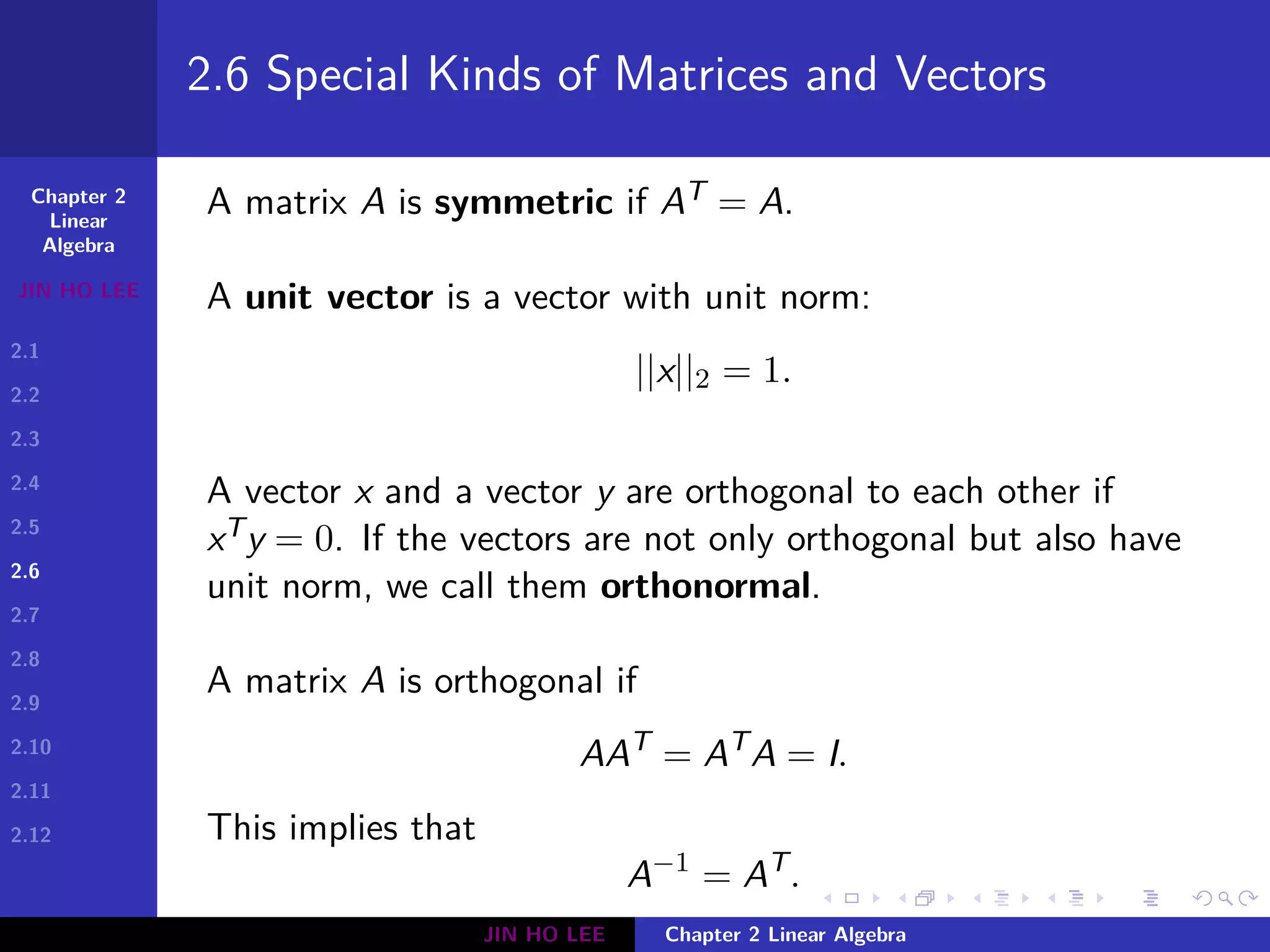

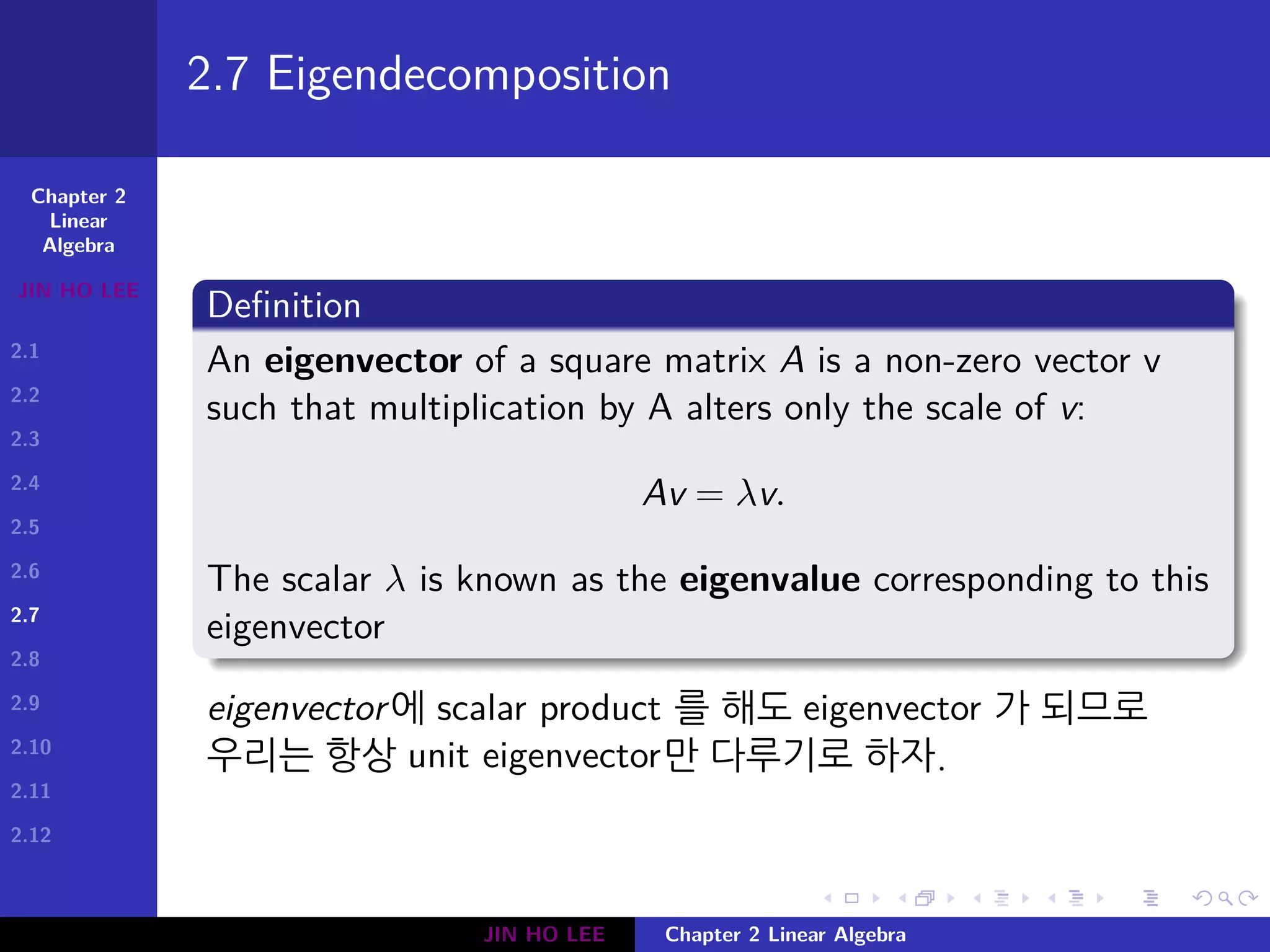

2.7 Eigendecomposition

Suppose that a matrix A has n linearly independent

eigenvectors, {v(1), · · · , v(n)}, with corresponding eigenvalues

{λ1, ..., λn}. We may concatenate all of the eigenvectors to

form a matrix V with one eigenvector per column:

V = [v(1), · · · , v(n)]. Likewise, we can concatenate the

eigenvalues to form a vector λ = [λ1, · · · , λn].

The eigendecomposition of A is then given by

A = V diag(λ) V−1

.

JIN HO LEE Chapter 2 Linear Algebra](https://image.slidesharecdn.com/2-linearalgebra-180524152143/75/Ch-2-Linear-Algebra-18-2048.jpg)
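The factorization $A = V \, \mathrm{diag}(\lambda) \, V^{-1}$ can be reproduced numerically. This sketch assumes NumPy, whose `np.linalg.eig` returns the eigenvalues together with a matrix holding one eigenvector per column; the matrix `A` below is a hypothetical example, not one taken from the slides.

```python
import numpy as np

# Hypothetical 2 x 2 matrix with two linearly independent eigenvectors.
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# lam holds the eigenvalues; column i of V is the eigenvector for lam[i].
lam, V = np.linalg.eig(A)

# Each pair satisfies A v = lambda v.
for i in range(len(lam)):
    assert np.allclose(A @ V[:, i], lam[i] * V[:, i])

# Rebuild A from its eigendecomposition: A = V diag(lambda) V^{-1}.
A_rebuilt = V @ np.diag(lam) @ np.linalg.inv(V)
assert np.allclose(A_rebuilt, A)

print(lam)
print(A_rebuilt)
```

The reconstruction relies on $V$ being invertible, which is exactly the slide's requirement that the $n$ eigenvectors be linearly independent.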