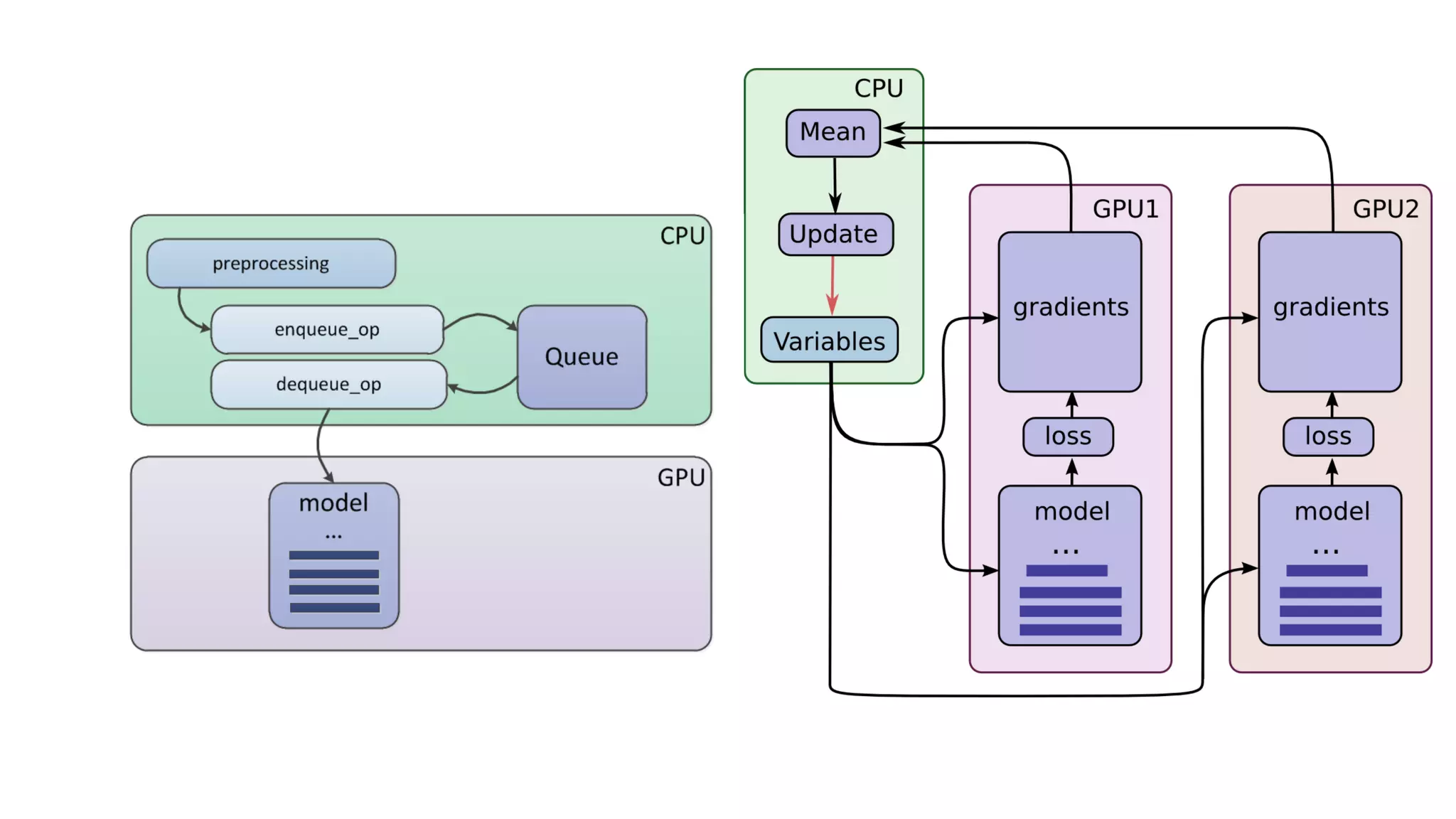

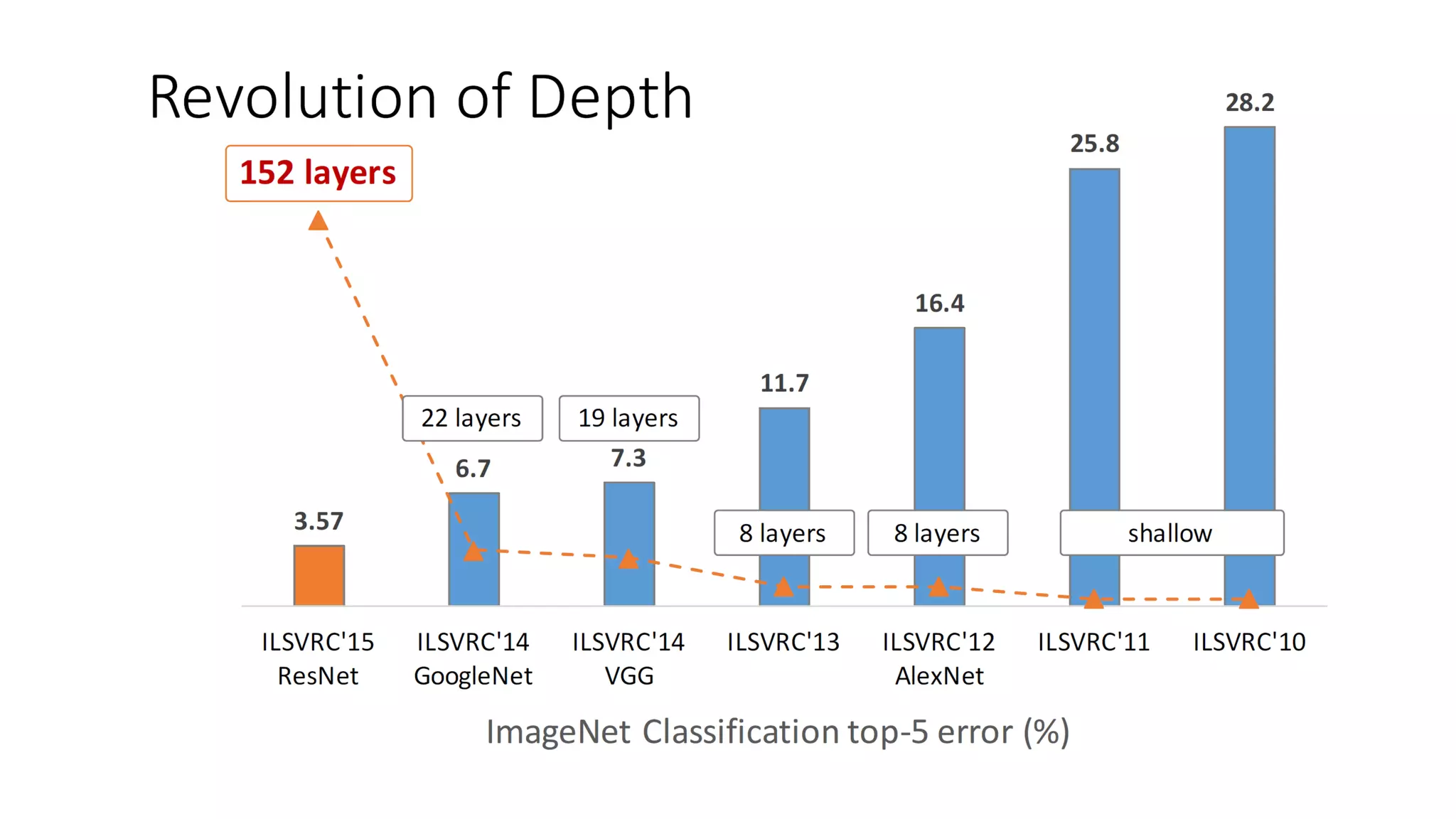

The presentation covers deep learning with TensorFlow, focusing on a deep residual network (ResNet) for classifying fashion images. It walks through the architecture, including the convolutional layers and the training step, and describes a dataset of approximately 300,000 images in 23 categories. It also highlights TensorFlow's GPU support and its automatic computation of gradients.

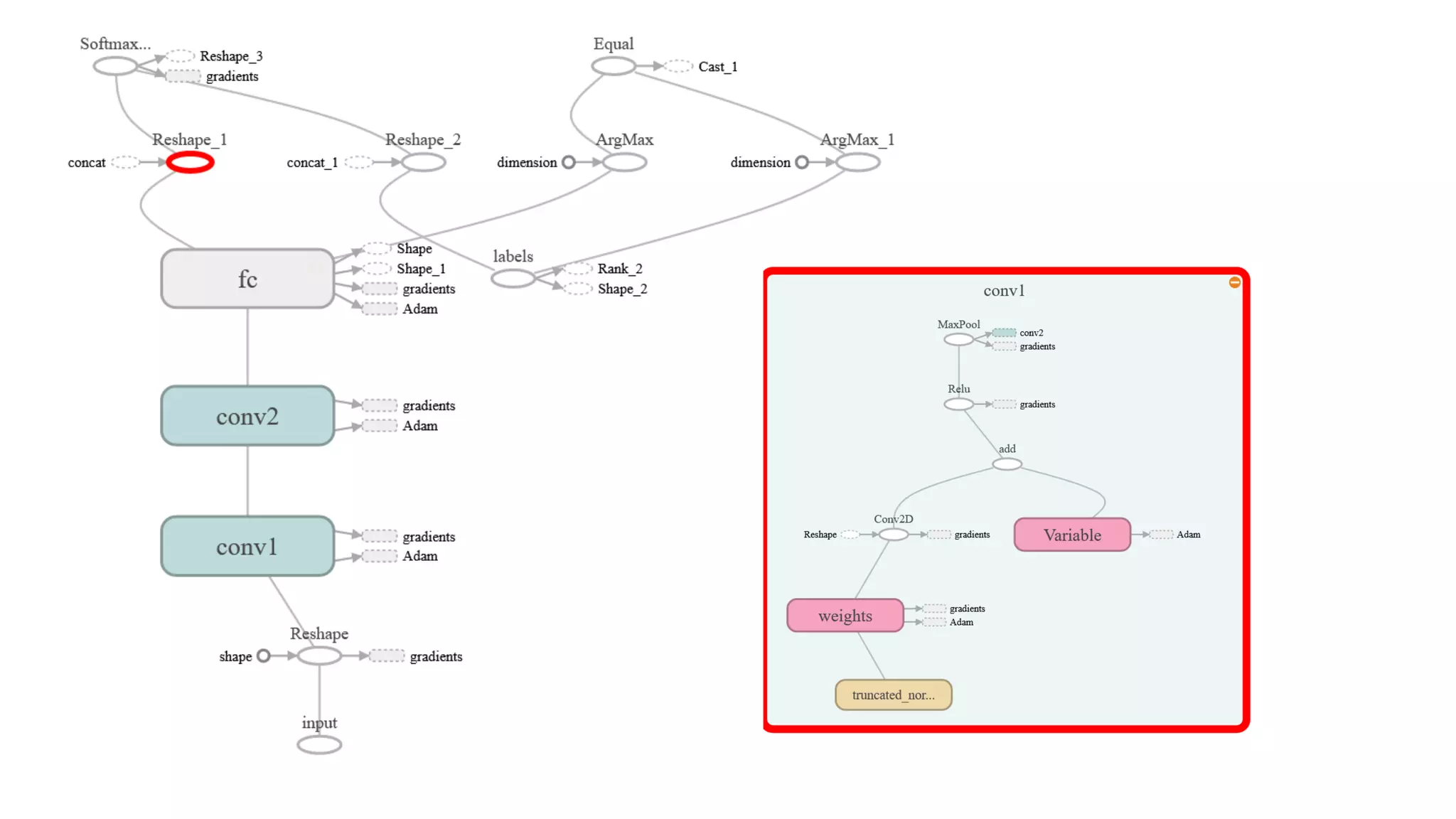

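![Slide: a small convolutional network defined as a TensorFlow graph](https://image.slidesharecdn.com/dsromaniukshelpukdeep-learning-tensorflow-and-fashion-170504064659/75/Sergey-Shelpuk-Olha-Romaniuk-Deep-learning-Tensorflow-and-Fashion-how-to-stay-in-trend-AI-BigDataDay-2017-10-2048.jpg)

```python
import tensorflow as tf  # TensorFlow 1.x graph-mode API

# The helpers are not shown on the slide; these definitions are assumed and
# follow the style of the TensorFlow 1.x "Deep MNIST" tutorial.
def weight_variable(shape):
    return tf.Variable(tf.truncated_normal(shape, stddev=0.1))

def bias_variable(shape):
    return tf.Variable(tf.constant(0.1, shape=shape))

def conv2d(x, W):
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

def max_pool_2x2(x):
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

graph = tf.Graph()
with graph.as_default():
    # Flattened 28x28 grayscale images and one-hot labels for 10 classes
    x = tf.placeholder(tf.float32, shape=[None, 28 * 28], name='input')
    y = tf.placeholder(tf.float32, shape=[None, 10], name='labels')
    x_image = tf.reshape(x, [-1, 28, 28, 1])

    with tf.variable_scope('conv1'):
        # 5x5 convolution, 1 -> 32 channels; 2x2 pooling: 28x28 -> 14x14
        W_conv1 = weight_variable([5, 5, 1, 32])
        b_conv1 = bias_variable([32])
        h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)
        h_pool1 = max_pool_2x2(h_conv1)

    with tf.variable_scope('conv2'):
        ...  # elided on the slide; produces h_pool2 with shape [-1, 7, 7, 64]

    with tf.variable_scope('fc'):
        # Fully connected layer: flattened 7x7x64 feature map -> 10 logits
        W_fc = weight_variable([7 * 7 * 64, 10])
        b_fc = bias_variable([10])
        h_pool2_flat = tf.reshape(h_pool2, [-1, 7 * 7 * 64])
        y_conv = tf.matmul(h_pool2_flat, W_fc) + b_fc

    # Loss, optimizer step and accuracy metric
    cross_entropy = tf.reduce_mean(
        tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=y_conv))
    train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)
    correct_prediction = tf.equal(tf.argmax(y_conv, 1), tf.argmax(y, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
```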
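The loss and accuracy ops at the end of the graph can be mirrored in plain Python for one small batch. This is a framework-free, illustrative sketch: `softmax_cross_entropy`, `argmax` and `accuracy` are hypothetical helper names, not TensorFlow APIs. It computes the per-example cross-entropy from raw logits with the log-sum-exp trick, averages it the way `tf.reduce_mean` does, and counts a prediction as correct when the arg-max of the logits matches the arg-max of the one-hot label.

```python
import math

def softmax_cross_entropy(labels, logits):
    """Per-example cross-entropy between label distributions and raw logits."""
    losses = []
    for y, z in zip(labels, logits):
        m = max(z)  # subtract the max logit for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in z))
        # -sum_k y_k * log softmax(z)_k, where log softmax(z)_k = z_k - logsumexp(z)
        losses.append(-sum(yk * (zk - log_sum_exp) for yk, zk in zip(y, z)))
    return losses

def argmax(values):
    """Index of the largest value (first one on ties)."""
    return max(range(len(values)), key=lambda i: values[i])

def accuracy(labels, logits):
    """Fraction of examples whose predicted class matches the label."""
    correct = [argmax(y) == argmax(z) for y, z in zip(labels, logits)]
    return sum(correct) / len(correct)

logits = [[2.0, 0.0], [0.0, 3.0]]
labels = [[1.0, 0.0], [1.0, 0.0]]  # one-hot: both examples belong to class 0
loss = sum(softmax_cross_entropy(labels, logits)) / len(labels)  # mean, like tf.reduce_mean
print(round(loss, 4), accuracy(labels, logits))  # 1.5878 0.5
```

The second example is confidently wrong (logit 3.0 for the wrong class), so it dominates the mean loss and halves the accuracy.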

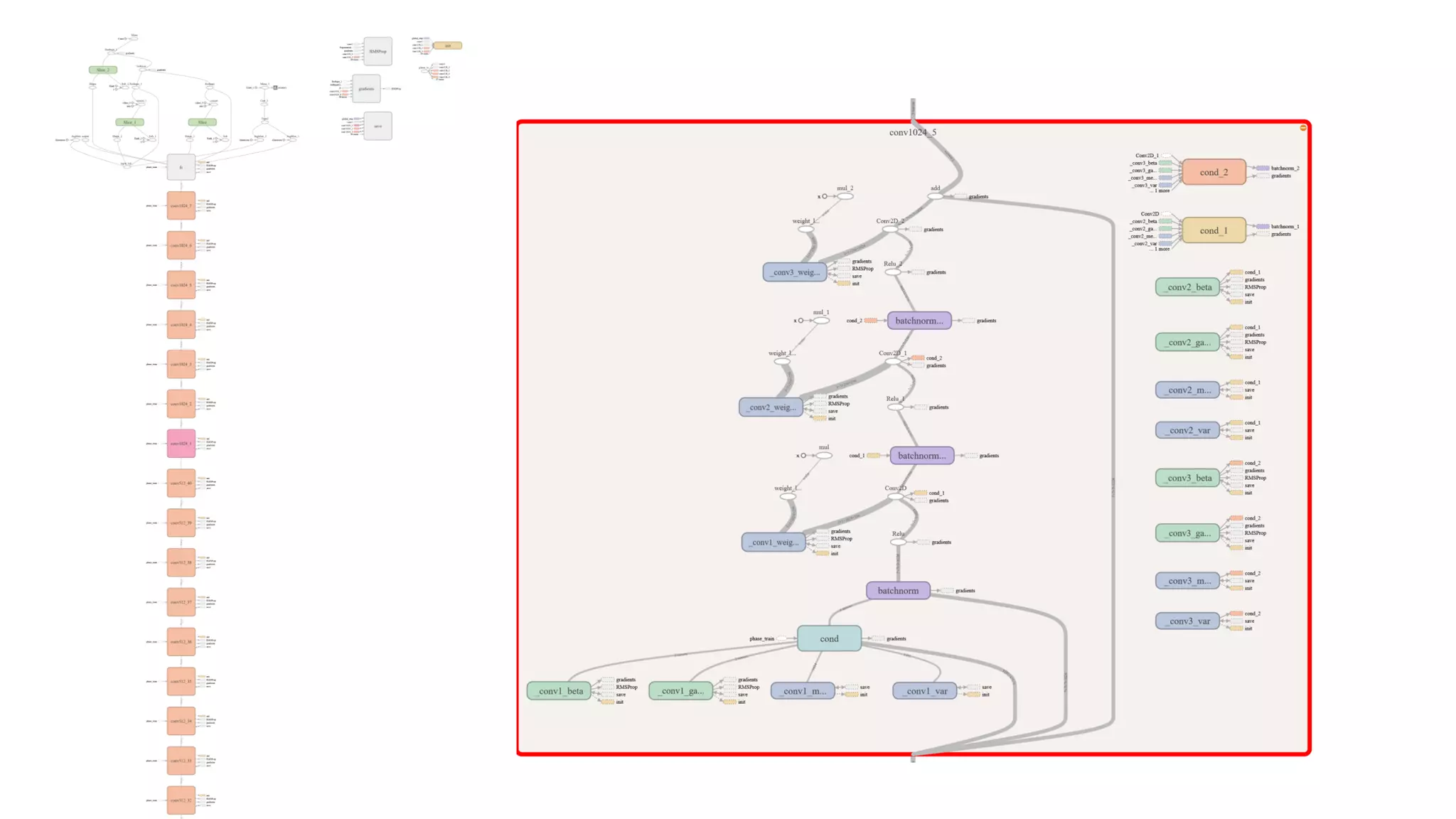

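![Slide: a residual block in TensorFlow](https://image.slidesharecdn.com/dsromaniukshelpukdeep-learning-tensorflow-and-fashion-170504064659/75/Sergey-Shelpuk-Olha-Romaniuk-Deep-learning-Tensorflow-and-Fashion-how-to-stay-in-trend-AI-BigDataDay-2017-12-2048.jpg)

```python
import tensorflow as tf  # TensorFlow 1.x

# conv_layer, conv_layer_resnet_im and max_pool_layer are project helpers
# that are not shown on the slides.
def residual_block_im(inpt, output_depth, down_sample, phase, projection=False, name=''):
    assert len(inpt.get_shape().as_list()) == 4, inpt.get_shape().as_list()  # NHWC
    input_depth = inpt.get_shape().as_list()[3]

    if down_sample:
        # Halve the spatial size before the residual branch
        inpt = max_pool_layer(inpt, 2, 2, 'down_sample')
        assert len(inpt.get_shape().as_list()) == 4, inpt.get_shape().as_list()

    # Residual branch: two 3x3, stride-1 convolutions
    conv1 = conv_layer_resnet_im(inpt, [3, 3, input_depth, output_depth], 1, phase,
                                 name=name + '_conv1')
    conv2 = conv_layer_resnet_im(conv1, [3, 3, output_depth, output_depth], 1, phase,
                                 name=name + '_conv2')

    # Shortcut: must match conv2 in both spatial size and channel count
    if input_depth != output_depth:
        if projection:
            # 1x1 projection with stride 1: any down-sampling was already applied
            # by the max pool above, so a stride of 2 here would no longer match
            # conv2's spatial size and the addition below would fail.
            input_layer = conv_layer(inpt, [1, 1, input_depth, output_depth], 1, phase,
                                     name=name + '_conv_projection')
        else:
            # Parameter-free shortcut: zero-pad the channel axis
            input_layer = tf.pad(inpt, [[0, 0], [0, 0], [0, 0],
                                        [0, output_depth - input_depth]])
    else:
        input_layer = inpt  # identity shortcut

    return conv2 + input_layer
```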
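The addition `conv2 + input_layer` only works if the shortcut has exactly the same shape as the residual branch. The pure-Python sketch below tracks `(N, H, W, C)` shapes through the same branching logic as `residual_block_im`; `same_out` and `residual_block_shapes` are hypothetical names, and it assumes every helper layer uses 'SAME' padding (the helpers are not shown on the slides). It shows that a 1x1 projection applied after the stride-2 max pool has to use stride 1: with stride 2, the shortcut ends up at half the branch's spatial size.

```python
def same_out(size, stride):
    """Spatial size after a 'SAME'-padded conv or pool: ceil(size / stride)."""
    return -(-size // stride)

def residual_block_shapes(in_shape, output_depth, down_sample, projection, proj_stride=1):
    """Return the (N, H, W, C) shapes of the residual branch and of the shortcut.

    Assumes 'SAME' padding for all helper layers (not shown on the slides).
    """
    n, h, w, c = in_shape
    if down_sample:
        h, w = same_out(h, 2), same_out(w, 2)  # 2x2 max pool, stride 2
    branch = (n, h, w, output_depth)           # two 3x3 convs, stride 1
    if c != output_depth and projection:
        # 1x1 projection conv with the given stride
        shortcut = (n, same_out(h, proj_stride), same_out(w, proj_stride), output_depth)
    elif c != output_depth:
        shortcut = (n, h, w, output_depth)     # zero-padded channels
    else:
        shortcut = (n, h, w, c)                # identity
    return branch, shortcut

ok_branch, ok_shortcut = residual_block_shapes((8, 56, 56, 128), 256, True, True)
bad_branch, bad_shortcut = residual_block_shapes((8, 56, 56, 128), 256, True, True, proj_stride=2)
print(ok_branch, ok_shortcut)    # (8, 28, 28, 256) (8, 28, 28, 256)
print(bad_branch, bad_shortcut)  # (8, 28, 28, 256) (8, 14, 14, 256)
```

Zero-padding the channels avoids the issue entirely because it never changes the spatial size, at the cost of adding no learned parameters to the shortcut.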

![Slide: building the ResNet-200 model from residual blocks](https://image.slidesharecdn.com/dsromaniukshelpukdeep-learning-tensorflow-and-fashion-170504064659/75/Sergey-Shelpuk-Olha-Romaniuk-Deep-learning-Tensorflow-and-Fashion-how-to-stay-in-trend-AI-BigDataDay-2017-13-2048.jpg)

```python
import tensorflow as tf  # TensorFlow 1.x

# conv_layer, max_pool_layer and residual_block_deep_im are project helpers
# that are not shown on the slides.
def resnet200_im(inpt, num_classes, phase):
    layers = []

    # Stem: 7x7 convolution with stride 2, then 3x3 max pool with stride 2
    with tf.variable_scope('conv1'):
        conv1 = conv_layer(inpt, [7, 7, 3, 64], 2, phase)
        conv1_pooled = max_pool_layer(conv1, filter=3, stride=2)
        layers.append(conv1_pooled)

    # Stage with 128 filters: 7 blocks, no down-sampling
    num_blocks = 7
    num_filters = 128
    for i in range(num_blocks):
        with tf.variable_scope('conv%d_%d' % (num_filters, i + 1)):
            conv = residual_block_deep_im(layers[-1], num_filters, False, phase)
            layers.append(conv)
    assert conv.get_shape().as_list()[1:] == [56, 56, num_filters]

    # Stage with 256 filters: 12 blocks; only the first one down-samples
    num_blocks = 12
    num_filters = 256
    for i in range(num_blocks):
        down_sample = (i == 0)
        with tf.variable_scope('conv%d_%d' % (num_filters, i + 1)):
            conv = residual_block_deep_im(layers[-1], num_filters, down_sample, phase)
            layers.append(conv)
    assert conv.get_shape().as_list()[1:] == [28, 28, num_filters]

    ...  # remaining stages elided on the slide
```
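The shape assertions imply a particular input size and padding scheme. Assuming a 224x224 input (the resolution is not stated on the slides), a short calculation shows that the asserted 56x56 and 28x28 feature maps are consistent with 'SAME' padding in the stem but not with 'VALID' padding. The helpers `same_out` and `valid_out` are illustrative, and the sketch also assumes the first 256-filter block halves the size with a stride-2 pool, as `residual_block_im` does (`residual_block_deep_im` itself is not shown).

```python
def same_out(size, stride):
    """Output length of a 'SAME'-padded conv/pool layer: ceil(size / stride)."""
    return -(-size // stride)

def valid_out(size, kernel, stride):
    """Output length of a 'VALID'-padded (unpadded) conv/pool layer."""
    return (size - kernel) // stride + 1

size = 224  # assumed input resolution; not stated on the slides

# Stem with 'SAME' padding: 7x7/2 conv (224 -> 112), then 3x3/2 max pool (112 -> 56)
stem_same = same_out(same_out(size, 2), 2)
# The same stem with 'VALID' padding, for comparison: 224 -> 109 -> 54
stem_valid = valid_out(valid_out(size, 7, 2), 3, 2)
# First block of the 256-filter stage halves the size again: 56 -> 28
stage256_same = same_out(stem_same, 2)

print(stem_same, stem_valid, stage256_same)  # 56 54 28
```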