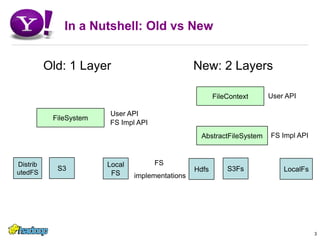

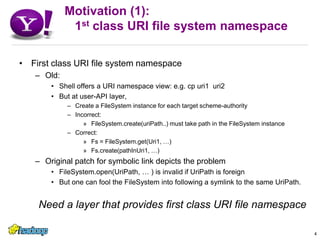

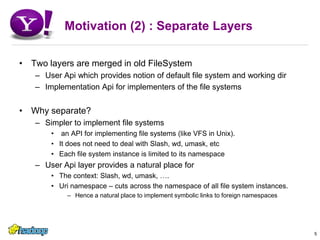

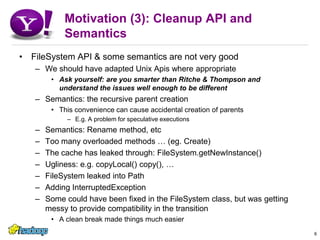

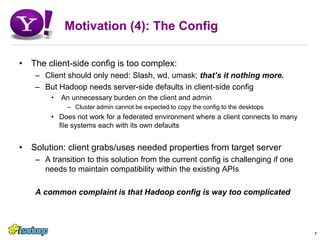

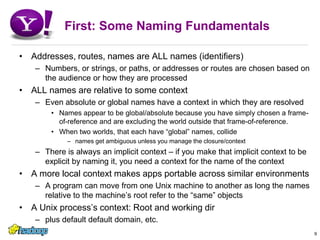

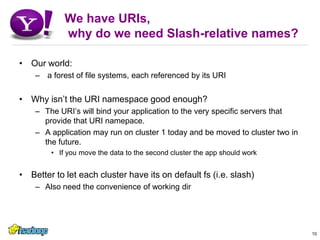

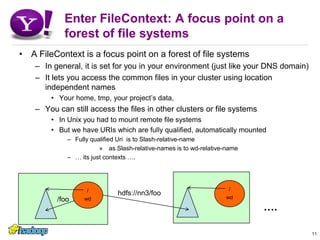

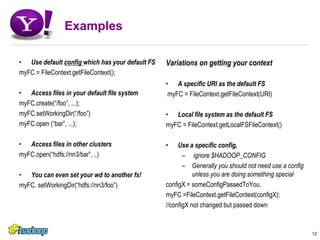

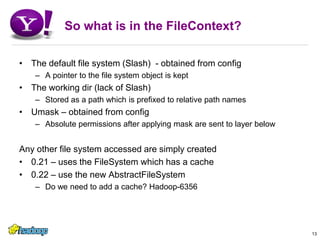

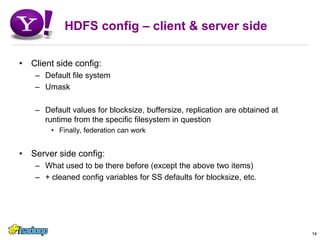

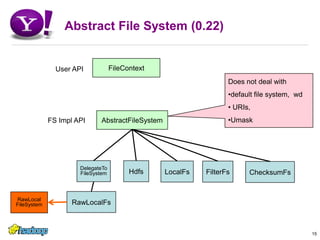

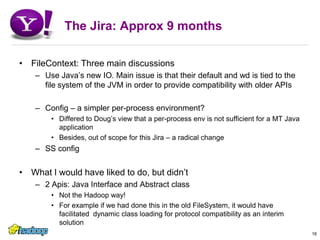

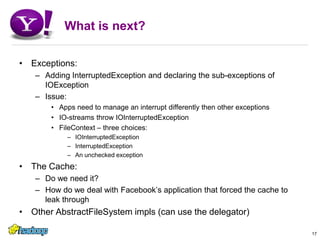

The document summarizes the motivation and design of the new file system API in Hadoop, built around FileContext and AbstractFileSystem. The design separates the user-facing API layer (FileContext) from the implementation layer (AbstractFileSystem), introduces FileContext as a single focus point for accessing multiple file systems, and cleans up several problems in the old FileSystem API. The new APIs provide a first-class URI namespace, make new file systems simpler to implement, and allow client configuration to be obtained from the target server.
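The split between a user API layer and an implementation layer can be illustrated with a toy model. The sketch below is written in Python for brevity and is not Hadoop's API: `FileContextSketch`, `AbstractFileSystemSketch`, and `InMemoryFileSystem` are hypothetical names, and the in-memory dictionary stands in for a real file system such as HDFS. Loosely following FileContext's design, it assumes that a fully qualified URI selects an implementation by its scheme and authority, while any other path resolves against a default file system and a working directory held by the context.

```python
from __future__ import annotations

import posixpath
from abc import ABC, abstractmethod
from urllib.parse import urlparse


class AbstractFileSystemSketch(ABC):
    """Hypothetical implementation layer: one subclass per file system."""

    @abstractmethod
    def create(self, path: str, data: bytes) -> None: ...

    @abstractmethod
    def open(self, path: str) -> bytes: ...


class InMemoryFileSystem(AbstractFileSystemSketch):
    """Toy implementation standing in for a real file system (assumption)."""

    def __init__(self, scheme: str, authority: str) -> None:
        self.scheme = scheme
        self.authority = authority
        self._files: dict[str, bytes] = {}

    def create(self, path: str, data: bytes) -> None:
        self._files[path] = data

    def open(self, path: str) -> bytes:
        return self._files[path]


class FileContextSketch:
    """Hypothetical user API layer: holds a default file system and a
    working directory, and dispatches each call to an implementation."""

    def __init__(self, default_uri: str, working_dir: str = "/") -> None:
        self._instances: dict[tuple[str, str], AbstractFileSystemSketch] = {}
        parsed = urlparse(default_uri)
        self.default_fs = self._get_fs(parsed.scheme, parsed.netloc)
        self.working_dir = working_dir

    def _get_fs(self, scheme: str, authority: str) -> AbstractFileSystemSketch:
        # One cached implementation instance per (scheme, authority) pair.
        key = (scheme, authority)
        if key not in self._instances:
            self._instances[key] = InMemoryFileSystem(scheme, authority)
        return self._instances[key]

    def _resolve(self, name: str) -> tuple[AbstractFileSystemSketch, str]:
        parsed = urlparse(name)
        if parsed.scheme:
            # Fully qualified URI: scheme and authority pick the file system.
            return self._get_fs(parsed.scheme, parsed.netloc), parsed.path
        # Otherwise use the default file system; relative paths are joined
        # onto the working directory, absolute paths are kept as-is.
        return self.default_fs, posixpath.join(self.working_dir, parsed.path)

    def create(self, name: str, data: bytes) -> None:
        fs, path = self._resolve(name)
        fs.create(path, data)

    def open(self, name: str) -> bytes:
        fs, path = self._resolve(name)
        return fs.open(path)


# Usage: a relative name lands in the default file system's working directory,
# so it can be read back through its fully qualified URI.
fc = FileContextSketch("hdfs://nn1:8020", working_dir="/user/alice")
fc.create("data.txt", b"hello")
print(fc.open("hdfs://nn1:8020/user/alice/data.txt"))  # prints b'hello'
```

User code only ever talks to the context object, so adding a new file system means adding a new implementation class without changing the user-facing calls, which is the separation the summary describes.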