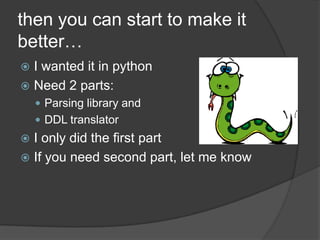

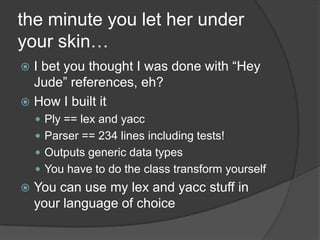

This document discusses building a Hadoop Record Reader in Python. Hadoop has a native data storage format, Hadoop Record (also known as "Jute"), which comes with a Data Definition Language (DDL) and a compiler. The author built a Python library that parses this format into generic Python data types using PLY (a lex and yacc implementation for Python). Planned future work includes building a DDL translator and integrating feedback.

![remember, to only use C++/Java: $ rcc --help; Usage: rcc --language [java|c++] ddl-files](https://image.slidesharecdn.com/hadooprecordreaderinpython-091202150908-phpapp02/85/Hadoop-Record-Reader-In-Python-6-320.jpg)

![and any time you feel the pain… Parsing the binary format is hard. Vector vs struct??? struct = "s{" record *("," record) "}"; vector = "v{" [record *("," record)] "}". LazyString: don't decode if not needed. 99% of my hadoop time was decoding strings I didn't need. Binary on disk -> CSV -> python == wasteful. Hadoop unpacks zip files: name it .mod](https://image.slidesharecdn.com/hadooprecordreaderinpython-091202150908-phpapp02/85/Hadoop-Record-Reader-In-Python-11-320.jpg)
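The struct and vector rules on the slide above, together with the LazyString idea, can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the author's library: it uses a hand-written recursive-descent parser from the standard library instead of PLY, it treats every leaf value as an opaque run of bytes up to the next `,` or `}` (so type prefixes and escaped delimiters are not handled), and the names `LazyString`, `decoded`, and `parse_record` are hypothetical. Structs map to tuples and vectors to lists, which is one possible choice of "generic Python data types".

```python
class LazyString:
    """Hypothetical leaf wrapper: keeps the raw bytes, decodes on first use."""

    __slots__ = ("_raw", "_text")

    def __init__(self, raw):
        self._raw = raw
        self._text = None  # filled in lazily by __str__

    @property
    def decoded(self):
        """True once the bytes have actually been decoded."""
        return self._text is not None

    def __str__(self):
        if self._text is None:
            self._text = self._raw.decode("utf-8")
        return self._text

    def __repr__(self):
        return "LazyString({!r})".format(self._raw)


def _parse_items(data, pos):
    """Parse [record *("," record)] followed by "}", starting at pos."""
    items = []
    if data.startswith(b"}", pos):  # empty body, e.g. "v{}"
        return items, pos + 1
    while True:
        value, pos = _parse(data, pos)
        items.append(value)
        if data.startswith(b",", pos):
            pos += 1
        elif data.startswith(b"}", pos):
            return items, pos + 1
        else:
            raise ValueError("expected ',' or '}}' at offset {}".format(pos))


def _parse(data, pos):
    """Parse one record at pos; return (value, next position)."""
    if data.startswith(b"s{", pos):
        items, pos = _parse_items(data, pos + 2)
        if not items:  # the struct rule requires at least one record
            raise ValueError("empty struct")
        return tuple(items), pos
    if data.startswith(b"v{", pos):
        return _parse_items(data, pos + 2)
    # Leaf: assumed to run until the next delimiter; left undecoded.
    end = pos
    while end < len(data) and data[end] not in b",}":
        end += 1
    return LazyString(data[pos:end]), end


def parse_record(data):
    """Parse a complete record given as bytes."""
    value, pos = _parse(data, 0)
    if pos != len(data):
        raise ValueError("trailing data at offset {}".format(pos))
    return value
```

For example, `parse_record(b"s{1,v{a,b},v{}}")` yields a three-element tuple whose second element is a list of two `LazyString` objects; none of the leaves is decoded until `str()` is called on it, which is the saving the slide describes for strings that are never read.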