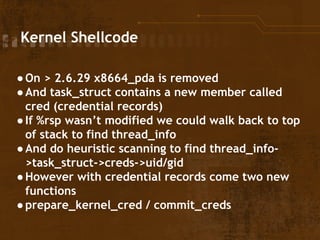

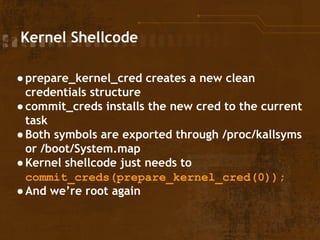

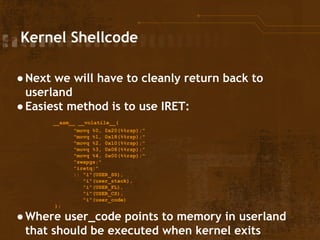

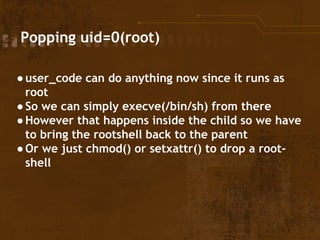

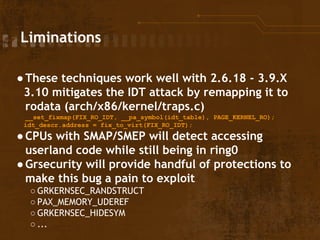

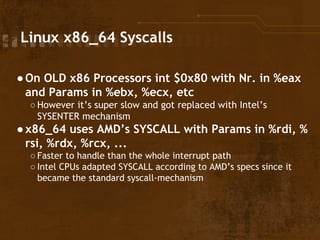

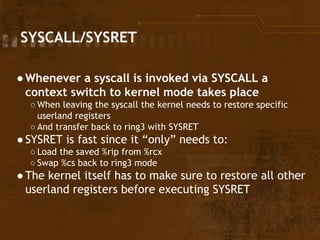

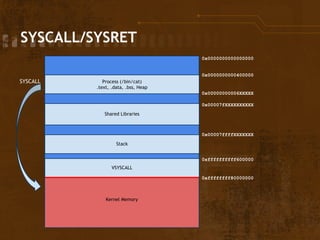

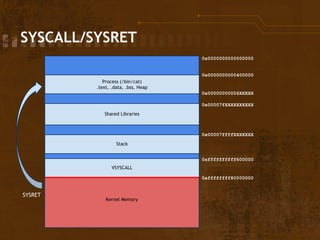

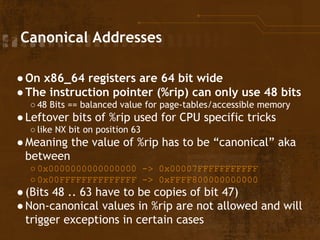

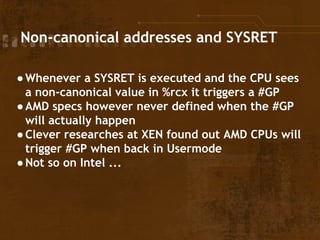

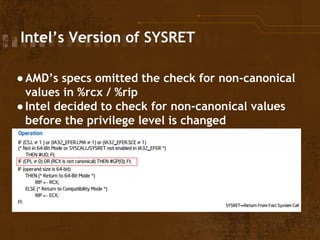

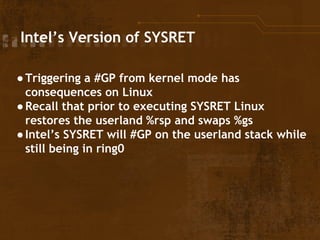

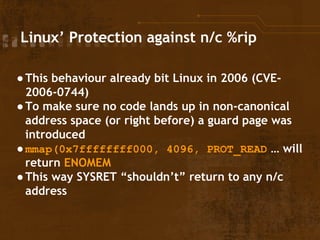

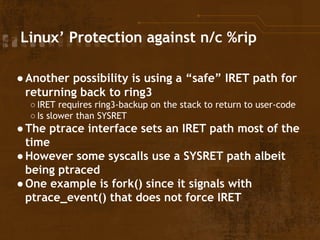

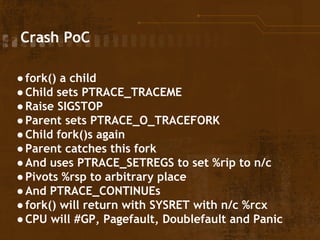

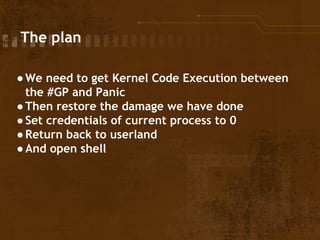

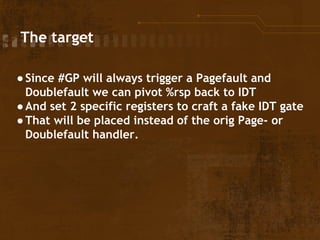

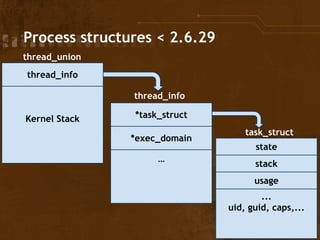

The document discusses exploitation techniques of the Linux kernel through Intel's sysret implementation, highlighting the vulnerabilities associated with syscall and context switches. It explains how non-canonical addresses can trigger general protection faults, leading to potential privilege escalation in the Linux environment. Finally, the document outlines a method to exploit these vulnerabilities to gain root access by manipulating kernel structures and executing arbitrary code in userland.

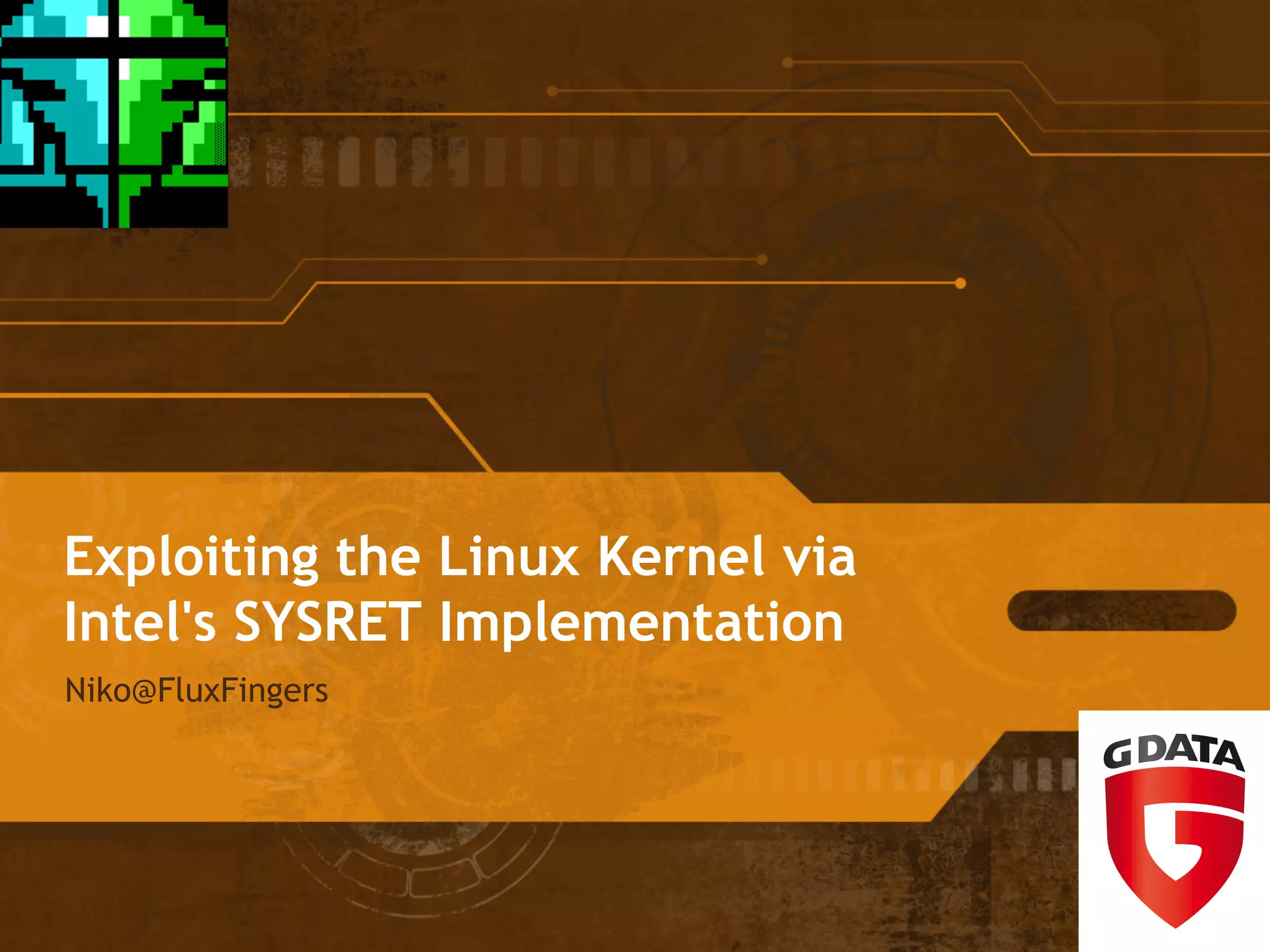

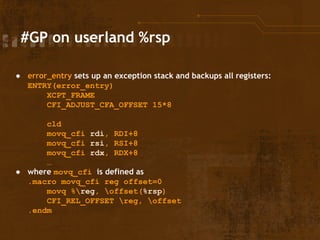

![#GP on userland %rsp

● When setting up the stack frame in error_entry all

(general) registers are saved to x(%rsp) / [rsp+x]

● The kernel restored the userland %rsp and

registers before SYSRET

● => Arbitrary memory write while in ring0

● Classic possibility for privilege escalation](https://image.slidesharecdn.com/rootinglinuxviaintelssysretimplementation-141031100441-conversion-gate02/85/Exploiting-the-Linux-Kernel-via-Intel-s-SYSRET-Implementation-14-320.jpg)

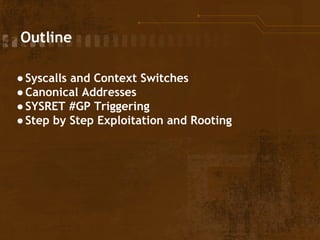

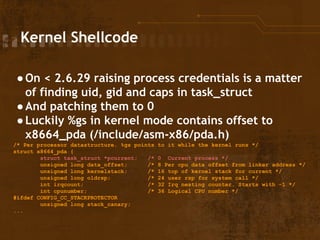

![Kernel Shellcode

● %gs:0 will point to task_struct

● So we can simply:

asm("movq %%gs:0, %0" : "=r"(ptr));

cred = (uint32_t *)ptr;

for (i = 0; i < 1000; i++, cred++) {

if (cred[0] == uid && cred[1] == uid && cred[2] == uid && cred[3] == uid &&

cred[4] == gid && cred[5] == gid && cred[6] == gid && cred[7] == gid) {

cred[0] = cred[1] = cred[2] = cred[3] = 0;

cred[4] = cred[5] = cred[6] = cred[7] = 0;

● Where uid/gid are getuid() and getdid()

● And our process will be root](https://image.slidesharecdn.com/rootinglinuxviaintelssysretimplementation-141031100441-conversion-gate02/85/Exploiting-the-Linux-Kernel-via-Intel-s-SYSRET-Implementation-30-320.jpg)