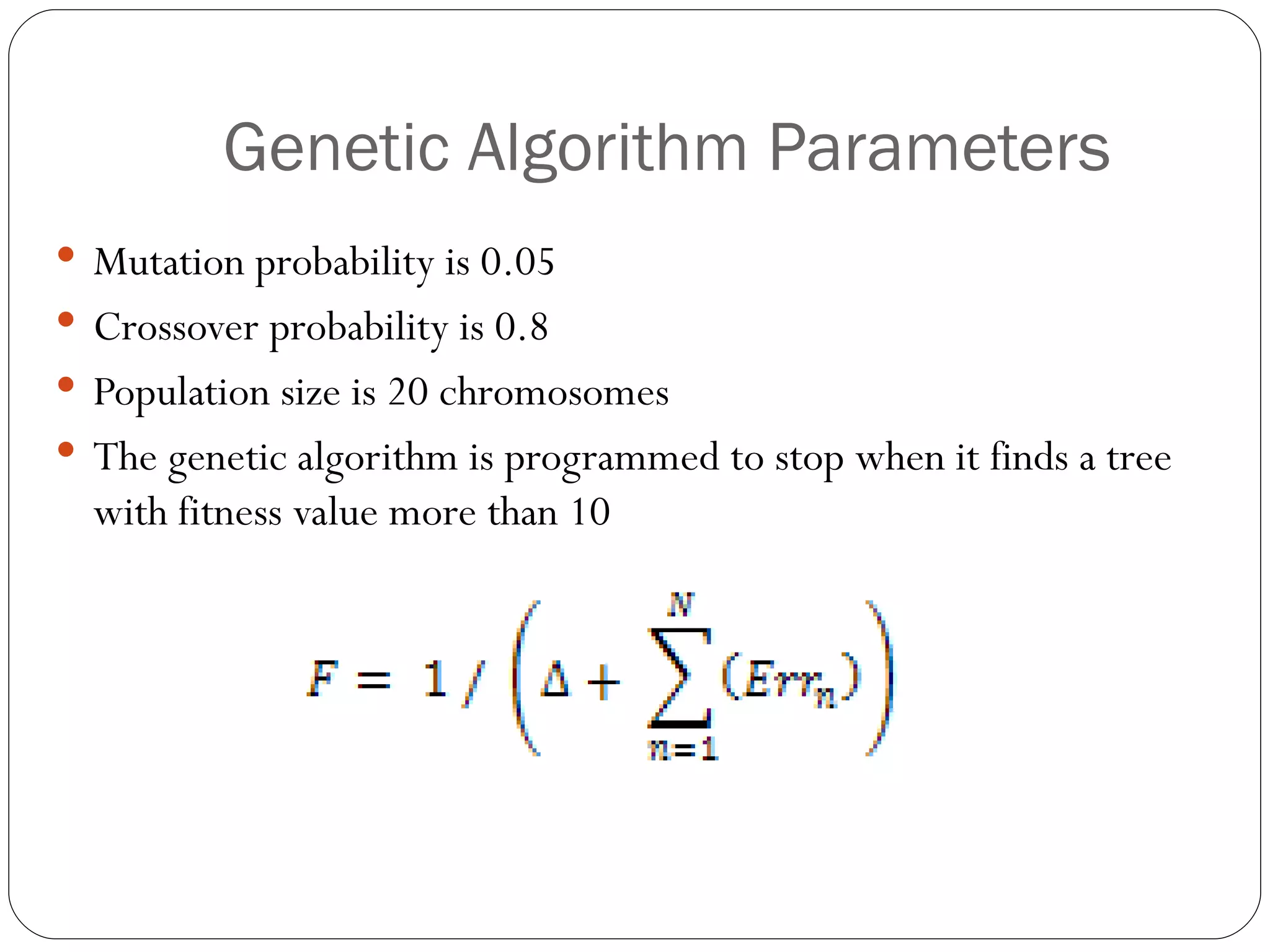

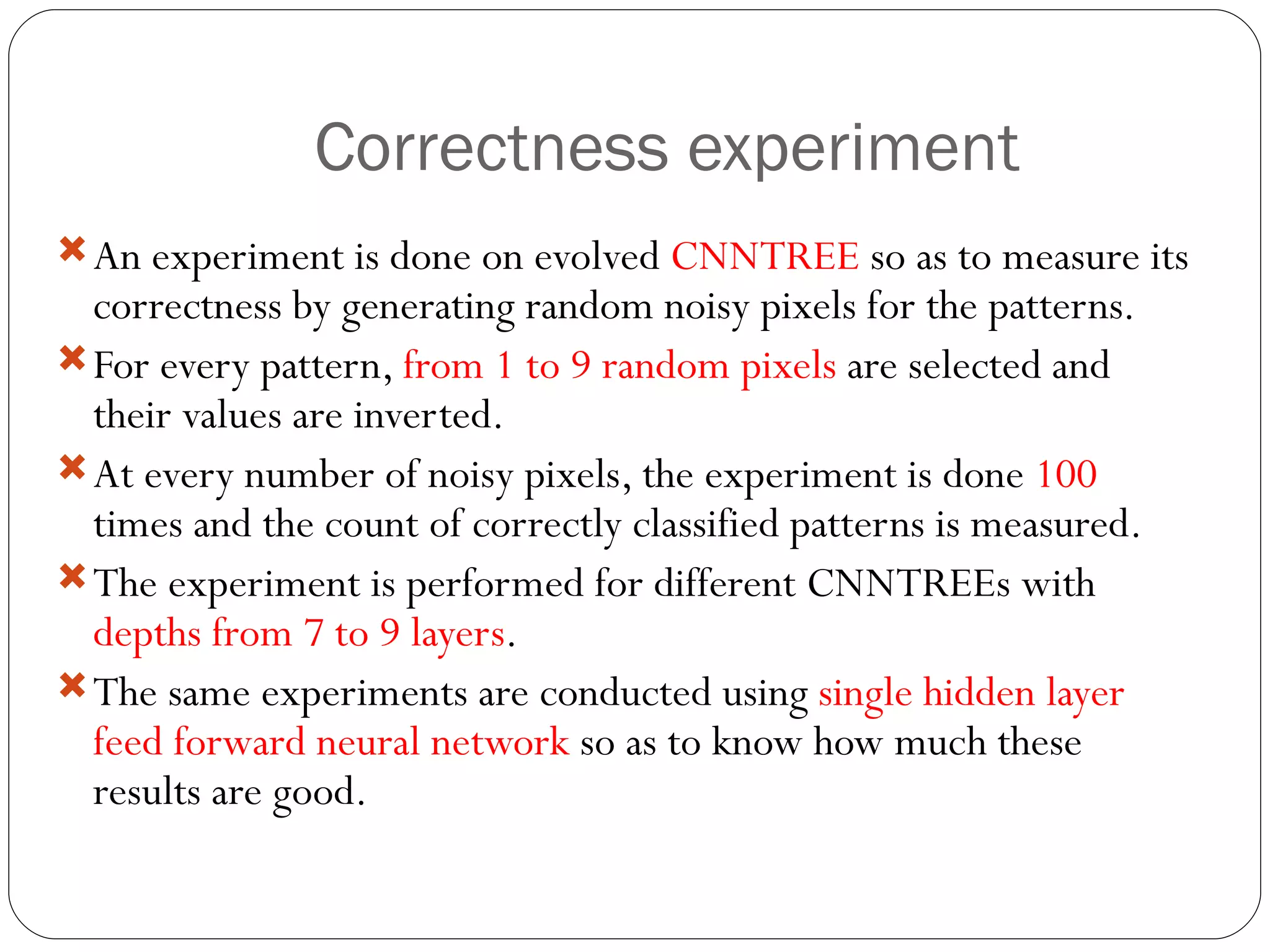

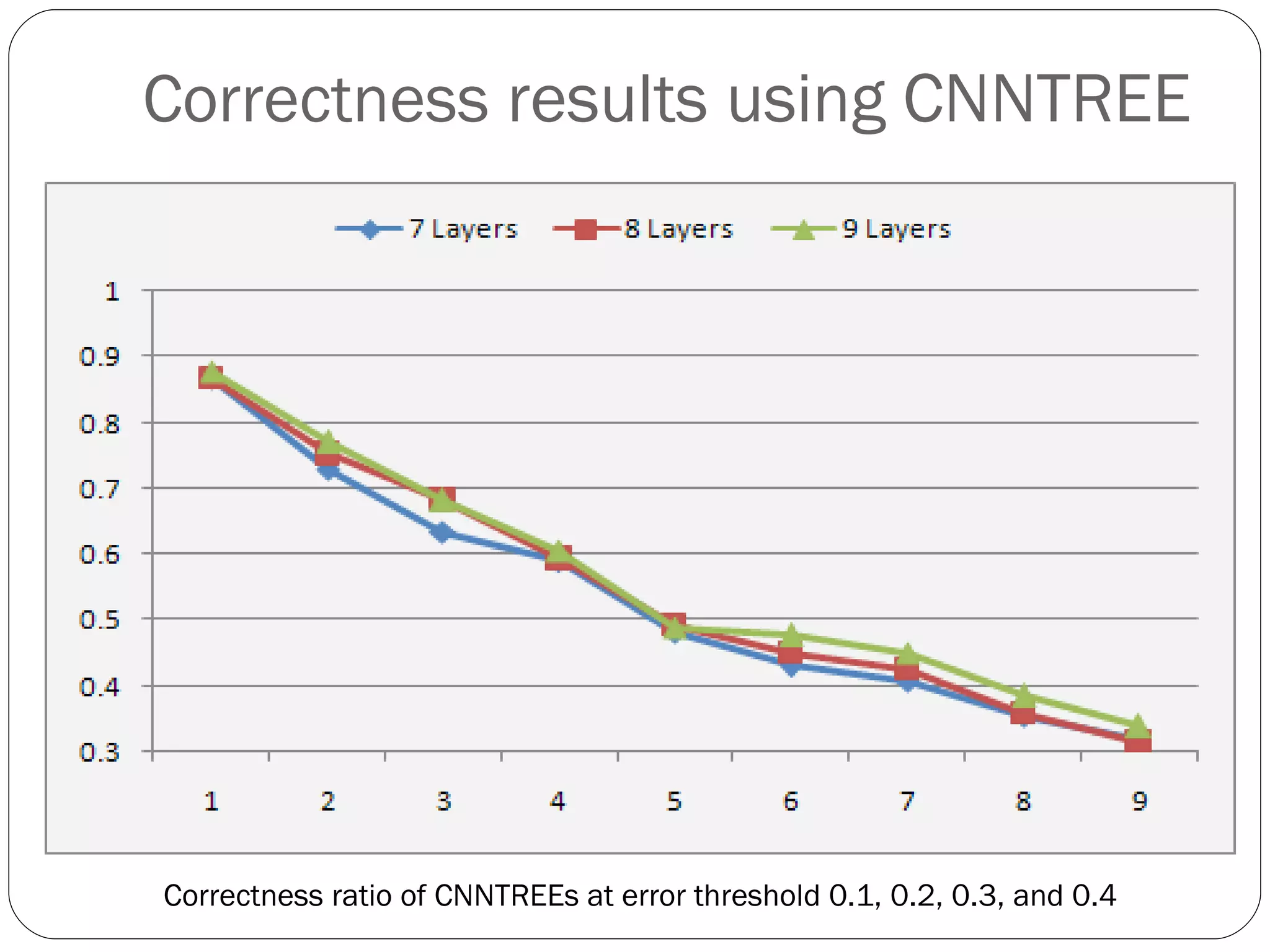

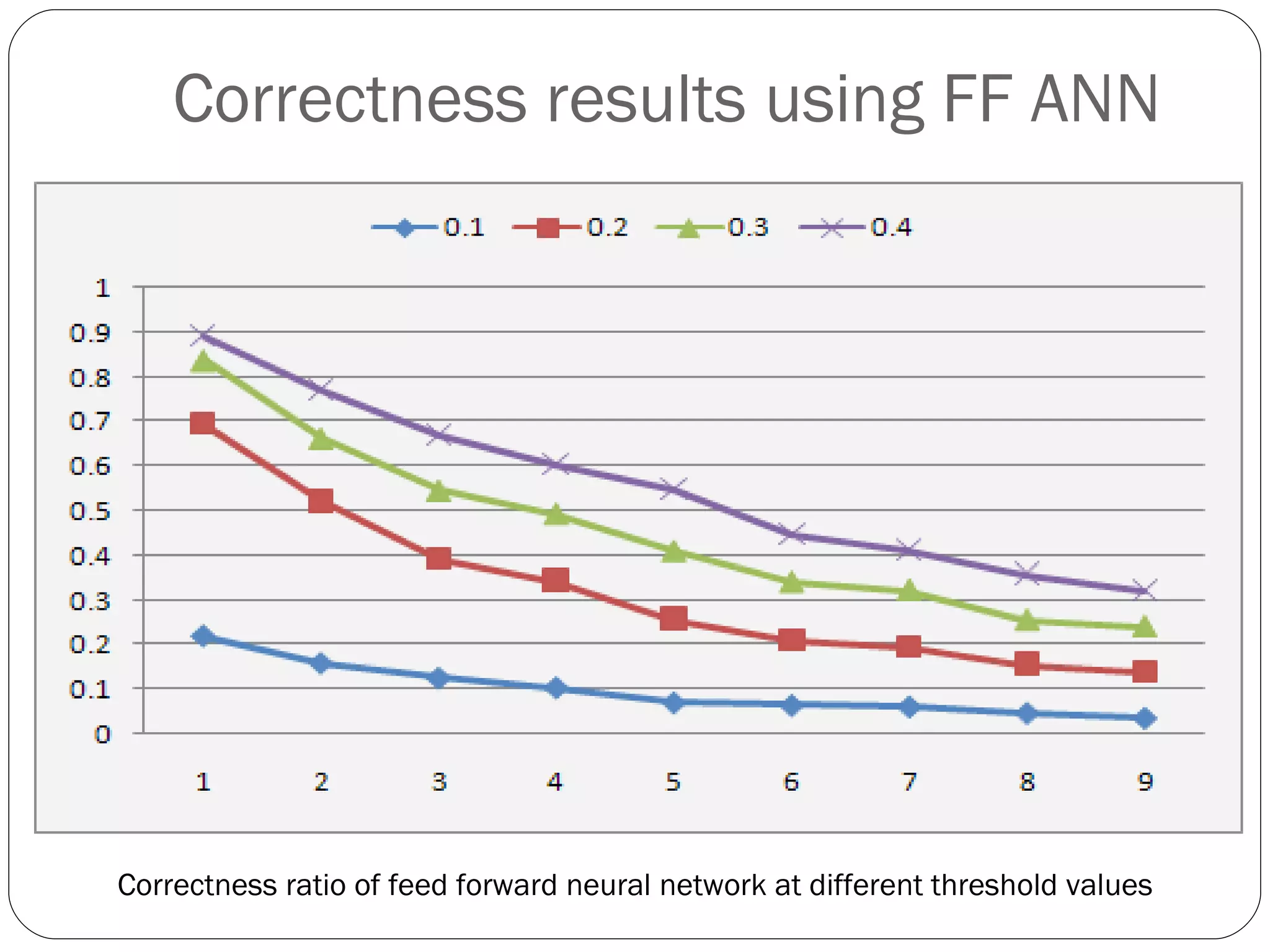

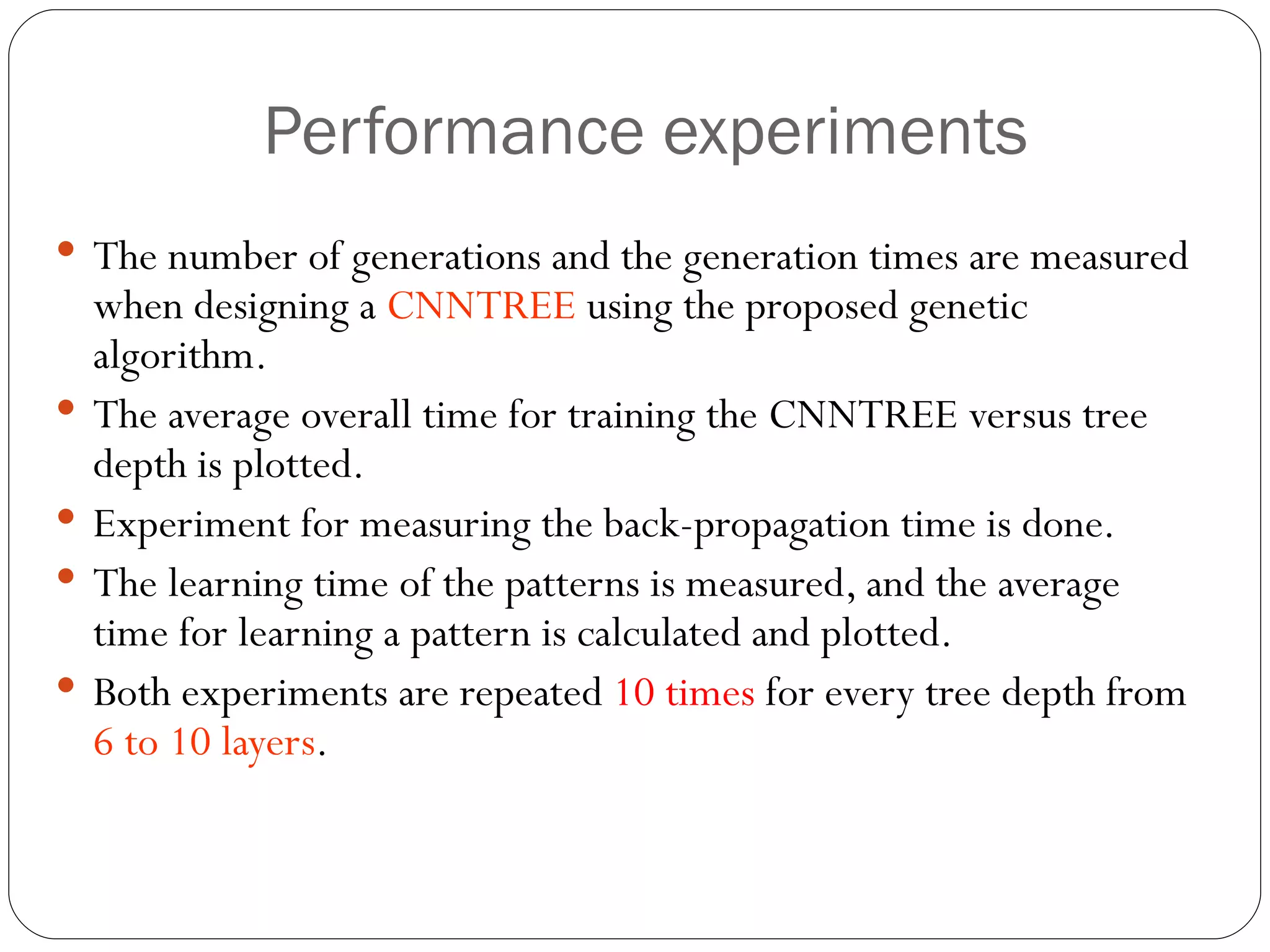

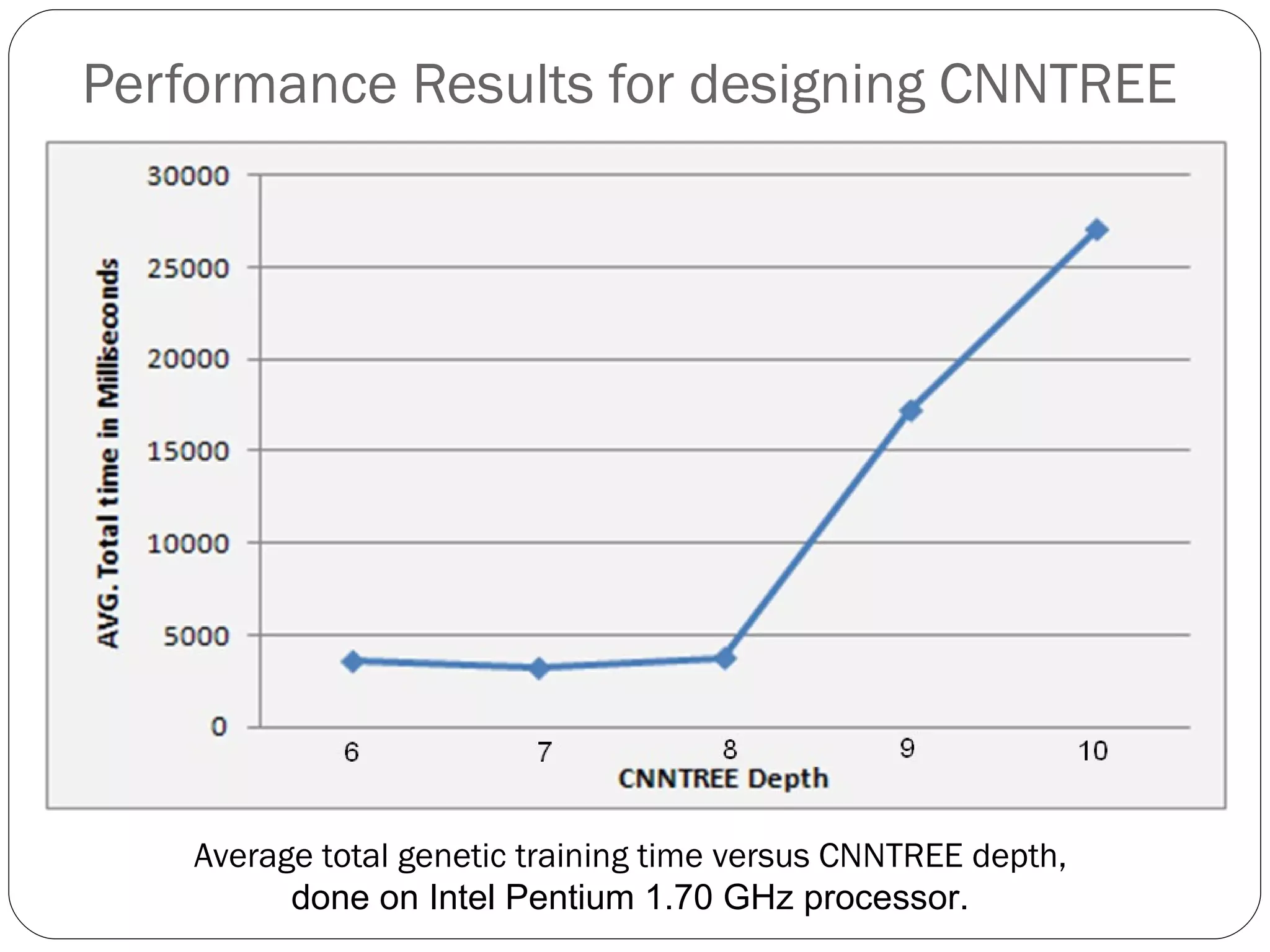

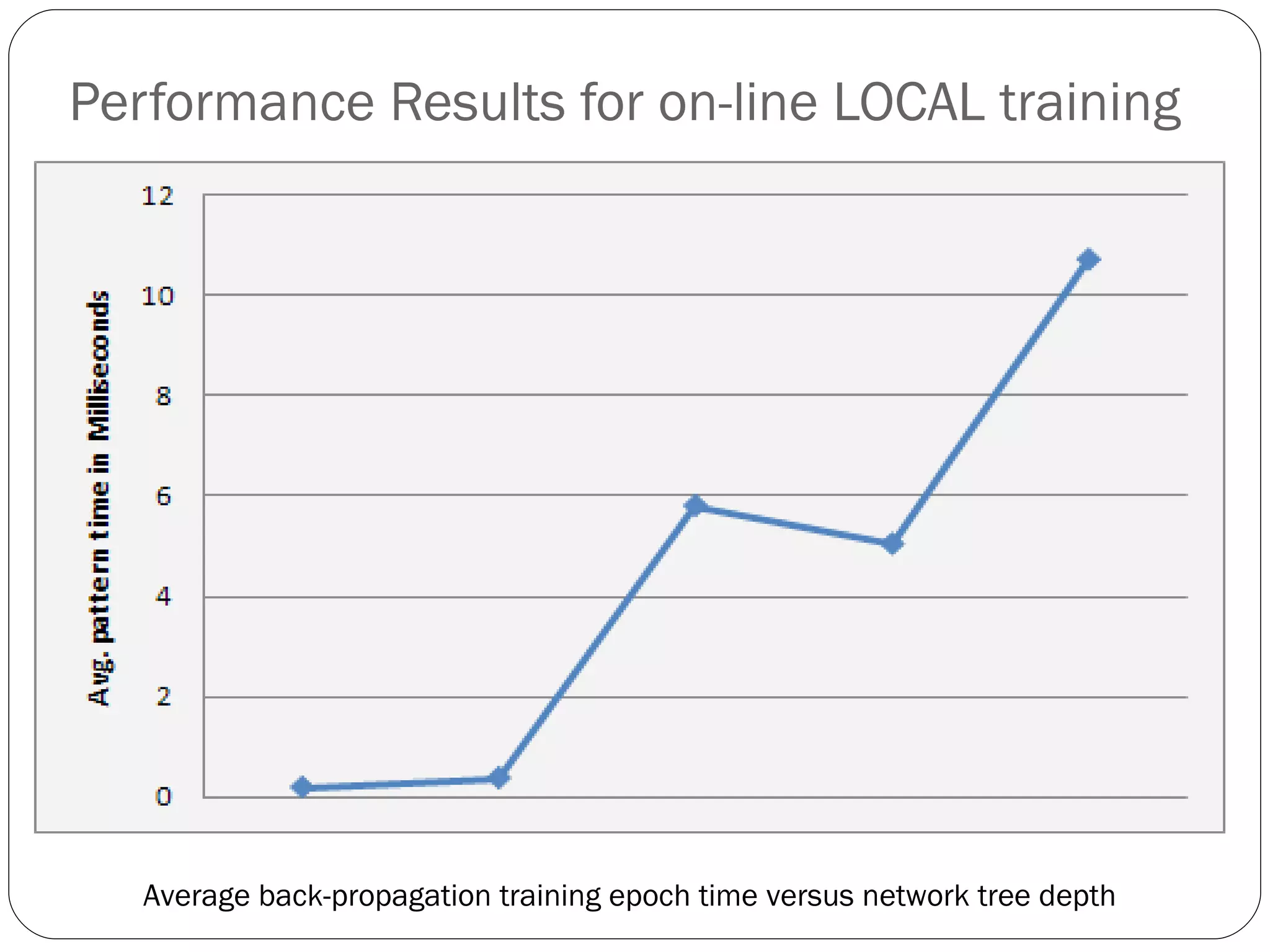

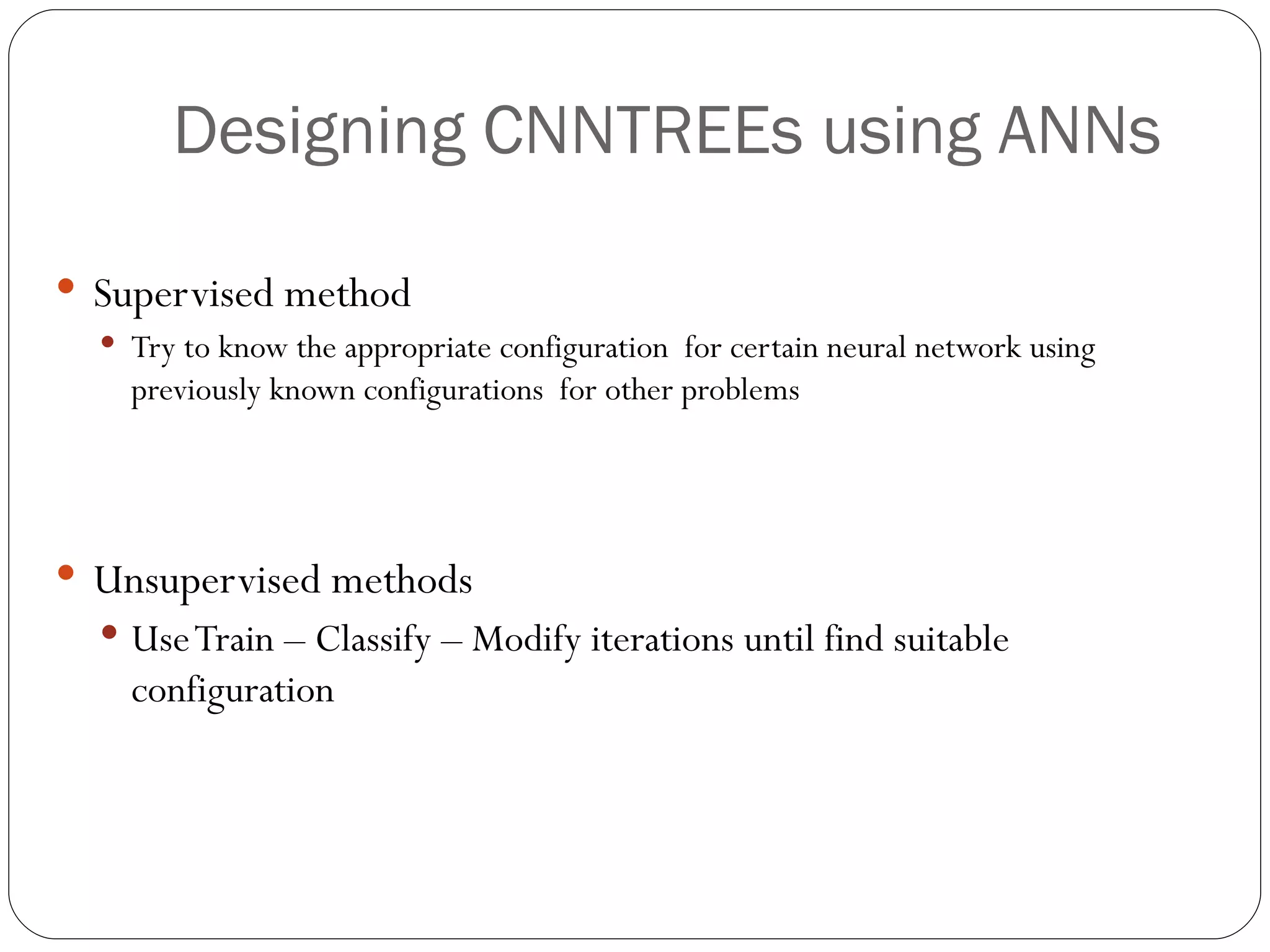

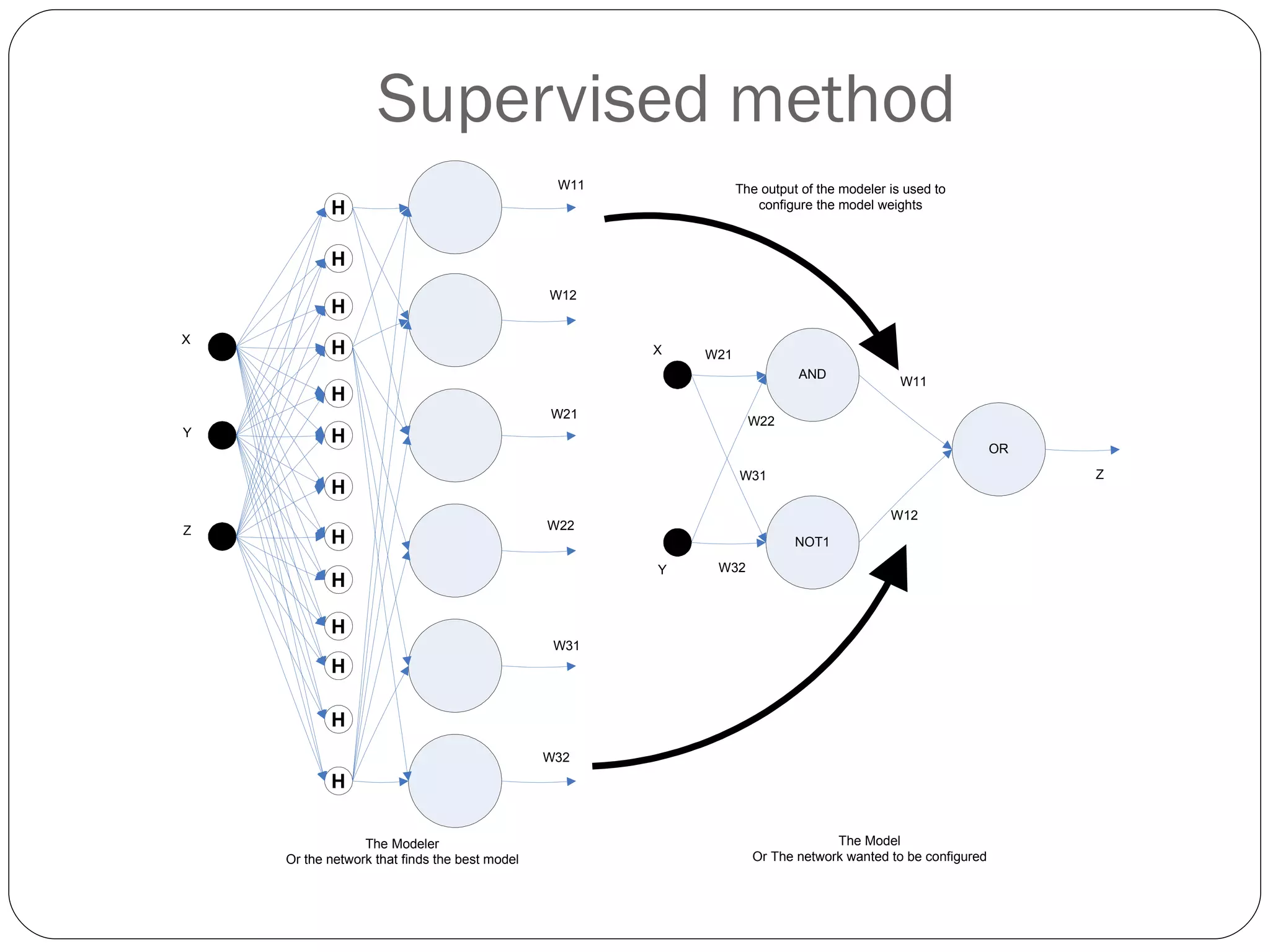

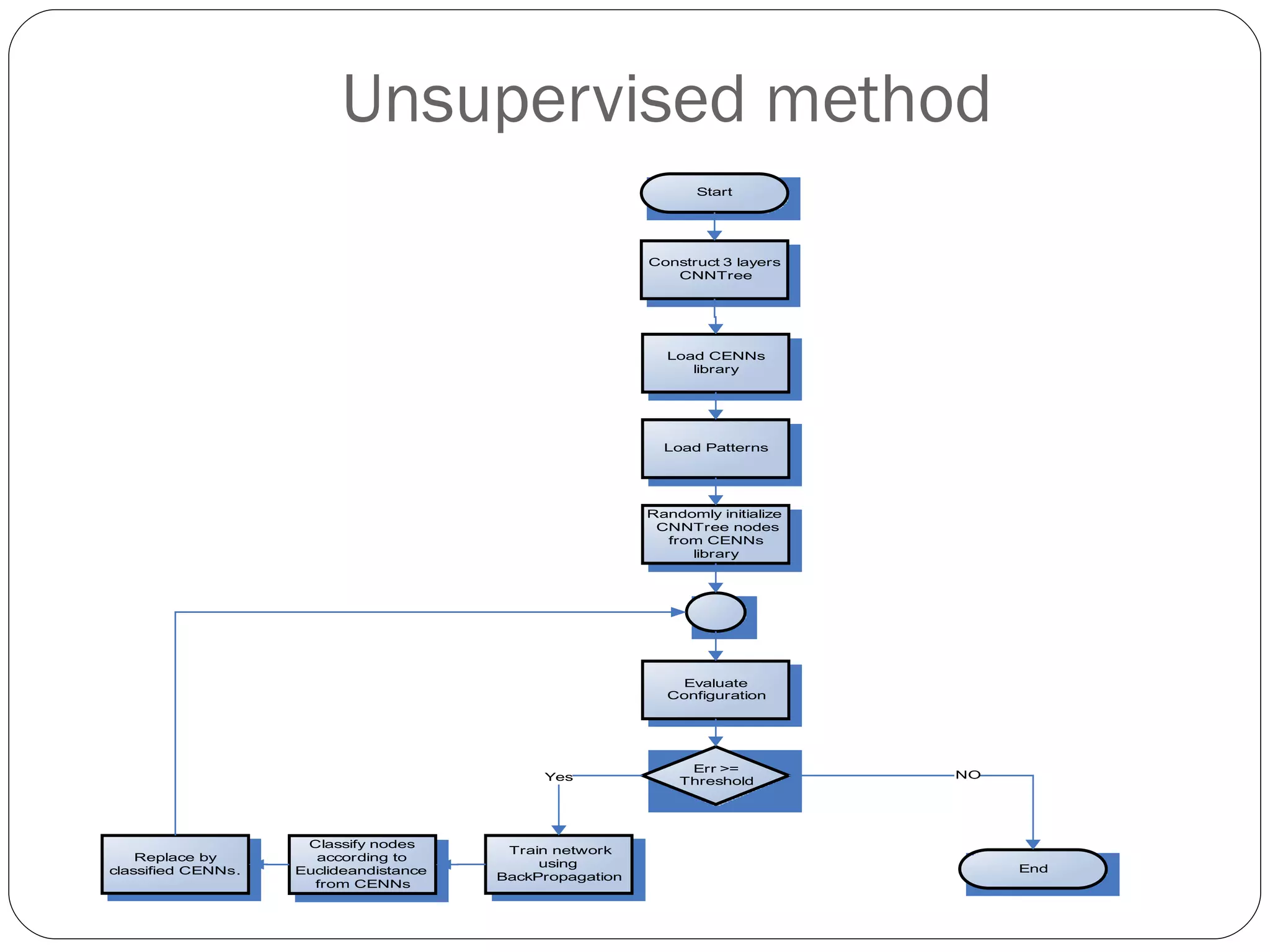

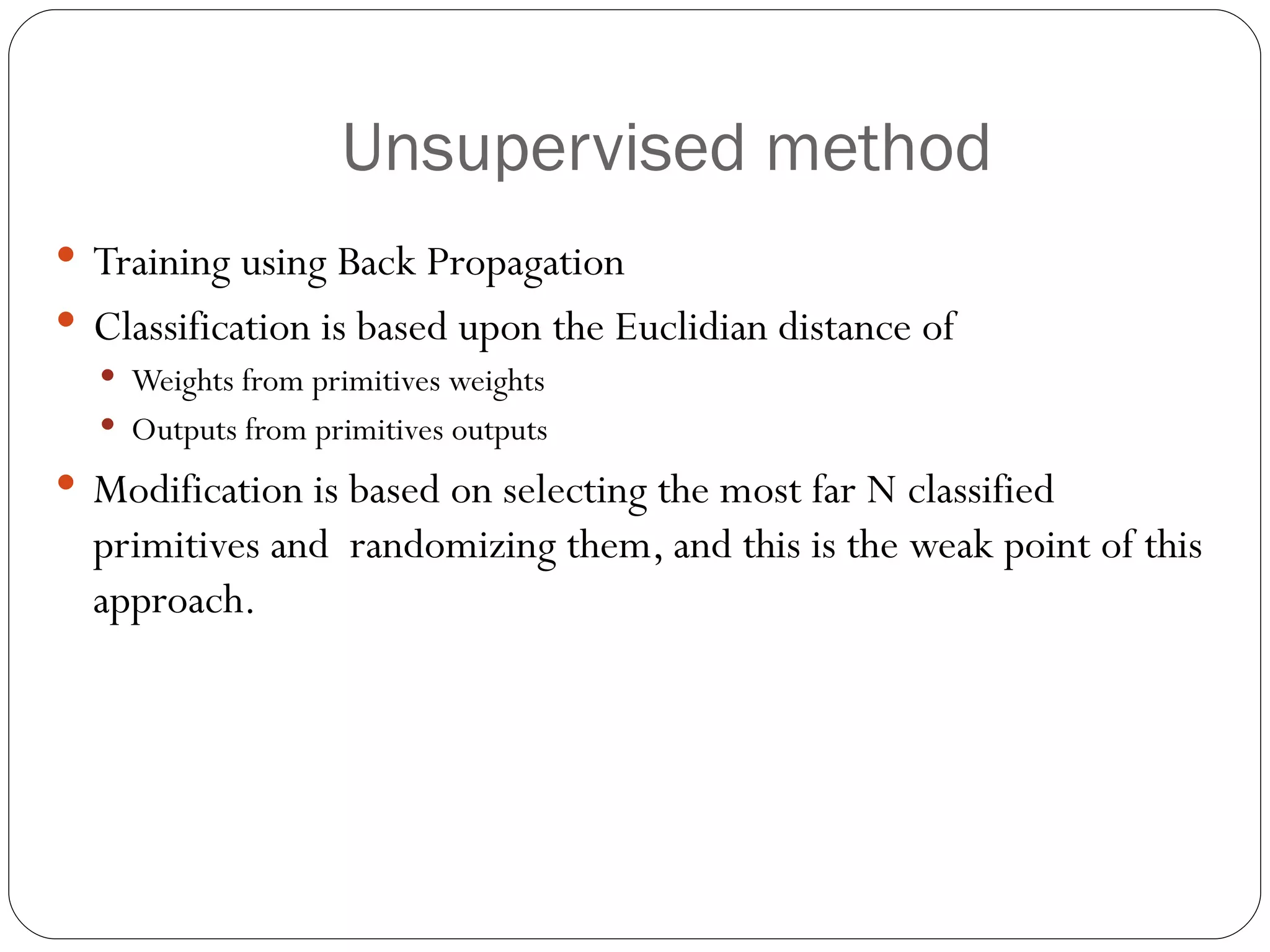

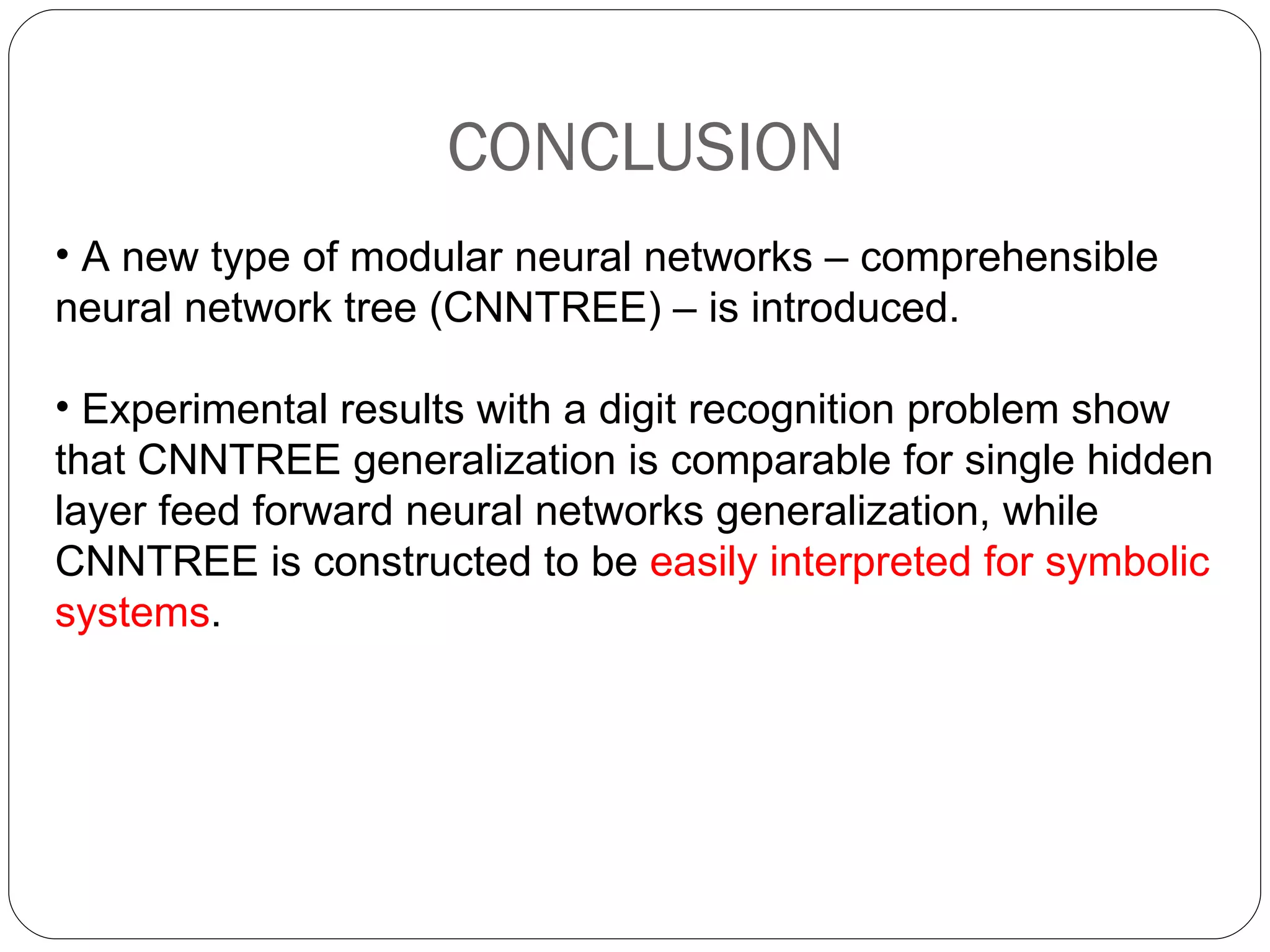

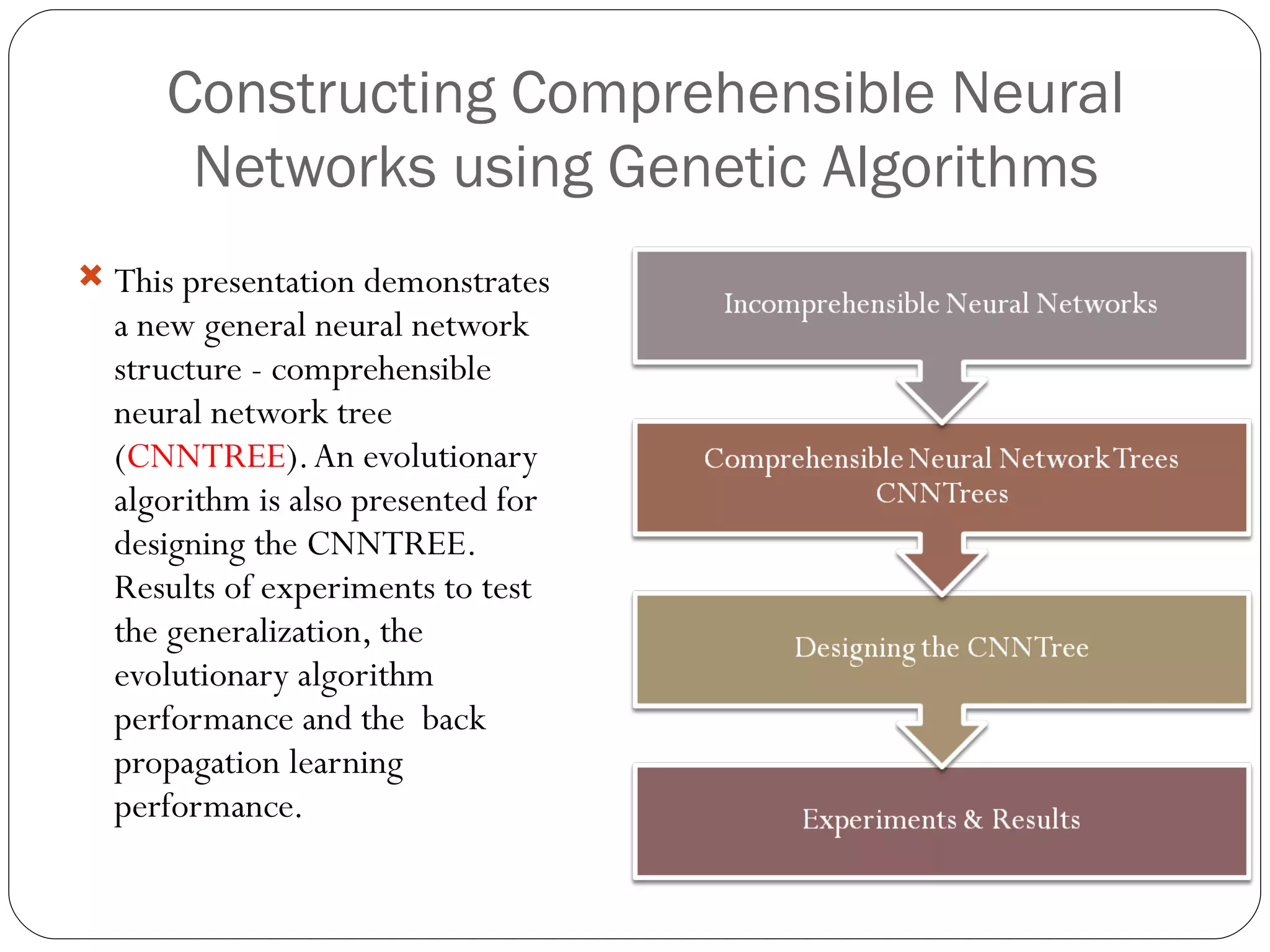

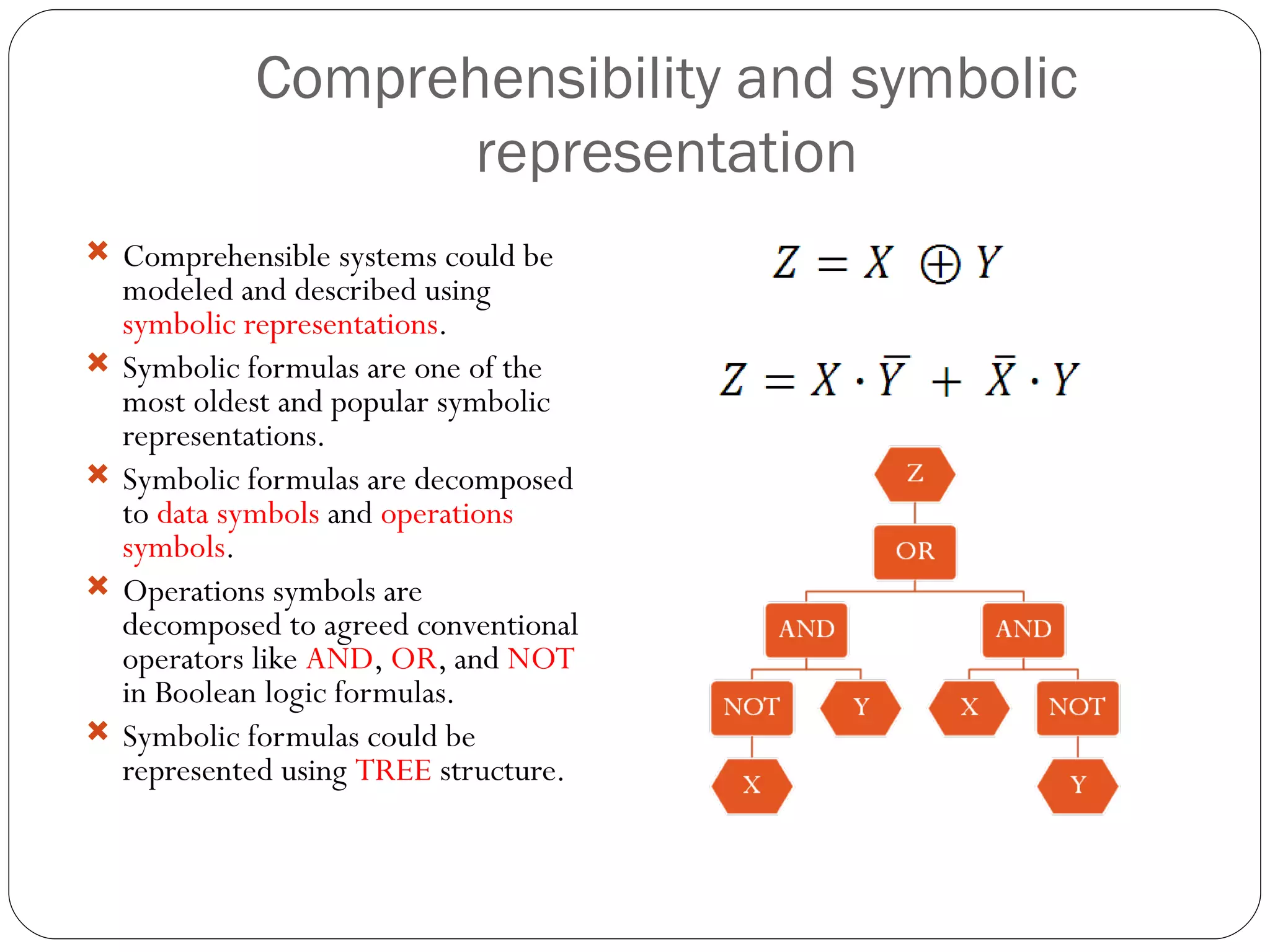

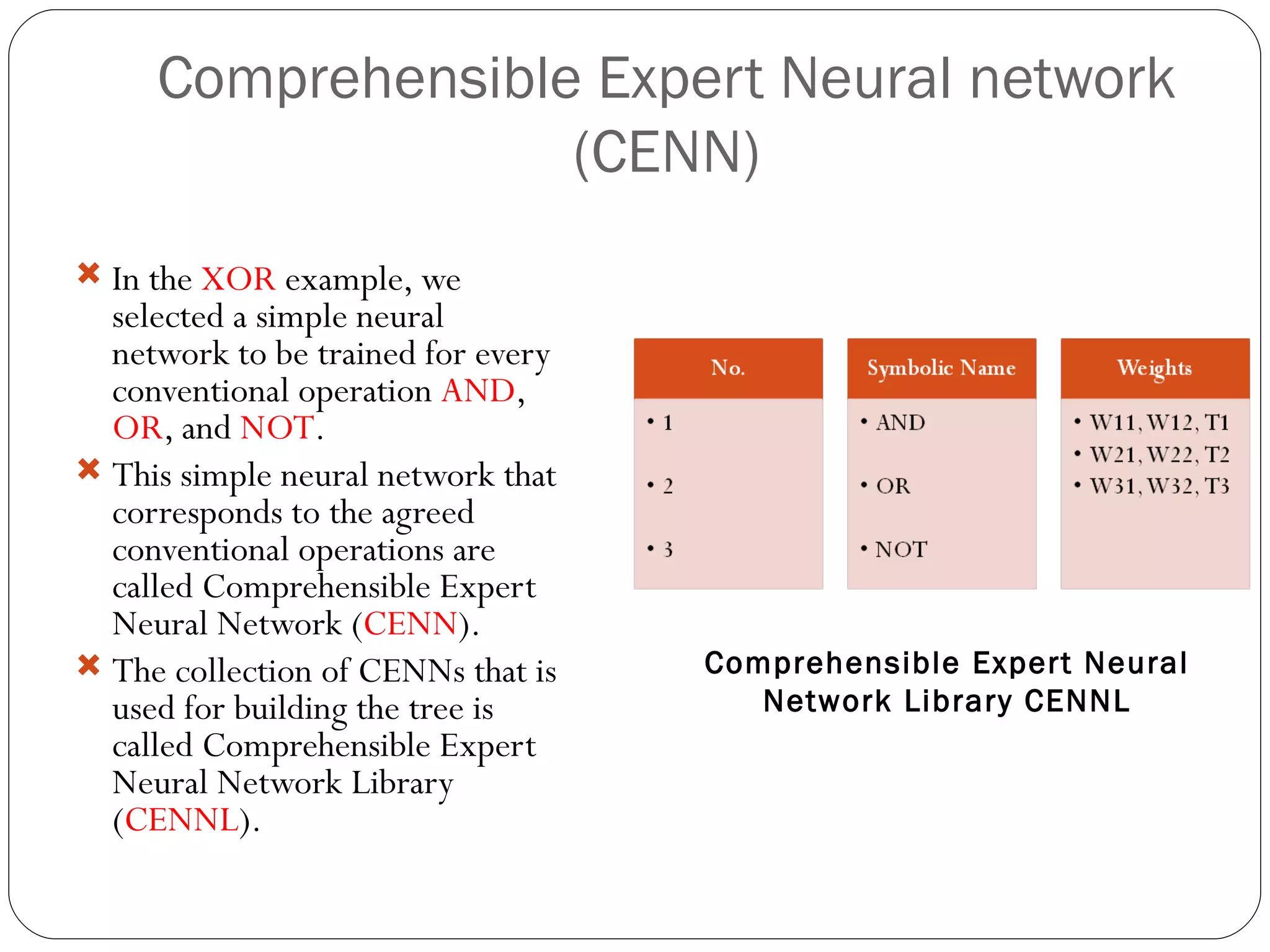

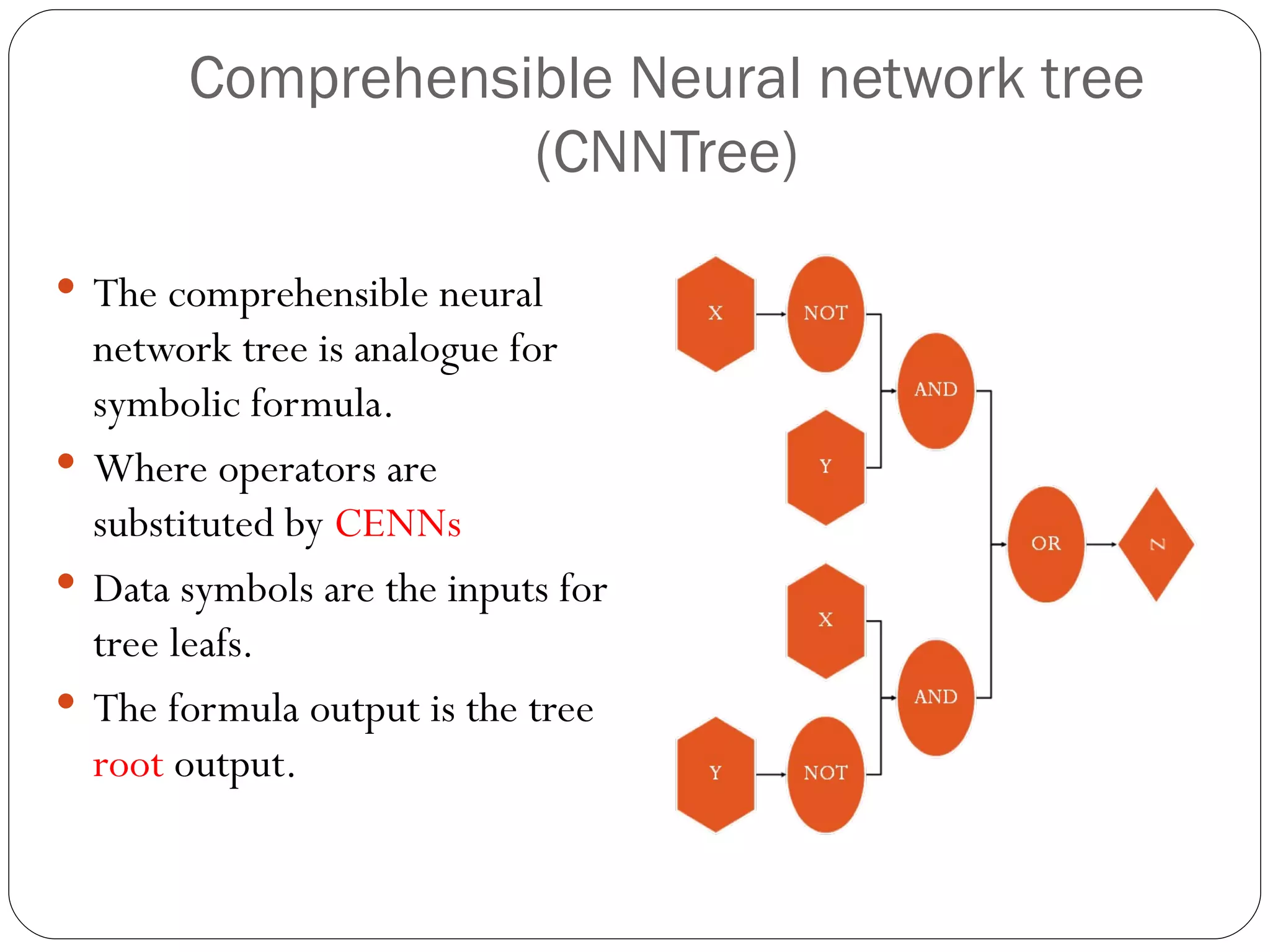

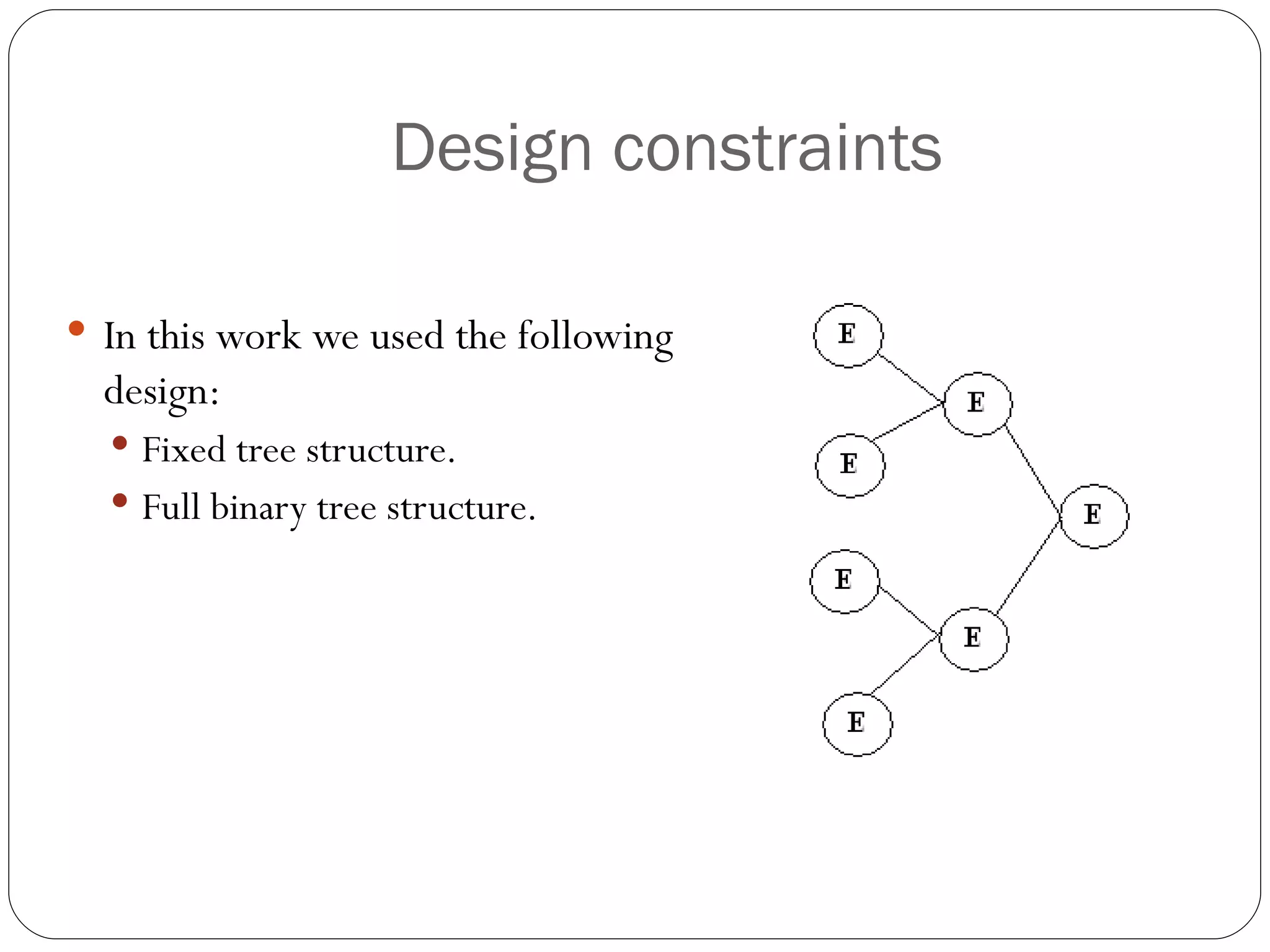

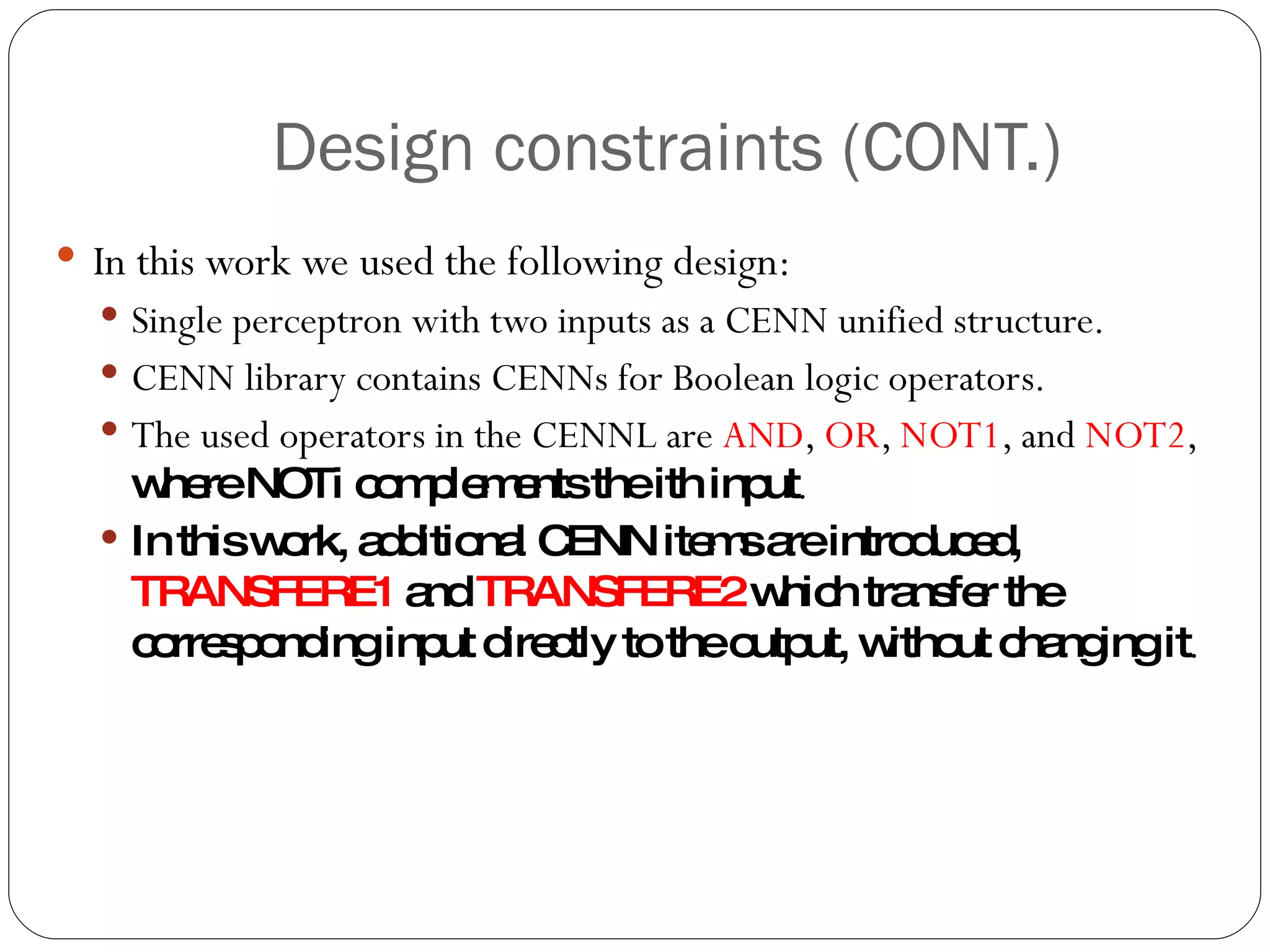

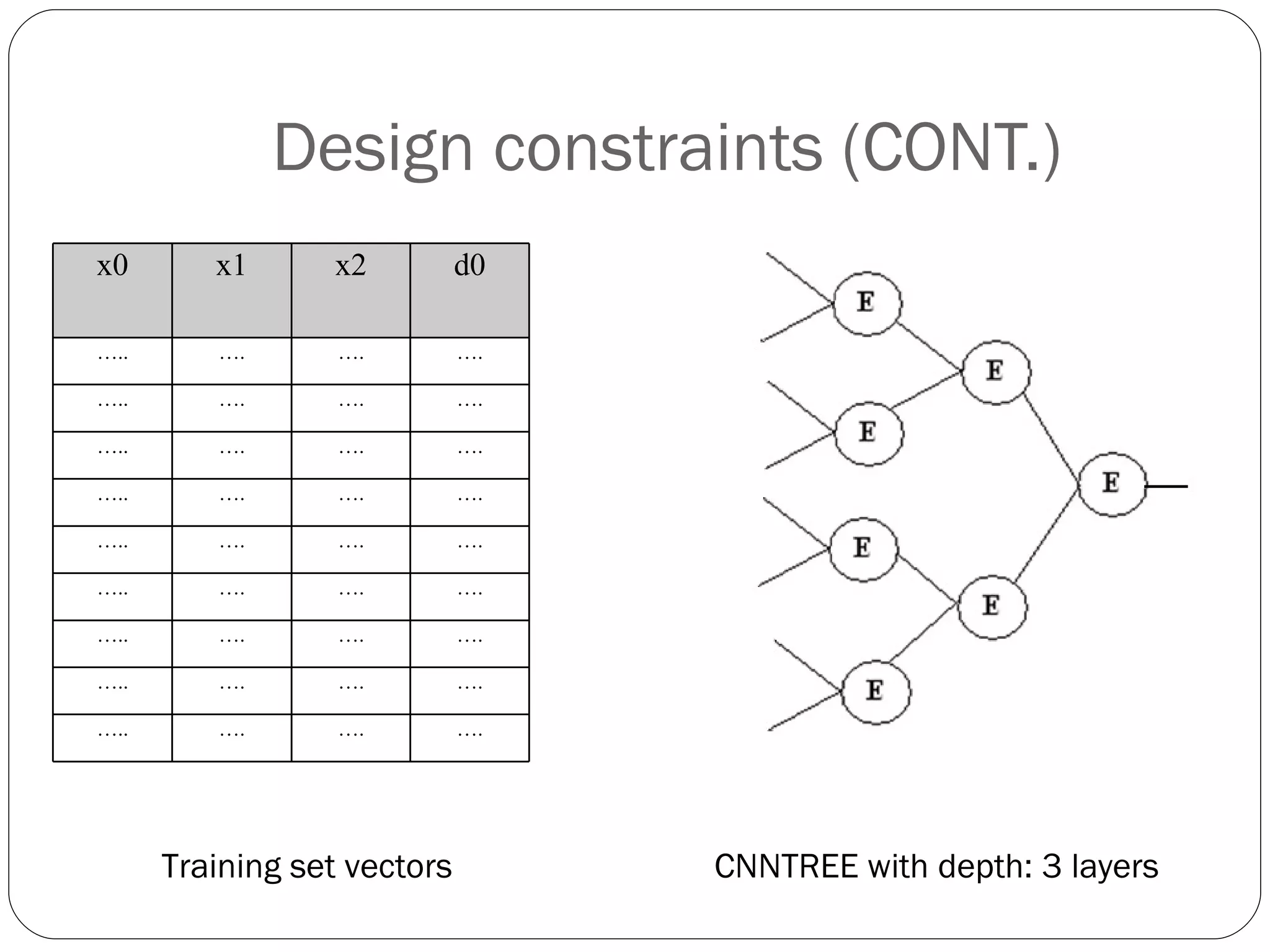

The document presents a master's thesis on constructing comprehensible neural networks using genetic algorithms and introduces a novel structure called the comprehensible neural network tree (CNNTREE). It discusses why traditional artificial neural networks are difficult to interpret and proposes a design that improves understandability through symbolic representations. Experimental results on a digit recognition task show that the CNNTREE achieves generalization performance comparable to that of conventional neural networks while being easier to interpret.
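To make the general idea of a neural network tree evolved by a genetic algorithm more concrete, here is a minimal sketch. It is **not** the CNNTREE design from the thesis. It assumes a fixed-depth binary tree whose internal nodes are single threshold neurons (the neuron's sign decides left or right), leaves labeled by the majority class of the training samples that reach them, and a simple real-coded GA (tournament selection, uniform crossover, Gaussian mutation, elitism). All names (`route`, `evolve`, `DEPTH`, the quadrant toy data) are hypothetical.

```python
import random

DEPTH = 2                     # hypothetical: fixed tree depth
N_INTERNAL = 2 ** DEPTH - 1   # internal nodes, stored in heap order
N_LEAVES = 2 ** DEPTH


def route(genome, x):
    """Follow the tree from the root; each internal node is one threshold neuron."""
    n_in = len(x)
    node = 0
    while node < N_INTERNAL:
        start = node * (n_in + 1)            # genes per node: n_in weights + 1 bias
        w, b = genome[start:start + n_in], genome[start + n_in]
        activation = sum(wi * xi for wi, xi in zip(w, x)) + b
        node = 2 * node + (2 if activation > 0 else 1)   # right child if positive
    return node - N_INTERNAL                 # leaf index 0..N_LEAVES-1


def fit_leaves(genome, data):
    """Label each leaf with the majority class of the samples routed to it."""
    counts = [dict() for _ in range(N_LEAVES)]
    for x, y in data:
        leaf = route(genome, x)
        counts[leaf][y] = counts[leaf].get(y, 0) + 1
    return [max(c, key=c.get) if c else None for c in counts]


def accuracy(genome, data):
    labels = fit_leaves(genome, data)
    return sum(labels[route(genome, x)] == y for x, y in data) / len(data)


def evolve(data, pop_size=40, generations=60, mut_rate=0.1, mut_sd=0.5, seed=0):
    """Simple real-coded GA over the concatenated node weights and biases."""
    rng = random.Random(seed)
    n_genes = N_INTERNAL * (len(data[0][0]) + 1)
    pop = [[rng.uniform(-1.0, 1.0) for _ in range(n_genes)] for _ in range(pop_size)]
    for _ in range(generations):
        scored = sorted(((accuracy(g, data), g) for g in pop),
                        key=lambda s: s[0], reverse=True)
        next_pop = [scored[0][1][:]]         # elitism: keep the best genome
        while len(next_pop) < pop_size:
            # tournament selection (size 3) for each parent
            p1 = max(rng.sample(scored, 3), key=lambda s: s[0])[1]
            p2 = max(rng.sample(scored, 3), key=lambda s: s[0])[1]
            # uniform crossover followed by per-gene Gaussian mutation
            child = [a if rng.random() < 0.5 else b for a, b in zip(p1, p2)]
            child = [g + rng.gauss(0.0, mut_sd) if rng.random() < mut_rate else g
                     for g in child]
            next_pop.append(child)
        pop = next_pop
    best = max(pop, key=lambda g: accuracy(g, data))
    return best, accuracy(best, data)


# Hypothetical toy data: 2-D points labeled by quadrant (classes 0..3).
_rng = random.Random(1)
DATA = []
for _ in range(80):
    p = (_rng.uniform(-1, 1), _rng.uniform(-1, 1))
    DATA.append((p, int(p[0] > 0) * 2 + int(p[1] > 0)))
```

Usage: `best, acc = evolve(DATA)` returns the best genome and its training accuracy. Because every internal node is a single linear threshold test, each node can be printed as a readable rule such as `x0 > 0`; this is one reason tree-structured networks tend to be easier to inspect than a monolithic network, although the symbolic representation used in the thesis may differ.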

![PRIA-8-2007: 8th International Conference on Pattern Recognition and Image Analysis: New Information Technologies, International Association for Pattern Recognition (IAPR). Paper title: "Evolving Comprehensible Neural Network Trees Using Genetic Algorithms". Conference proceedings, Volume 1, pages 186-190. Accepted for publication in the international journal Pattern Recognition and Image Analysis: Advances in Mathematical Theory and Applications, to appear in Vol. 18, No. 4; English papers are published by Springer.](https://image.slidesharecdn.com/presentedevolvingcomprehensibleneuralnetworktrees-12614925085269-phpapp01/75/Evolving-Comprehensible-Neural-Network-Trees-2-2048.jpg)

![Digit recognition: an experiment in recognizing numerical character patterns is illustrated here. Ten numerical character patterns (0-9) are used, each consisting of 64 (8 x 8) black-and-white pixels.](https://image.slidesharecdn.com/presentedevolvingcomprehensibleneuralnetworktrees-12614925085269-phpapp01/75/Evolving-Comprehensible-Neural-Network-Trees-19-2048.jpg)
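Each 8 x 8 black-and-white pattern becomes a 64-element input vector for the network. The sketch below shows one plausible encoding, assuming 1 = black and 0 = white and row-major flattening, so pixel (row, col) maps to index `8 * row + col`. The bitmap for "1" and the helper name `to_input_vector` are hypothetical illustrations, not the exact patterns or encoding used in the experiment.

```python
# Hypothetical 8x8 bitmap for the digit "1" (1 = black pixel, 0 = white pixel).
DIGIT_ONE = [
    "00011000",
    "00111000",
    "00011000",
    "00011000",
    "00011000",
    "00011000",
    "00011000",
    "00111100",
]


def to_input_vector(rows):
    """Flatten an 8x8 black-and-white pattern row by row into a 64-element 0/1 list."""
    if len(rows) != 8 or any(len(r) != 8 for r in rows):
        raise ValueError("expected 8 rows of 8 pixels")
    return [1 if ch == "1" else 0 for row in rows for ch in row]


vec = to_input_vector(DIGIT_ONE)
```

Row-major flattening keeps neighboring pixels in a row adjacent in the vector. With binary inputs, any rule extracted from a trained node can be stated directly in terms of individual pixels being black or white.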