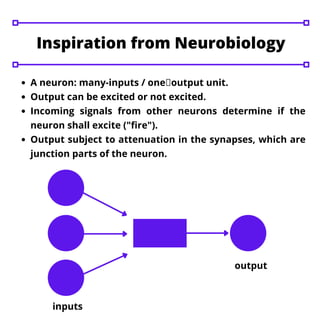

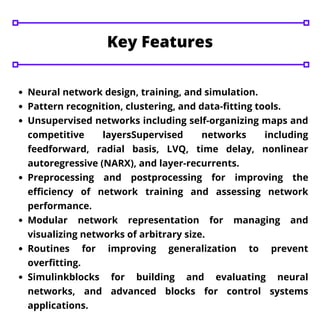

An artificial neural network (ANN) is an information-processing model inspired by biological nervous systems. It is composed of interconnected processing elements (neurons) and is used for applications such as pattern recognition and data classification. Rather than following explicitly programmed rules, an ANN learns by example, which gives it an advantage over traditional algorithmic methods in cases where working out a solution step by step is complex. Key features include adaptive learning and real-time operation. Networks are commonly grouped by how they are trained, for example into supervised and unsupervised networks.
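To make "learning by example" concrete, here is a minimal sketch of a single artificial neuron trained with the classic perceptron rule on labelled examples of the logical AND function. The source does not name a specific network or training rule, so the choice of a perceptron, the AND data set, and the names `train_perceptron`, `predict`, and `AND_EXAMPLES` are all assumptions made for illustration.

```python
# Illustrative sketch only: one neuron, supervised learning from examples.
# The perceptron rule and all names here are assumptions, not from the source.

def step(x):
    """Threshold activation: fire (1) when the weighted sum is non-negative."""
    return 1 if x >= 0 else 0


def predict(weights, bias, inputs):
    """Compute the neuron's output for one input vector."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(weighted_sum)


def train_perceptron(examples, epochs=20, learning_rate=1.0):
    """Adjust weights from (inputs, target) pairs using the perceptron rule."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            # Nudge each weight in the direction that reduces the error.
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Labelled examples of logical AND: the "teacher" signal in supervised learning.
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_EXAMPLES)
predictions = [predict(weights, bias, inputs) for inputs, _ in AND_EXAMPLES]
print(predictions)
```

No rule for AND is ever written into the program. The neuron starts with zero weights and arrives at a correct decision boundary only by correcting its mistakes on the examples, which is the adaptive behaviour the paragraph above describes. An unsupervised network would instead receive inputs without target labels.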