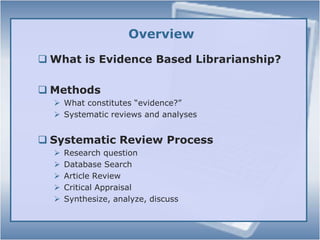

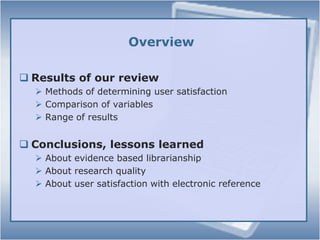

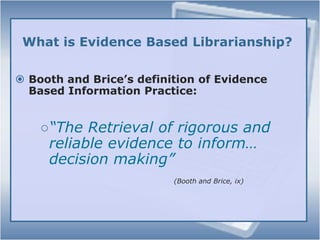

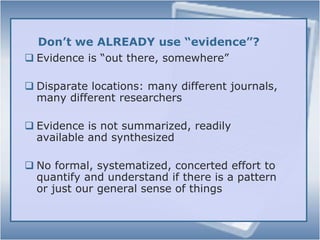

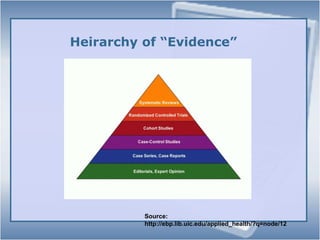

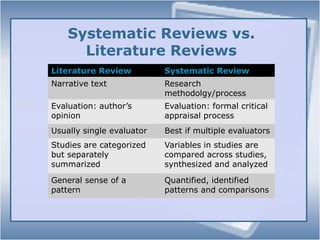

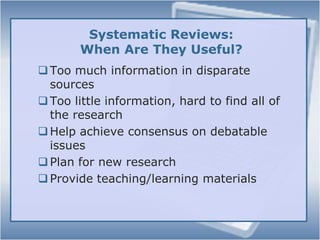

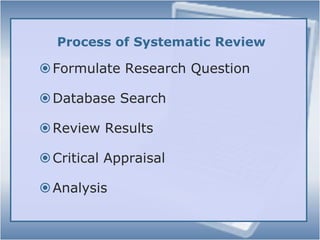

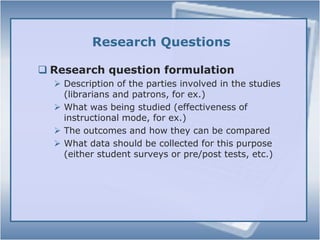

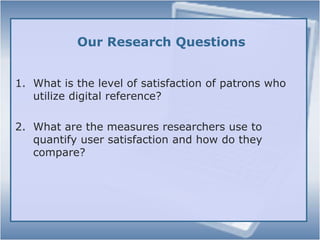

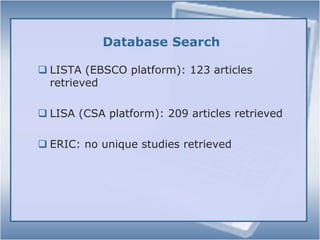

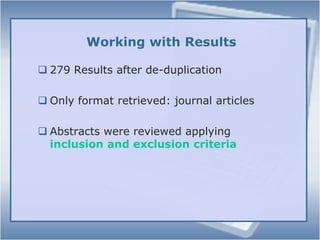

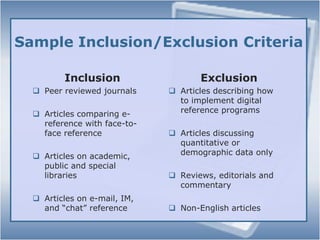

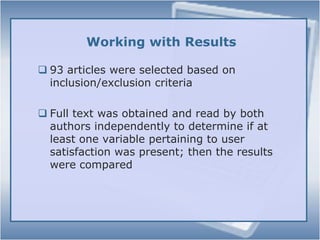

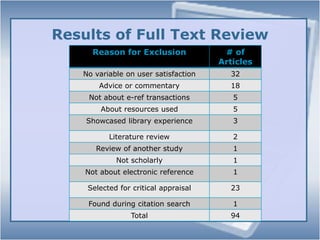

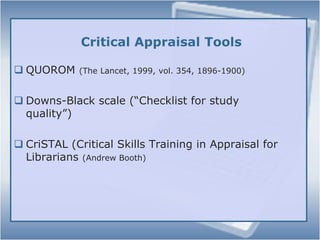

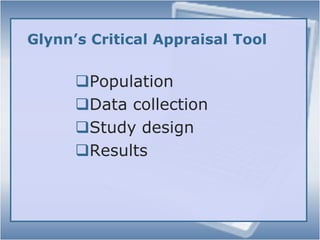

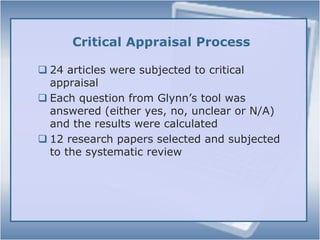

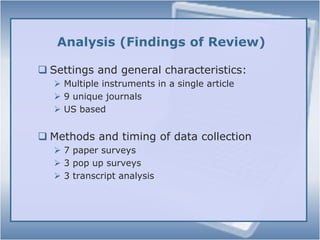

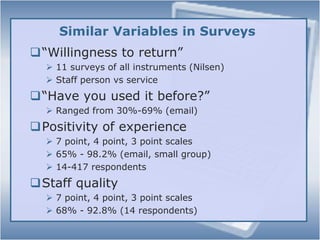

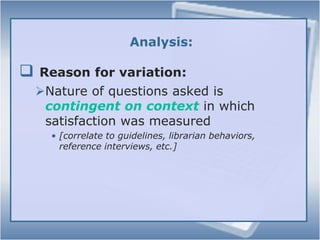

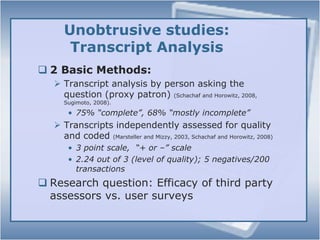

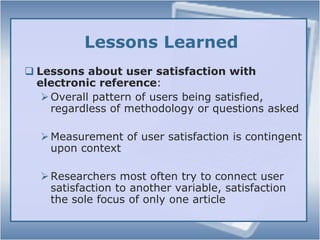

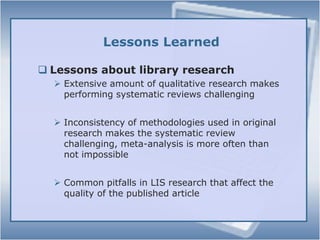

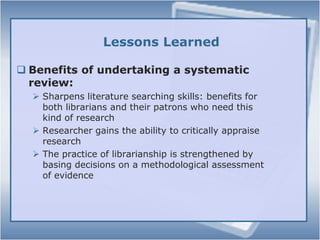

The document presents an overview of evidence-based librarianship (EBL) and its relevance in assessing user satisfaction with electronic reference services. It discusses systematic review methodologies, research questions, and the criteria for selecting relevant studies, ultimately identifying trends in user satisfaction despite varying research methodologies. Key lessons include the challenges of qualitative research in systematic reviews and the benefits of synthesizing evidence for informed decision-making in librarianship.

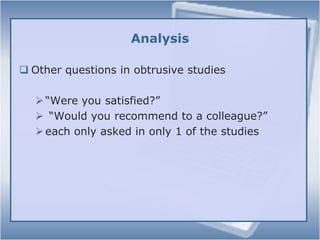

![Analysis: Reason for variation: Nature of questions asked is contingent on context in which satisfaction was measured [correlate to guidelines, librarian behaviors, reference interviews, etc.]](https://image.slidesharecdn.com/evaluatinge-reference-100820142334-phpapp02/85/Evaluating-e-reference-29-320.jpg)