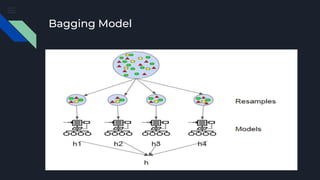

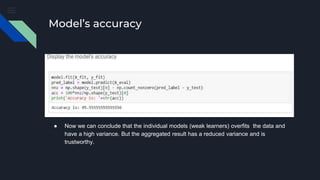

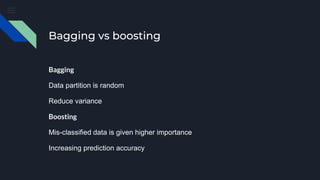

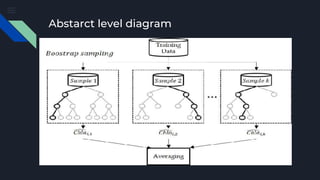

The document discusses bagging (bootstrap aggregating), an ensemble machine learning method. Bagging fits multiple models on random subsets of a dataset to improve stability and accuracy relative to a single model. The base models are independent of one another, so they can be trained in parallel, each on a random sample drawn with replacement from the original dataset. Their predictions are then aggregated, typically by majority vote for classification or by averaging for regression. Key benefits are reduced variance, straightforward implementation through libraries such as scikit-learn, and better predictive performance than a single model. The main drawback is that the resulting ensemble is less interpretable than a single model.
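
The procedure described above (bootstrap samples, independently fitted base models, aggregated predictions) can be illustrated with a minimal sketch in plain Python. The base learner (a one-feature decision stump), the toy dataset, and all function names here are assumptions chosen for illustration; they do not come from the document itself.

```python
import random
from collections import Counter


def bootstrap_sample(data, rng):
    """Draw len(data) items uniformly at random from data, with replacement."""
    return [rng.choice(data) for _ in data]


def fit_stump(sample):
    """Fit a one-feature threshold classifier (decision stump).

    Tries every observed x as a threshold, in both orientations, and keeps
    the first combination with the fewest training errors.
    Returns (threshold, label_if_above, label_if_not_above).
    """
    best = None
    for threshold, _ in sample:
        for above, below in ((1, 0), (0, 1)):
            errors = sum(
                (above if x > threshold else below) != y for x, y in sample
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, above, below)
    return best[1:]


def predict_stump(stump, x):
    """Predict a 0/1 label for x with a fitted stump."""
    threshold, above, below = stump
    return above if x > threshold else below


def fit_bagging(data, n_models, seed=0):
    """Fit n_models stumps, each on its own bootstrap sample of data."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    return [fit_stump(bootstrap_sample(data, rng)) for _ in range(n_models)]


def predict_bagging(models, x):
    """Aggregate the base models' predictions by majority vote."""
    votes = Counter(predict_stump(m, x) for m in models)
    return votes.most_common(1)[0][0]


# Hypothetical toy dataset: (feature, label) pairs, separable around x = 2.5.
data = [(0.5, 0), (1.0, 0), (1.5, 0), (2.0, 0),
        (3.0, 1), (3.5, 1), (4.0, 1), (4.5, 1)]

# An odd number of models avoids ties in a two-class majority vote.
models = fit_bagging(data, n_models=25)
print(predict_bagging(models, 0.0), predict_bagging(models, 10.0))
```

Each call to `fit_stump` depends only on its own bootstrap sample, which is why the base models could be trained in parallel. In practice, a library implementation such as scikit-learn's `BaggingClassifier` would normally replace this hand-written loop.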