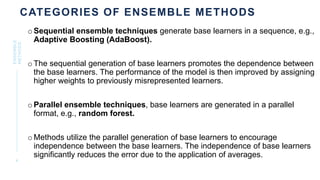

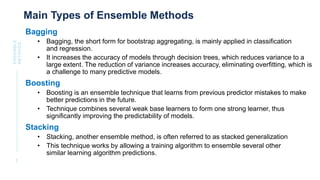

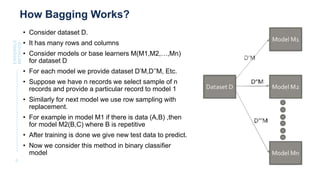

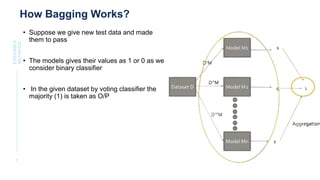

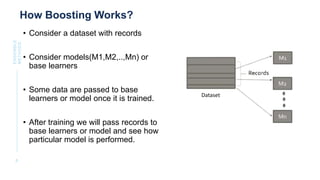

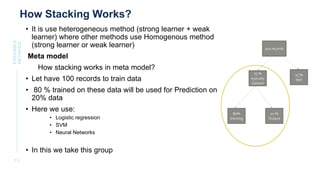

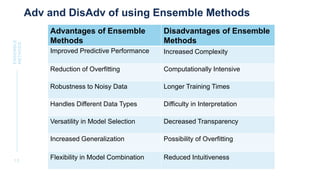

The document discusses ensemble methods in machine learning. It defines ensemble methods as meta-algorithms that combine several machine learning models to achieve better predictive performance than a single model. It then classifies them as sequential or parallel, according to whether the base learners are generated one after another or independently of each other. The three main types (bagging, boosting, and stacking) are described in terms of how they work: bagging trains models on random samples of the data and averages their predictions, boosting trains each new model to correct the errors of the previous ones, and stacking combines heterogeneous models. The advantages of ensemble methods include improved accuracy and reduced variance and overfitting; the disadvantages include increased complexity, longer training times, and reduced interpretability.
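To make the bagging idea concrete, here is a minimal sketch in plain Python. It is not taken from the slides: the toy dataset, the one-dimensional threshold "stump" used as the base learner, and the names `fit_stump`, `bagging_fit`, and `bagging_predict` are all assumptions chosen for illustration. Each base learner is trained on a bootstrap sample (random sampling with replacement), and the ensemble predicts by majority vote, the classification counterpart of averaging.

```python
import random
from collections import Counter


def fit_stump(points):
    """Fit a 1-D threshold classifier that predicts 1 when x >= threshold.

    Hypothetical base learner: tries each observed x as the threshold and
    keeps the one with the fewest training errors.
    """
    best_threshold, best_errors = None, None
    for threshold, _ in points:
        errors = sum((x >= threshold) != bool(y) for x, y in points)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def bagging_fit(points, n_models=25, seed=0):
    """Train n_models stumps, each on its own bootstrap sample."""
    rng = random.Random(seed)
    thresholds = []
    for _ in range(n_models):
        # Bootstrap: draw len(points) examples with replacement.
        sample = [rng.choice(points) for _ in points]
        thresholds.append(fit_stump(sample))
    return thresholds


def bagging_predict(thresholds, x):
    """Combine the base learners by majority vote."""
    votes = Counter(int(x >= t) for t in thresholds)
    return votes.most_common(1)[0][0]


# Toy data (assumed for illustration): label 0 for small x, label 1 for large x.
data = [(0.5, 0), (1.0, 0), (1.5, 0), (2.0, 0),
        (3.0, 1), (3.5, 1), (4.0, 1), (4.5, 1)]

ensemble = bagging_fit(data)
print(bagging_predict(ensemble, 0.0), bagging_predict(ensemble, 5.0))
```

Because every stump is fitted independently of the others, this is a parallel ensemble in the sense described above. A boosting variant would instead fit the stumps one after another, reweighting the examples that earlier stumps misclassified.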