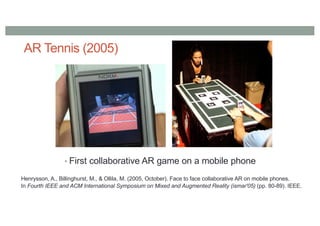

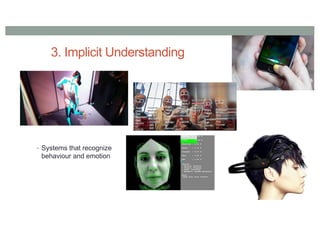

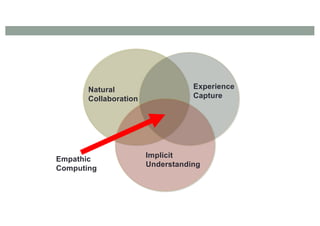

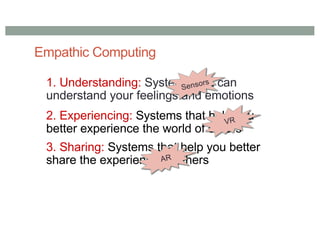

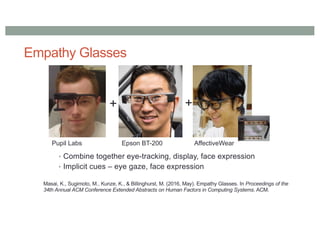

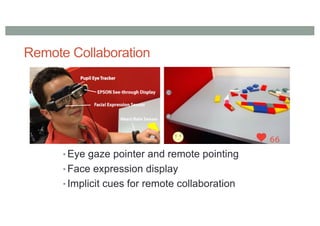

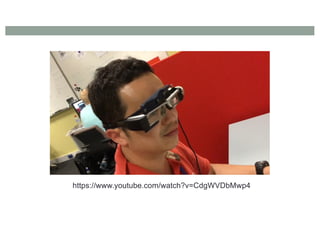

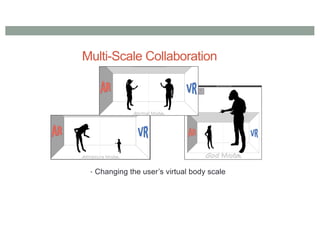

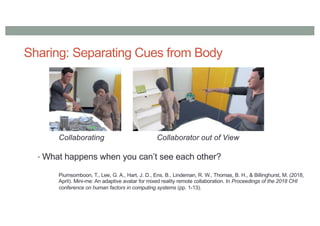

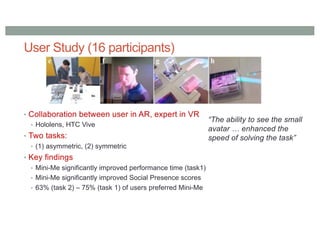

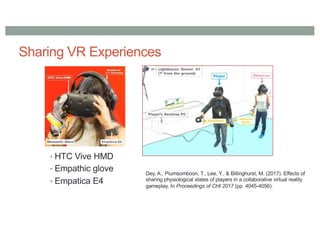

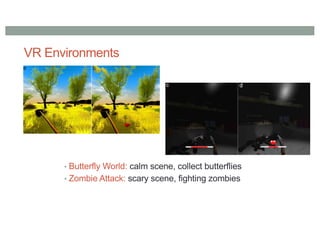

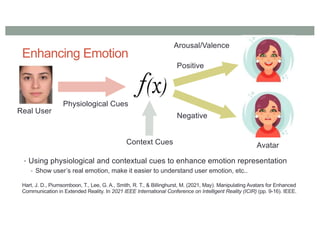

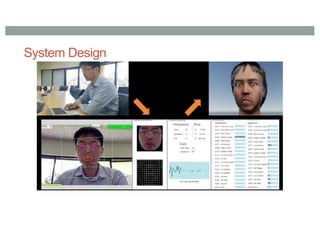

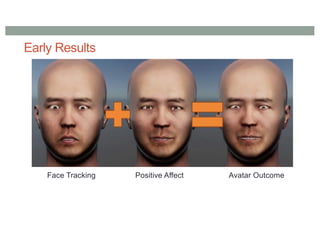

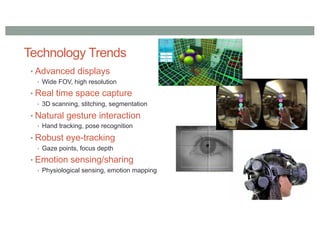

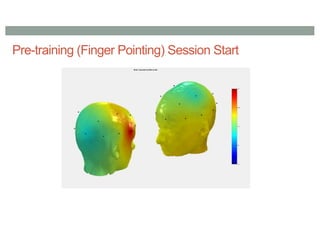

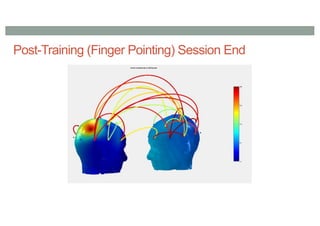

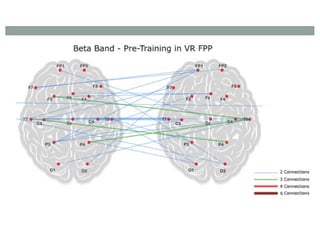

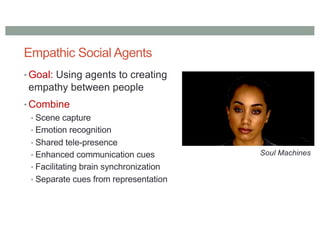

The document discusses empathic computing and its applications in gaming, focusing on how augmented and virtual reality can enhance collaboration and communication. It emphasizes the need for systems that can understand, experience, and share human emotions and behaviors, and highlights technological developments such as Empathy Glasses and Mini-Me avatars. Finally, it outlines future research directions in empathic computing aimed at improving interaction and social presence in digital environments.