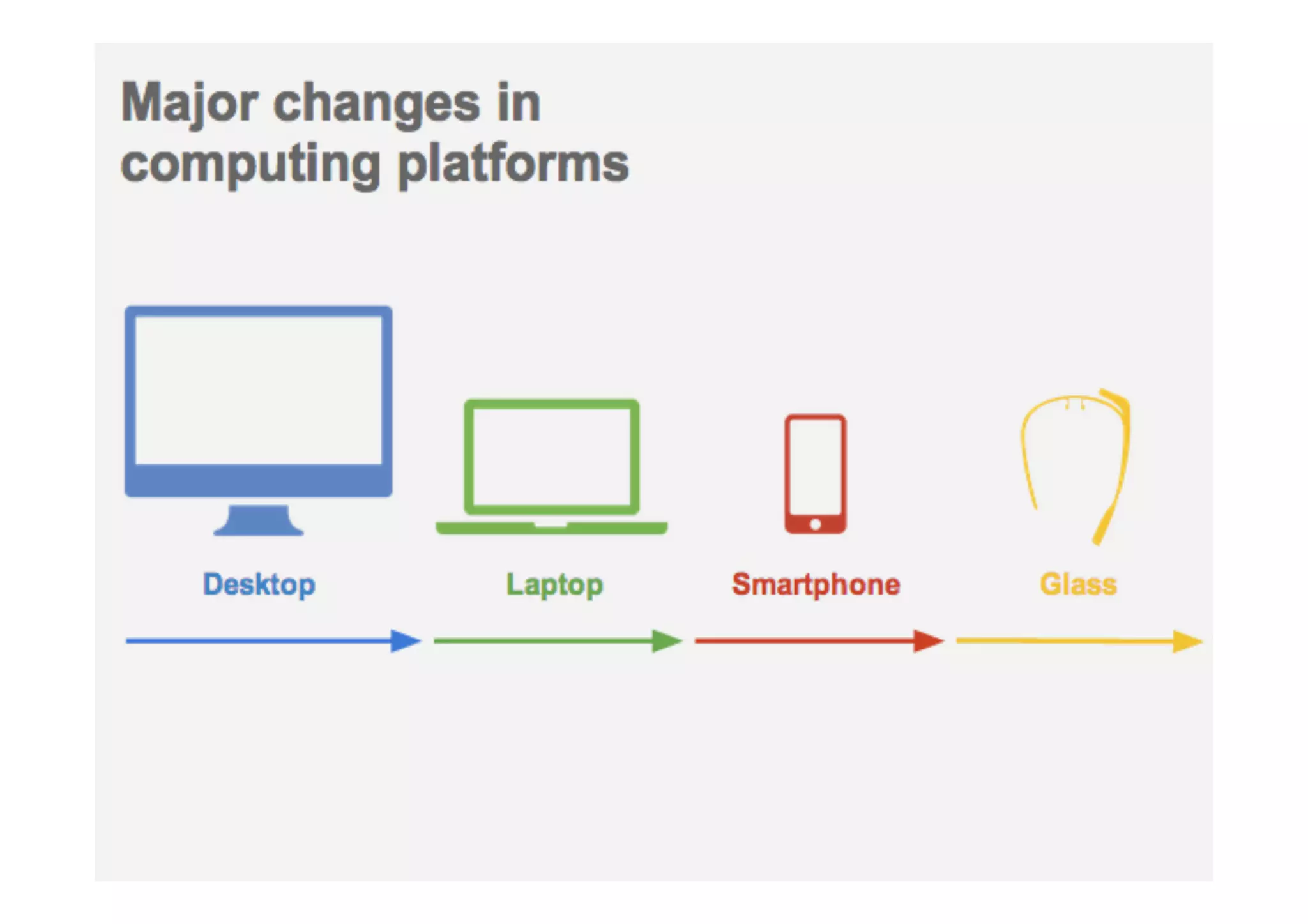

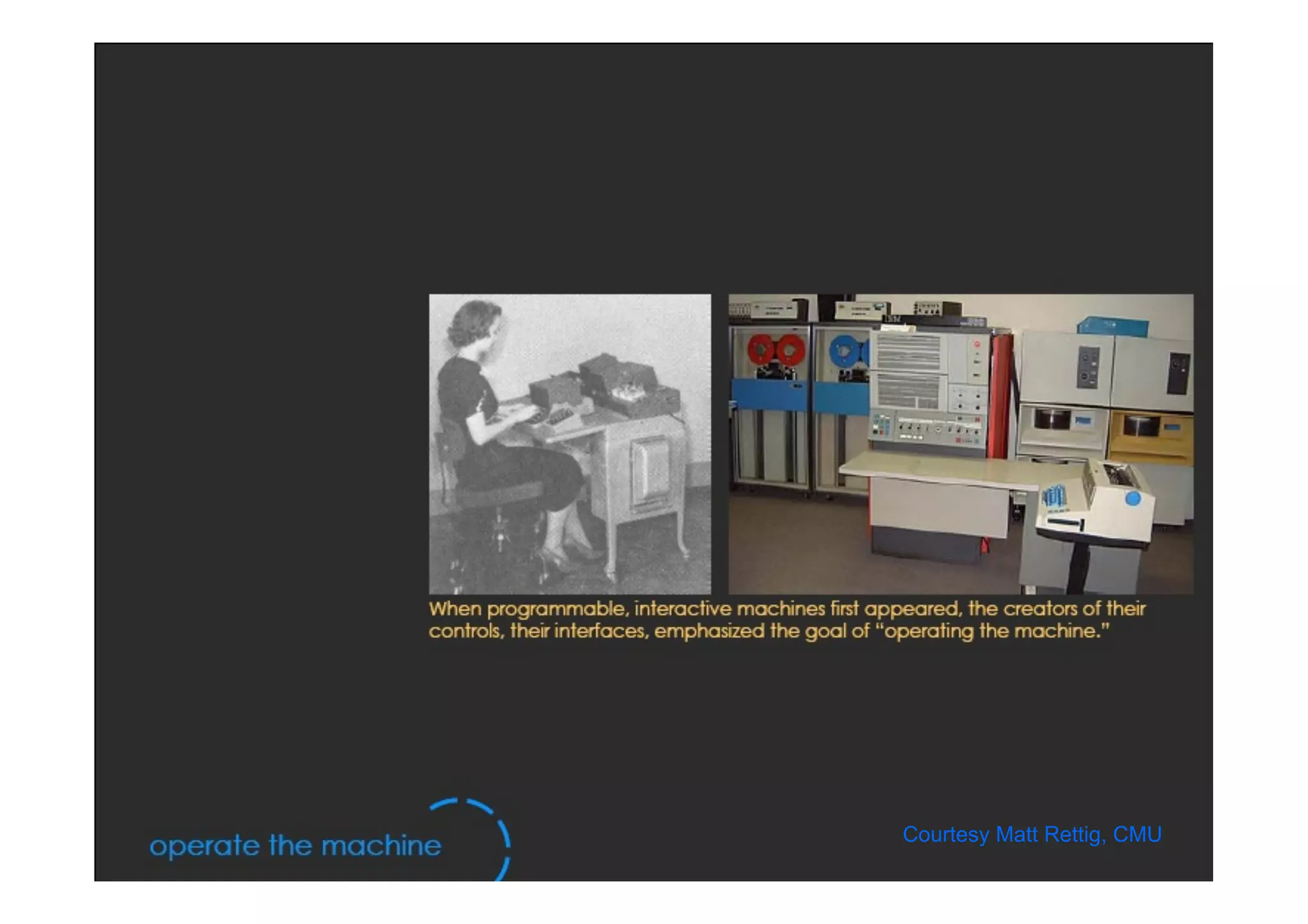

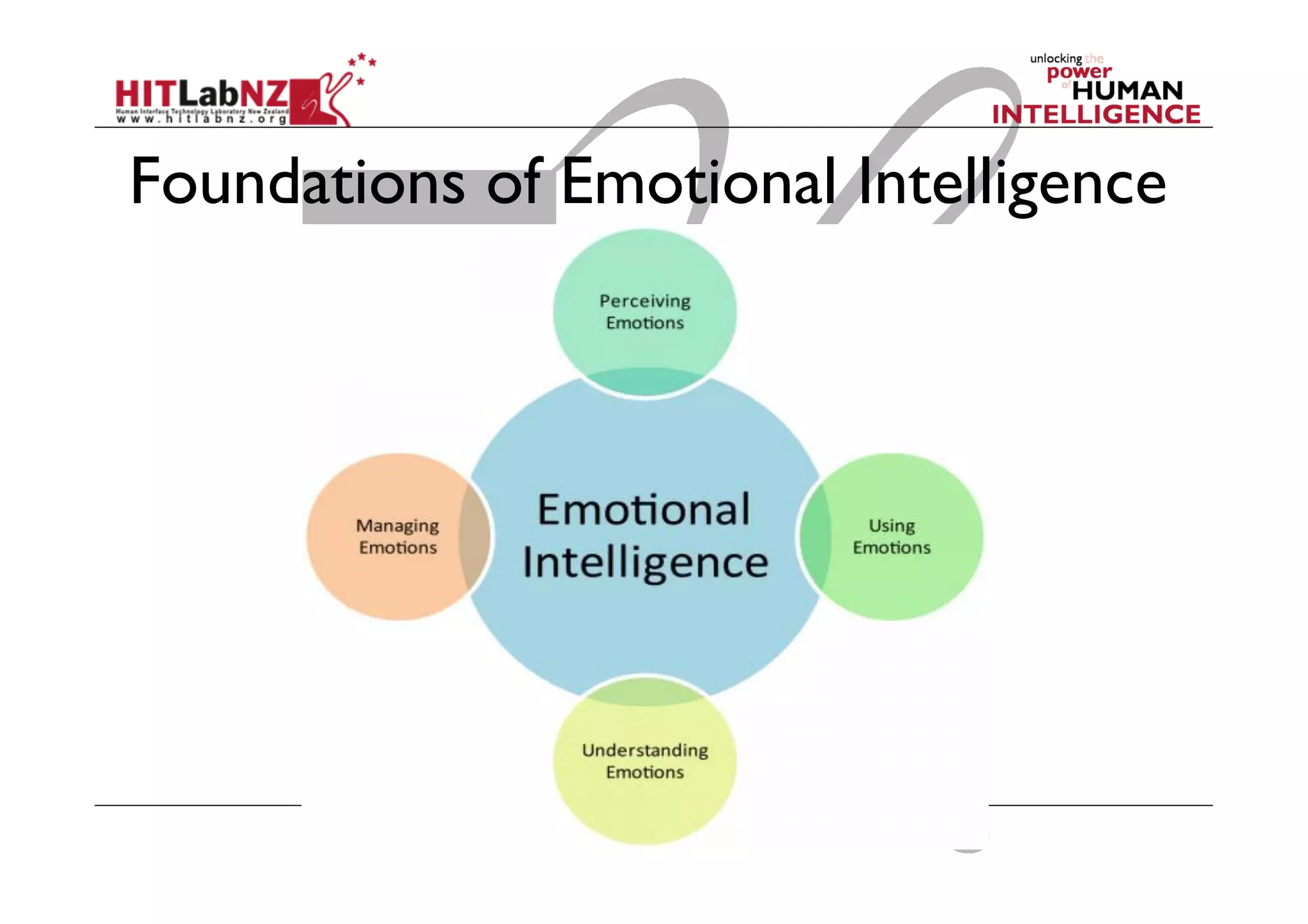

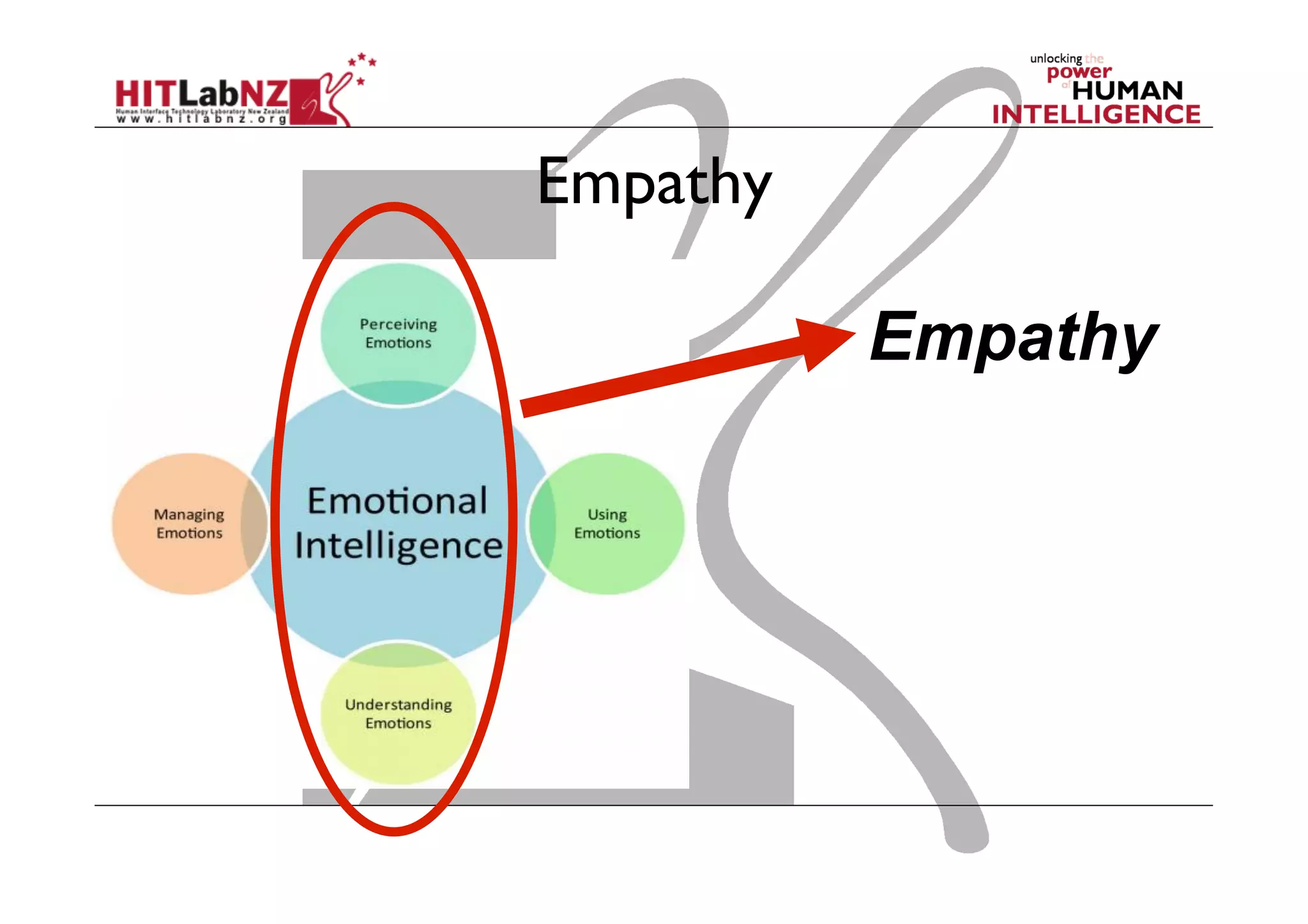

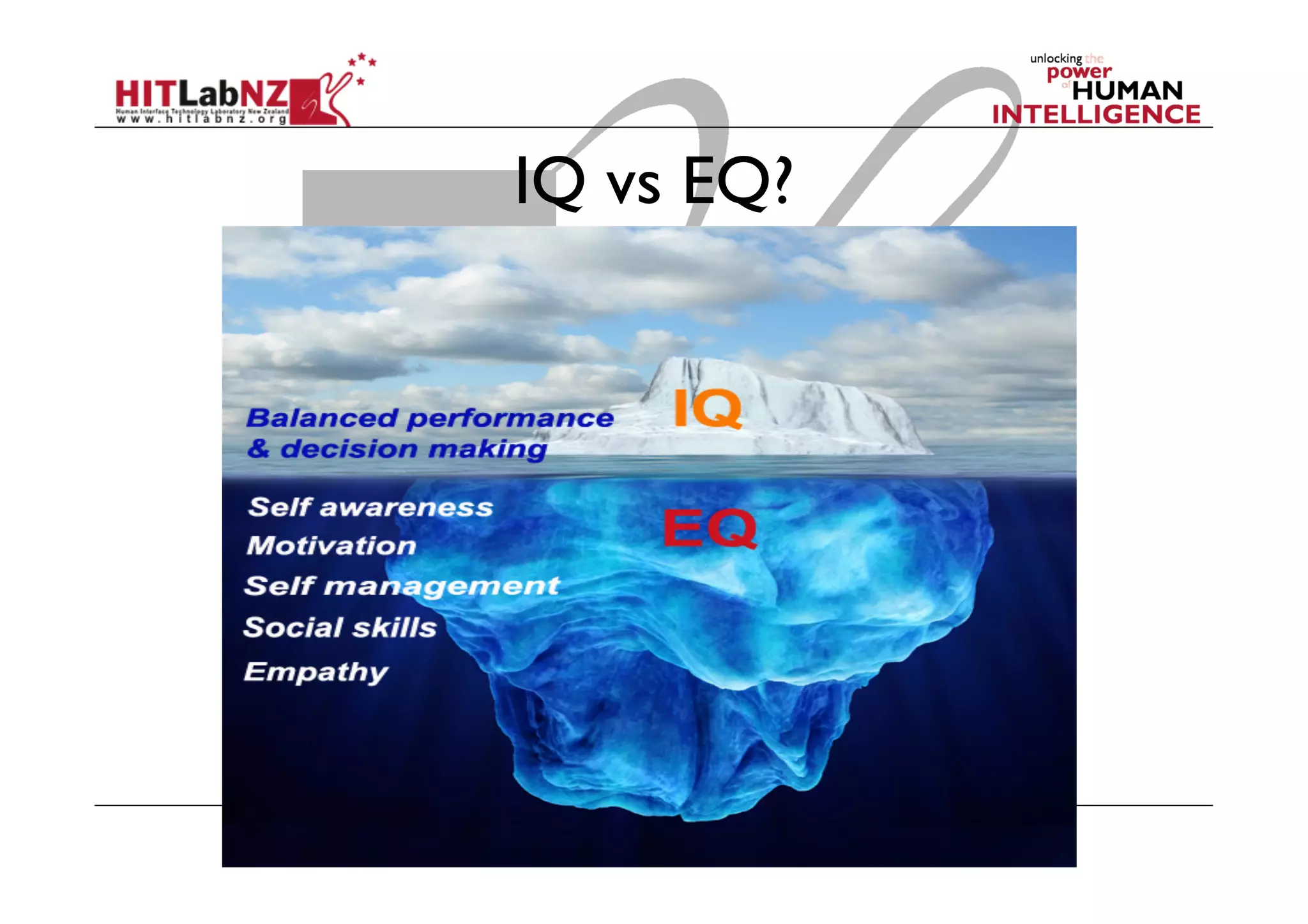

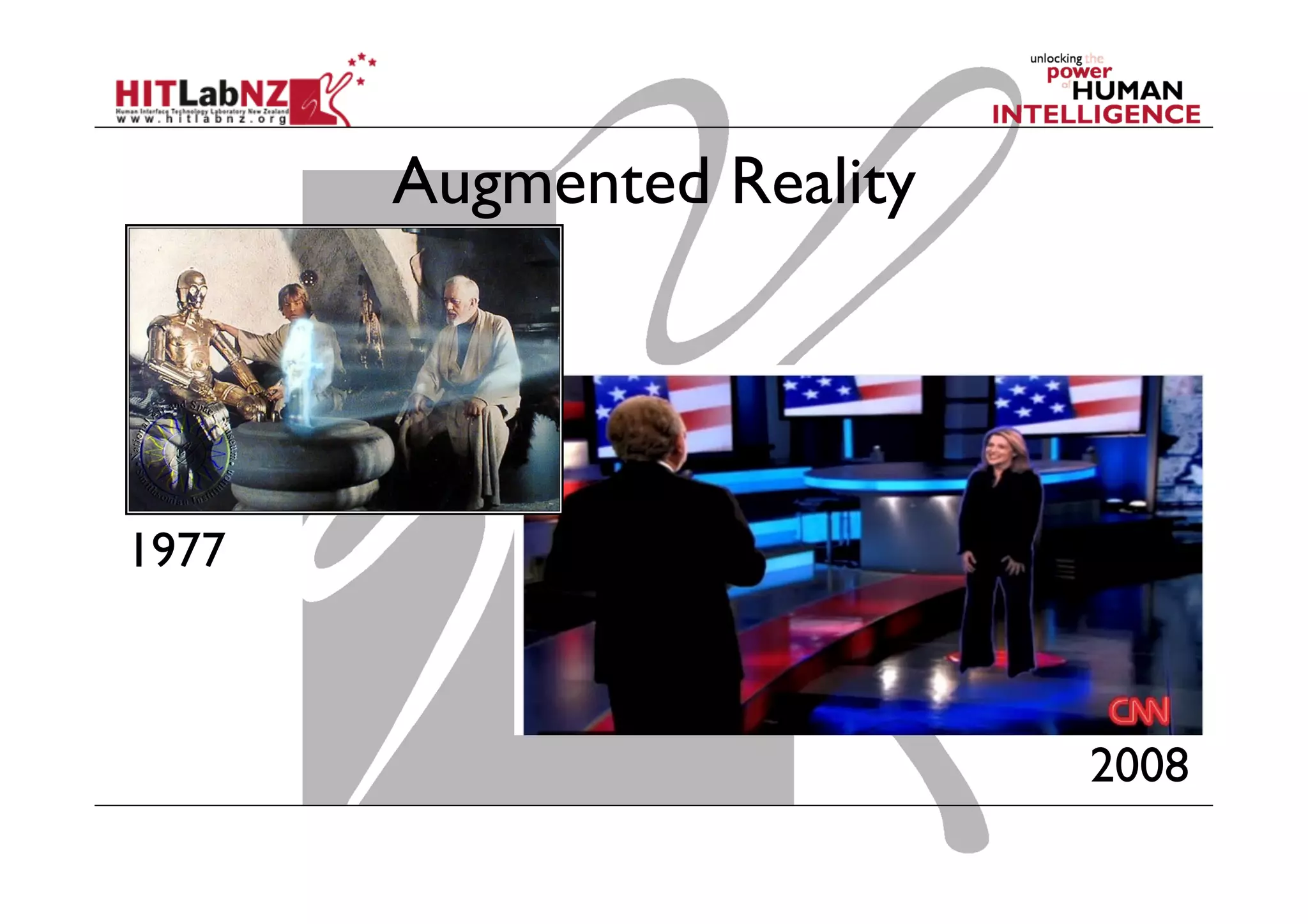

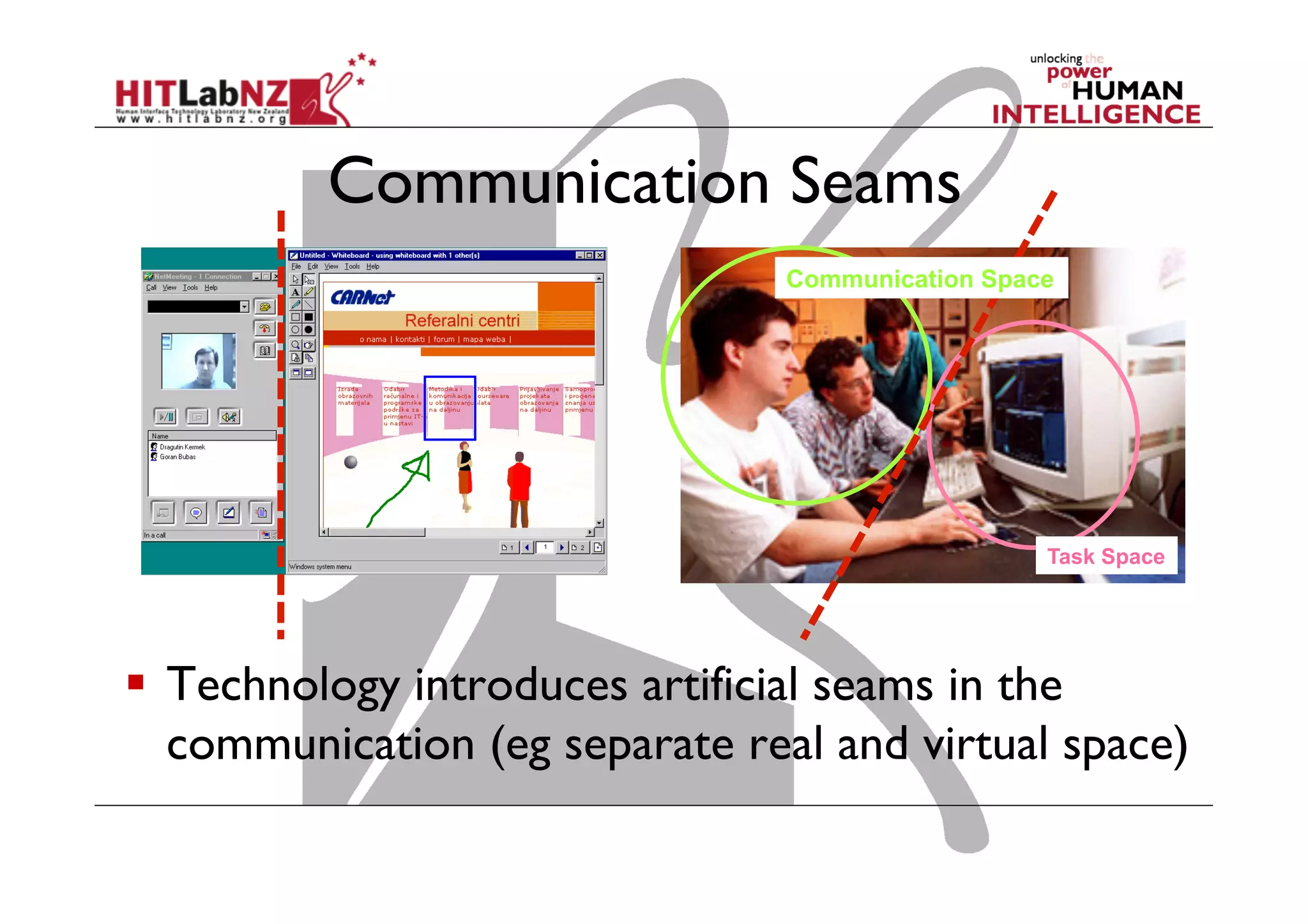

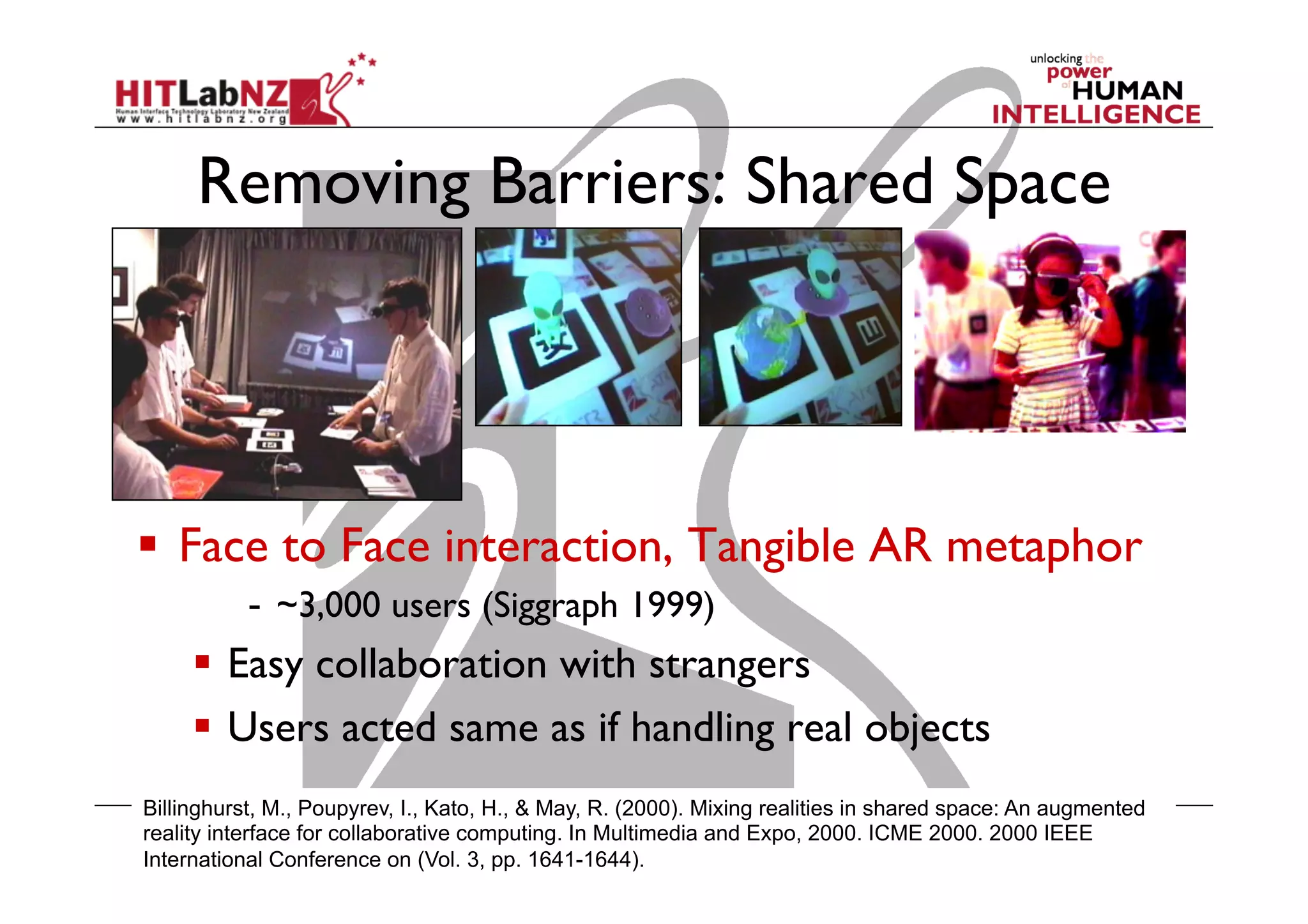

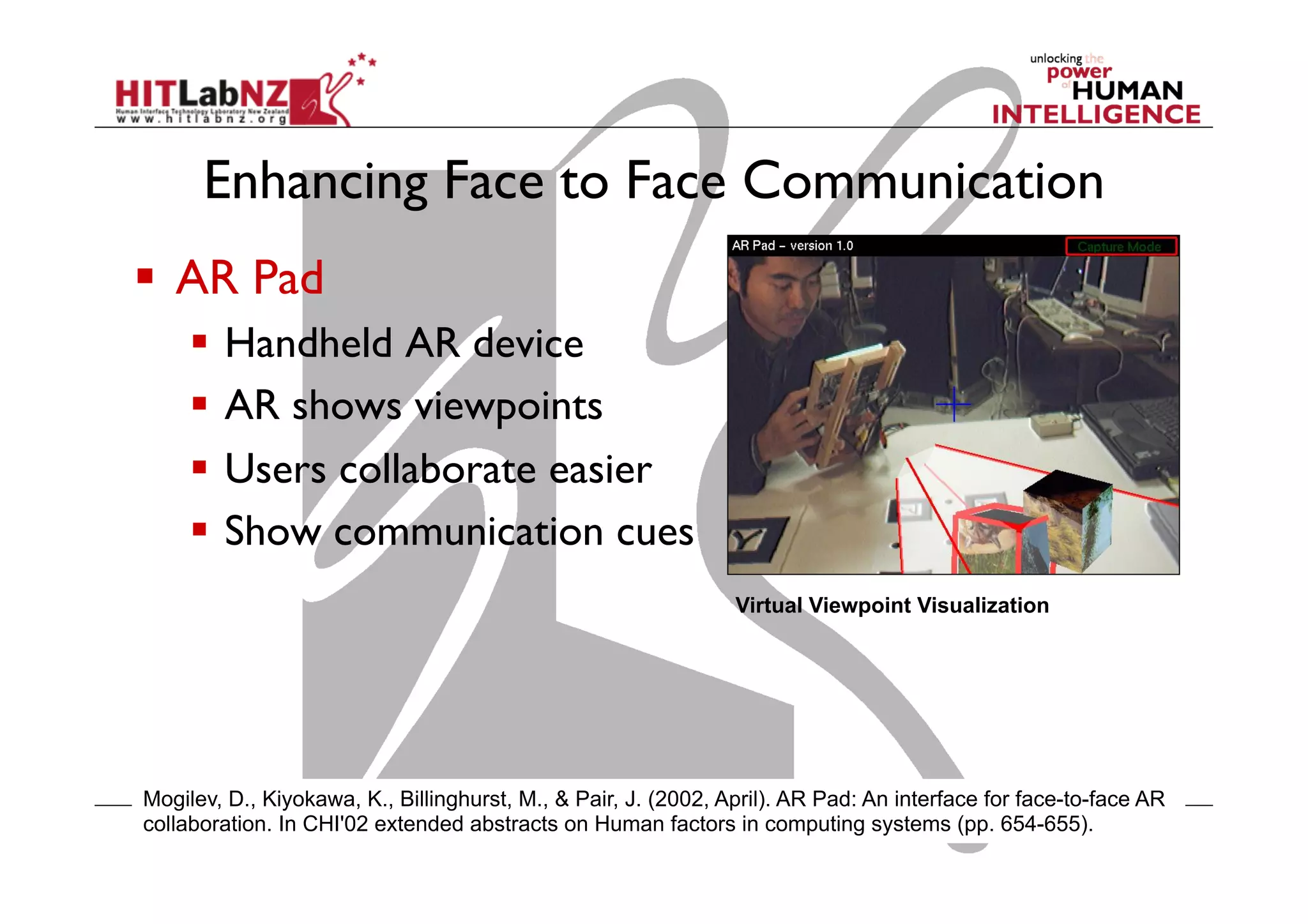

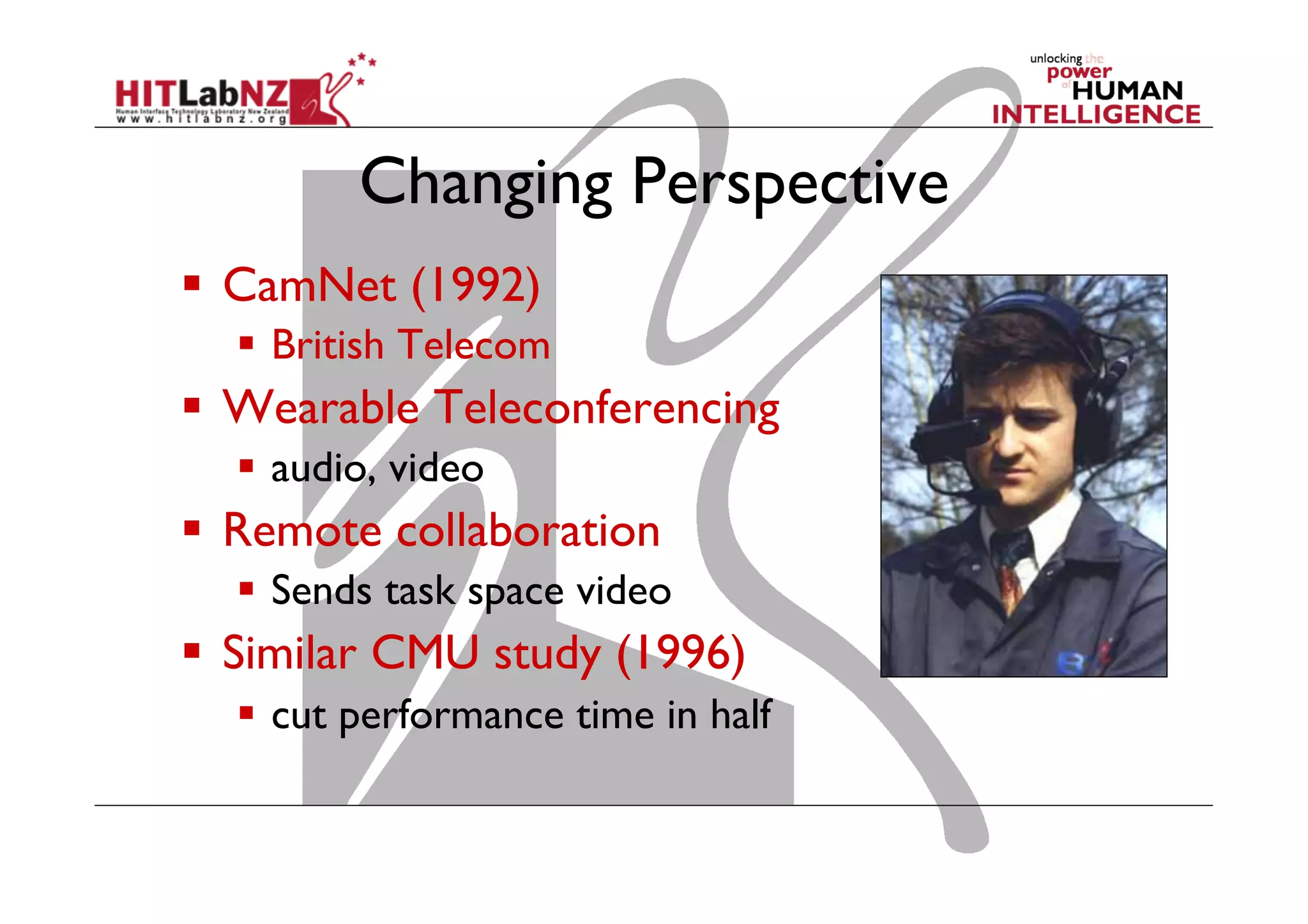

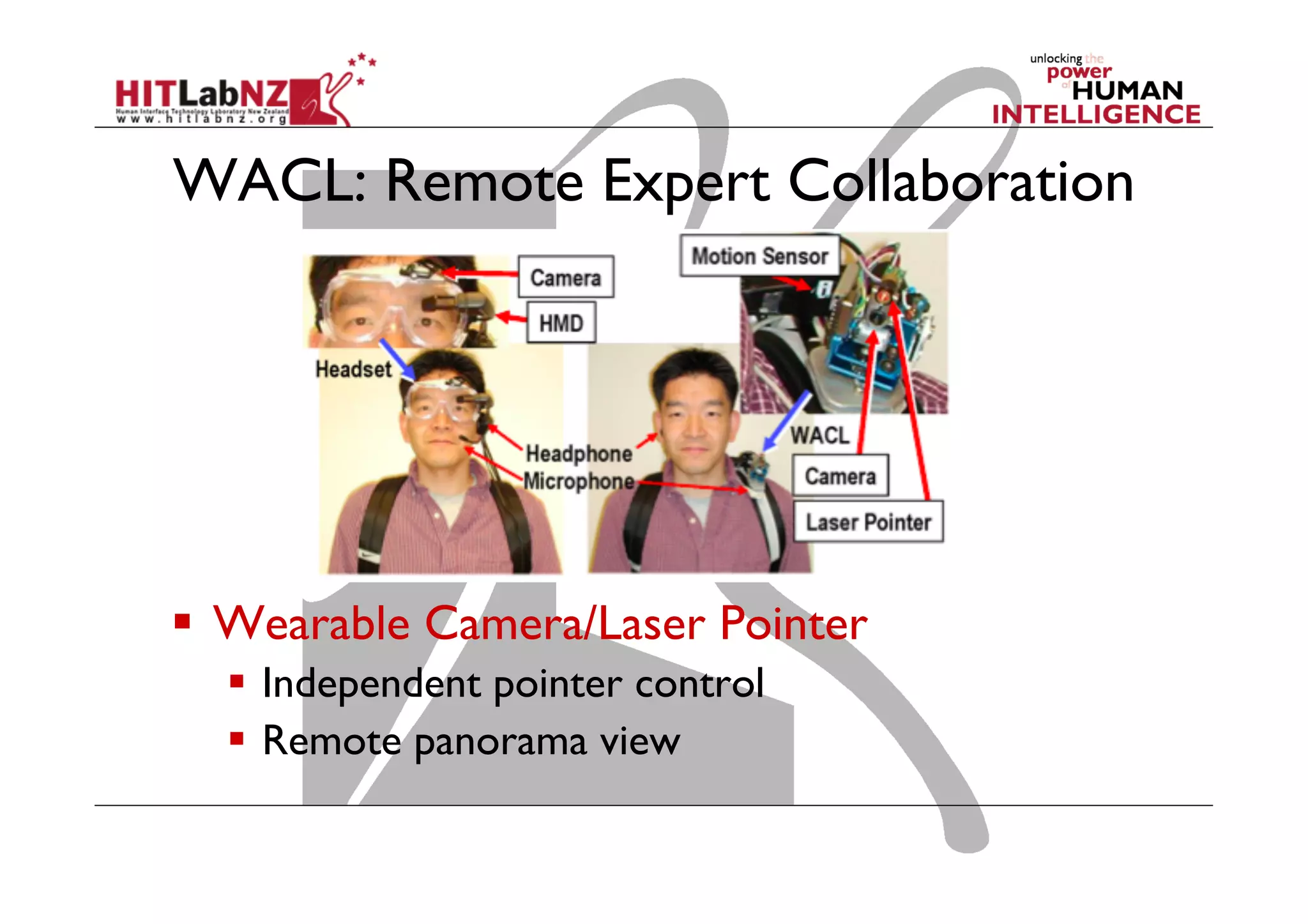

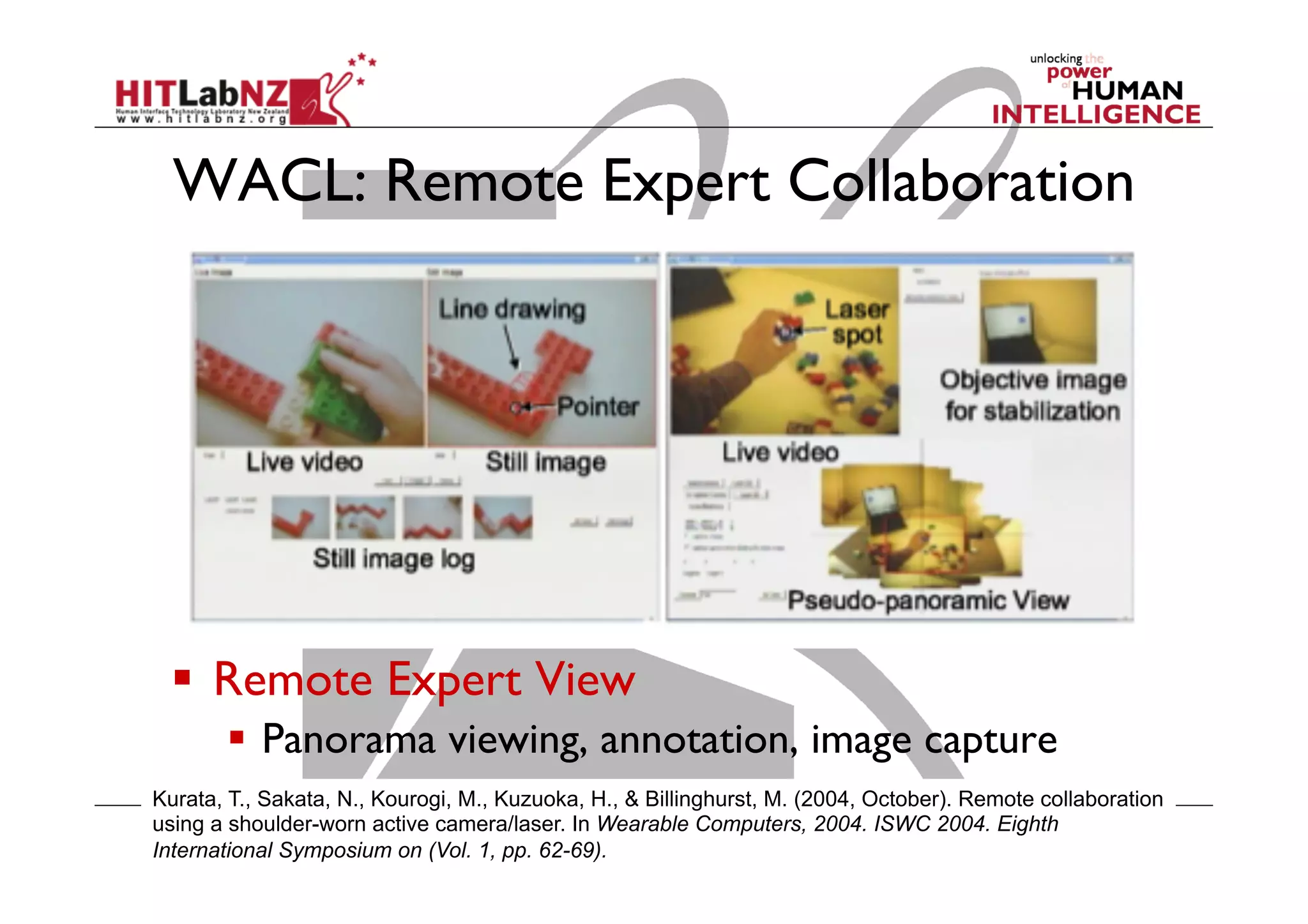

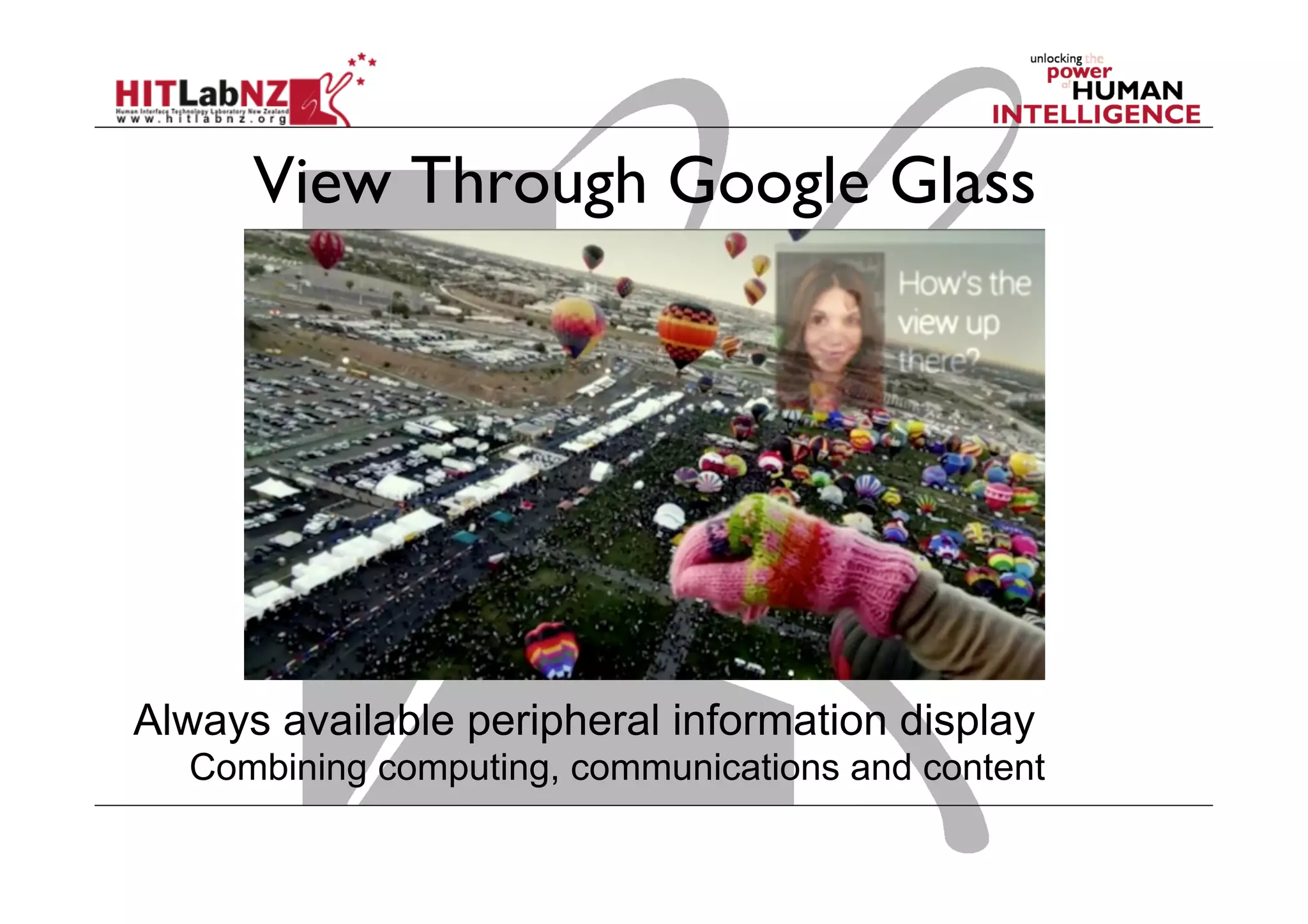

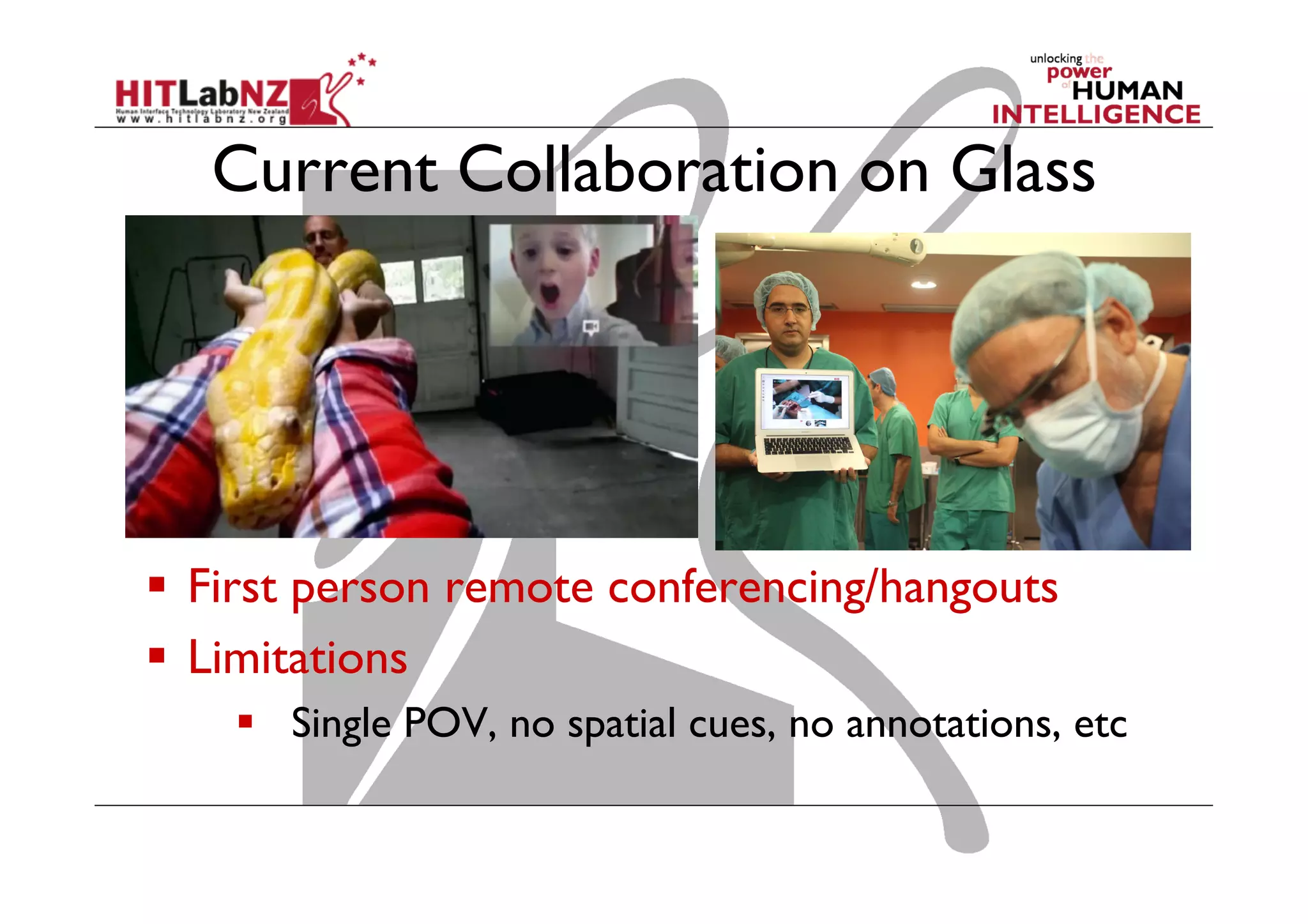

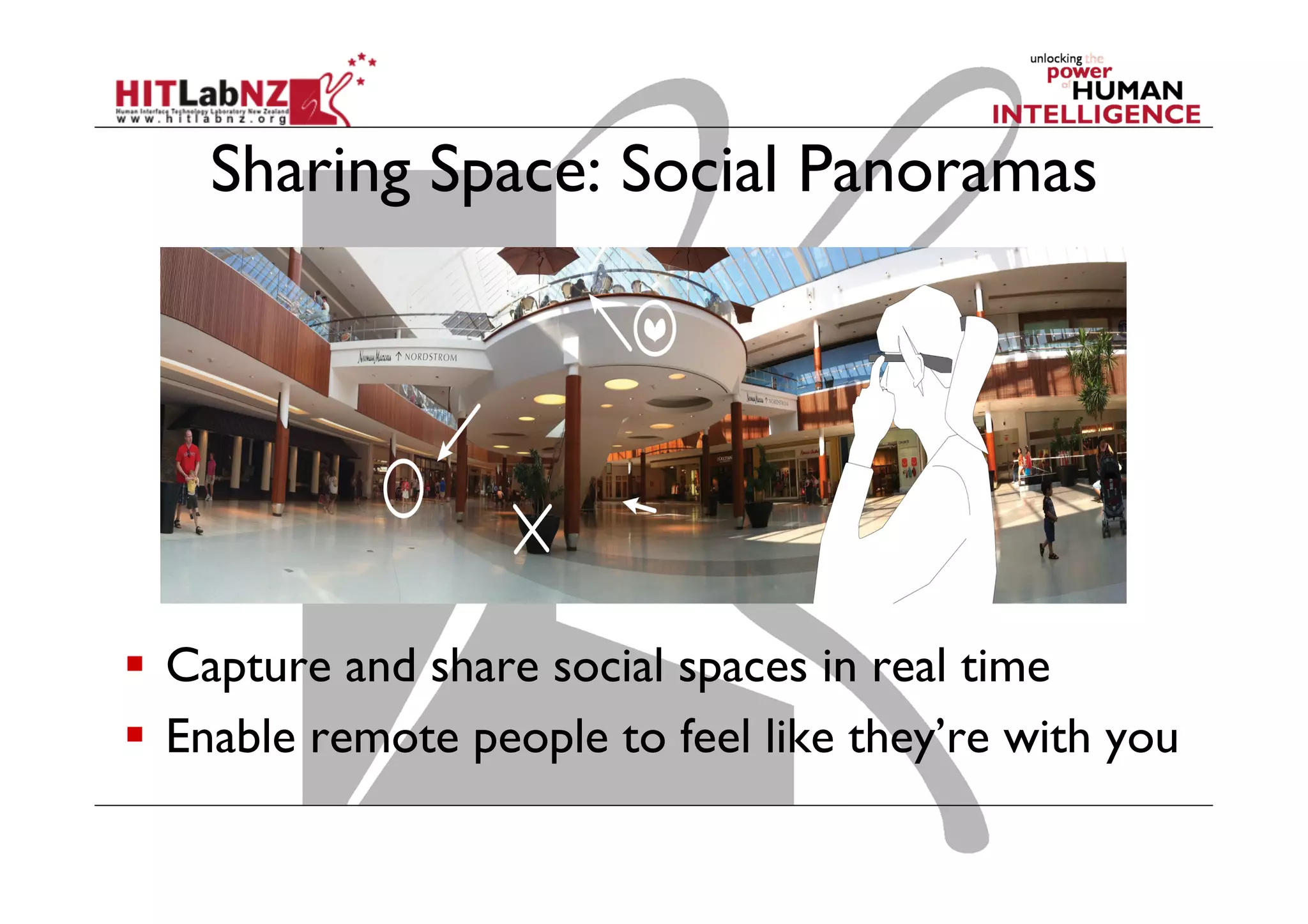

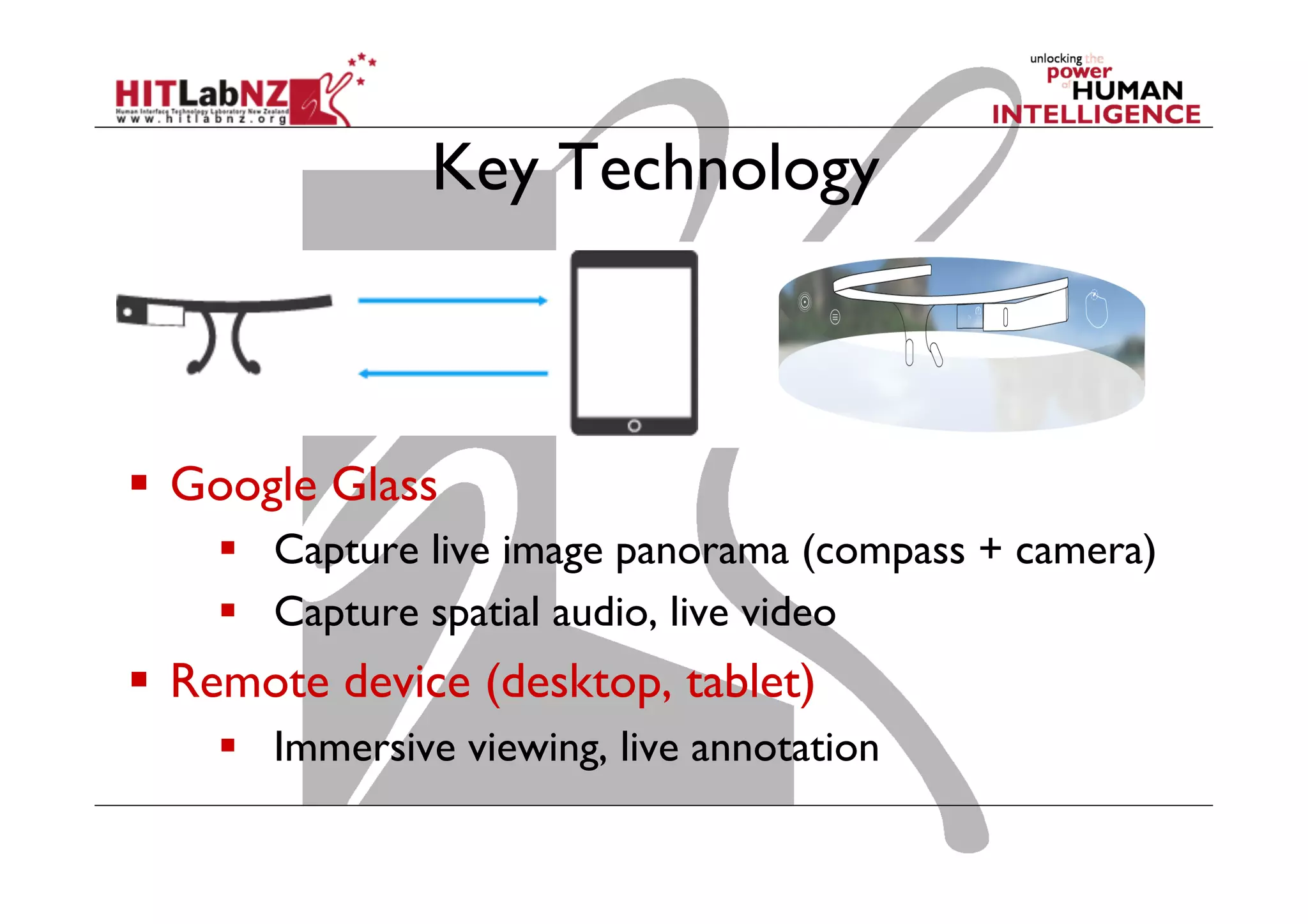

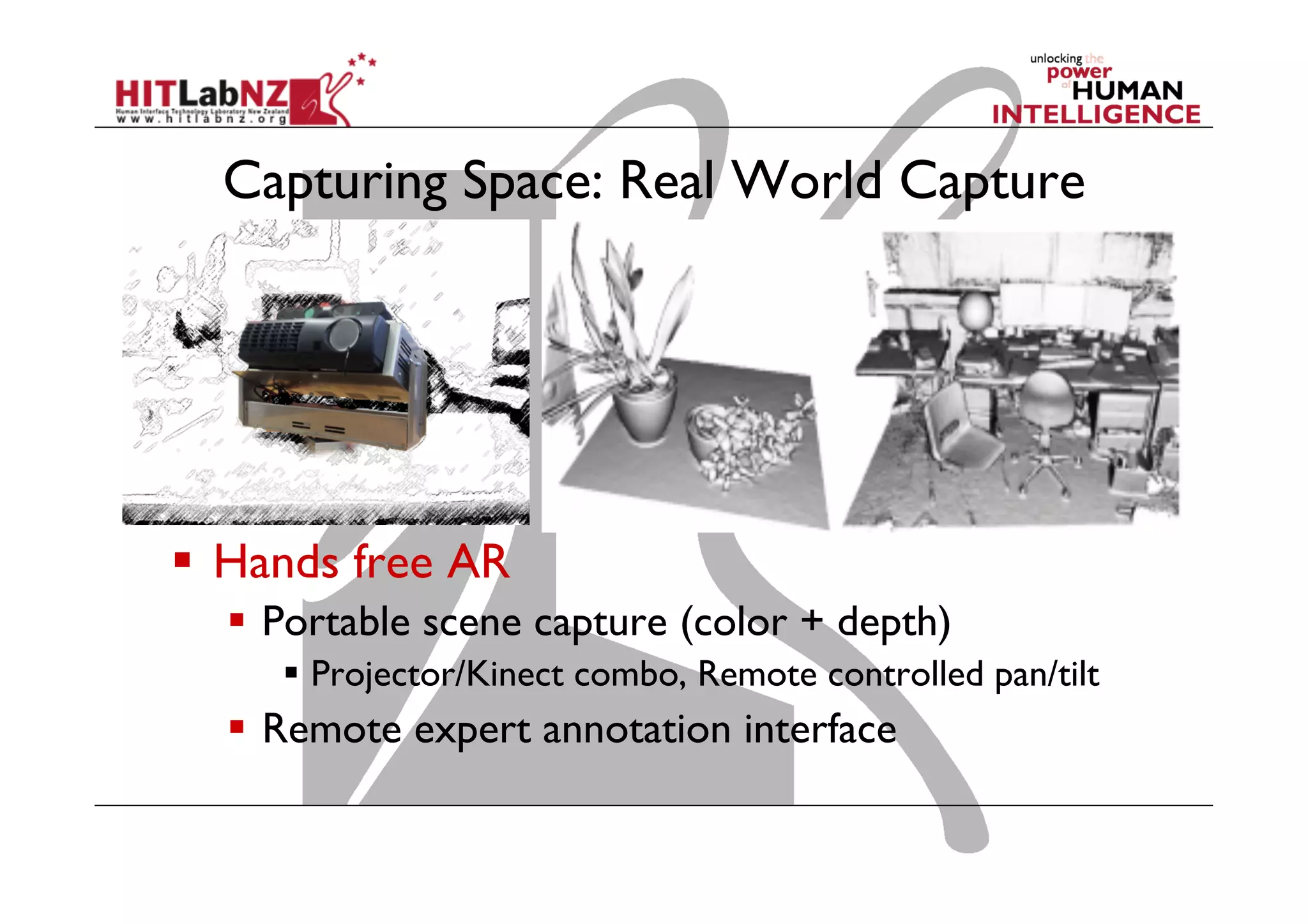

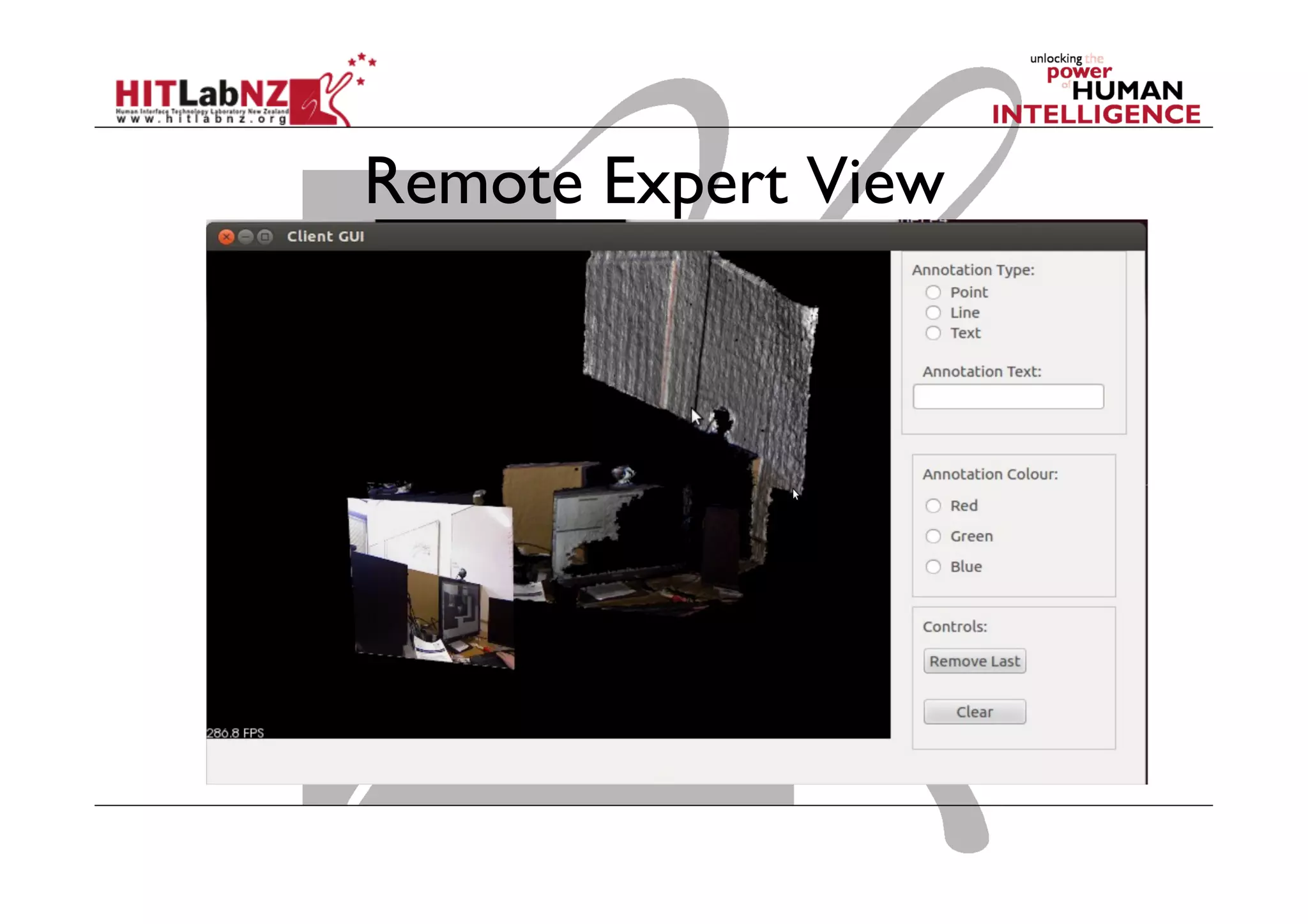

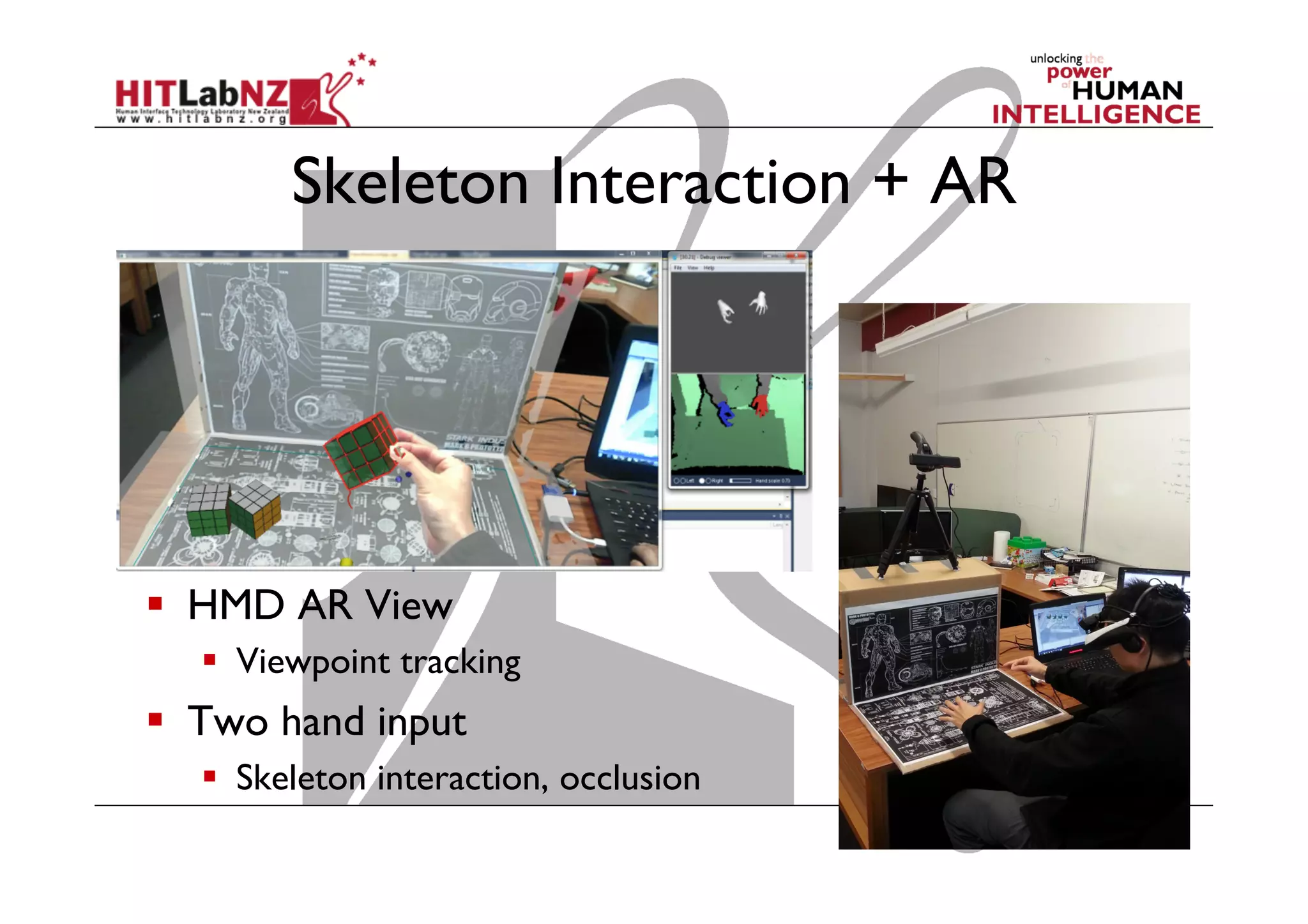

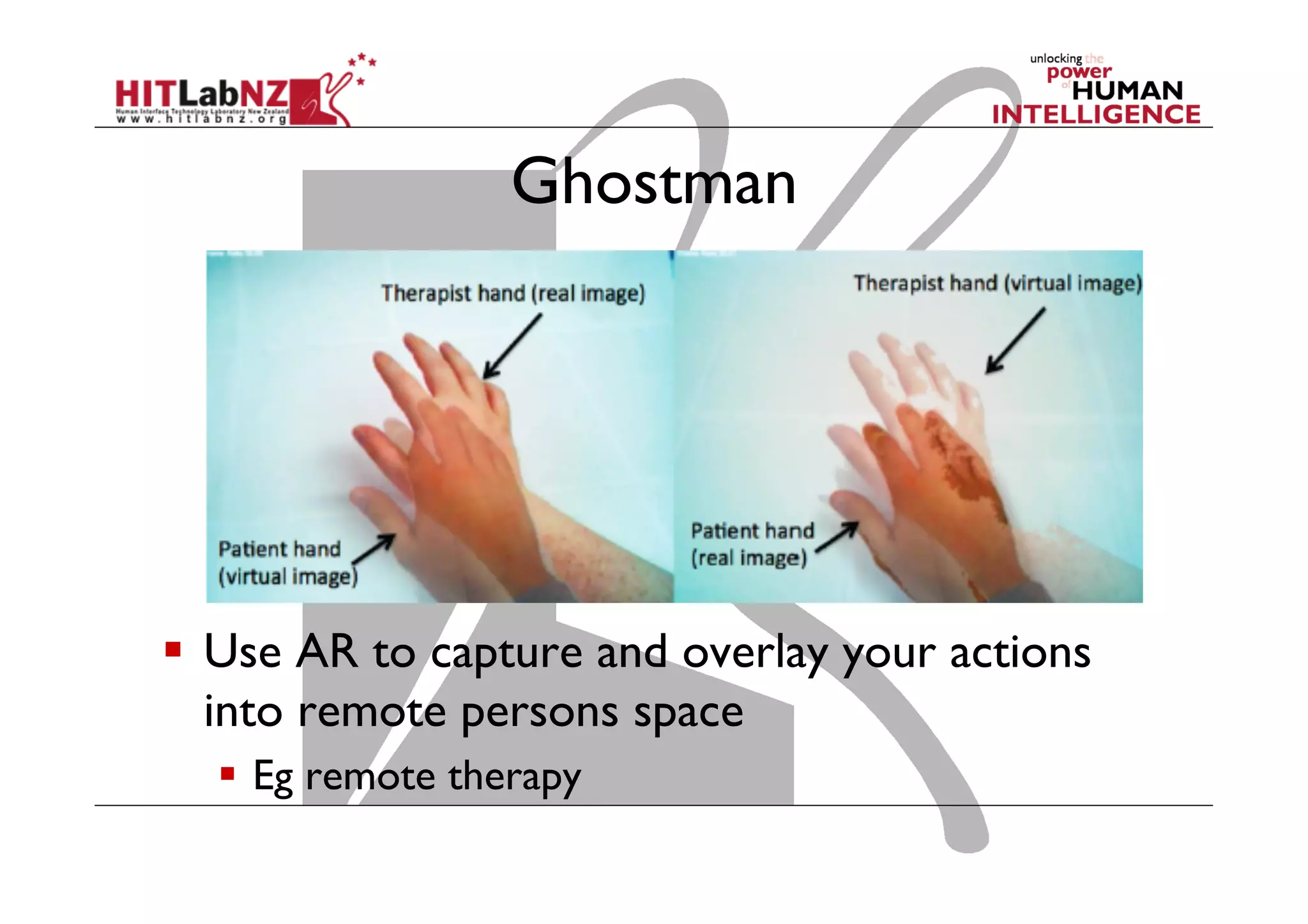

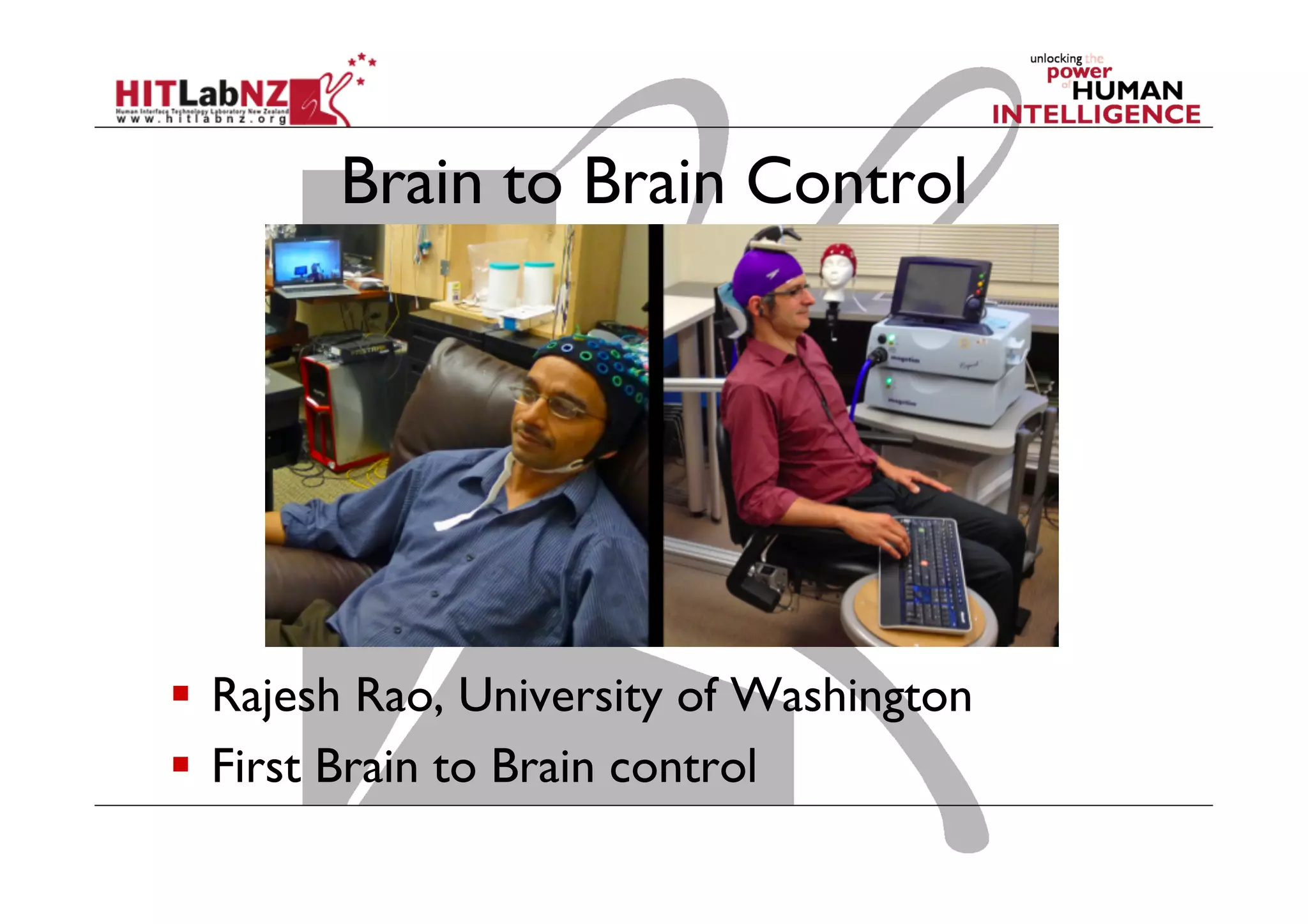

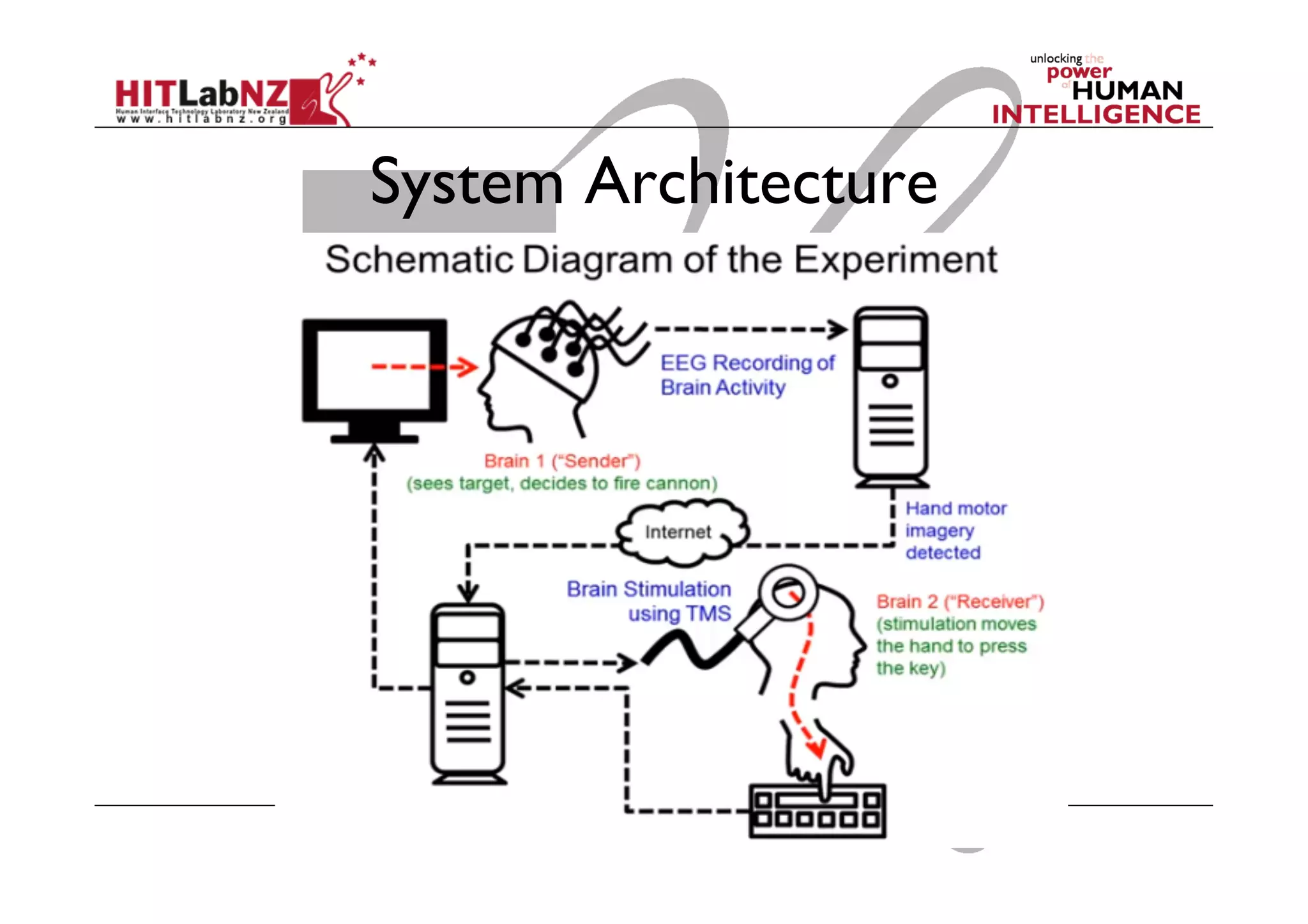

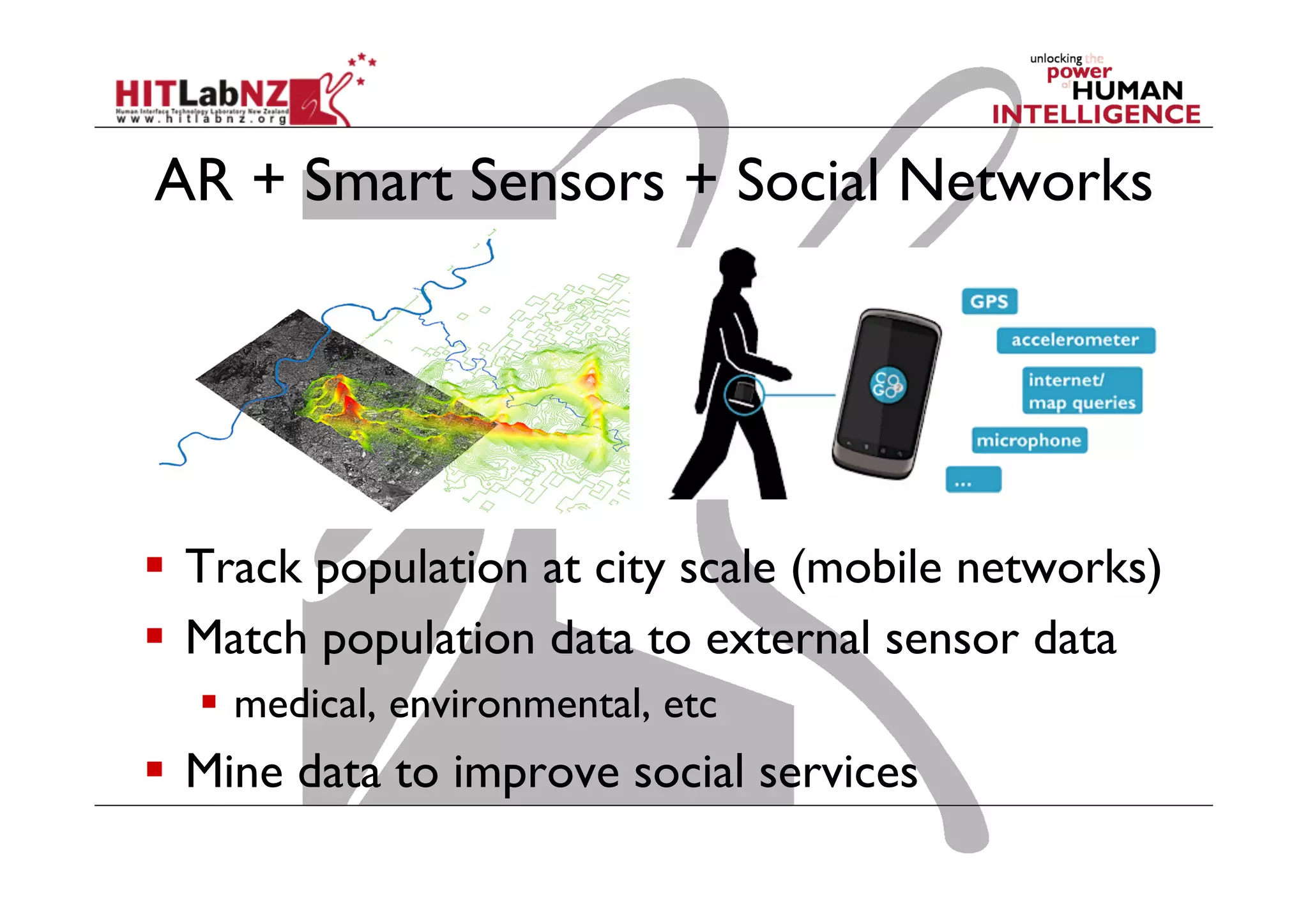

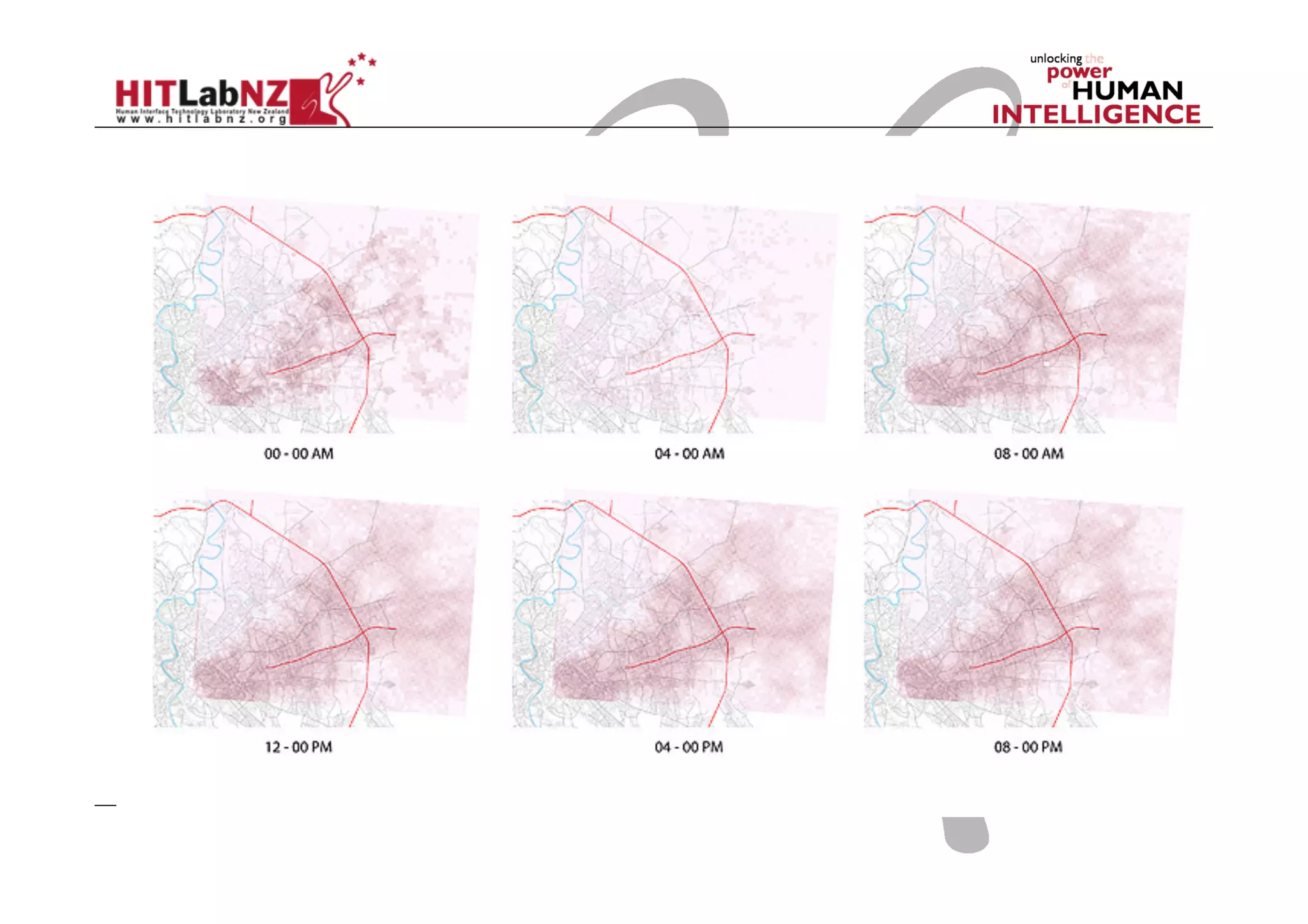

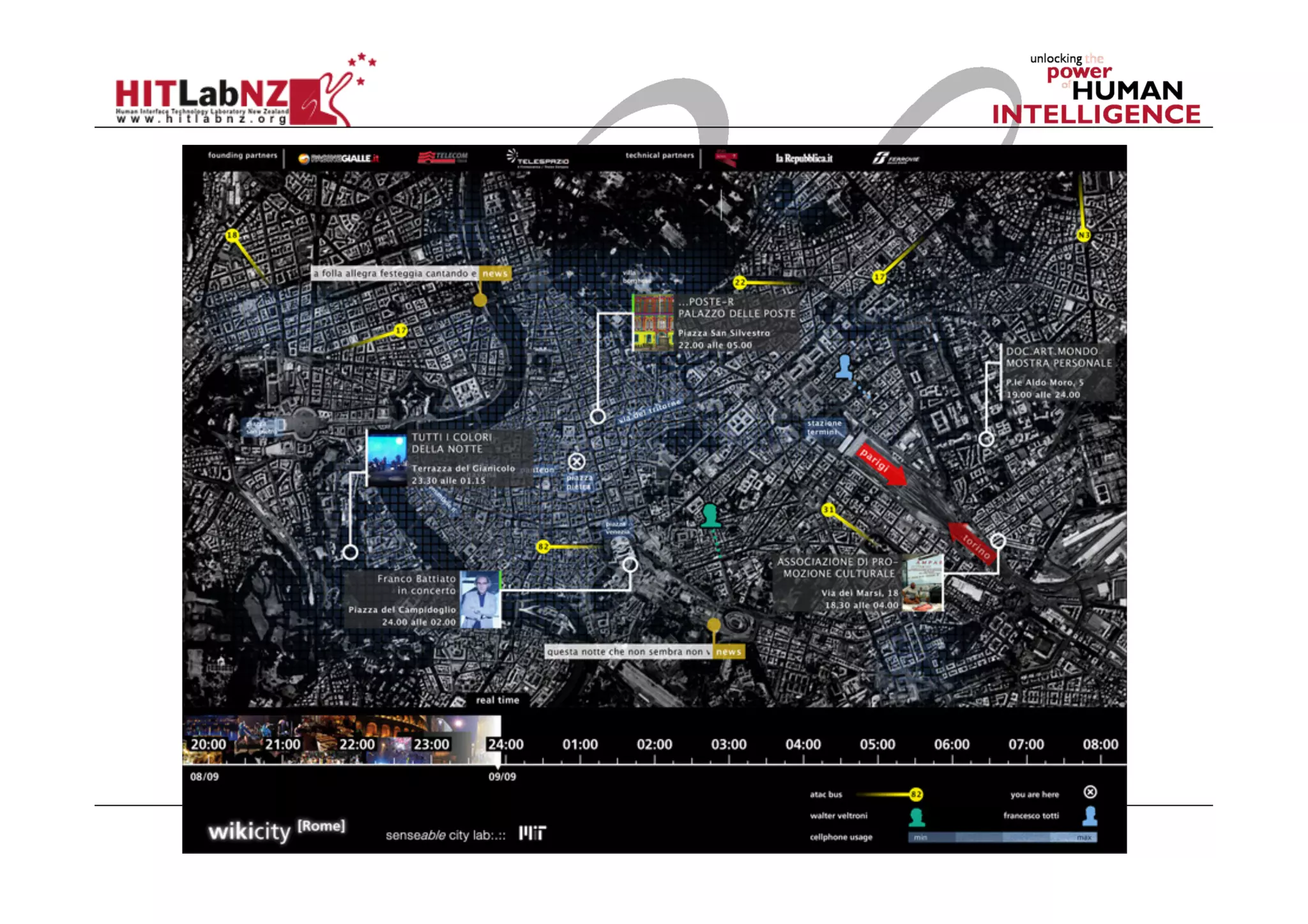

The document discusses how augmented reality (AR) can create empathic experiences and enhance communication by removing technological barriers and changing perspectives. It covers concepts such as emotional intelligence and empathic computing, along with applications of AR that include remote collaboration and social panoramas. The conclusion emphasizes that AR can facilitate shared experiences, which are essential for emotional connection, and identifies scaling these applications as a direction for future research.