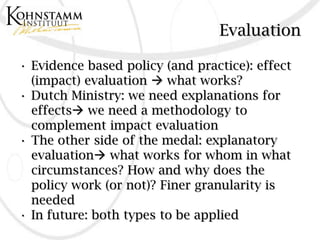

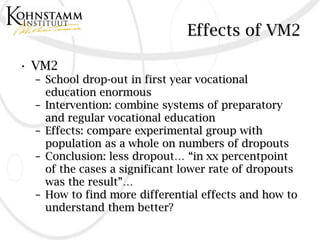

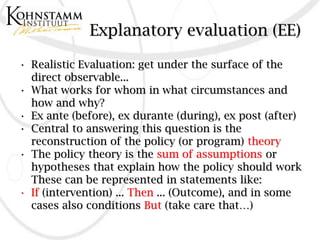

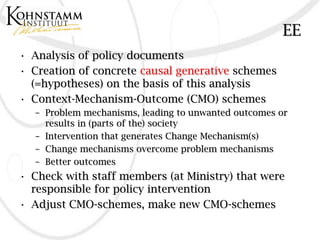

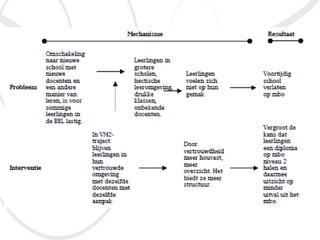

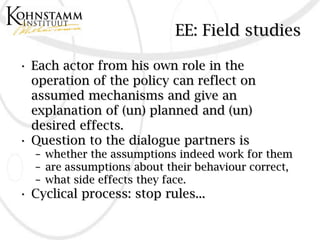

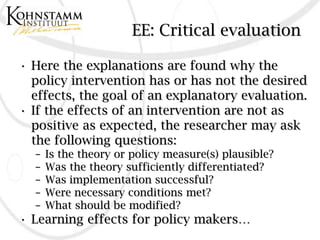

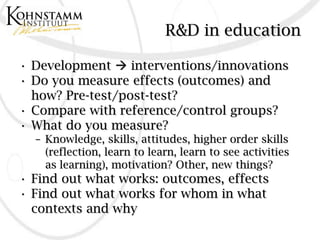

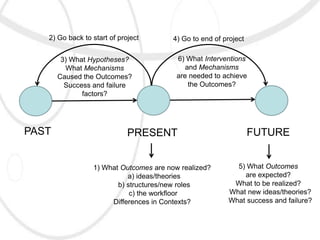

This document presents explanatory evaluation as a methodology for better understanding the effects of policy interventions. Explanatory evaluation aims to determine how and why interventions work (or fail to), offering a finer level of detail than traditional impact evaluations. It involves three steps: reconstructing the theory underlying the intervention, developing hypotheses about the mechanisms of change, and testing those hypotheses through field studies with different stakeholders. The goal is to learn what works, for whom, and in which contexts, so that the findings can inform future policy. The methodology is proposed as a complement to impact evaluations, one that gives policymakers greater explanatory power.