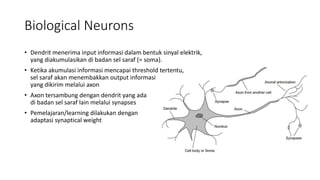

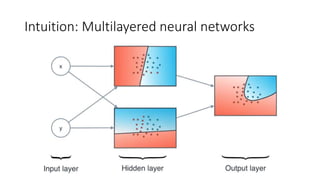

The document introduces the fundamentals of artificial neural networks (ANNs) by comparing them with biological neural networks, covering neuron structure, learning processes, and the role of activation functions. It explains the McCulloch-Pitts processing unit and introduces backpropagation and gradient descent as techniques for training multilayer networks. It also mentions several applications of neural networks, including face recognition and self-driving cars.
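
As a rough illustration of the McCulloch-Pitts processing unit mentioned above, the sketch below models a unit that sums its weighted binary inputs and fires when the sum reaches a threshold. The function names (`mcculloch_pitts`, `logical_and`, `logical_or`) and the specific weights and thresholds are assumptions chosen for illustration; they are not taken from the document.

```python
def mcculloch_pitts(inputs, weights, threshold):
    """Threshold unit: output 1 if the weighted input sum reaches the threshold, else 0.

    All names and parameter choices here are illustrative assumptions.
    """
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def logical_and(a, b):
    # With unit weights, both inputs must be 1 to reach a threshold of 2.
    return mcculloch_pitts([a, b], [1, 1], threshold=2)


def logical_or(a, b):
    # With unit weights, a single active input is enough to reach a threshold of 1.
    return mcculloch_pitts([a, b], [1, 1], threshold=1)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", logical_and(a, b), "OR:", logical_or(a, b))
```

The step function used here acts as the unit's activation function. Gradient-based training methods such as backpropagation typically replace it with a differentiable activation, since a hard threshold has no useful gradient.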