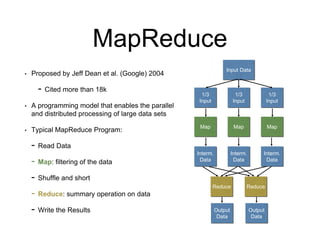

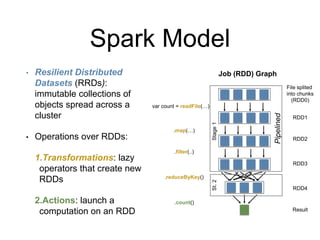

This document discusses distributed processing frameworks for big data. It introduces MapReduce, a programming model that enables parallel processing of large datasets across a cluster of machines. Although MapReduce was novel, it supported only batch processing and required every computation to be expressed as map and reduce operations. Spark was later proposed as an alternative to MapReduce: it represents a computation as a directed acyclic graph (DAG) of operations and can cache datasets in memory, which improves performance for workloads that reuse the same data. Both systems, however, introduced challenges in measuring and improving performance at scale.
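To make the map and reduce operations concrete, the following is a minimal single-process sketch of the classic word-count job, assuming the standard map → shuffle → reduce flow. It only simulates the model: the function names `map_phase`, `shuffle`, `reduce_phase`, and `word_count` are hypothetical, and a real MapReduce runtime would run the map calls on different cluster nodes and perform the shuffle over the network.

```python
from collections import defaultdict
from itertools import chain
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(document: str) -> Iterator[Tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one input record."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group intermediate values by key (done by the framework between phases)."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(documents: List[str]) -> Dict[str, int]:
    # On a cluster, each document (input split) could be mapped by a different worker.
    intermediate = chain.from_iterable(map_phase(doc) for doc in documents)
    grouped = shuffle(intermediate)
    # Each key is reduced independently, so reduce calls can also run in parallel.
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    docs = ["the quick brown fox", "the lazy dog", "The fox"]
    print(word_count(docs))
```

Running the script prints `{'the': 3, 'quick': 1, 'brown': 1, 'fox': 2, 'lazy': 1, 'dog': 1}`. In this sketch the intermediate pairs stay in ordinary Python objects; in a real MapReduce deployment, chaining several jobs means writing each job's output to storage and reading it back, which is the kind of repeated I/O that Spark's in-memory caching is meant to avoid.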