This document provides recommendations for improving performance in a Hadoop environment (HDFS, YARN, and MapReduce). It suggests:

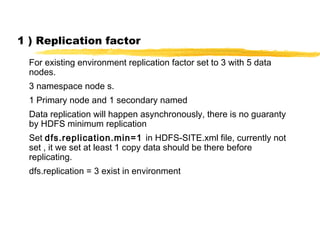

1. Increasing the HDFS replication factor (`dfs.replication`) above 3, the HDFS default, to improve data availability.
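As a rough illustration of the trade-off, the sketch below computes the raw disk usage and the number of replica losses each block can survive for a given replication factor. It assumes a simplified model in which every block is stored `replication` times in full; the function name `replication_footprint` and the 10 TB dataset size are hypothetical.

```python
def replication_footprint(logical_tb: float, replication: int) -> dict:
    """Simplified model: every HDFS block is stored `replication` times."""
    if replication < 1:
        raise ValueError("replication factor must be at least 1")
    return {
        # Raw cluster capacity consumed by the dataset.
        "raw_tb": logical_tb * replication,
        # A block stays readable while at least one of its replicas survives.
        "replica_losses_tolerated": replication - 1,
    }


# Hypothetical 10 TB dataset: compare the default factor 3 with a higher one.
for r in (3, 4):
    print(r, replication_footprint(10.0, r))
```

Each extra replica buys tolerance for one more simultaneous replica loss per block, at the cost of one more full copy of the data.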

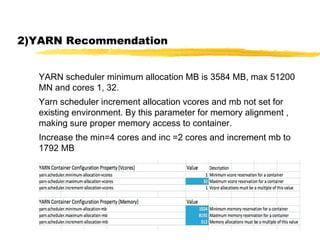

2. Tuning the YARN scheduler's minimum and maximum container allocation (`yarn.scheduler.minimum-allocation-mb` and `yarn.scheduler.maximum-allocation-mb`) to improve memory utilization.
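To show why the minimum allocation matters for memory utilization, here is a minimal sketch of how a container memory request is sized. It assumes CapacityScheduler-style behaviour: requests above the maximum are rejected, and other requests are rounded up to a multiple of the minimum allocation. The node size (what `yarn.nodemanager.resource.memory-mb` would be set to) and the request sizes are hypothetical, and the helper names are illustrative only.

```python
import math


def normalize_request(request_mb: int, min_mb: int, max_mb: int) -> int:
    """Approximate the memory a YARN scheduler grants for one container request."""
    if request_mb > max_mb:
        # Assumed behaviour: oversized requests are rejected outright.
        raise ValueError(f"{request_mb} MB exceeds maximum allocation {max_mb} MB")
    request_mb = max(request_mb, min_mb)
    # Round up to the next multiple of the minimum allocation, capped at the maximum.
    return min(math.ceil(request_mb / min_mb) * min_mb, max_mb)


def containers_per_node(node_mb: int, request_mb: int, min_mb: int, max_mb: int) -> int:
    """How many identical containers fit in one NodeManager's memory."""
    return node_mb // normalize_request(request_mb, min_mb, max_mb)


# Hypothetical 64 GB node running tasks that each ask for 1100 MB.
for min_mb in (1024, 512):
    granted = normalize_request(1100, min_mb, 8192)
    print(min_mb, granted, containers_per_node(65536, 1100, min_mb, 8192))
```

With a 1024 MB minimum, each 1100 MB request is granted 2048 MB, so almost half of every container goes unused; a smaller minimum lets more containers fit on the same node.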

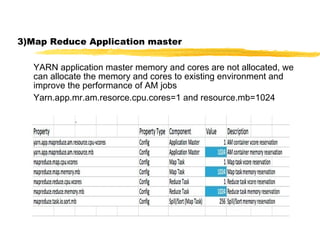

3. Allocating memory and CPU cores to the MapReduce ApplicationMaster (`yarn.app.mapreduce.am.resource.mb` and `yarn.app.mapreduce.am.resource.cpu-vcores`) to improve job performance.

4. Enabling JVM reuse so that one JVM runs several tasks in sequence, reducing the overhead of starting a new JVM for every task.
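The two MapReduce-level settings above can be sketched in Python. `am_properties` builds the ApplicationMaster properties as a `mapred-site.xml`-style fragment, assuming a rule-of-thumb JVM heap of about 80% of the container size (a heuristic, not a Hadoop default). `jvm_launches` models JVM reuse as ceil(tasks / tasks_per_jvm) JVM start-ups. The container size, vcore count, task counts, and helper names are hypothetical, and whether a given Hadoop version honors JVM reuse should be checked against its documentation.

```python
import math
import xml.etree.ElementTree as ET


def am_properties(container_mb: int, vcores: int, heap_ratio: float = 0.8) -> dict:
    """ApplicationMaster settings; heap_ratio is an assumed rule of thumb."""
    # Keep the heap below the container size to leave room for non-heap JVM memory.
    heap_mb = int(container_mb * heap_ratio)
    return {
        "yarn.app.mapreduce.am.resource.mb": str(container_mb),
        "yarn.app.mapreduce.am.resource.cpu-vcores": str(vcores),
        "yarn.app.mapreduce.am.command-opts": f"-Xmx{heap_mb}m",
    }


def to_hadoop_xml(props: dict) -> str:
    """Render properties in Hadoop's <configuration><property> layout."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


def jvm_launches(num_tasks: int, tasks_per_jvm: int) -> int:
    """JVM start-ups needed when each JVM runs up to tasks_per_jvm tasks."""
    return math.ceil(num_tasks / tasks_per_jvm)


# Hypothetical AM container: 4096 MB and 2 vcores.
print(to_hadoop_xml(am_properties(4096, 2)))
# Hypothetical job with 1000 tasks: no reuse vs. 10 tasks per JVM.
print(jvm_launches(1000, 1), jvm_launches(1000, 10))
```

Under this model, reusing each JVM for 10 tasks cuts JVM start-ups by a factor of 10, so the saving grows with the number of short tasks in a job.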

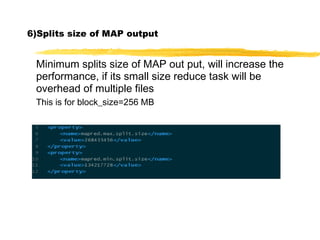

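5. Increasing the minimum input split size (`mapreduce.input.fileinputformat.split.minsize`) so that each map task reads more data, which reduces the number of map tasks and the overhead of handling many small map output files.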

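6. Increasing the HDFS block size (`dfs.blocksize`) from 128 MB to 256 MB, which roughly halves the number of blocks (and, by default, the number of input splits and map tasks) for large files and can improve job performance on large datasets.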
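Items 5 and 6 interact through how Hadoop's `FileInputFormat` sizes splits: the split size is max(minSize, min(maxSize, blockSize)), and a file is cut into splits of that size, with the last split allowed to be up to 10% larger. The sketch below follows that logic for a single splittable, non-empty file. It is a simplified model, not Hadoop's code, and the 10 GB file size is hypothetical.

```python
import math

MiB = 1024 * 1024
GiB = 1024 * MiB
LONG_MAX = 2**63 - 1  # default maximum split size (Java Long.MAX_VALUE)
SPLIT_SLOP = 1.1      # last split may be up to 10% larger than split_size


def compute_split_size(block_size: int, min_size: int, max_size: int) -> int:
    # Same formula as FileInputFormat.computeSplitSize.
    return max(min_size, min(max_size, block_size))


def count_splits(file_size: int, split_size: int) -> int:
    # Cut full-size splits while the remainder is more than 1.1 split sizes.
    splits, remaining = 0, file_size
    while remaining / split_size > SPLIT_SLOP:
        splits += 1
        remaining -= split_size
    if remaining > 0:
        splits += 1  # final, possibly slightly larger, split
    return splits


def count_blocks(file_size: int, block_size: int) -> int:
    return math.ceil(file_size / block_size)


# Hypothetical 10 GB file under three configurations.
for block, min_split in ((128 * MiB, 1), (256 * MiB, 1), (128 * MiB, 512 * MiB)):
    split = compute_split_size(block, min_split, LONG_MAX)
    print(block // MiB, min_split // MiB, count_blocks(10 * GiB, block),
          count_splits(10 * GiB, split))
```

Doubling the block size halves both the block count and the default number of map tasks, while raising the minimum split size above the block size reduces map tasks without changing how the data is stored.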