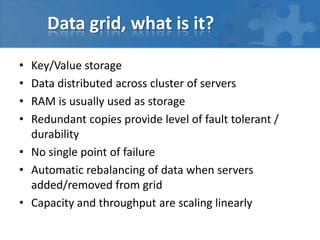

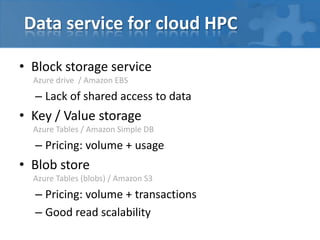

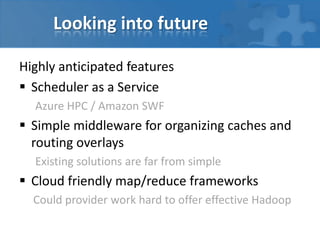

This document discusses high-performance computing in the cloud. It covers several workload types: I/O-bound, CPU-bound, and latency-bound tasks. It also discusses strategies for handling task streams and structured batch jobs in the cloud, and examines specific challenges in data delivery and distribution along with approaches such as data grids, caching, and task stealing. It then looks at how MapReduce frameworks could be better optimized for the cloud model. Finally, it considers overall trends and anticipated future features, such as schedulers offered as a service and cloud-friendly data processing frameworks.
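Task stealing is listed above as one way to keep workers busy when a task stream is unevenly distributed. The following is a minimal single-process sketch of the general idea, not the document's own scheduler. It assumes that each worker owns a double-ended queue, runs its own tasks from the back, and, when idle, steals from the front of the longest other queue. The function name `run_with_stealing`, the round-based timing, and the "longest queue" victim policy are all hypothetical choices made for illustration.

```python
from collections import deque


def run_with_stealing(task_lists):
    """Simulate round-based execution across workers with task stealing.

    Hypothetical model: in each round, every worker runs one task. A worker
    with local work pops from the back of its own deque; an idle worker
    steals from the front of the currently longest deque.
    Returns (per-worker list of executed tasks, number of rounds).
    """
    queues = [deque(tasks) for tasks in task_lists]
    executed = [[] for _ in queues]
    rounds = 0
    while any(queues):
        rounds += 1
        for worker, queue in enumerate(queues):
            if queue:
                # Owner takes its most recently queued task (back of the deque).
                executed[worker].append(queue.pop())
            else:
                # Idle worker picks the busiest victim and steals its oldest task.
                victim = max(range(len(queues)), key=lambda v: len(queues[v]))
                if queues[victim]:
                    executed[worker].append(queues[victim].popleft())
    return executed, rounds


if __name__ == "__main__":
    # All six tasks start on worker 0; workers 1 and 2 begin idle.
    executed, rounds = run_with_stealing([[1, 2, 3, 4, 5, 6], [], []])
    print(executed, rounds)
```

In this example all six tasks start on one worker, yet stealing spreads them across three workers so the batch finishes in 2 rounds instead of 6. Taking from opposite ends of the deque is a common design choice because it reduces contention between the owner and the thieves.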