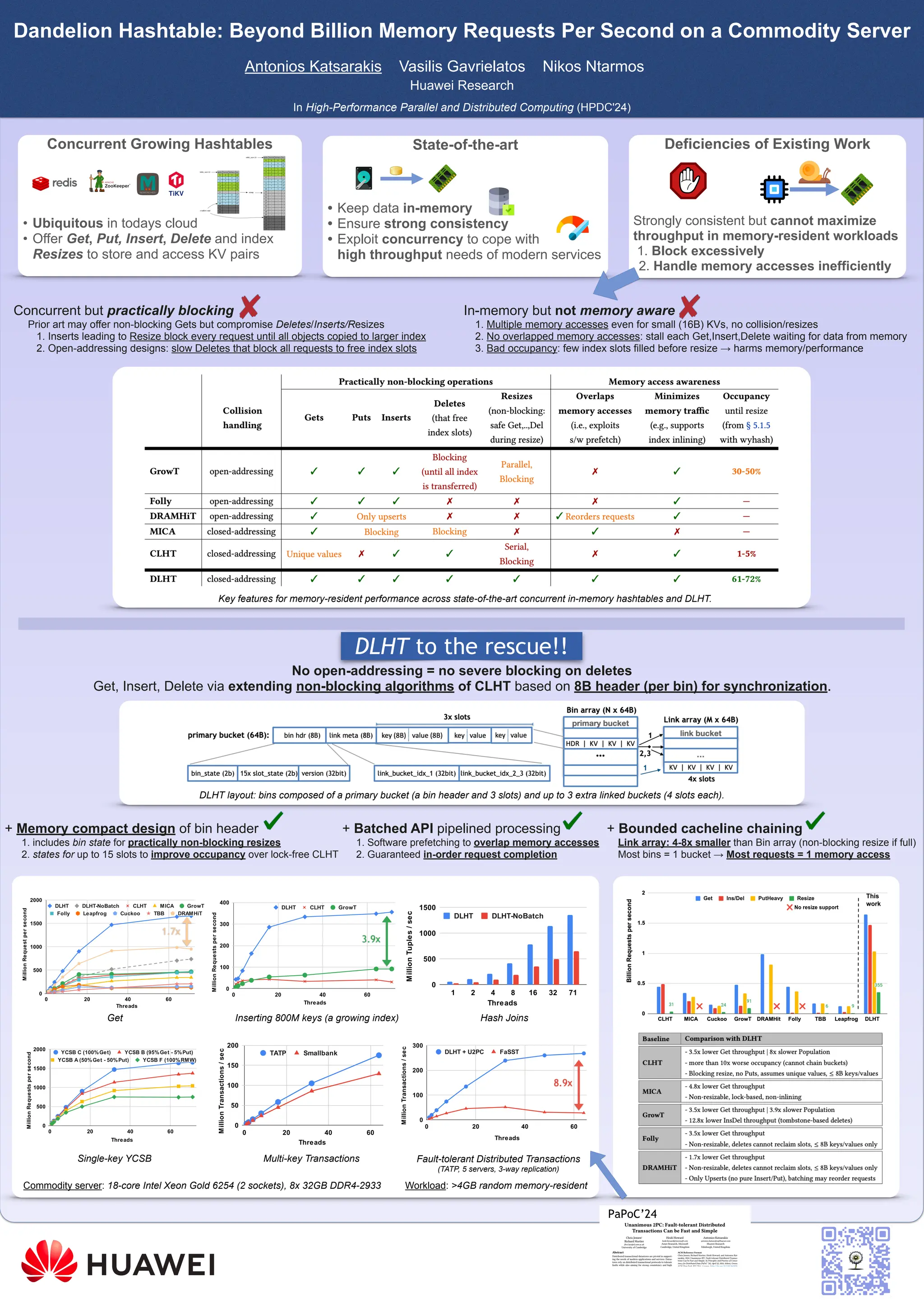

The document examines how existing high-performance concurrent hashtables fall short in handling memory accesses, particularly for in-memory workloads, and introduces the Dandelion Hashtable (DLHT) to address those shortcomings. DLHT improves on traditional designs with non-blocking operations, optimized memory access patterns, and efficient resizing, and it sustains over a billion requests per second on a commodity server. Its key features are a compact design, memory prefetching, and improved occupancy, which together yield notable performance gains over existing concurrent hashtables.
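To make the memory prefetching idea concrete, the following is a minimal single-threaded sketch of a batched lookup that issues prefetches for every key in a batch before probing any of them, so that the cache misses can overlap. It is not DLHT's implementation: the table layout, the names `slot_t`, `hash_index`, `table_insert`, `table_lookup`, and `batch_lookup`, the table size, and the use of linear probing are all assumptions made for illustration, and the sketch omits the non-blocking concurrency and resizing described above. It relies on the GCC/Clang builtin `__builtin_prefetch`.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical parameters: a small fixed-size table, power-of-two sized. */
#define NUM_SLOTS 1024u
#define EMPTY_KEY 0u            /* key 0 is reserved to mark an empty slot */

_Static_assert((NUM_SLOTS & (NUM_SLOTS - 1)) == 0, "NUM_SLOTS must be a power of two");

/* Compact 16-byte slot: four slots share one 64-byte cache line. */
typedef struct {
    uint64_t key;
    uint64_t value;
} slot_t;

static slot_t table[NUM_SLOTS];

/* Mix the key bits and map the result to a slot index. */
static size_t hash_index(uint64_t key) {
    key ^= key >> 33;
    key *= 0xff51afd7ed558ccdULL;
    key ^= key >> 33;
    return (size_t)(key & (NUM_SLOTS - 1));
}

/* Insert or overwrite with linear probing; returns 1 on success, 0 if full. */
static int table_insert(uint64_t key, uint64_t value) {
    assert(key != EMPTY_KEY);
    size_t idx = hash_index(key);
    for (size_t probe = 0; probe < NUM_SLOTS; probe++) {
        slot_t *s = &table[(idx + probe) & (NUM_SLOTS - 1)];
        if (s->key == EMPTY_KEY || s->key == key) {
            s->key = key;
            s->value = value;
            return 1;
        }
    }
    return 0;
}

/* Look up one key; returns 1 and writes *out on a hit, 0 on a miss. */
static int table_lookup(uint64_t key, uint64_t *out) {
    size_t idx = hash_index(key);
    for (size_t probe = 0; probe < NUM_SLOTS; probe++) {
        const slot_t *s = &table[(idx + probe) & (NUM_SLOTS - 1)];
        if (s->key == EMPTY_KEY) {
            return 0;           /* reached an empty slot: key is absent */
        }
        if (s->key == key) {
            *out = s->value;
            return 1;
        }
    }
    return 0;
}

/* Batched lookup: prefetch the first slot of every key, then probe.
 * Returns the number of keys found. */
static size_t batch_lookup(const uint64_t *keys, uint64_t *values,
                           int *found, size_t n) {
    /* Pass 1: request the cache lines without waiting for them. */
    for (size_t i = 0; i < n; i++) {
        __builtin_prefetch(&table[hash_index(keys[i])], 0 /* read */, 3);
    }
    /* Pass 2: do the lookups; the lines are likely already in flight. */
    size_t hits = 0;
    for (size_t i = 0; i < n; i++) {
        found[i] = table_lookup(keys[i], &values[i]);
        hits += (size_t)found[i];
    }
    return hits;
}
```

A caller would fill the table with `table_insert`, collect a batch of keys, and pass them to `batch_lookup` together with output arrays for the values and hit flags. The two-pass structure is the point of the sketch: issuing all prefetches first lets several memory accesses proceed in parallel instead of stalling on each miss in turn.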