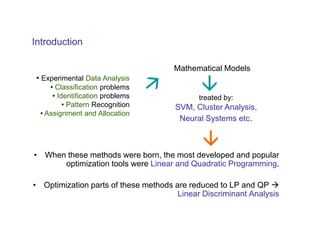

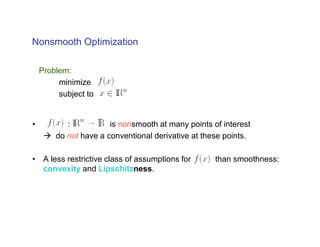

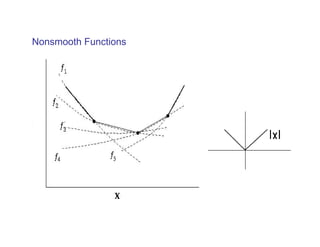

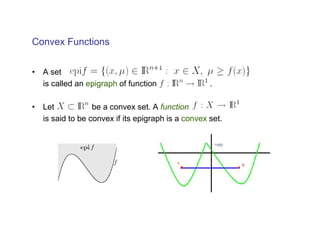

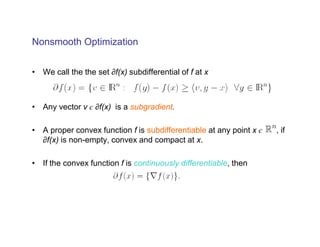

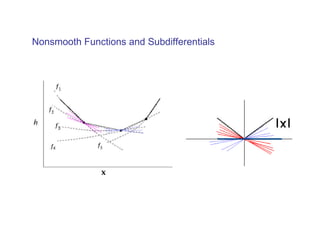

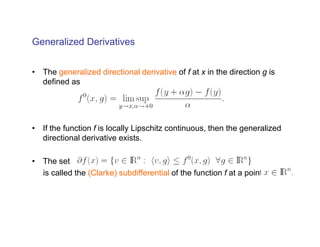

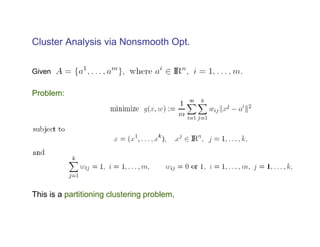

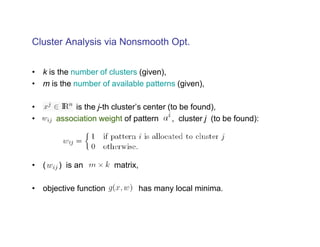

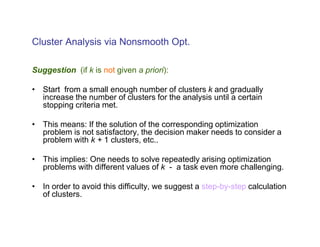

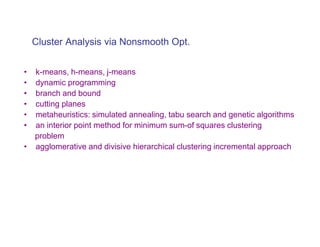

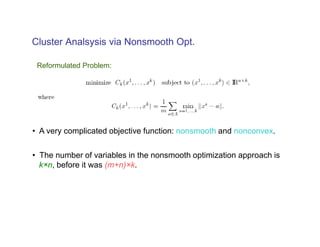

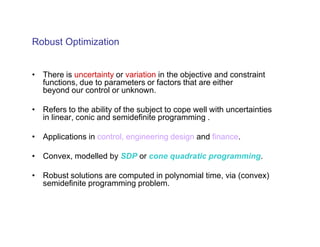

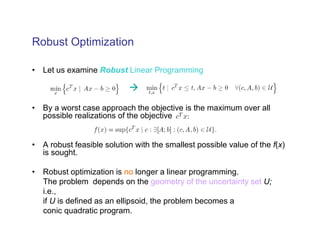

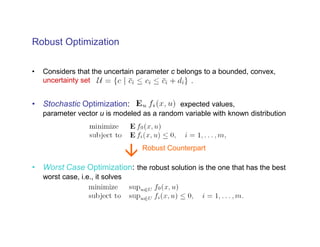

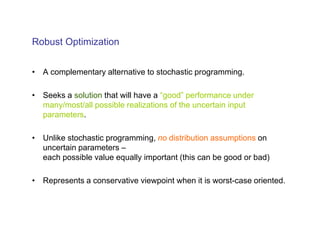

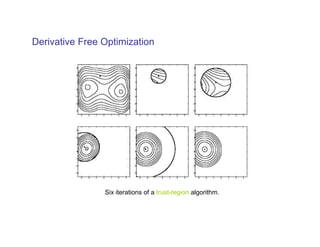

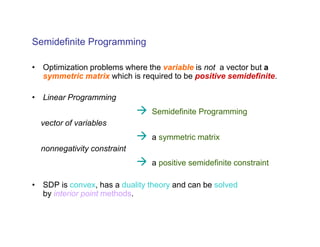

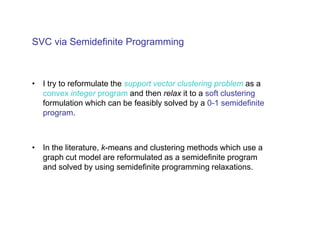

This document summarizes presentations on nonsmooth optimization, robust optimization, and derivative-free optimization given at the 4th International Summer School in Kiev, Ukraine. Gerhard Weber and Basak Akteke-Ozturk presented on nonsmooth optimization and its applications to clustering problems. Robust optimization approaches were discussed for problems whose parameters are uncertain. Derivative-free optimization, which minimizes a function without computing its derivatives, was also covered, with trust-region algorithms and interpolation-based models as examples. Semidefinite programming relaxations for support vector clustering were also mentioned.
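To make the idea of a derivative-free trust-region method with an interpolation model more concrete, here is a minimal sketch. It is not the algorithm from the presentations: the function name `dfo_trust_region`, the linear interpolation model, the acceptance thresholds (0.1 and 0.75), and the test function are all assumptions chosen for illustration. The model is fitted through the current point and one point per coordinate direction at distance `delta`, so only function values are used.

```python
import math


def dfo_trust_region(f, x0, delta=1.0, tol=1e-6, max_iter=10000):
    """Illustrative derivative-free trust-region loop (hypothetical helper).

    A linear model interpolates f at x and at x + delta * e_i for each
    coordinate i. No derivatives of f are evaluated.
    """
    x = list(x0)
    fx = f(x)
    n = len(x)
    for _ in range(max_iter):
        if delta < tol:
            break
        # Slope of the linear interpolation model along each coordinate.
        g = []
        for i in range(n):
            xi = x[:]
            xi[i] += delta
            g.append((f(xi) - fx) / delta)
        gnorm = math.sqrt(sum(gi * gi for gi in g))
        if gnorm == 0.0:
            # Flat model: shrink the region to get a finer model.
            delta *= 0.5
            continue
        # Minimizer of the linear model over the ball of radius delta.
        trial = [x[i] - delta * g[i] / gnorm for i in range(n)]
        f_trial = f(trial)
        predicted = delta * gnorm      # reduction promised by the model
        rho = (fx - f_trial) / predicted  # actual vs. predicted reduction
        if rho > 0.1:
            # Model was good enough: accept the step.
            x, fx = trial, f_trial
            if rho > 0.75:
                delta *= 2.0           # very good agreement: expand
        else:
            delta *= 0.5               # poor agreement: shrink and retry
    return x, fx


def example_objective(x):
    # Assumed test function with its minimum at (1, -2).
    return (x[0] - 1.0) ** 2 + (x[1] + 2.0) ** 2


x_best, f_best = dfo_trust_region(example_objective, [0.0, 0.0])
print(x_best, f_best)
```

Practical derivative-free solvers typically use quadratic interpolation models and manage the geometry of the interpolation set. The linear model here only keeps the sketch short.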