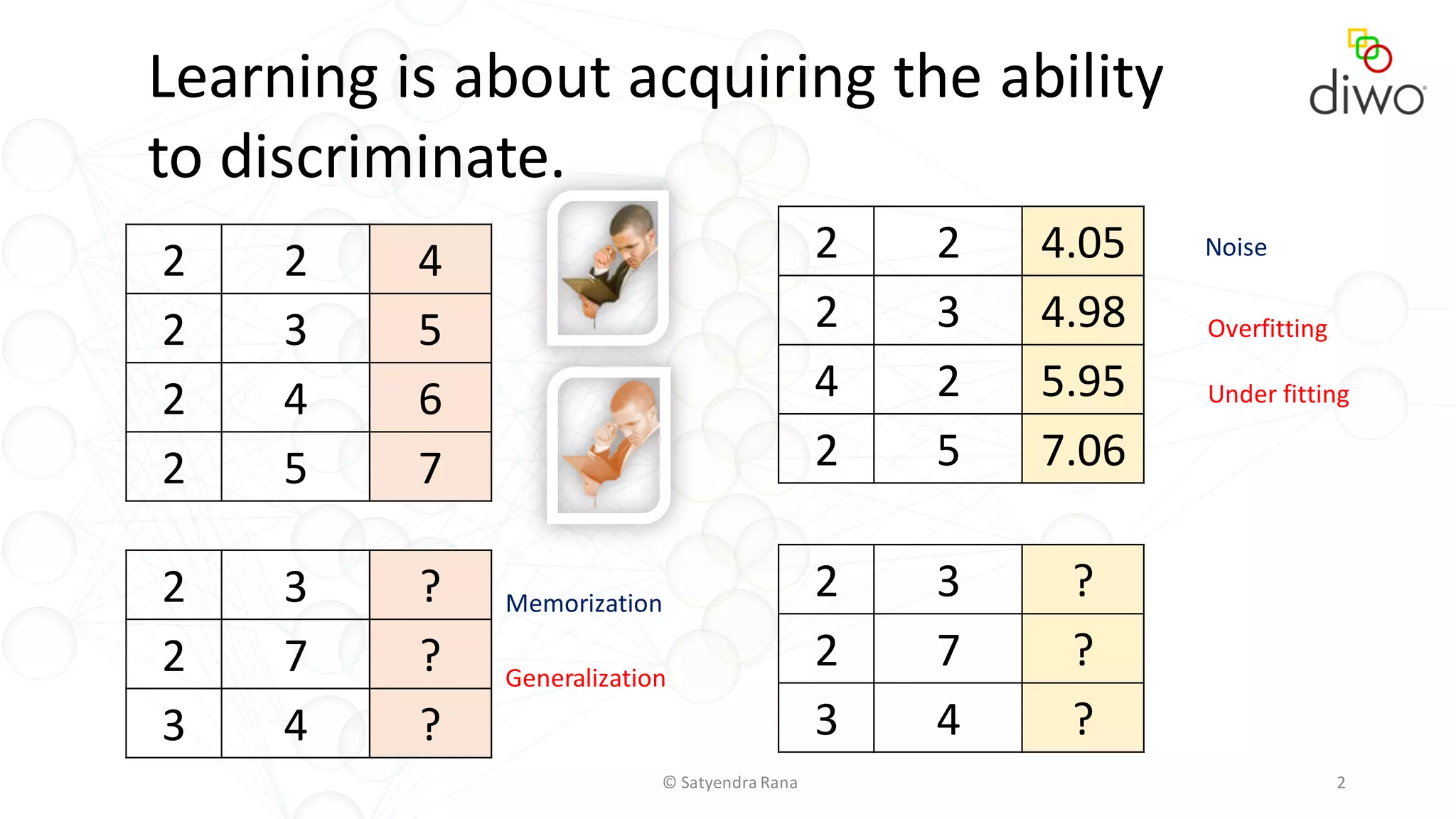

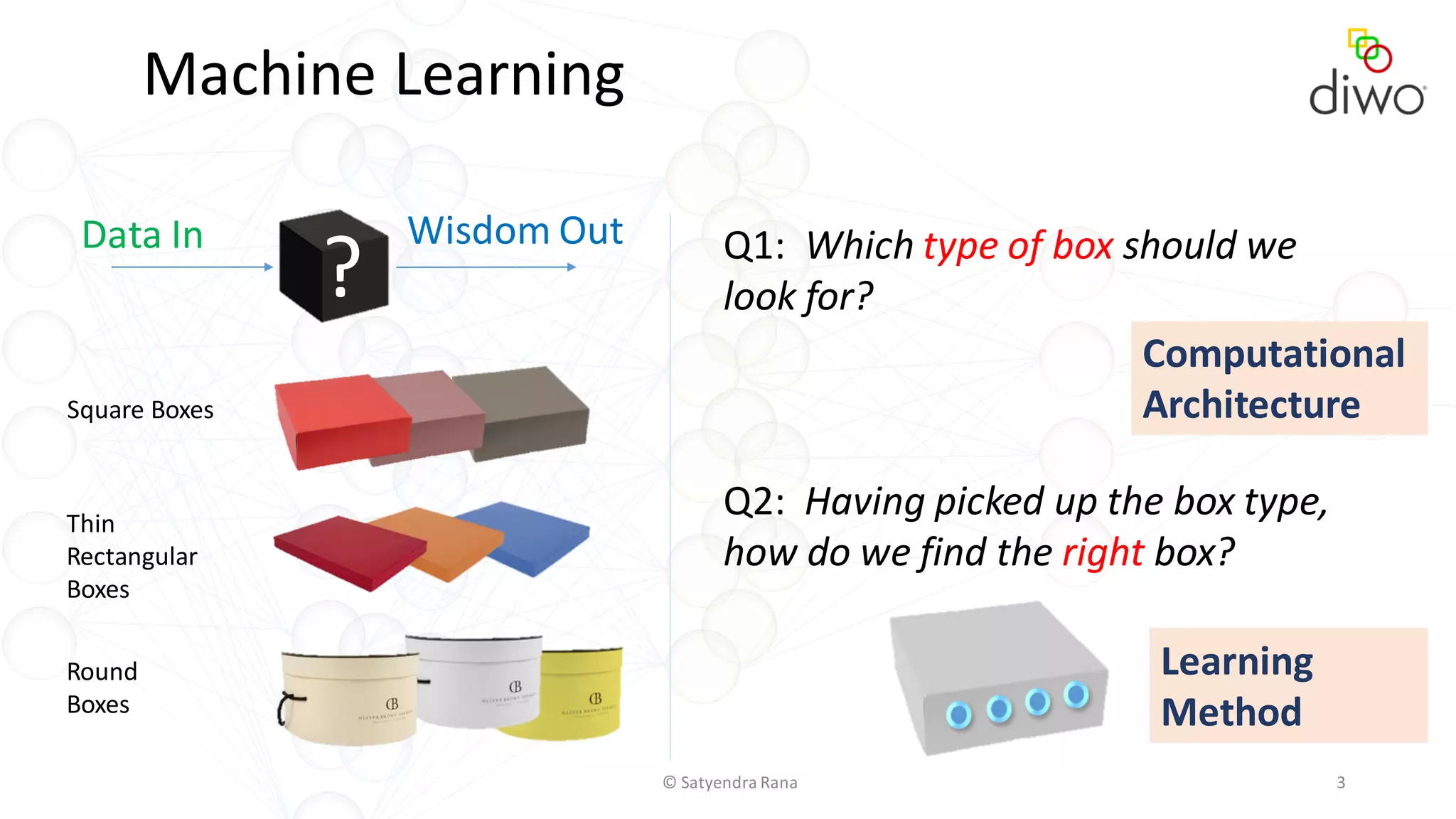

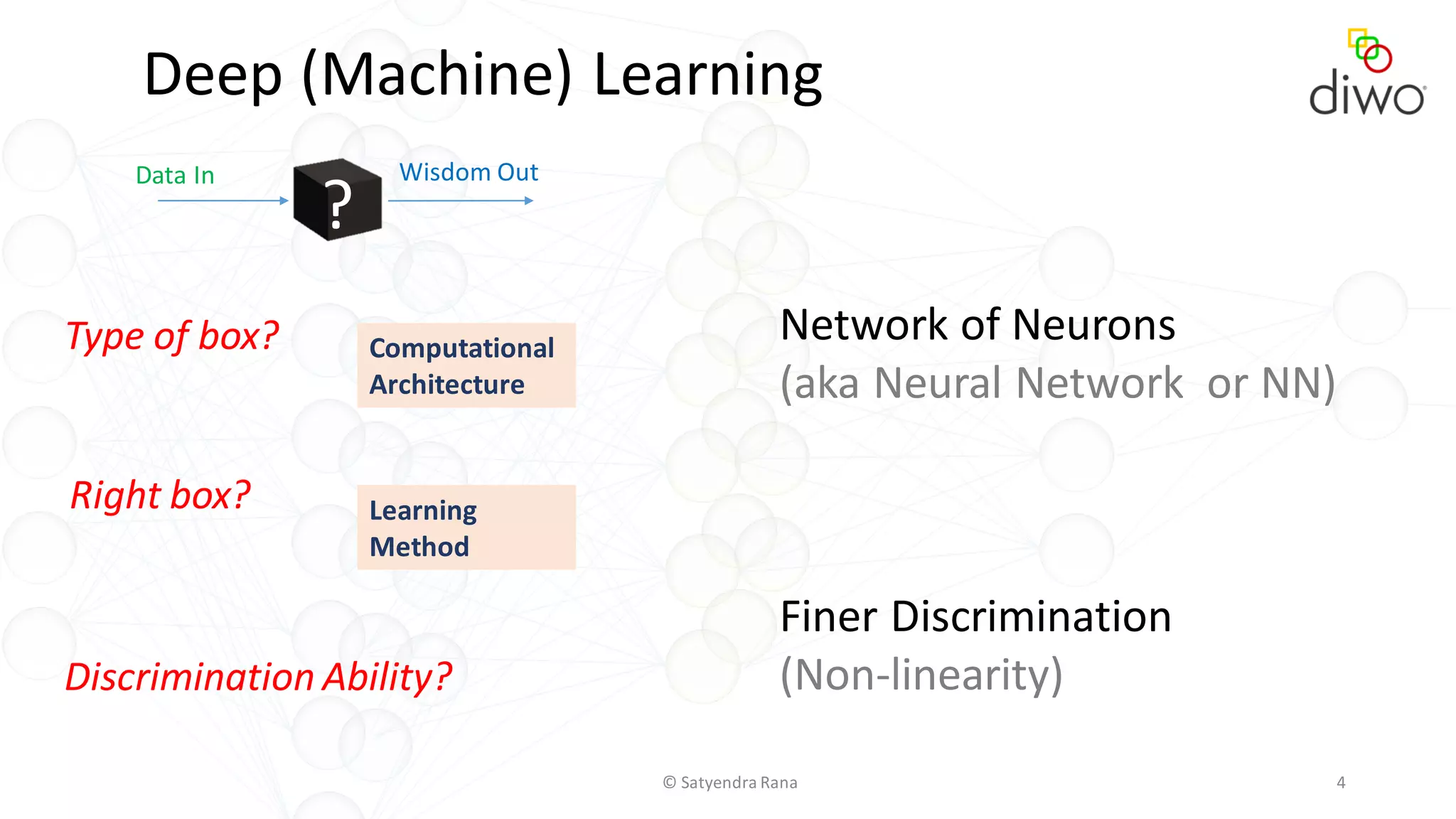

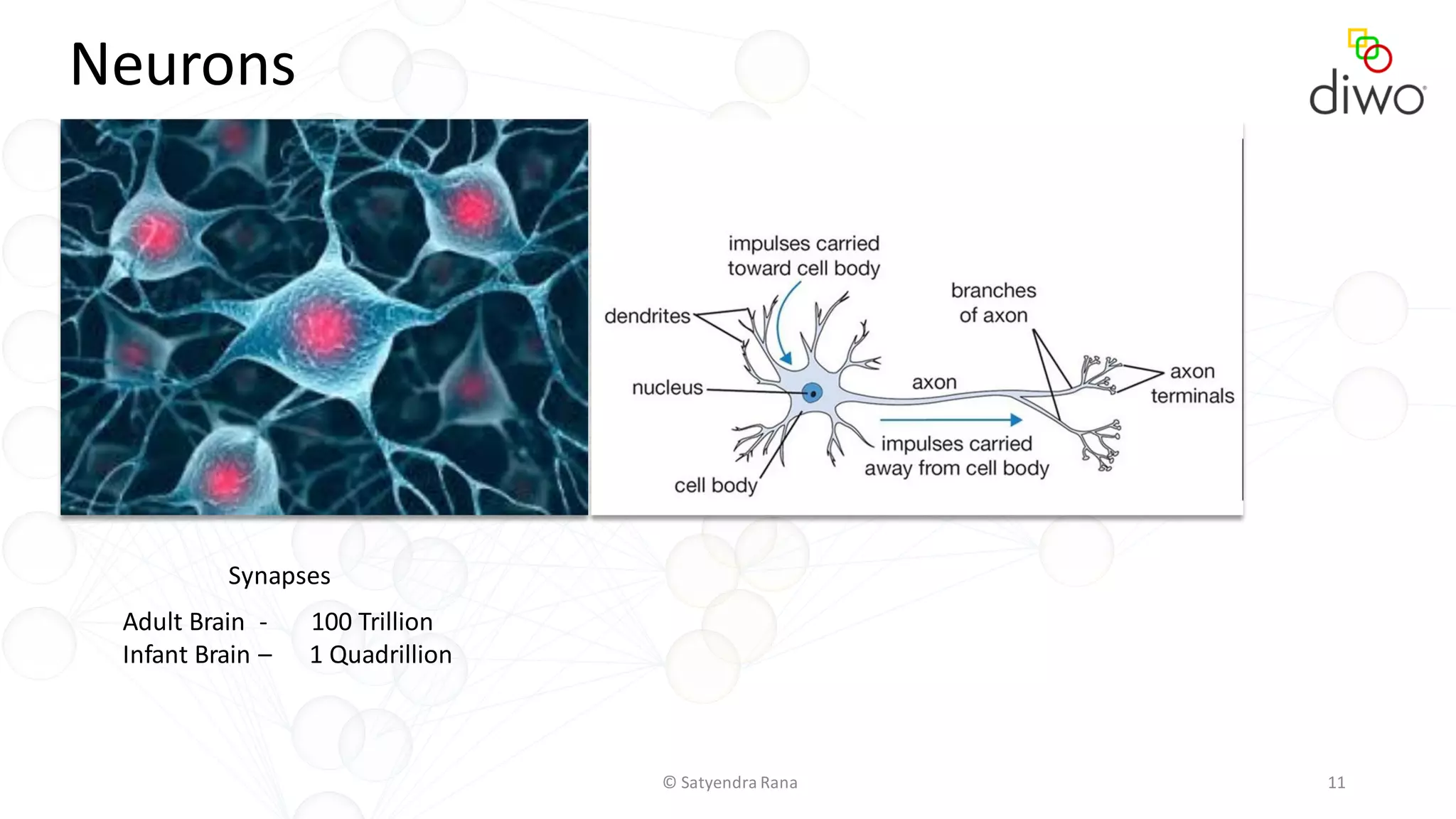

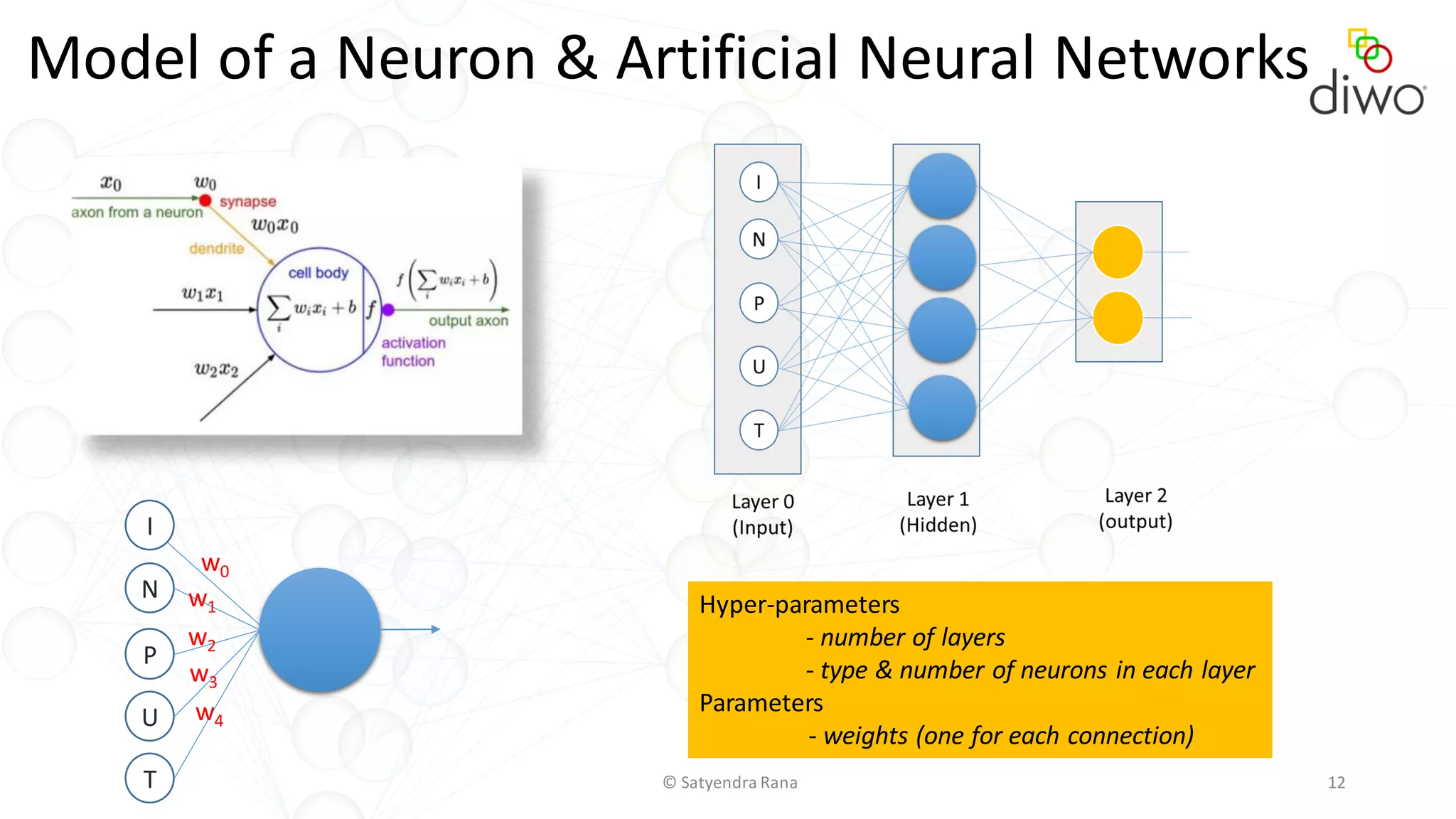

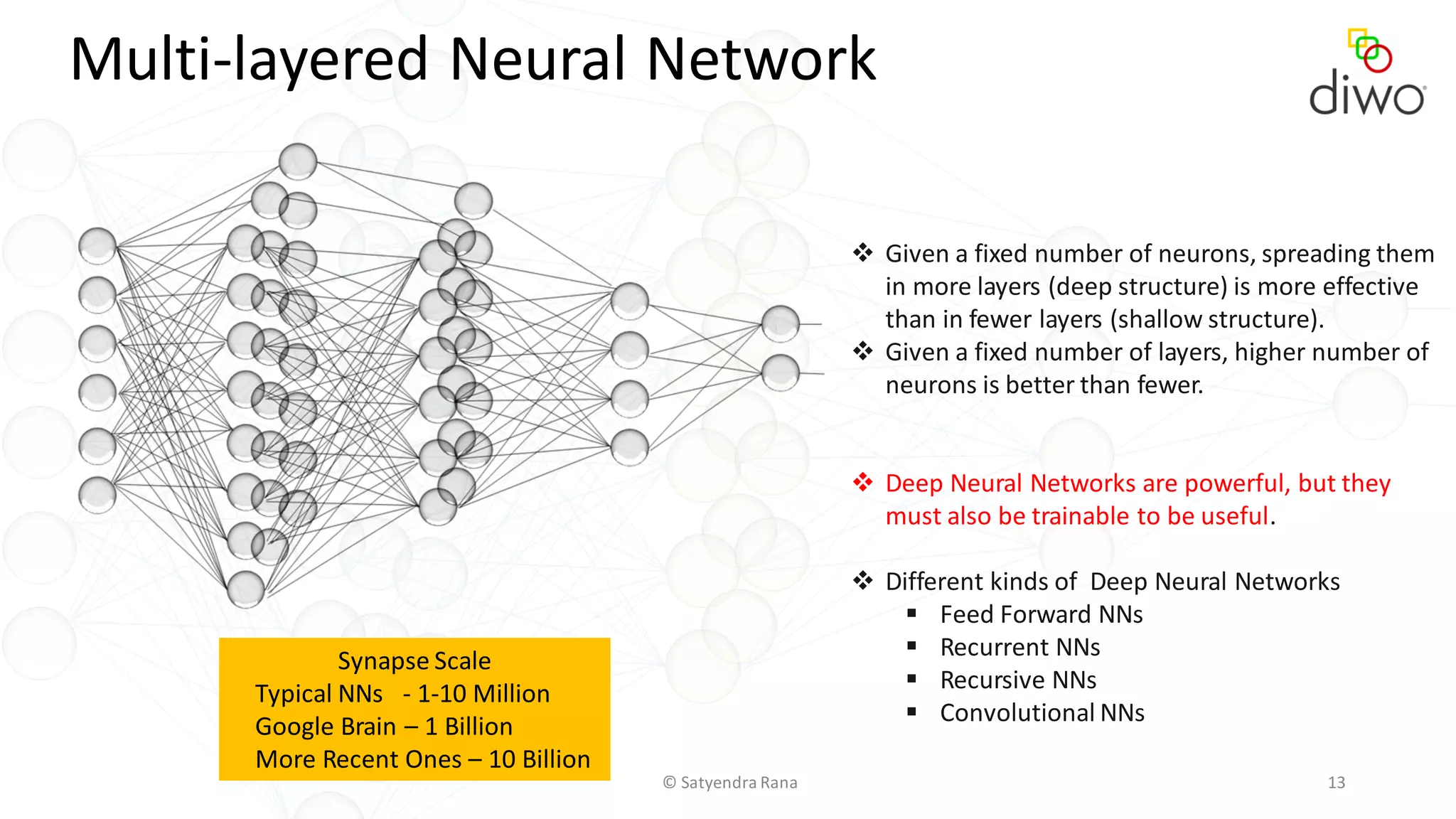

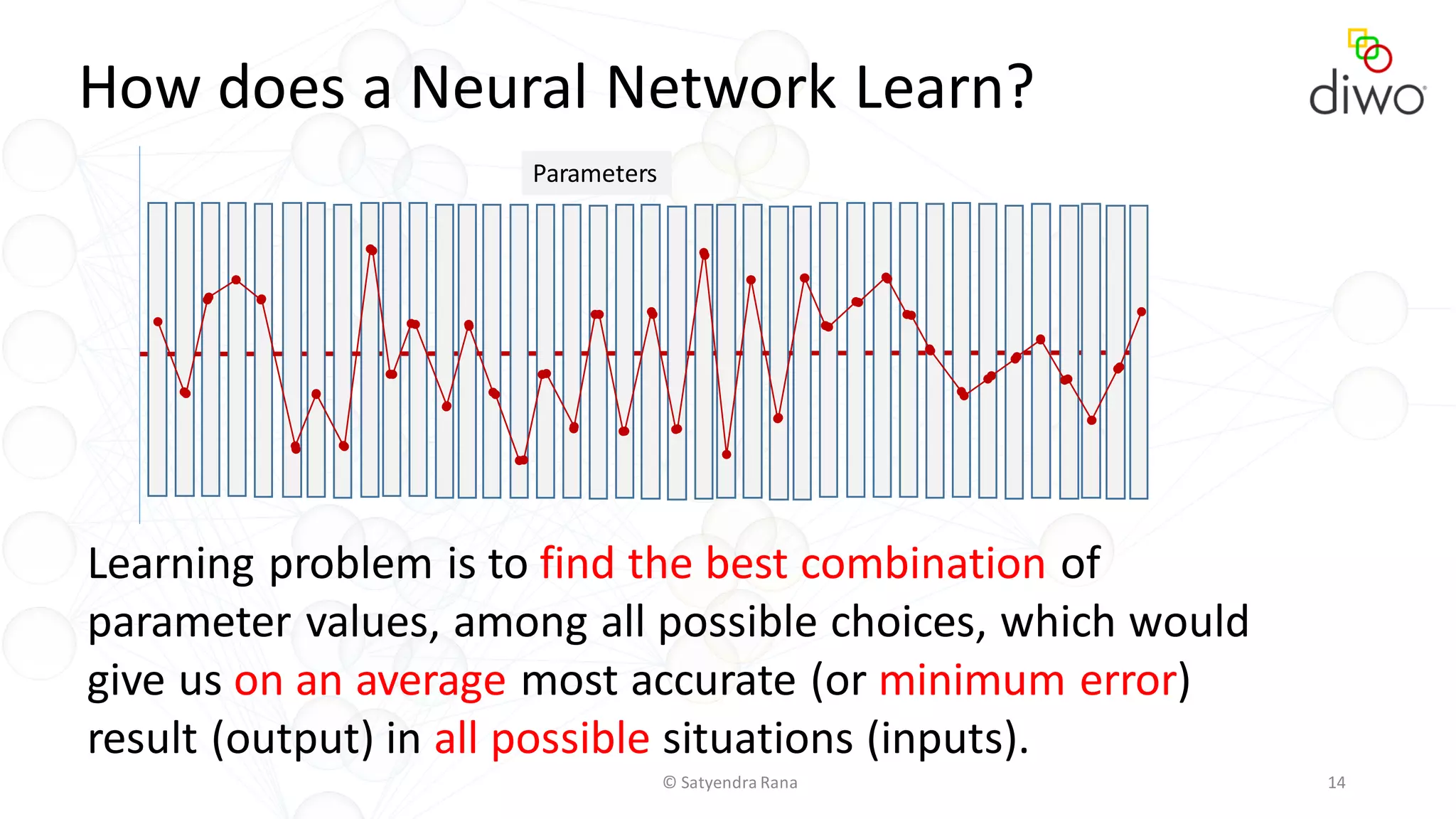

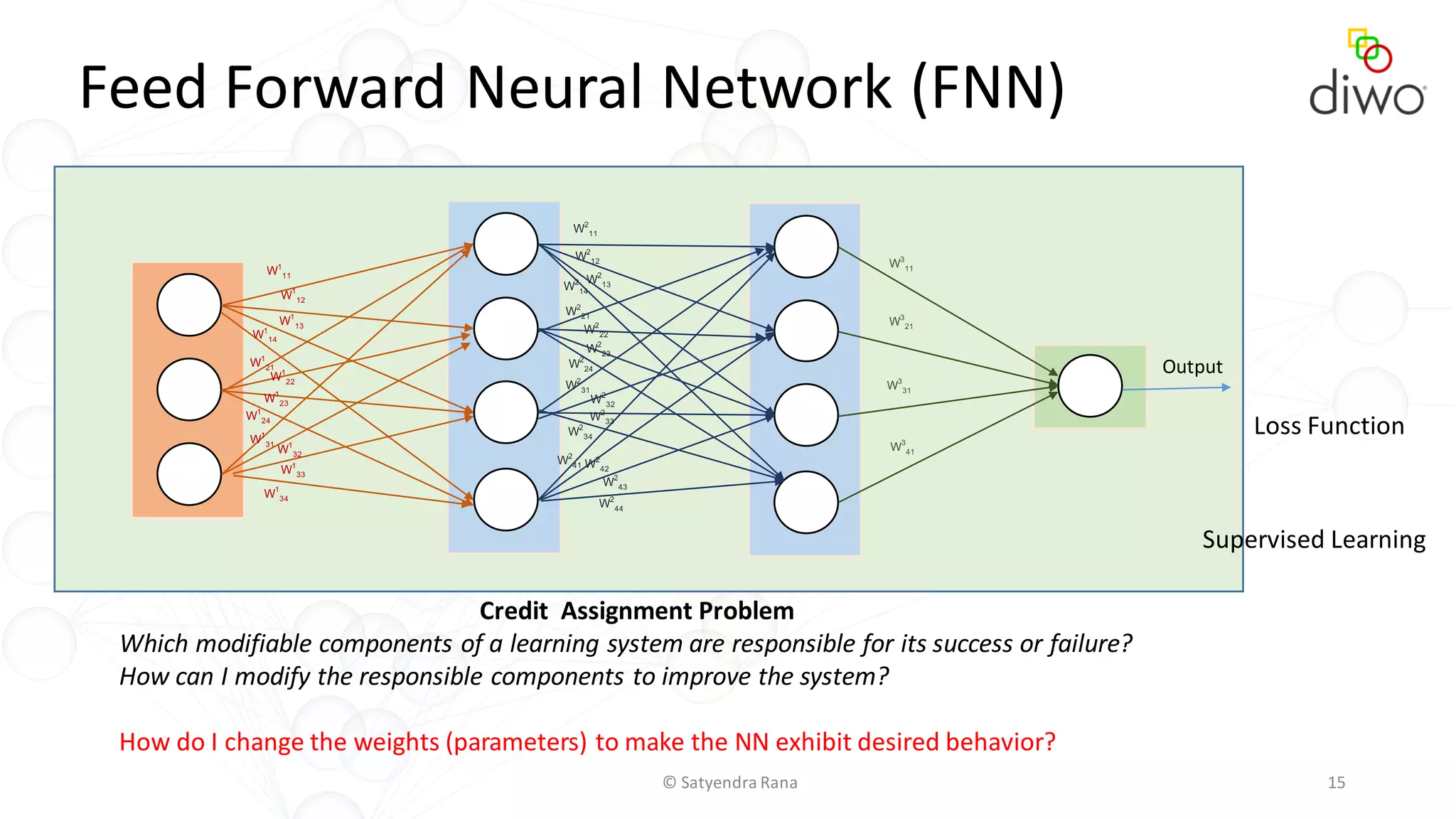

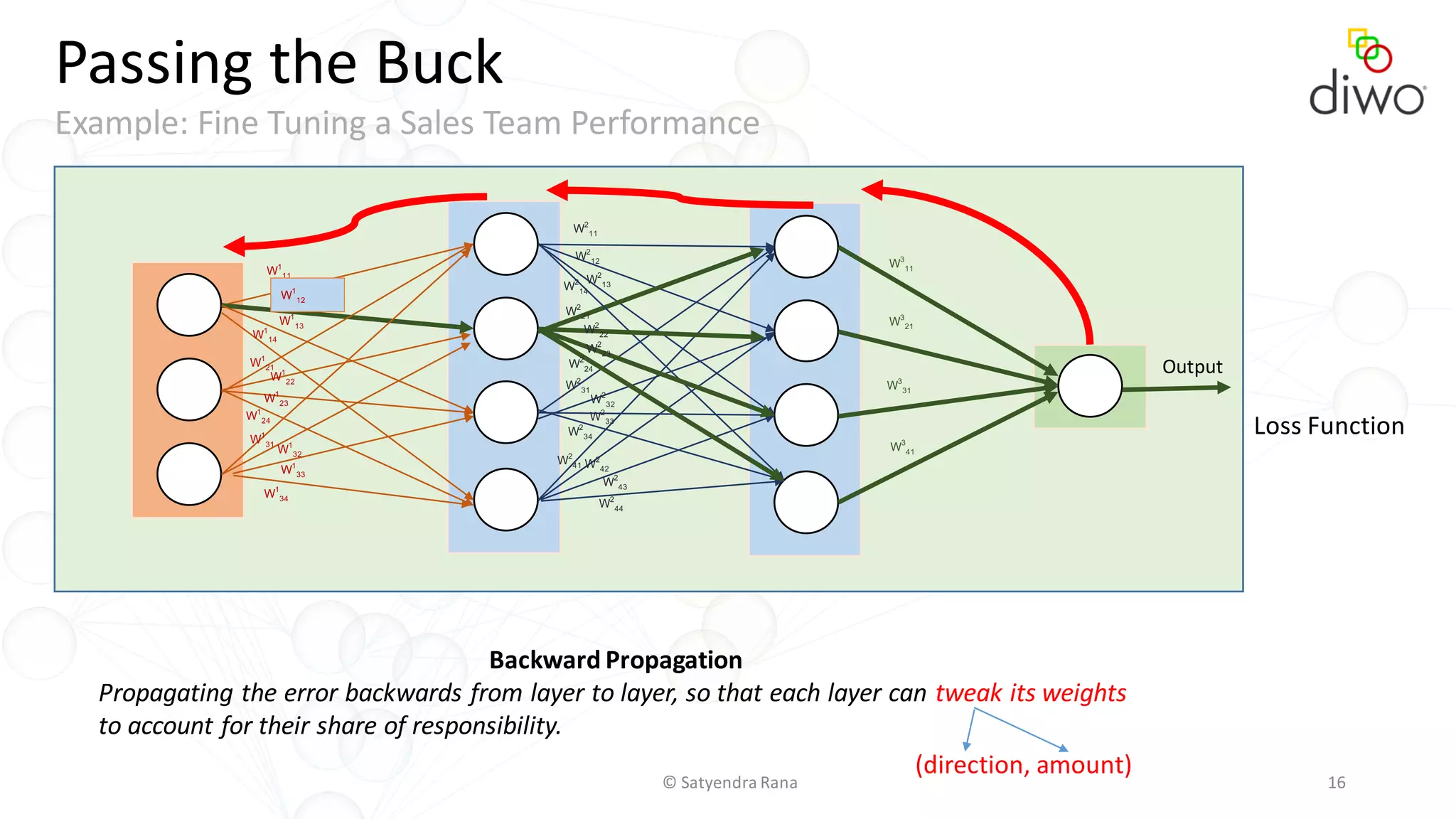

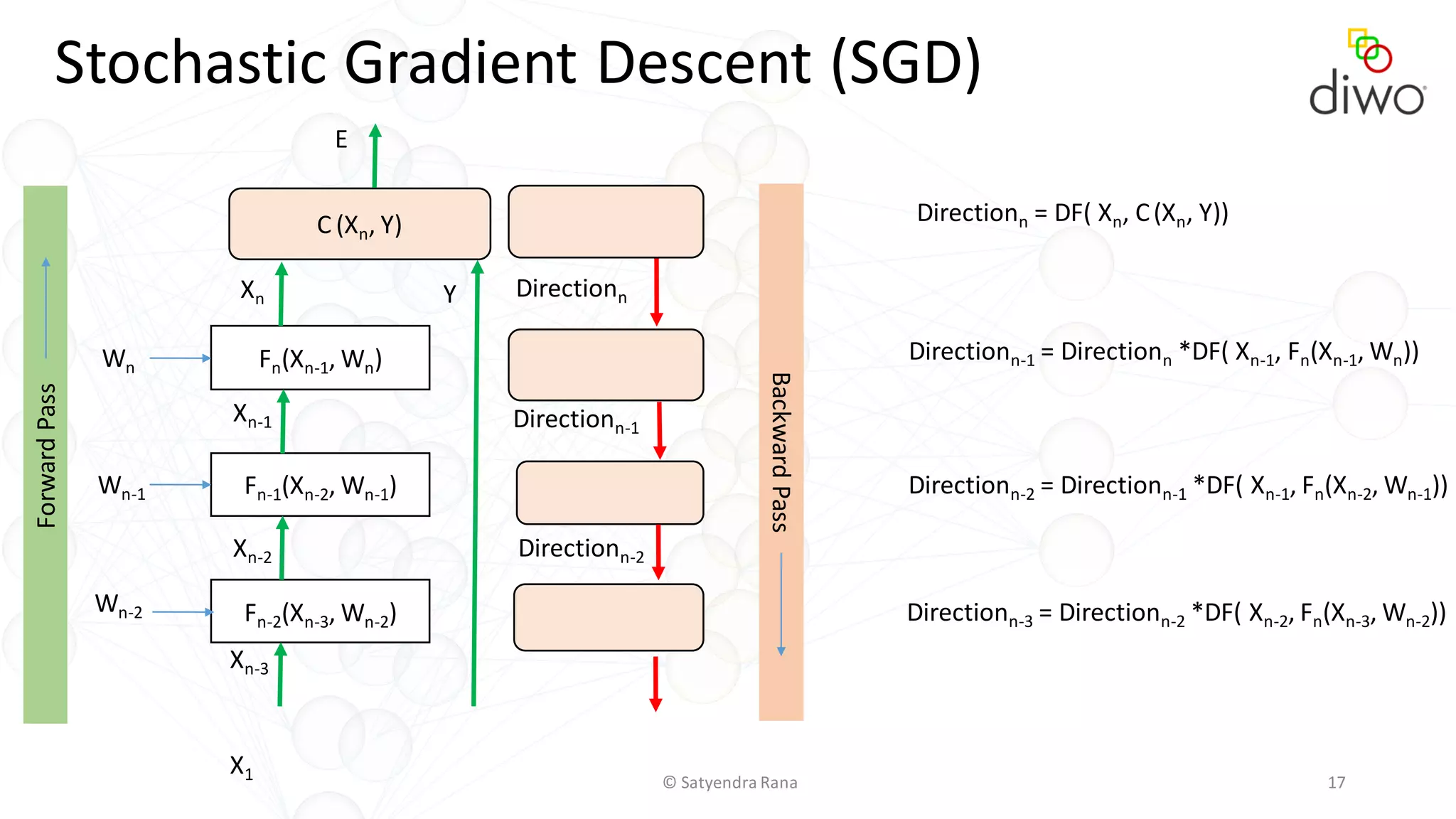

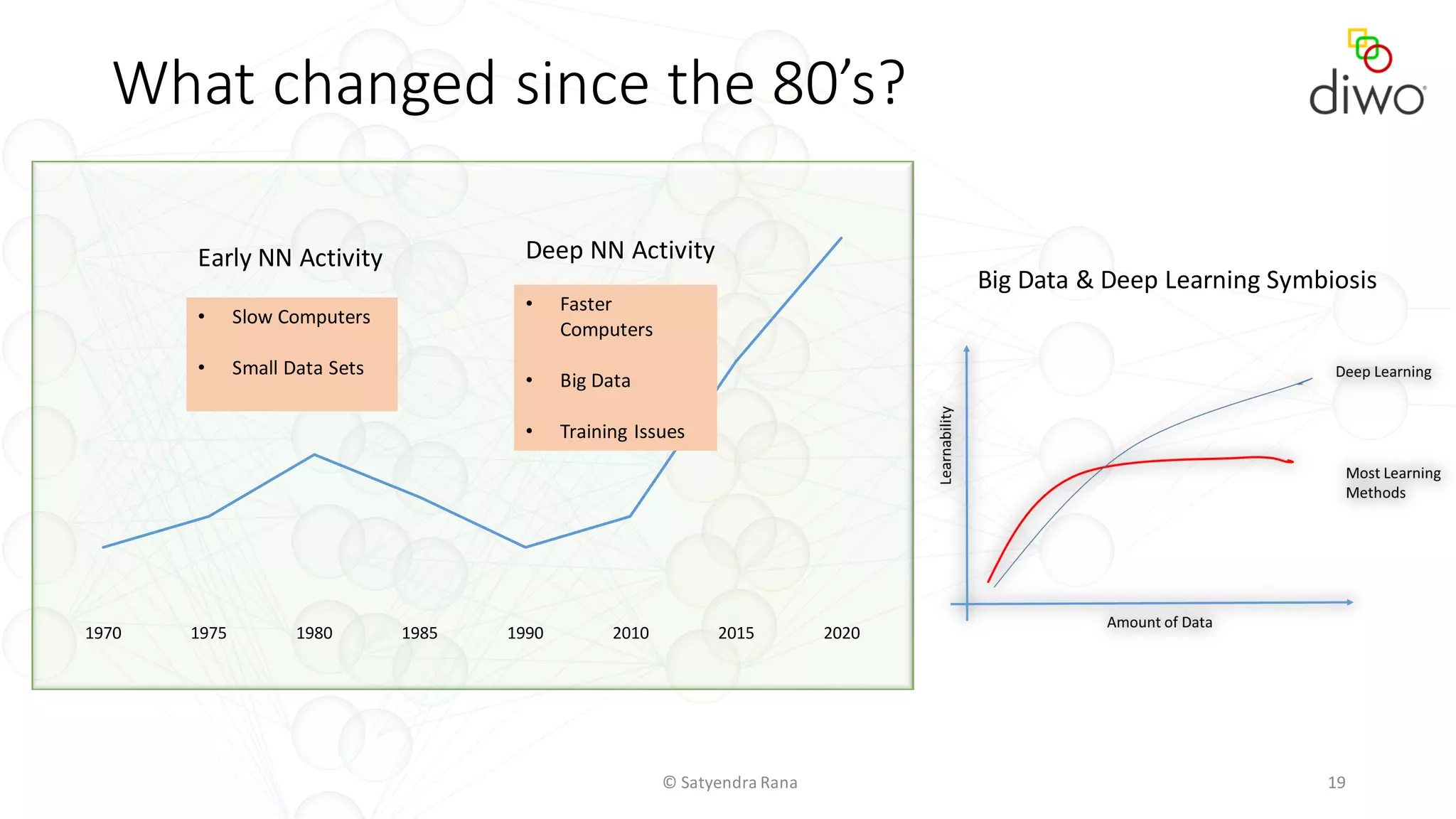

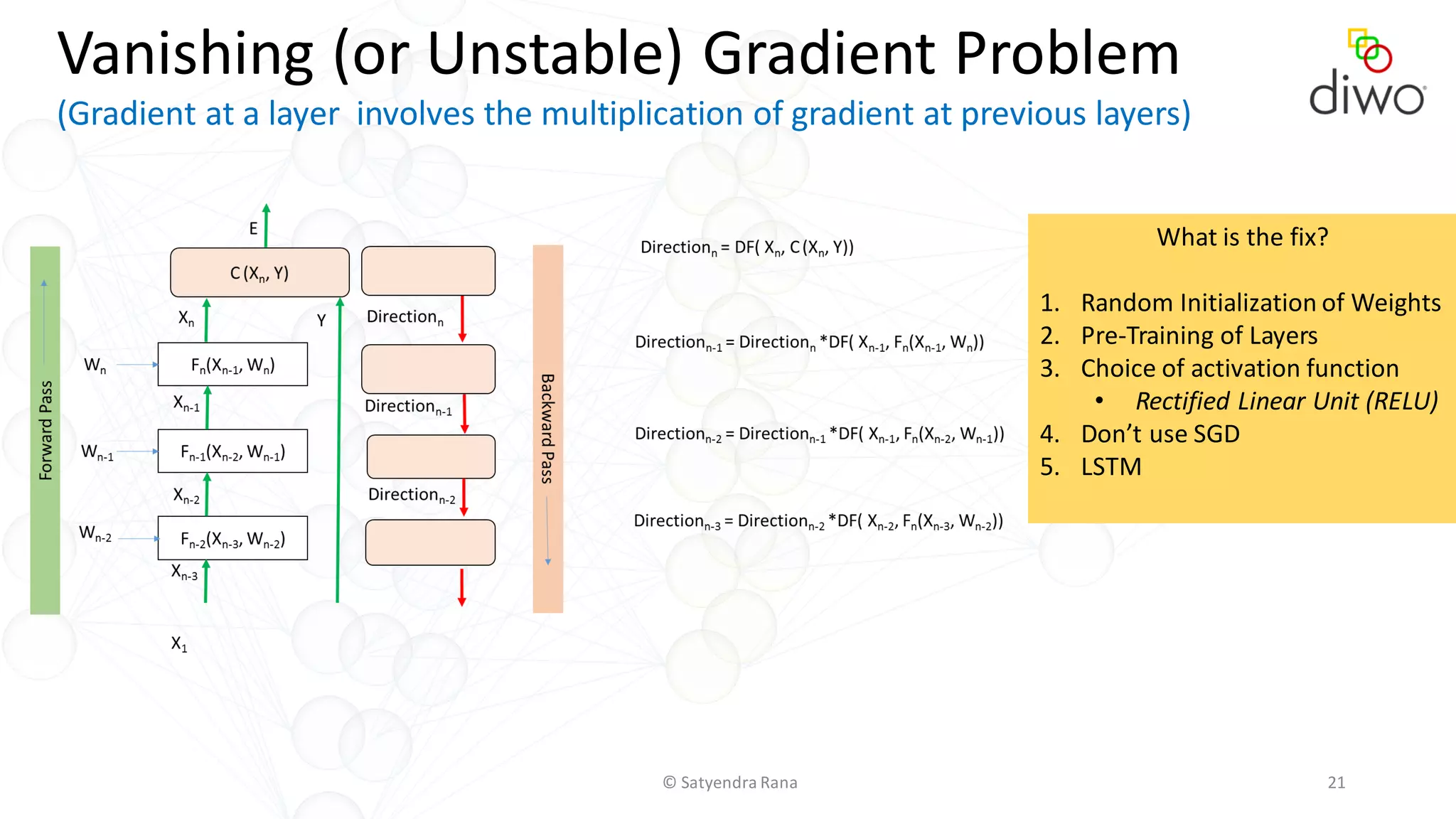

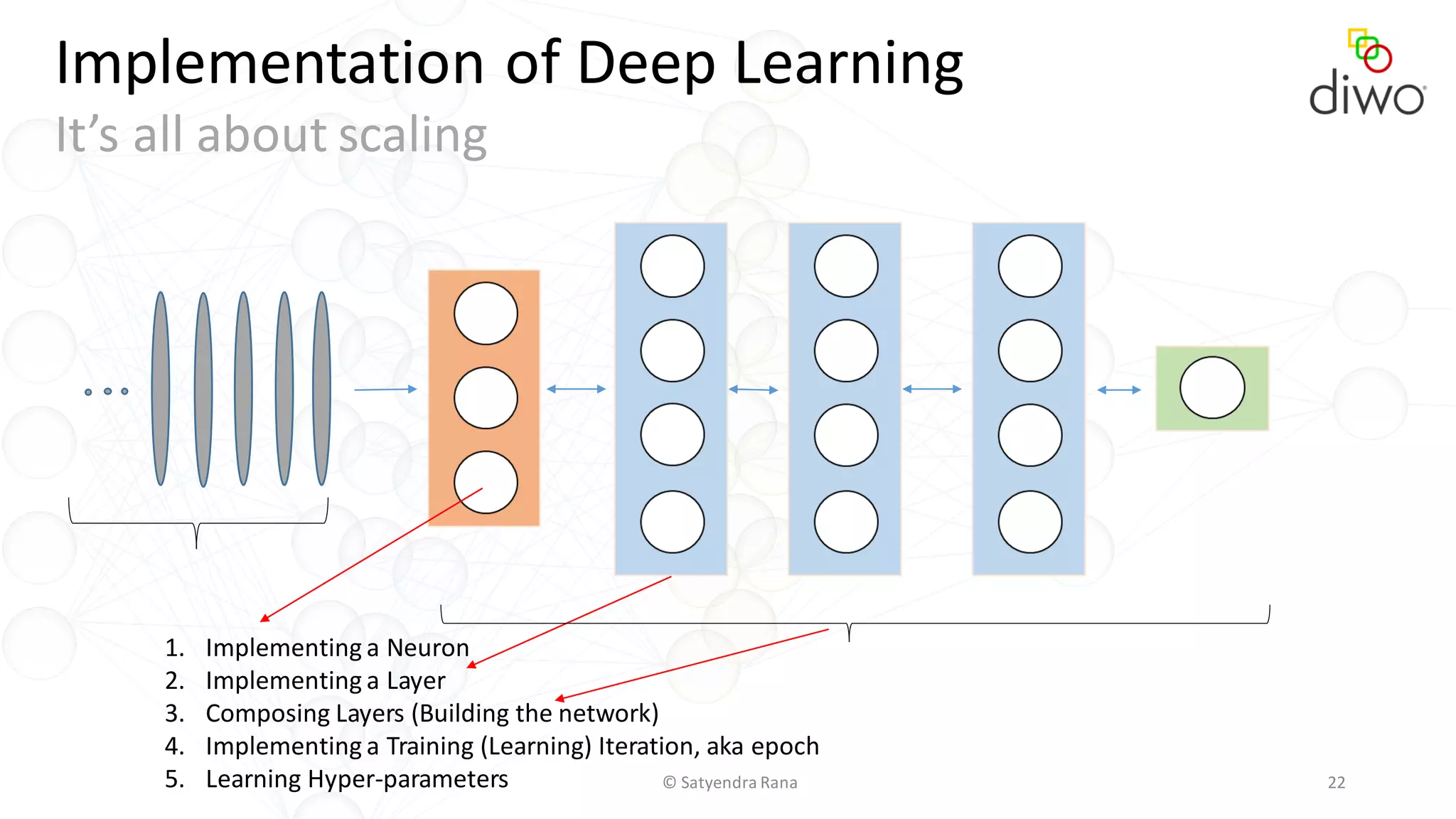

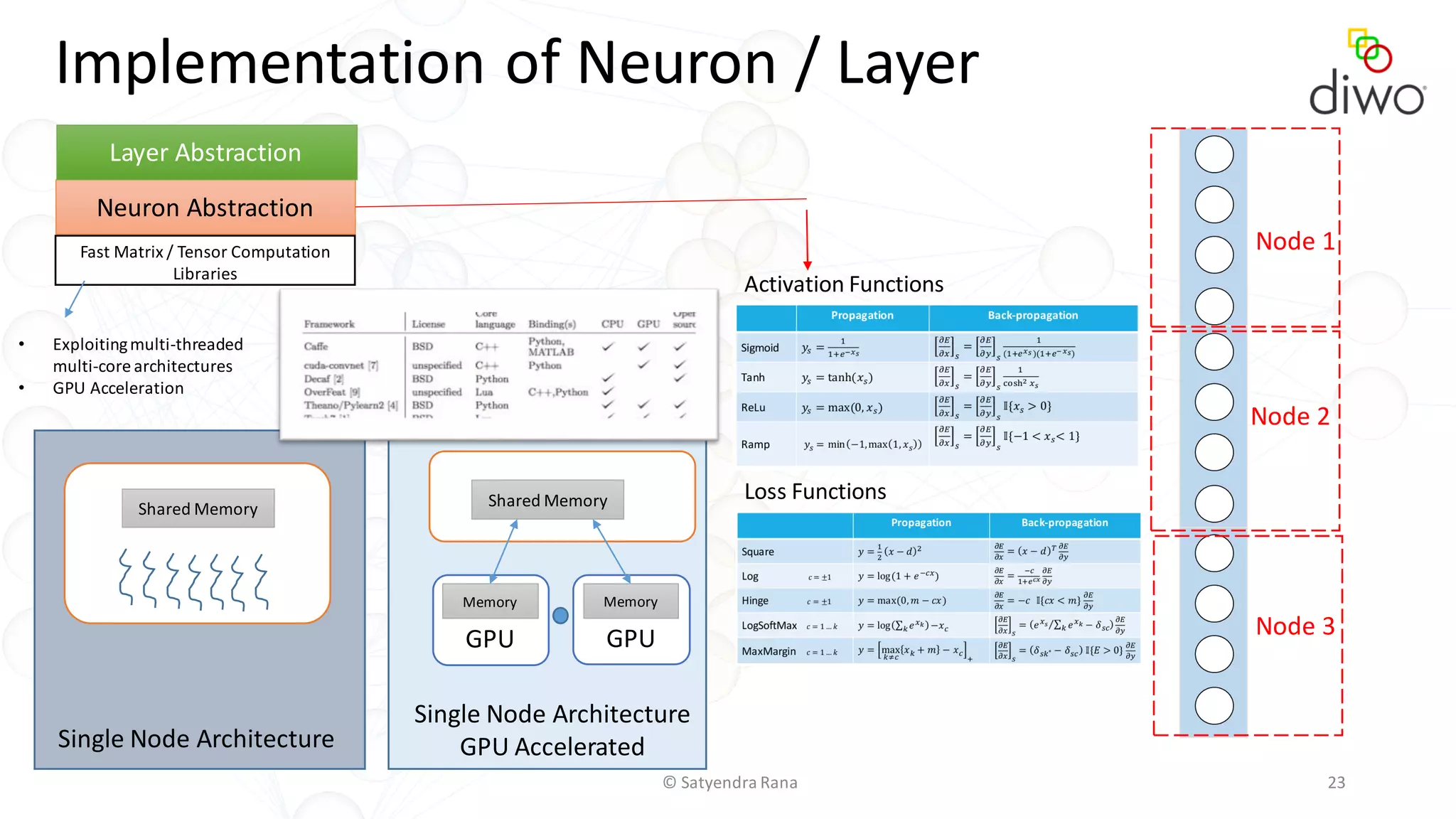

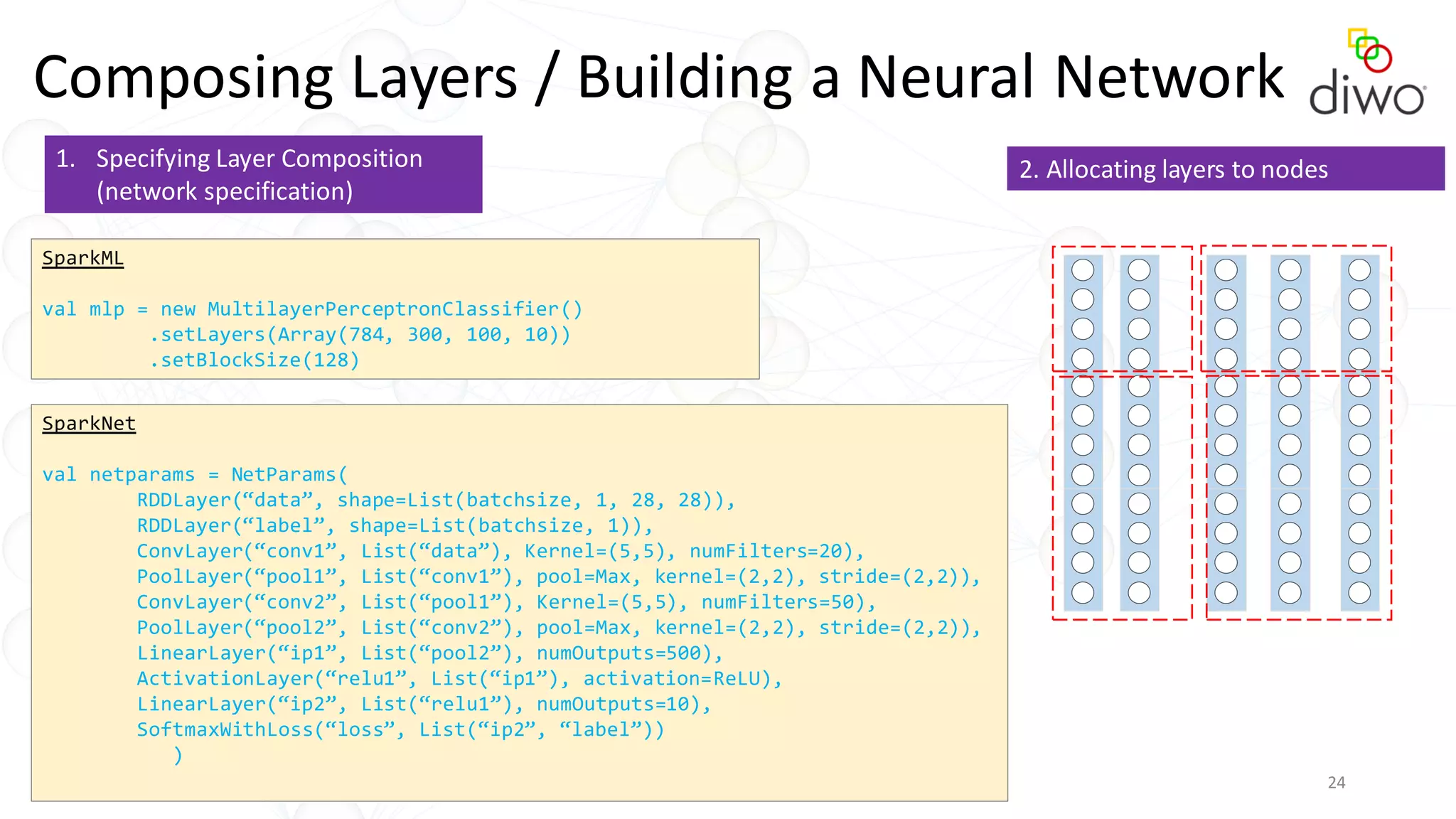

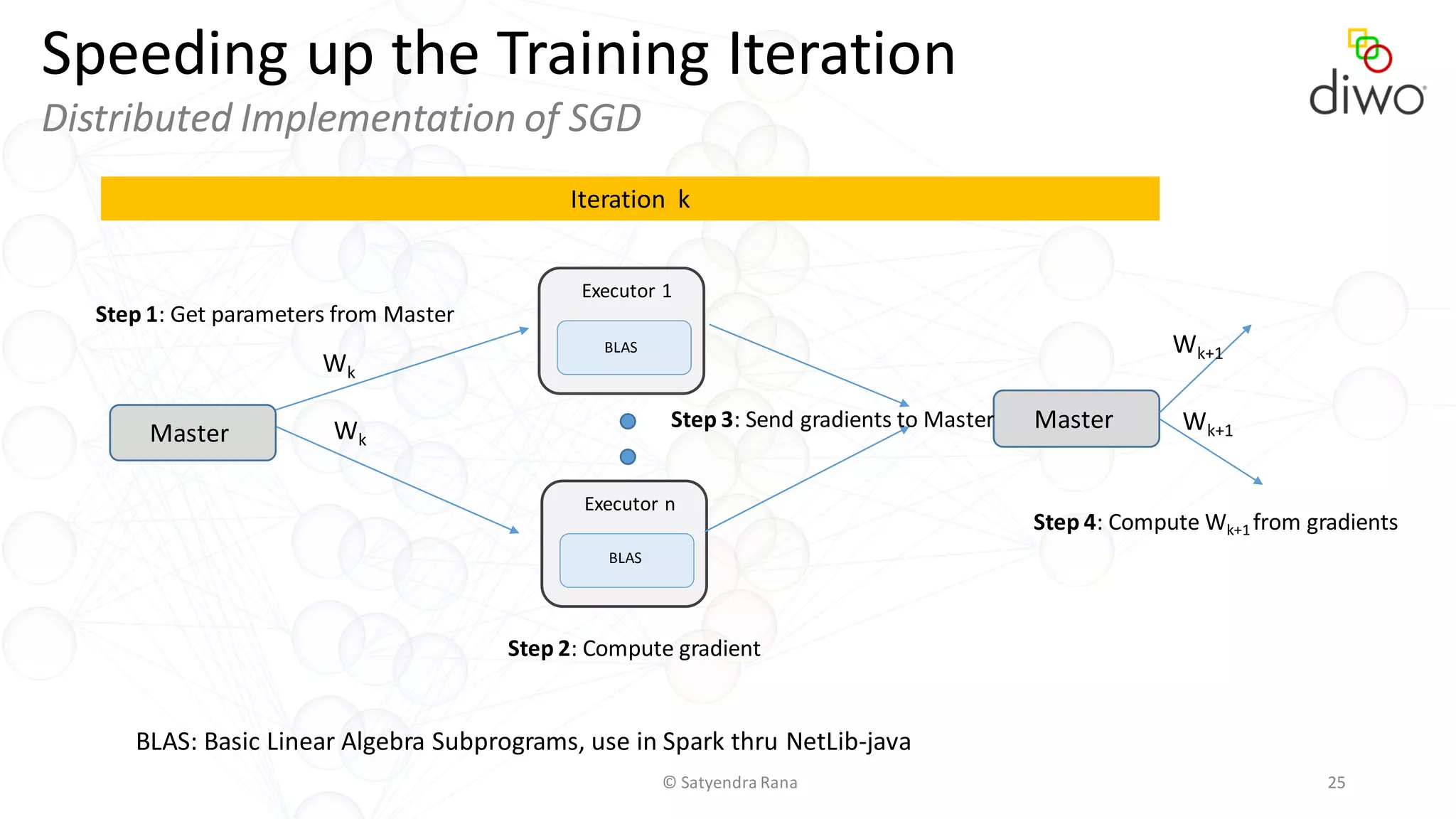

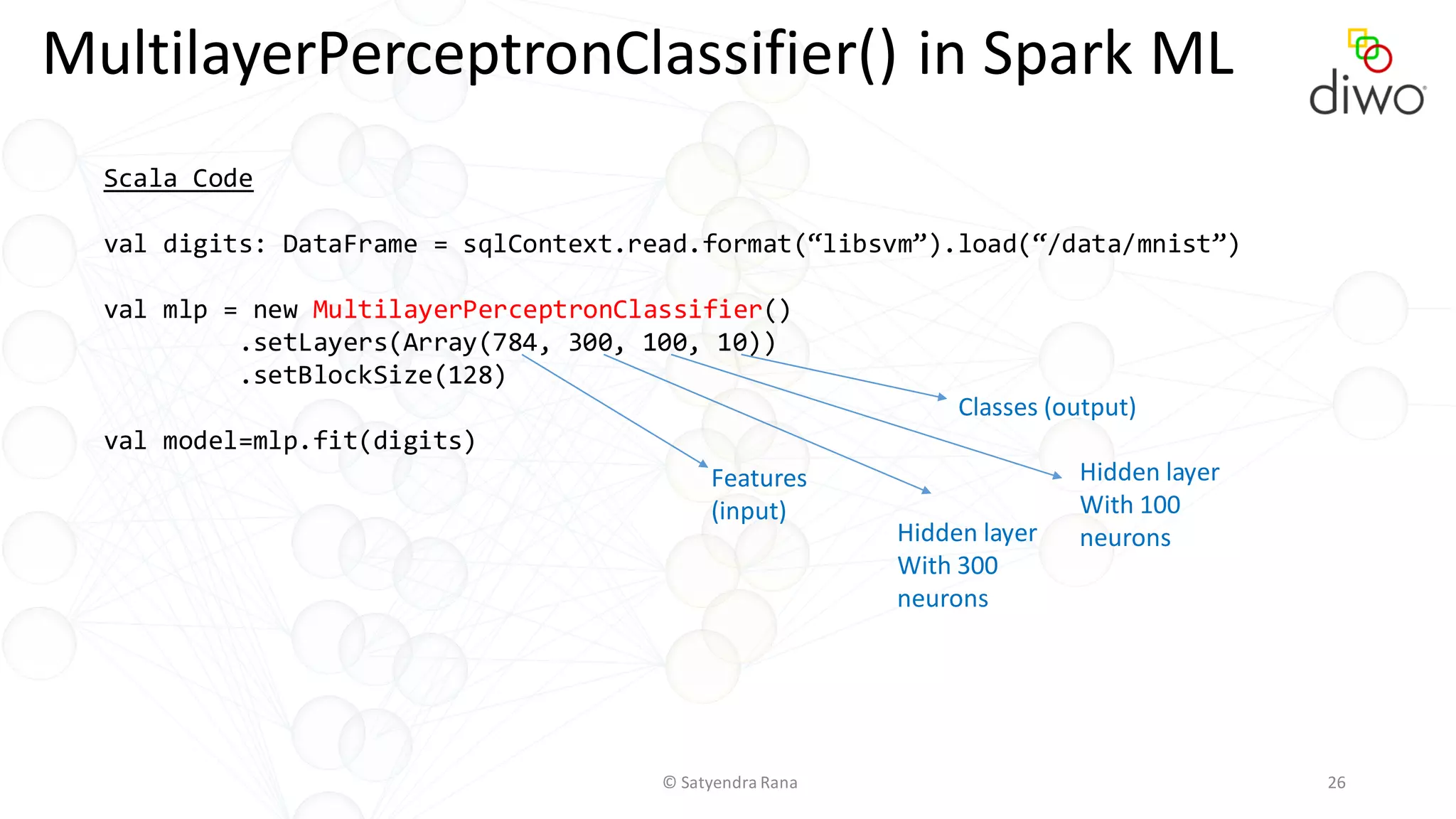

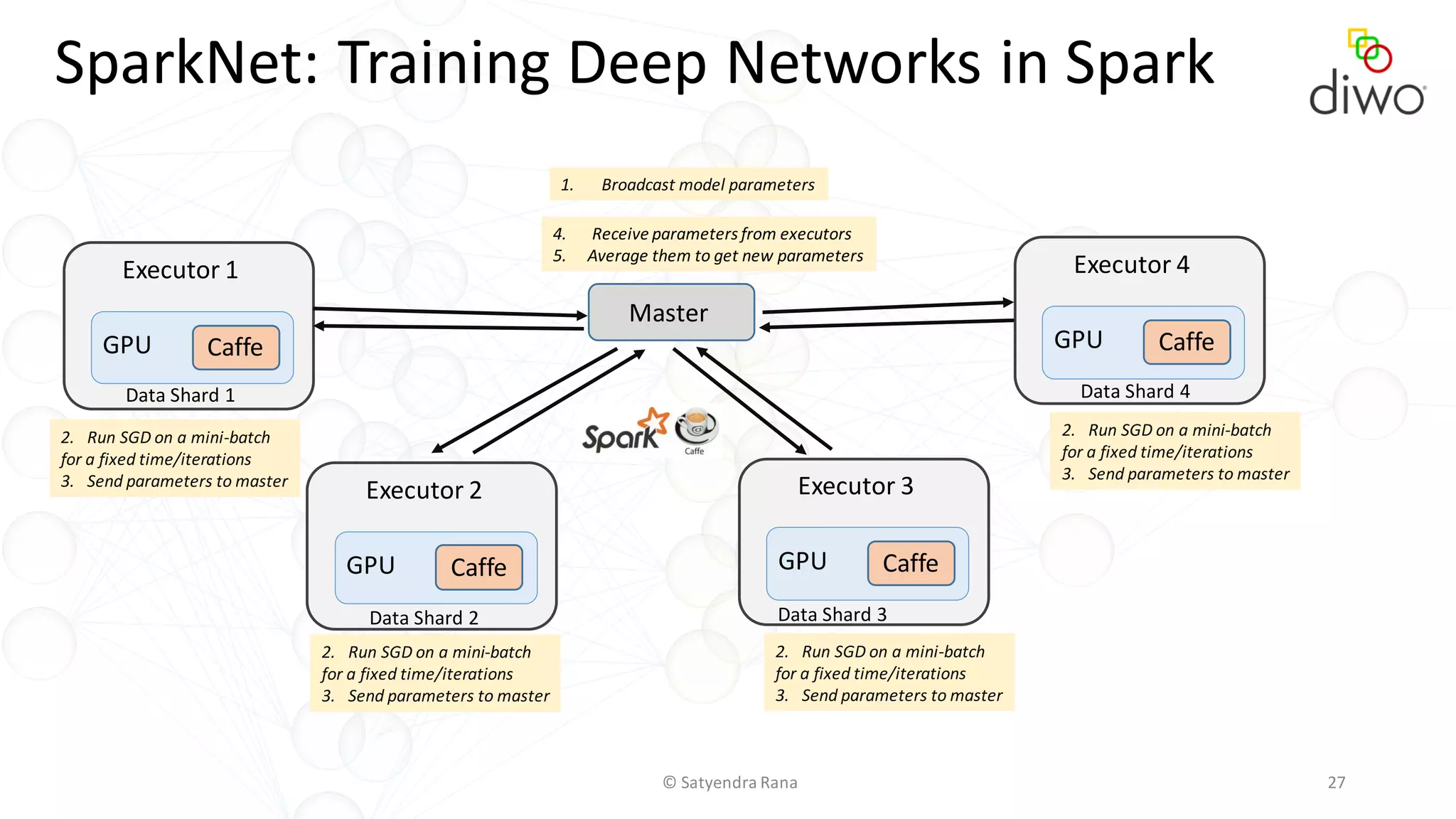

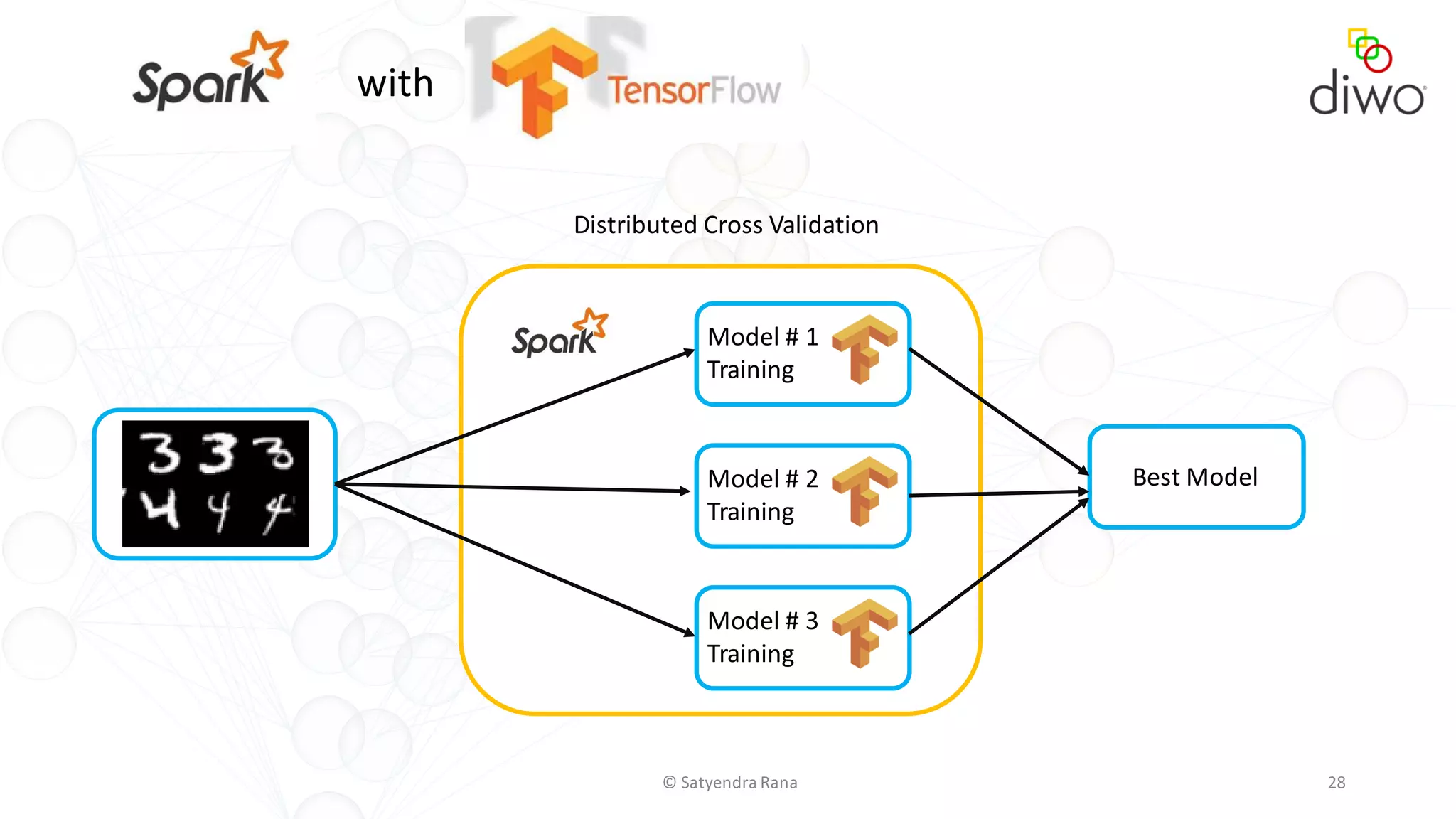

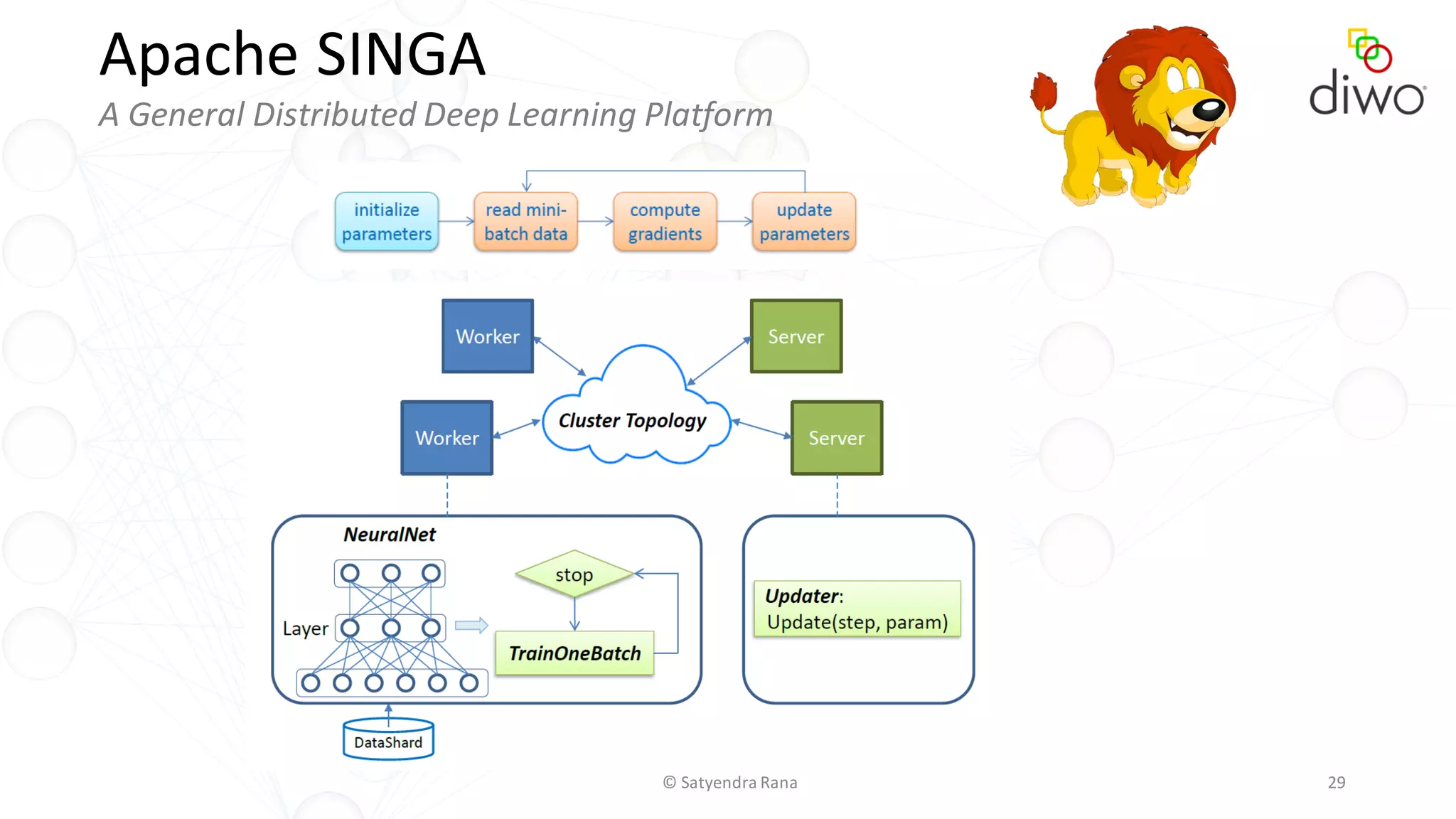

This document covers machine learning with a focus on neural networks, their architecture, and their learning methods. It highlights the importance of deep learning, surveys the types of neural networks and the techniques used to train them, and addresses training challenges such as the vanishing gradient problem. It also emphasizes integrating deep learning with distributed computing frameworks like Apache Spark for efficient model training.