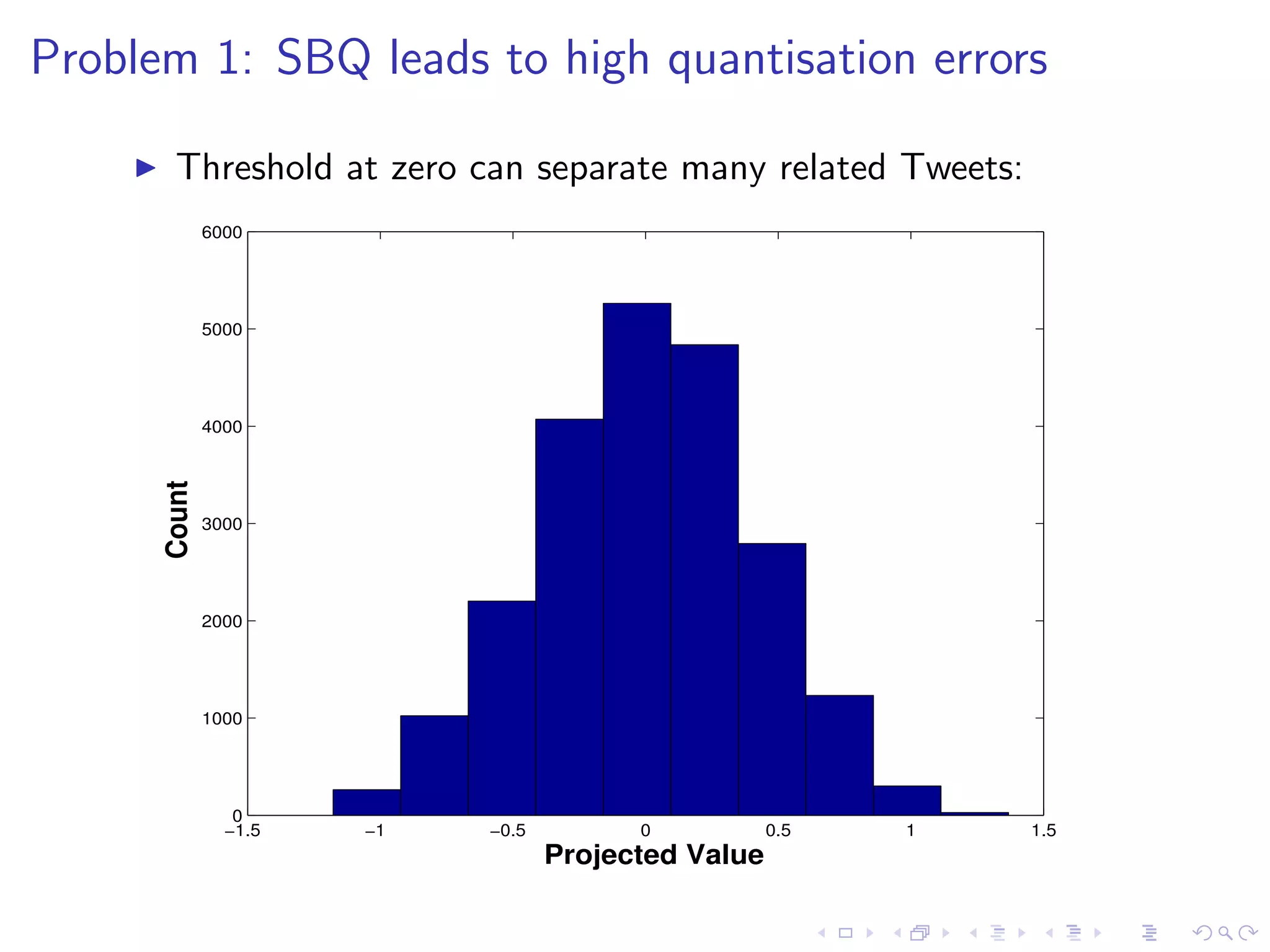

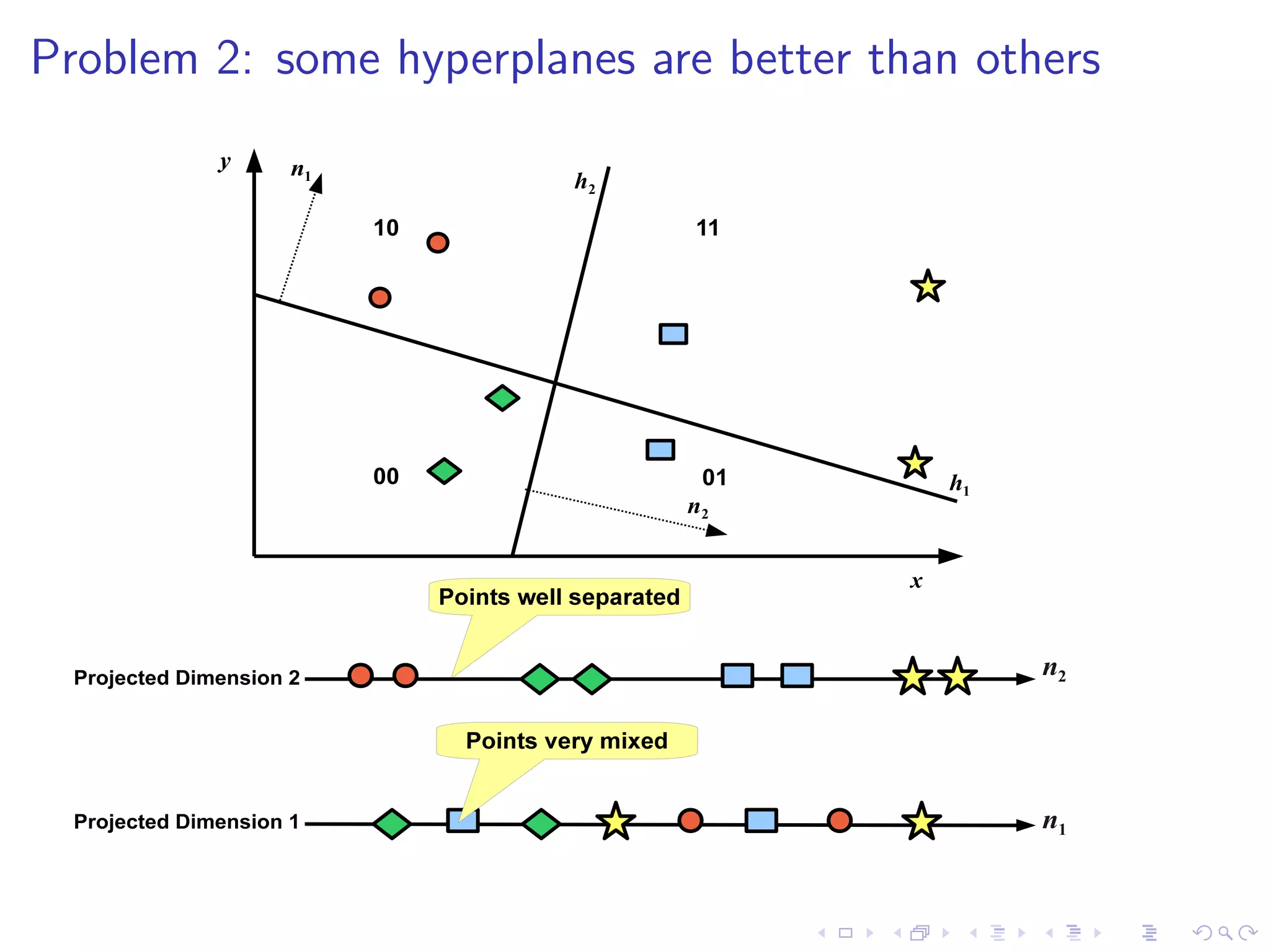

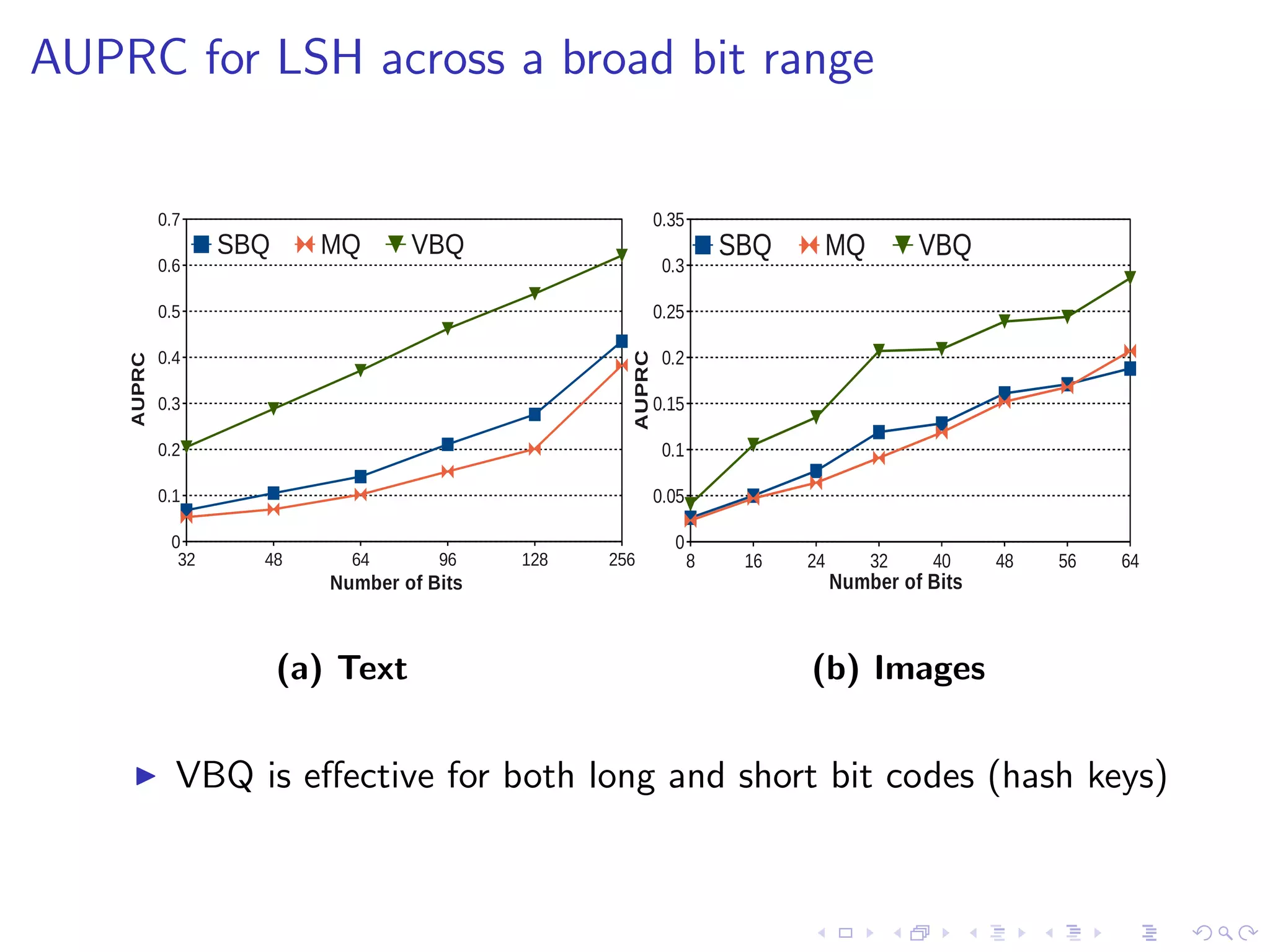

The document describes research on variable bit quantisation for locality sensitive hashing (LSH) to improve nearest neighbor search for large-scale applications. It discusses problems with existing single bit quantisation for LSH, such as high quantisation error. The proposed variable bit quantisation approach assigns bits differently to hyperplanes based on how well they preserve neighborhood structure. Evaluation on text and image retrieval tasks demonstrates substantial gains in retrieval accuracy compared to baseline methods.

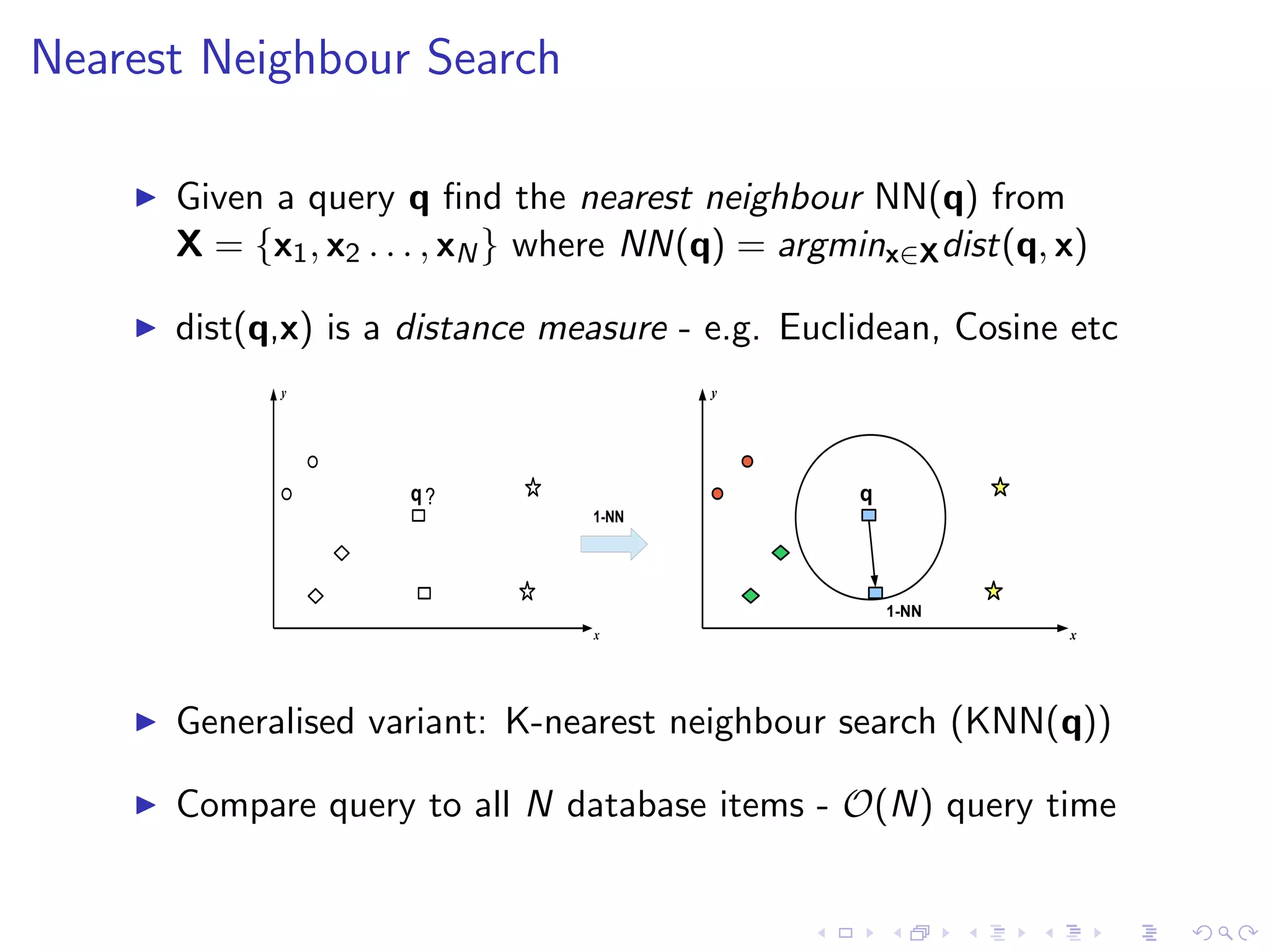

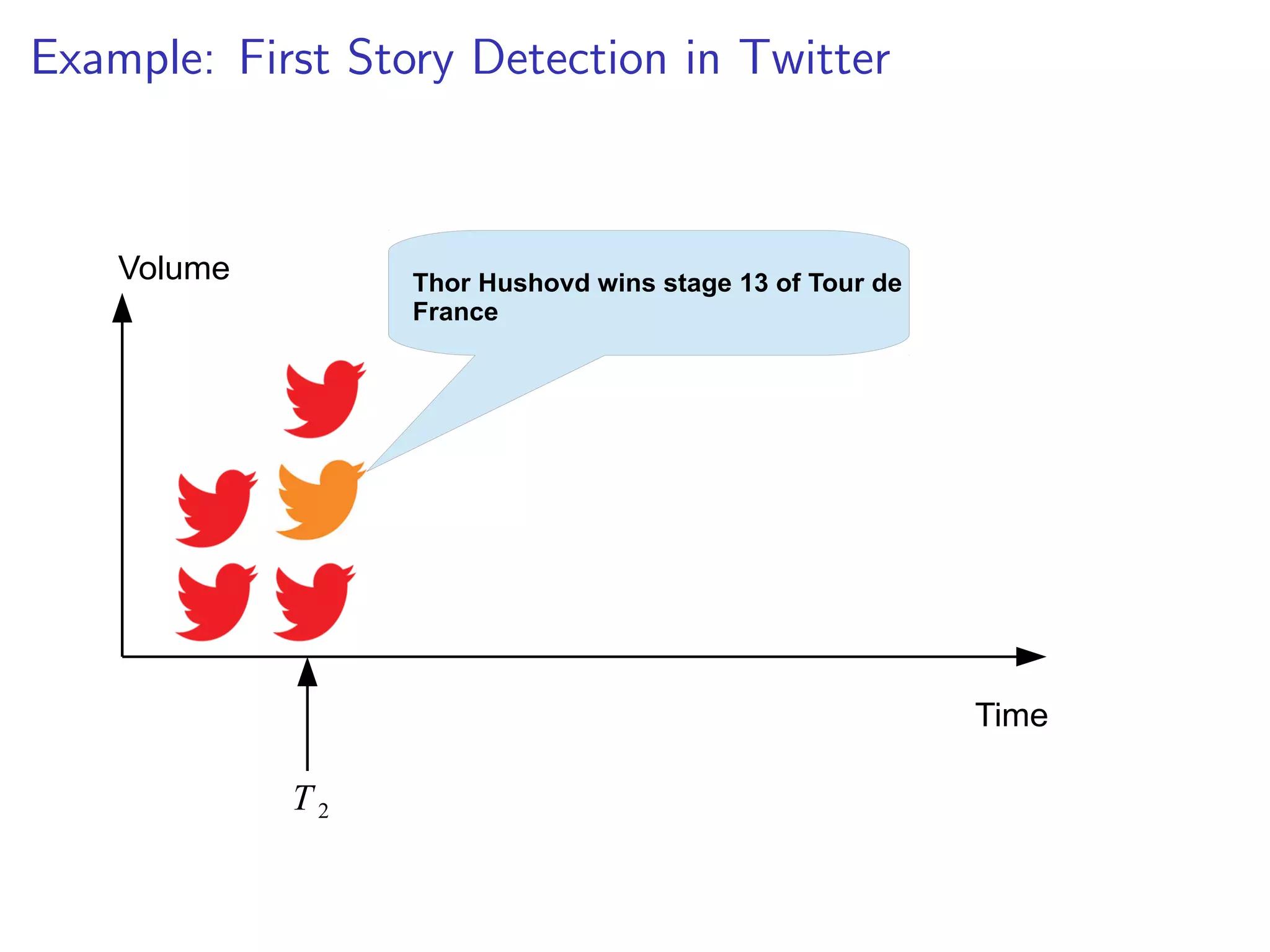

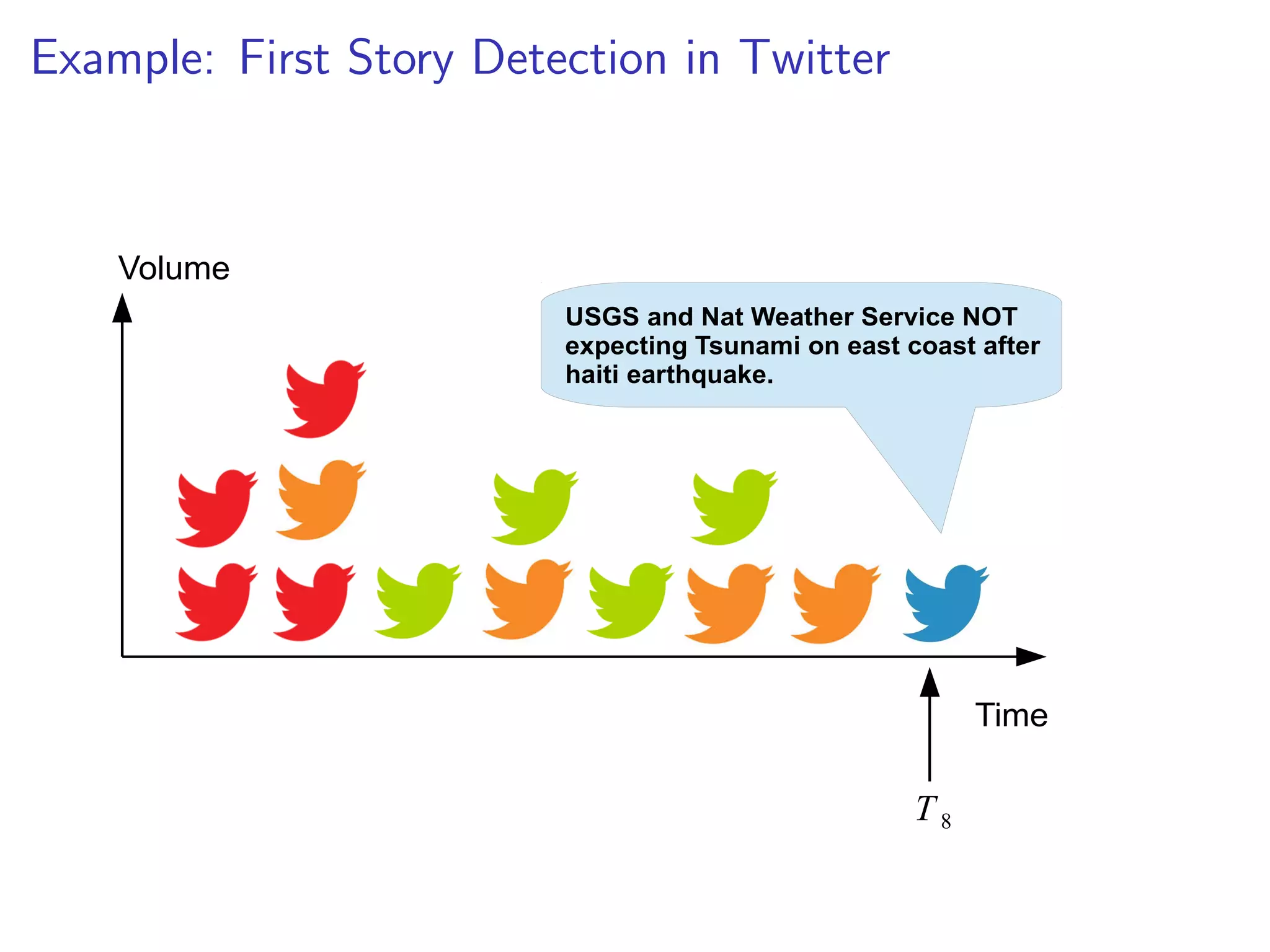

![First story at 22:17 UTC

- 22:17:43 justinholtweb NOT expecting Tsunami on east coast after haiti earthquake. good news.

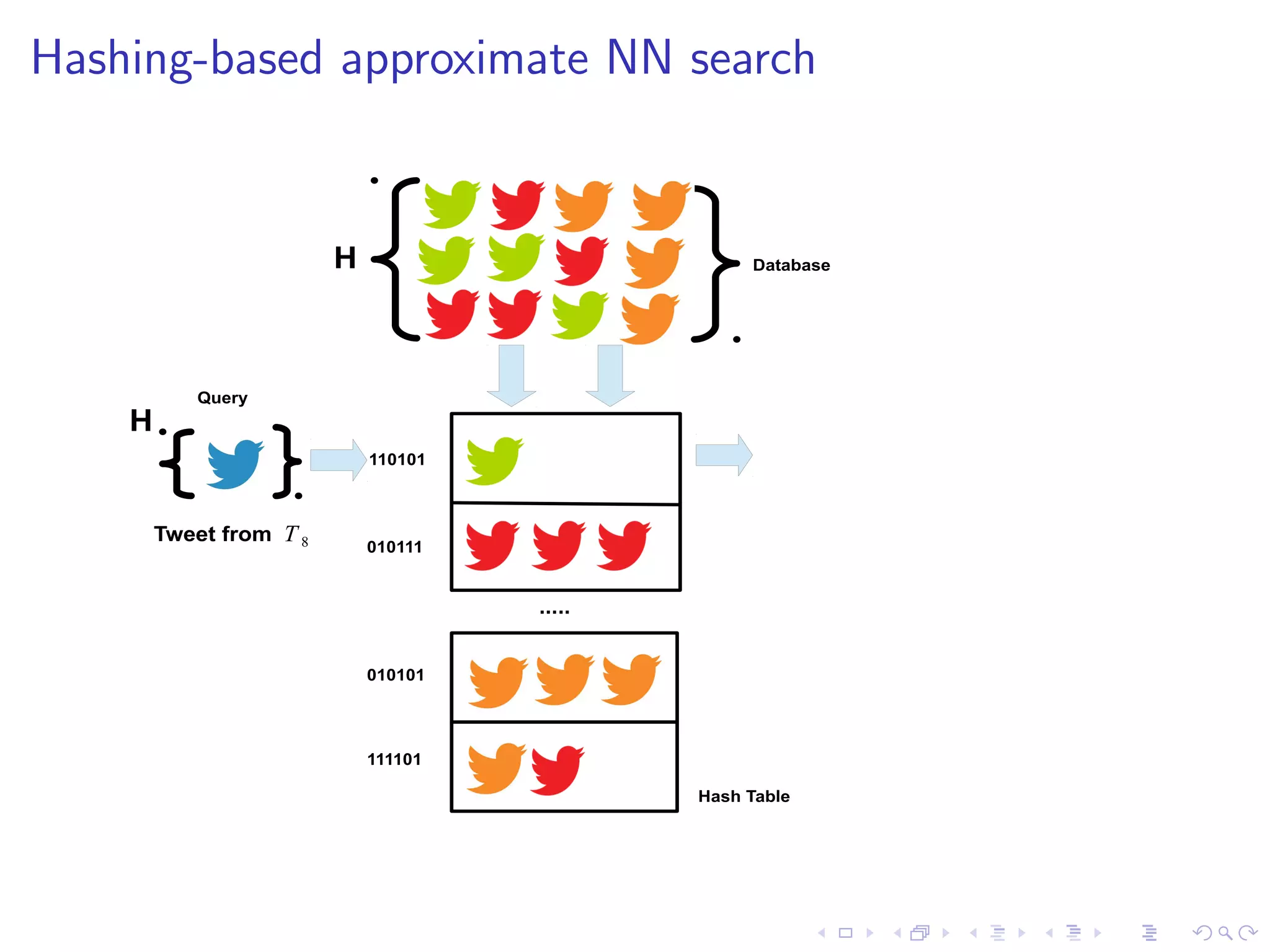

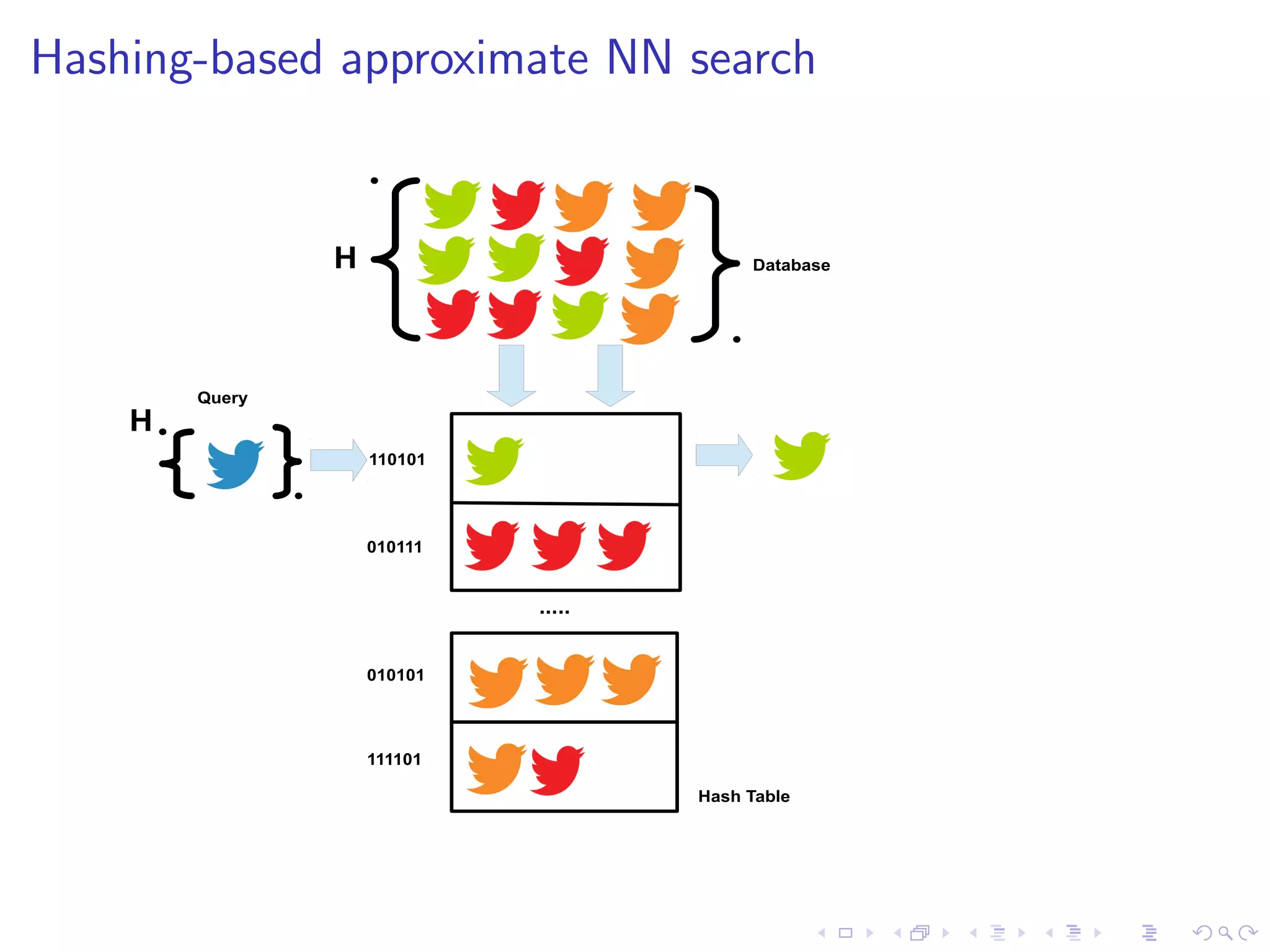

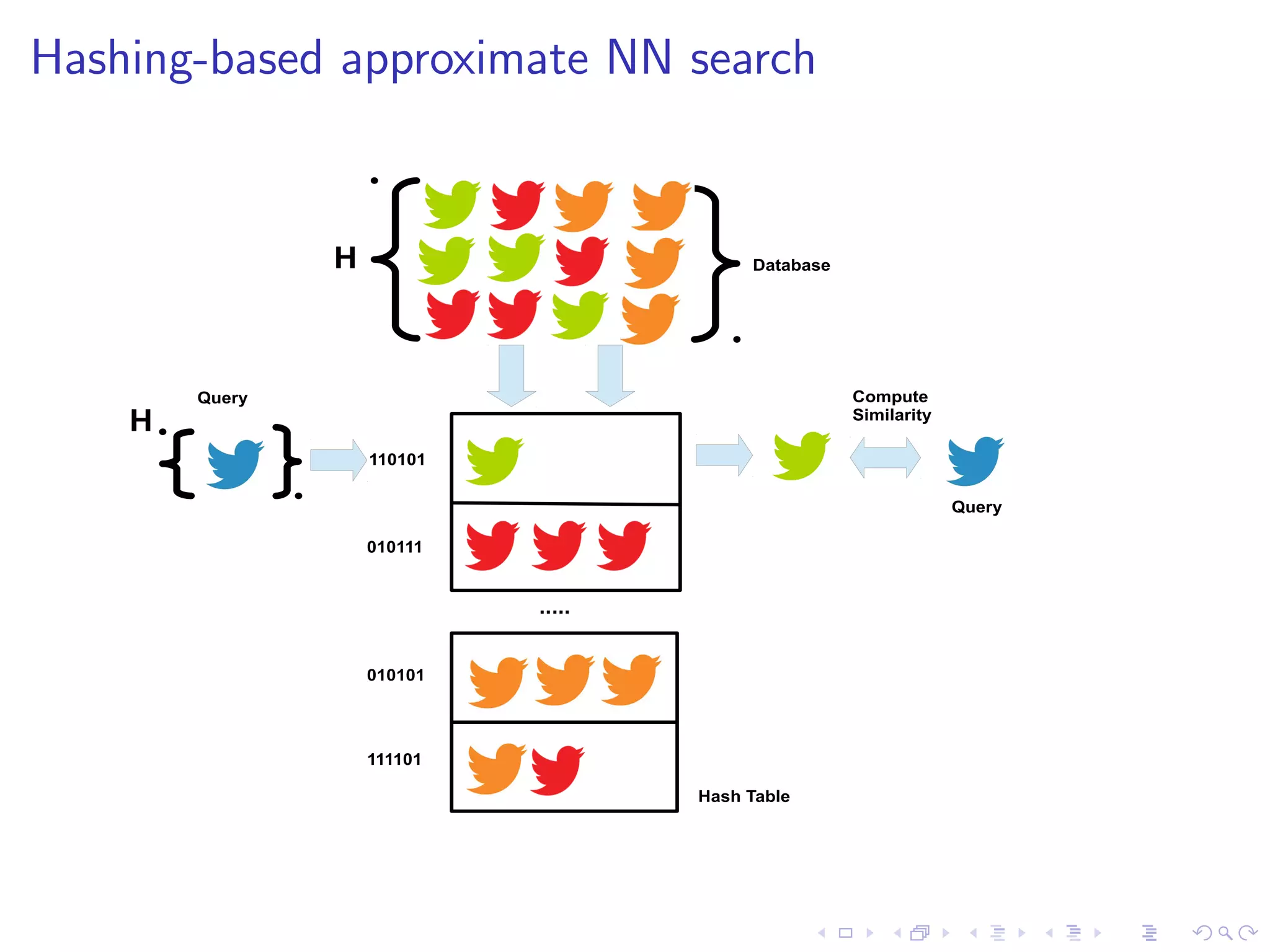

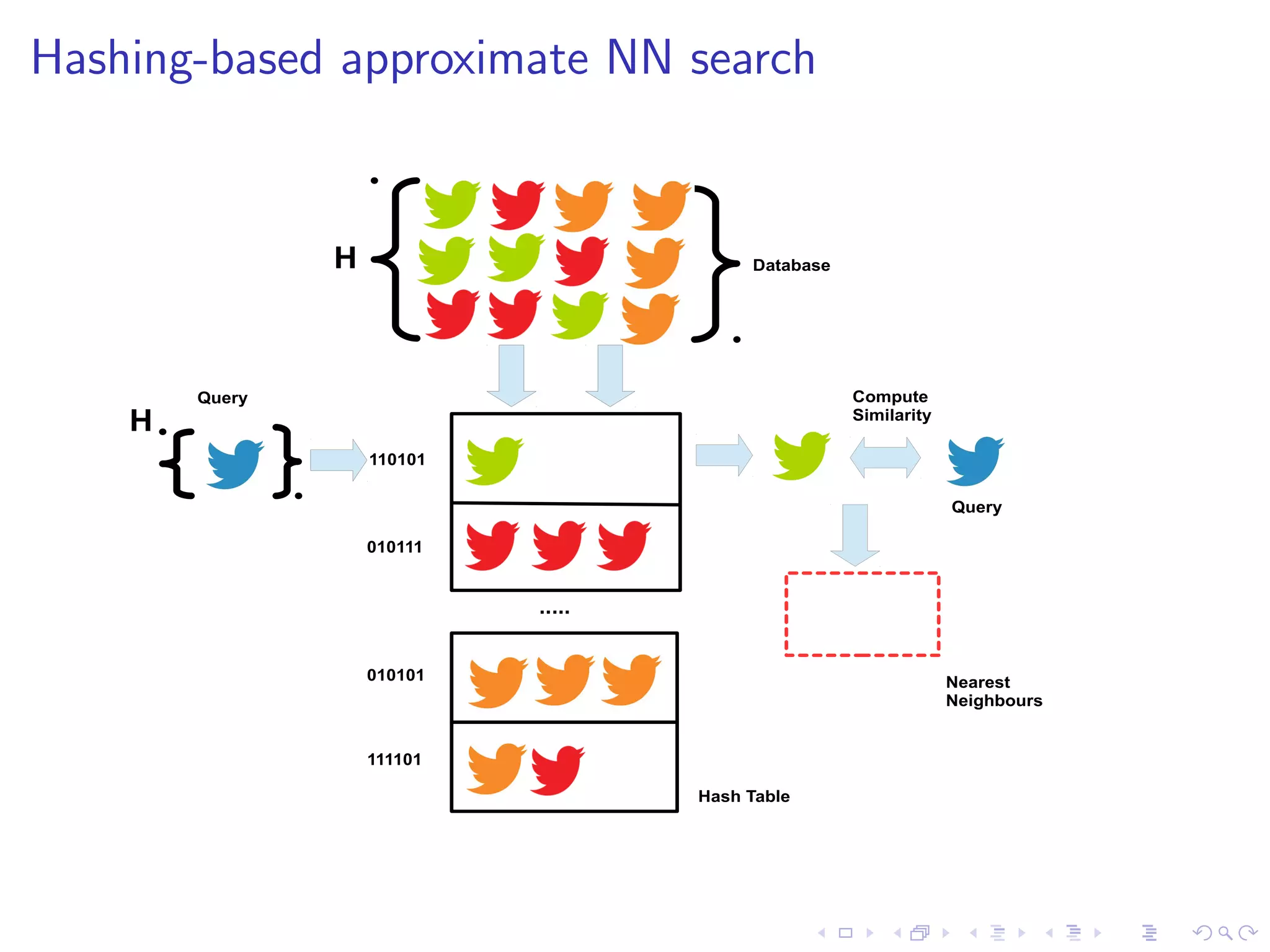

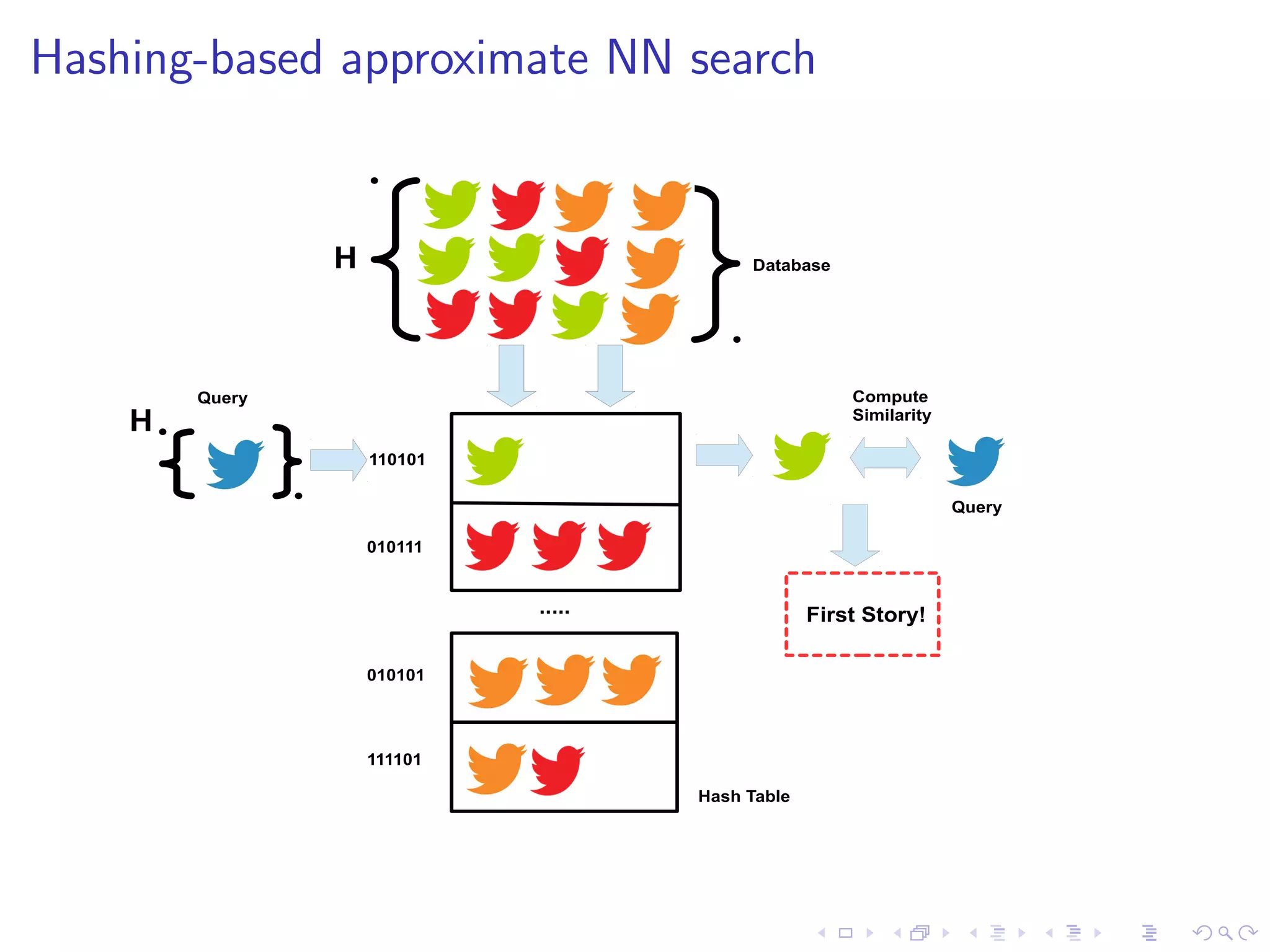

- State-of-the-art first story detection (FSD) uses nearest-neighbour (NN) search under the bonnet
- Problems: dimensionality (1 million+) and data volume (250Gb/day)

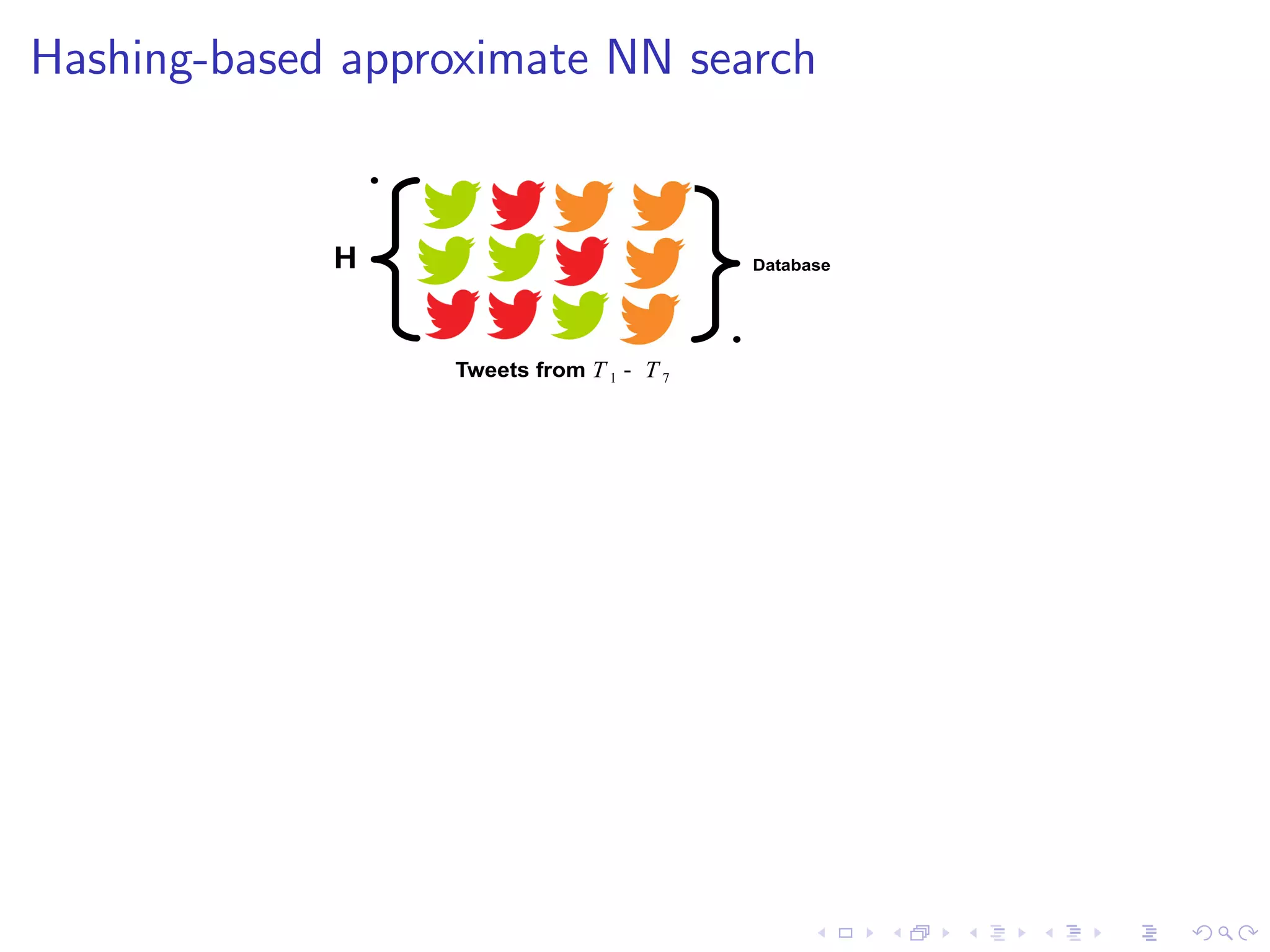

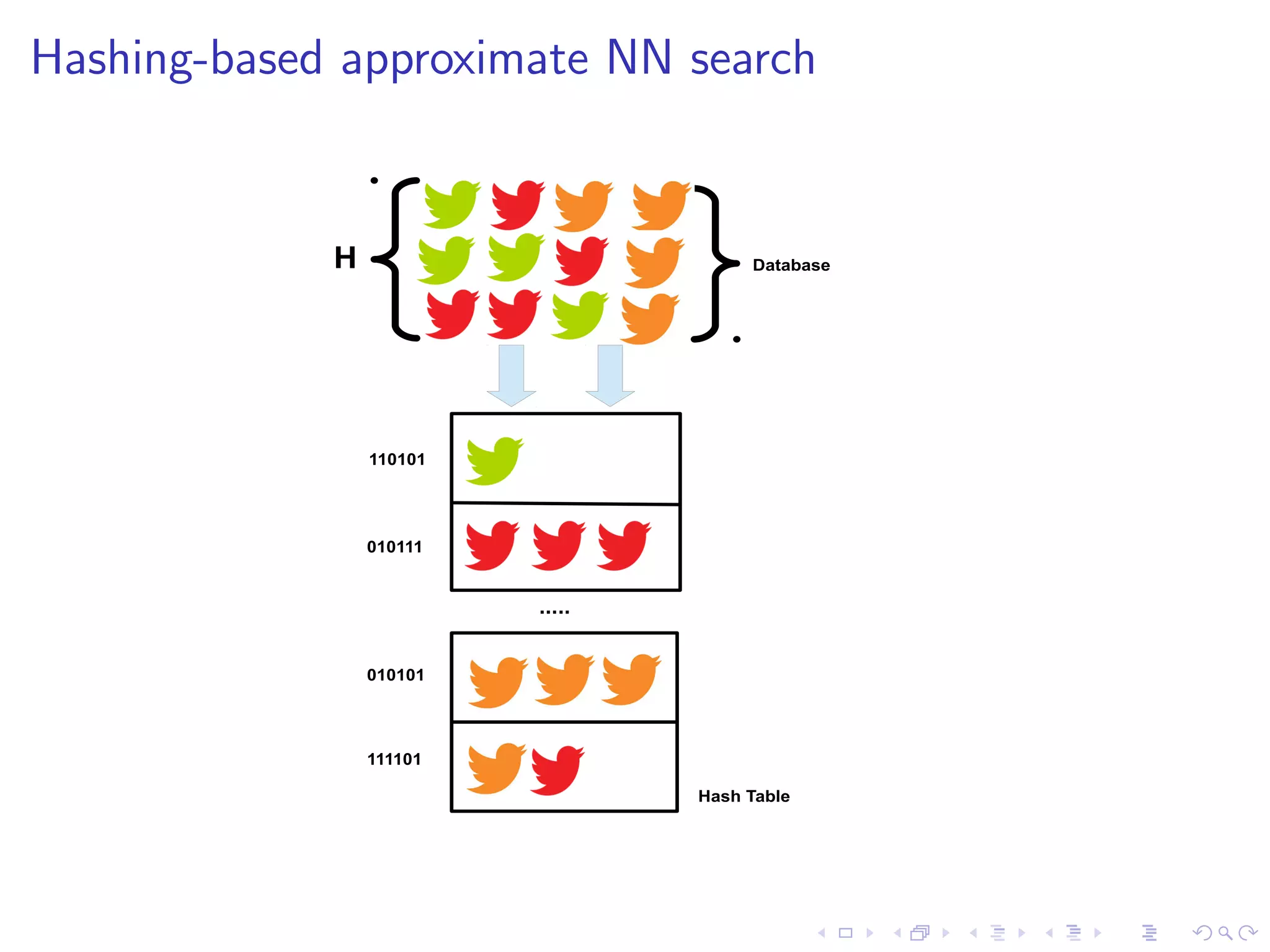

- Hashing-based approximate NN search operates in O(1) time [1]

[1] Real-Time Detection, Tracking and Monitoring of Discovered Events in Social Media. S. Moran et al. In ACL'14.](https://image.slidesharecdn.com/sean-talk-140915165803-phpapp02/75/Data-Science-Research-Day-Talk-8-2048.jpg)
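To make the O(1) claim concrete, here is a minimal sketch of hashing-based candidate lookup. It assumes sign-random-projection hashing with Gaussian hyperplanes and a plain Python dictionary as the hash table; the function names (`make_hyperplanes`, `hash_code`, `build_index`, `candidates`) and the toy vectors are illustrative and do not come from the talk. For a fixed code length, finding the query's bucket costs a fixed number of dot products plus one dictionary lookup, regardless of how many items are indexed.

```python
import random
from collections import defaultdict


def make_hyperplanes(num_bits, dim, seed=0):
    """Draw one Gaussian random normal vector per hash bit."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_bits)]


def hash_code(vector, hyperplanes):
    """One bit per hyperplane: which side of the hyperplane the vector lies on."""
    return tuple(
        1 if sum(w * x for w, x in zip(plane, vector)) >= 0 else 0
        for plane in hyperplanes
    )


def build_index(vectors, hyperplanes):
    """Group vector ids into buckets keyed by their binary code."""
    index = defaultdict(list)
    for vector_id, vector in enumerate(vectors):
        index[hash_code(vector, hyperplanes)].append(vector_id)
    return index


def candidates(query, index, hyperplanes):
    """Return the ids stored in the query's bucket (empty list if none)."""
    return index.get(hash_code(query, hyperplanes), [])


# Toy data: ids 0 and 1 point the same way, id 2 points the opposite way.
vectors = [[1.0, 0.0], [2.0, 0.0], [-1.0, 0.0]]
planes = make_hyperplanes(num_bits=4, dim=2)
index = build_index(vectors, planes)
found = candidates([3.0, 0.0], index, planes)
```

In this sketch only the items that share the query's bucket are ever compared against it, which is where the speed-up over a linear scan comes from.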

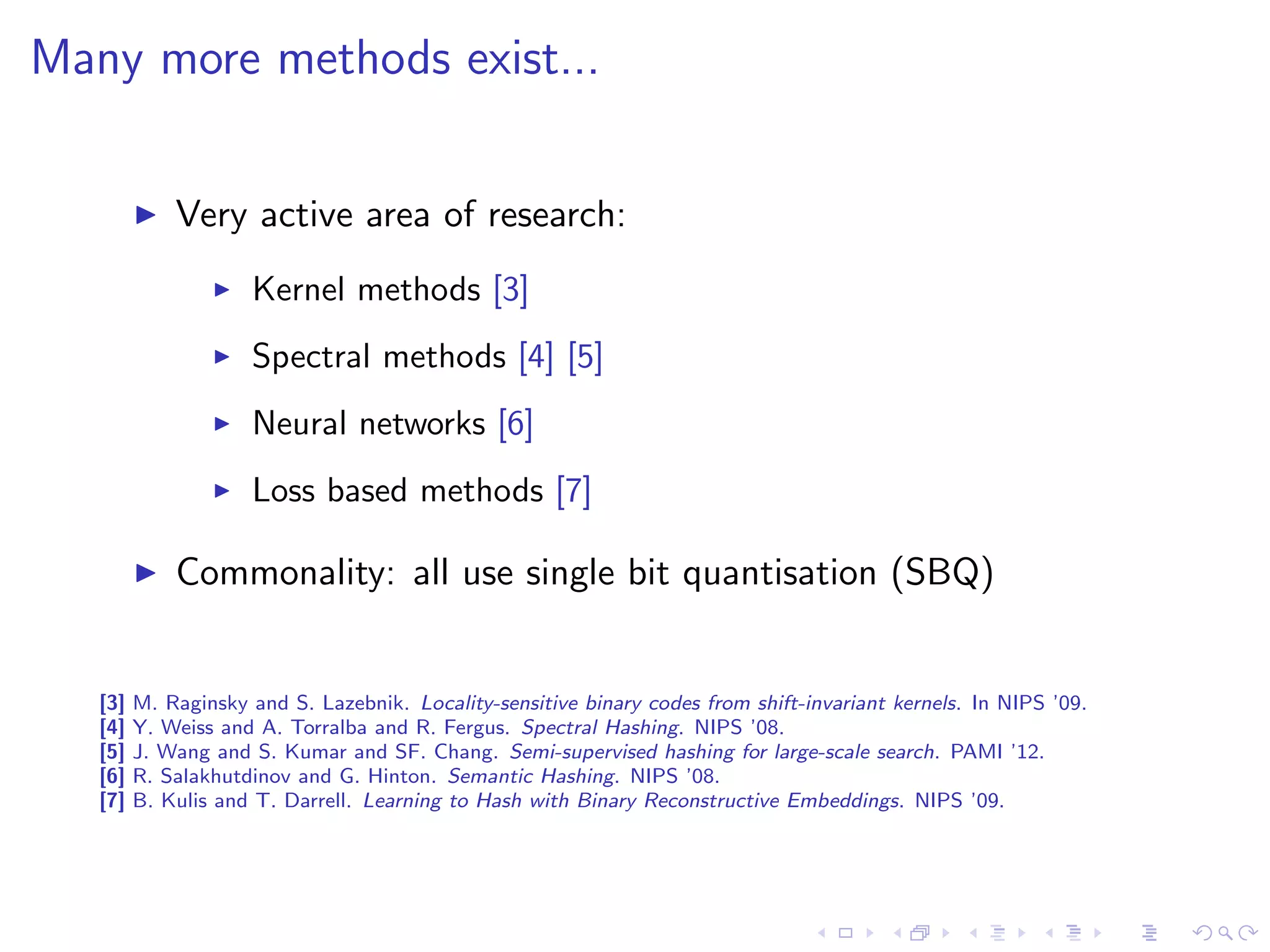

![Many more methods exist...

- Very active area of research:
  - Kernel methods [3]
  - Spectral methods [4] [5]
  - Neural networks [6]
  - Loss based methods [7]
- Commonality: all use single bit quantisation (SBQ)

[3] M. Raginsky and S. Lazebnik. Locality-sensitive binary codes from shift-invariant kernels. In NIPS '09.

[4] Y. Weiss and A. Torralba and R. Fergus. Spectral Hashing. NIPS '08.

[5] J. Wang and S. Kumar and SF. Chang. Semi-supervised hashing for large-scale search. PAMI '12.

[6] R. Salakhutdinov and G. Hinton. Semantic Hashing. NIPS '08.

[7] B. Kulis and T. Darrell. Learning to Hash with Binary Reconstructive Embeddings. NIPS '09.](https://image.slidesharecdn.com/sean-talk-140915165803-phpapp02/75/Data-Science-Research-Day-Talk-25-2048.jpg)
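As a rough illustration of what single bit quantisation does to a projected value, the sketch below thresholds each projection at zero and keeps one bit. The zero threshold and the three sample projections are assumptions chosen for illustration, not values from the talk.

```python
def sbq(projection, threshold=0.0):
    """Single bit quantisation: keep only the side of the threshold."""
    return 1 if projection >= threshold else 0


# Hypothetical projections of three points onto one hyperplane normal.
projections = {"a": -0.05, "b": 0.05, "c": 2.50}
bits = {name: sbq(value) for name, value in projections.items()}
```

Points `a` and `b` lie 0.1 apart yet receive different bits, while `b` and `c` lie 2.45 apart and receive the same bit. This is the kind of quantisation error that motivates using more than one bit per hyperplane.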

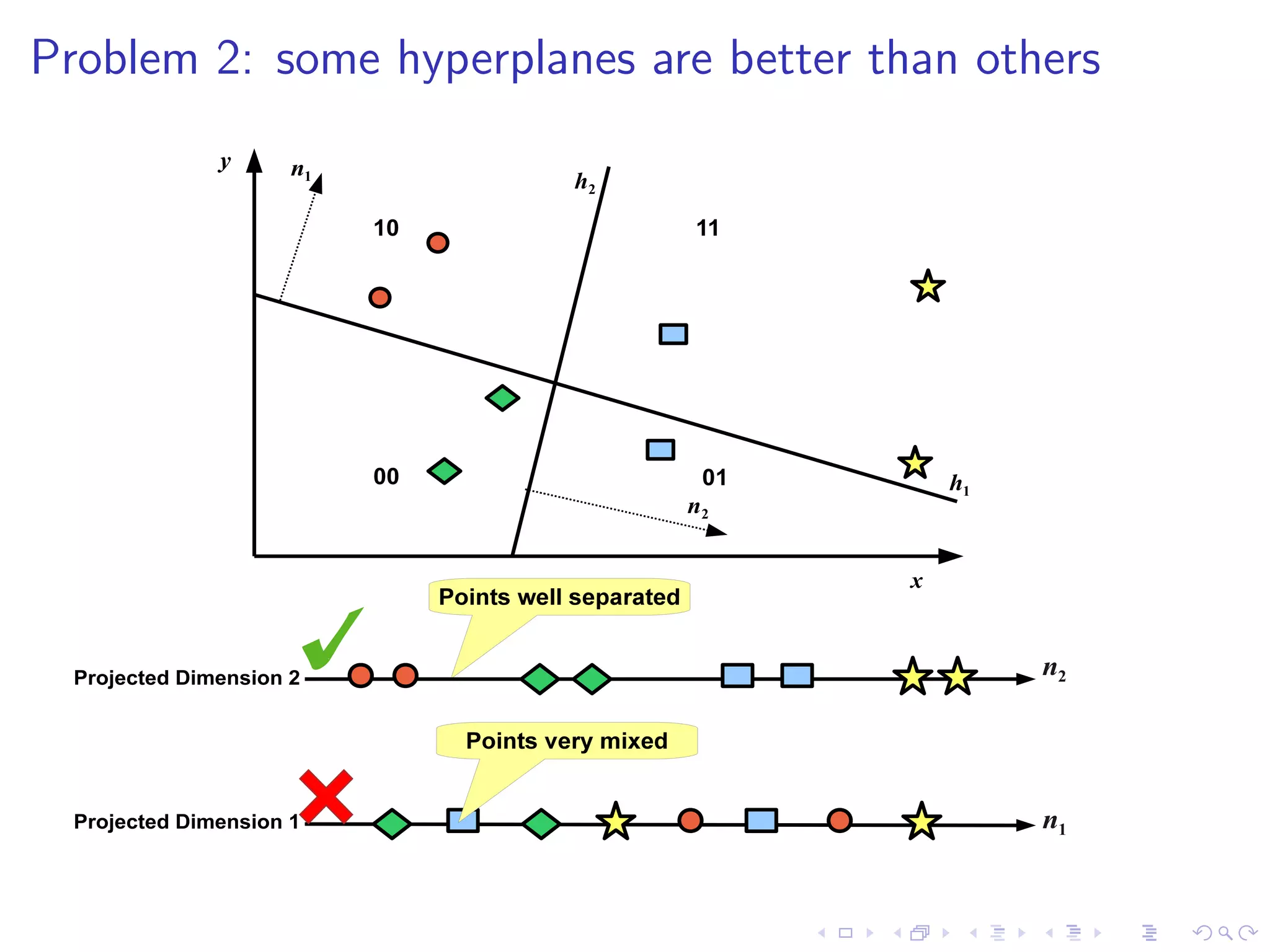

![Threshold Positioning

- Multiple bits per hyperplane requires multiple thresholds [8]
- F-score optimisation: maximise the number of related tweets falling inside the same thresholded regions:

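Figure: two placements of the thresholds t1, t2, t3 along the projected dimension (hyperplane normal n2), each splitting it into the regions 00, 01, 10, 11. One placement scores F1 = 1.00, the other F1 = 0.44.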

[8] Neighbourhood Preserving Quantisation for LSH. S. Moran et al. In SIGIR'13](https://image.slidesharecdn.com/sean-talk-140915165803-phpapp02/75/Data-Science-Research-Day-Talk-31-2048.jpg)
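The F-score for one threshold placement can be sketched as follows. This assumes a pairwise definition: a true positive is a related pair falling in the same region, a false positive is an unrelated pair in the same region, and a false negative is a related pair split across regions. The function names and toy projections are hypothetical, and the toy numbers are not meant to reproduce the scores on the slide.

```python
from bisect import bisect_right
from itertools import combinations


def region(value, thresholds):
    """Index of the region a projected value falls into (thresholds sorted)."""
    return bisect_right(thresholds, value)


def threshold_f1(projections, neighbour_pairs, thresholds):
    """Pairwise F1 of a threshold placement along one projected dimension."""
    thresholds = sorted(thresholds)
    regions = [region(value, thresholds) for value in projections]
    related_pairs = {frozenset(pair) for pair in neighbour_pairs}
    tp = fp = fn = 0
    for i, j in combinations(range(len(projections)), 2):
        same_region = regions[i] == regions[j]
        related = frozenset((i, j)) in related_pairs
        if same_region and related:
            tp += 1
        elif same_region:
            fp += 1
        elif related:
            fn += 1
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Toy example: eight points forming four related pairs.
projections = [-2.0, -1.8, -0.5, -0.3, 0.4, 0.6, 1.9, 2.1]
pairs = [(0, 1), (2, 3), (4, 5), (6, 7)]
good = threshold_f1(projections, pairs, [-1.0, 0.0, 1.0])
poor = threshold_f1(projections, pairs, [-1.9, -0.4, 2.0])
```

Three thresholds give four regions, which is why they map onto the two-bit codes 00, 01, 10, 11. A search over threshold positions would keep the placement with the highest score.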

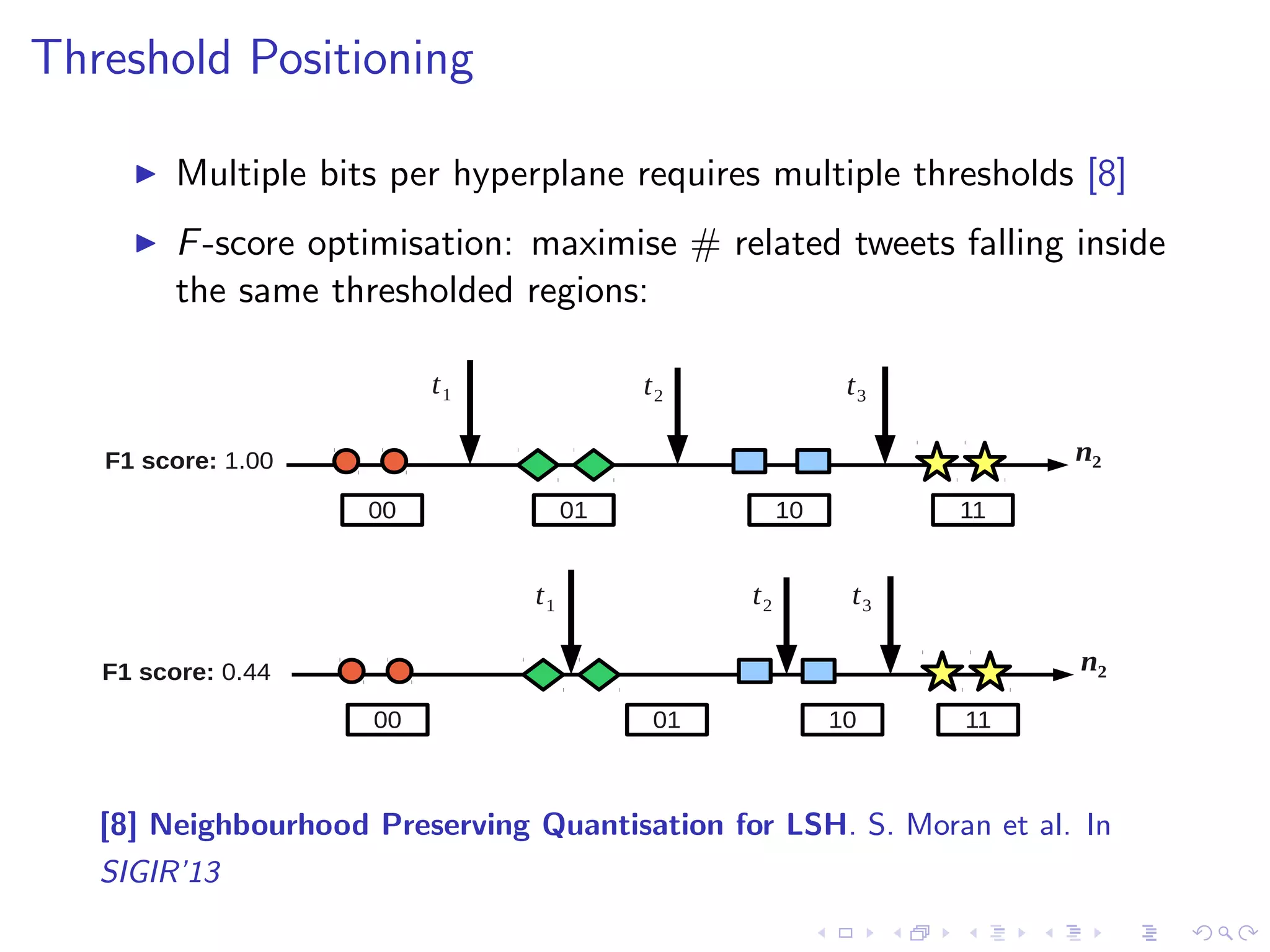

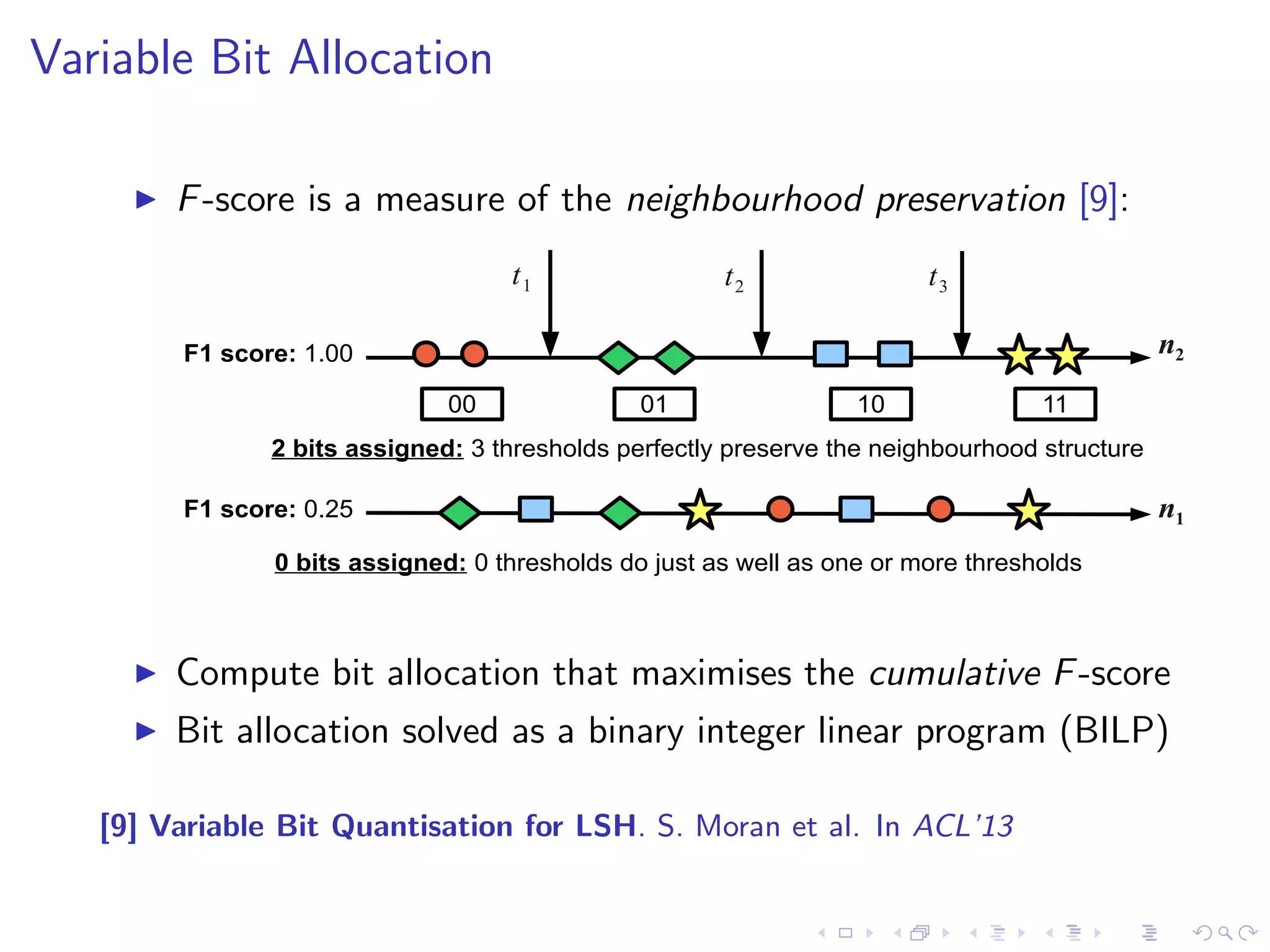

![Variable Bit Allocation

- F-score is a measure of the neighbourhood preservation [9]:

Figure: two hyperplanes with normals n1 and n2. One reaches F1 = 1.00 with thresholds t1, t2, t3 (regions 00, 01, 10, 11) and is assigned 2 bits: 3 thresholds perfectly preserve the neighbourhood structure. The other scores F1 = 0.25 and is assigned 0 bits: 0 thresholds do just as well as one or more thresholds.

- Compute the bit allocation that maximises the cumulative F-score
- Bit allocation is solved as a binary integer linear program (BILP)

[9] Variable Bit Quantisation for LSH. S. Moran et al. In ACL'13](https://image.slidesharecdn.com/sean-talk-140915165803-phpapp02/75/Data-Science-Research-Day-Talk-32-2048.jpg)

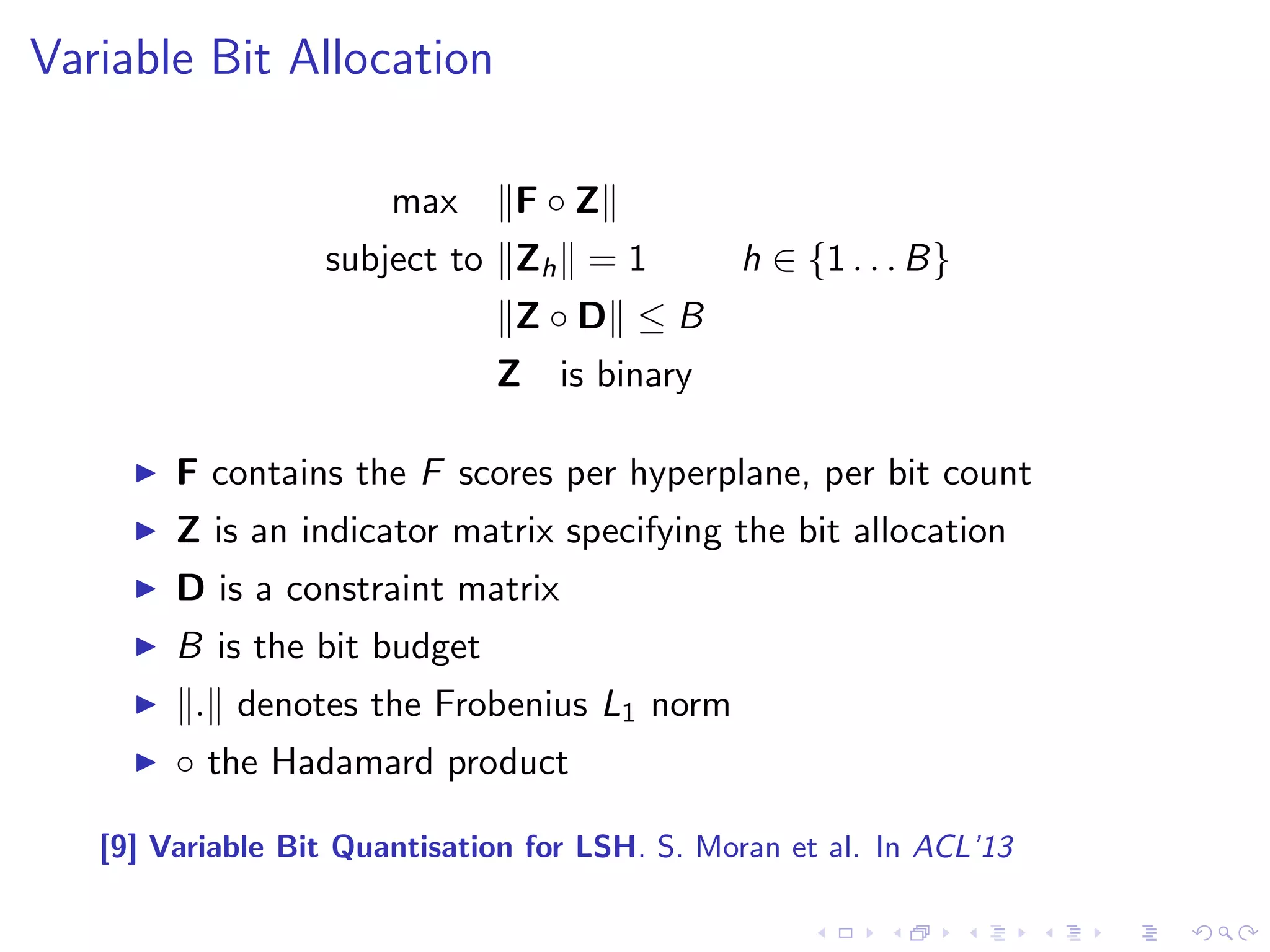

![Variable Bit Allocation

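$$
\begin{aligned}
\max_{Z} \quad & \lVert F \circ Z \rVert \\
\text{subject to} \quad & \lVert Z_h \rVert = 1, \quad h \in \{1, \dots, B\} \\
& \lVert Z \circ D \rVert \le B \\
& Z \text{ is binary}
\end{aligned}
$$

- $F$ contains the F-scores per hyperplane, per bit count
- $Z$ is an indicator matrix specifying the bit allocation
- $D$ is a constraint matrix
- $B$ is the bit budget
- $\lVert \cdot \rVert$ denotes the Frobenius L1 norm
- $\circ$ denotes the Hadamard product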

[9] Variable Bit Quantisation for LSH. S. Moran et al. In ACL'13](https://image.slidesharecdn.com/sean-talk-140915165803-phpapp02/75/Data-Science-Research-Day-Talk-33-2048.jpg)

![Variable Bit Allocation

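$$
\begin{aligned}
\max_{Z} \quad & \lVert F \circ Z \rVert \\
\text{subject to} \quad & \lVert Z_h \rVert = 1, \quad h \in \{1, \dots, B\} \\
& \lVert Z \circ D \rVert \le B \\
& Z \text{ is binary}
\end{aligned}
$$

Example with two hyperplanes (columns $h_1$, $h_2$) and three candidate bit counts (rows $b_0$, $b_1$, $b_2$):

$$
F = \begin{pmatrix} 0.25 & 0.25 \\ 0.35 & 0.50 \\ 0.40 & 1.00 \end{pmatrix}
\qquad
D = \begin{pmatrix} 0 & 0 \\ 1 & 1 \\ 2 & 2 \end{pmatrix}
\qquad
Z = \begin{pmatrix} 1 & 0 \\ 0 & 0 \\ 0 & 1 \end{pmatrix}
$$

- Sparse solution: lower quality hyperplanes discarded [9].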

[9] Variable Bit Quantisation for LSH. S. Moran et al. In ACL'13](https://image.slidesharecdn.com/sean-talk-140915165803-phpapp02/75/Data-Science-Research-Day-Talk-37-2048.jpg)
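The toy example can be checked by exhaustive search. The sketch below is not a BILP solver: it simply enumerates every allocation, which is only practical for a handful of hyperplanes. It assumes a bit budget of 2 (the slide does not state the budget used) and that row b of D charges b bits, as in the matrix shown. The names `allocate_bits` and `to_indicator` are hypothetical.

```python
from itertools import product

# F-scores from the slide: rows are 0, 1, 2 bits; columns are h1, h2.
F = [
    [0.25, 0.25],
    [0.35, 0.50],
    [0.40, 1.00],
]


def allocate_bits(F, budget):
    """Pick one bit count per hyperplane to maximise the summed F-score."""
    num_options = len(F)
    num_planes = len(F[0])
    best_score, best_alloc = -1.0, None
    for alloc in product(range(num_options), repeat=num_planes):
        if sum(alloc) > budget:  # total bits used must fit the budget
            continue
        score = sum(F[bits][h] for h, bits in enumerate(alloc))
        if score > best_score:
            best_score, best_alloc = score, alloc
    return best_alloc, best_score


def to_indicator(alloc, num_options):
    """Convert an allocation into the indicator matrix Z (rows are bit counts)."""
    return [[1 if bits == b else 0 for bits in alloc] for b in range(num_options)]


alloc, score = allocate_bits(F, budget=2)
Z = to_indicator(alloc, len(F))
```

Under these assumptions the search gives h1 zero bits and h2 two bits, which reproduces the Z matrix on the slide. For realistic numbers of hyperplanes the enumeration grows exponentially, which is why an integer programming formulation is used instead.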

![Evaluation Protocol

- Task: Text and image retrieval
- Projections: LSH [2], Shift-invariant kernel hashing (SIKH) [3], Spectral Hashing (SH) [4] and PCA-Hashing (PCAH) [5].
- Baselines: Single Bit Quantisation (SBQ), Manhattan Hashing (MQ) [10], Double Bit Quantisation (DBQ) [11].
- Evaluation: how well do we retrieve the NN of queries?

[2] P. Indyk and R. Motwani. Approximate nearest neighbors: removing the curse of dimensionality. In STOC '98.

[3] M. Raginsky and S. Lazebnik. Locality-sensitive binary codes from shift-invariant kernels. In NIPS '09.

[4] Y. Weiss and A. Torralba and R. Fergus. Spectral Hashing. NIPS '08.

[5] J. Wang and S. Kumar and SF. Chang. Semi-supervised hashing for large-scale search. PAMI '12.

[10] W. Kong and W. Li and M. Guo. Manhattan hashing for large-scale image retrieval. SIGIR '12.

[11] W. Kong and W. Li. Double Bit Quantisation for Hashing. AAAI '12.](https://image.slidesharecdn.com/sean-talk-140915165803-phpapp02/75/Data-Science-Research-Day-Talk-39-2048.jpg)