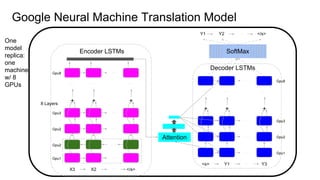

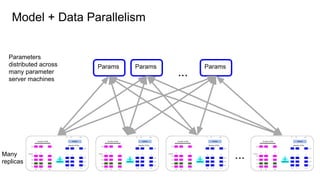

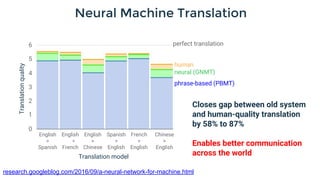

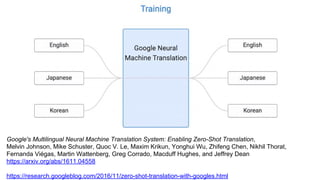

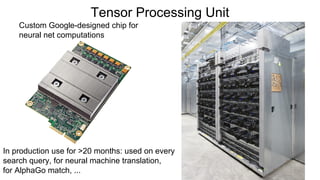

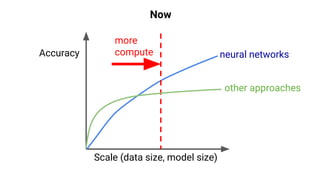

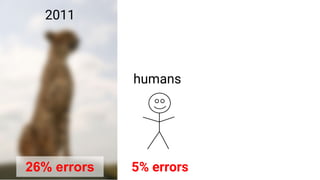

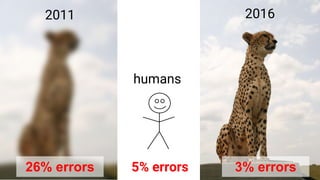

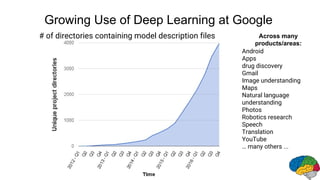

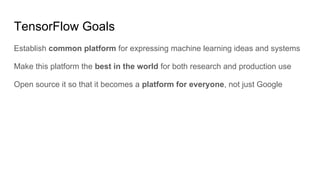

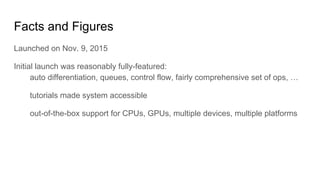

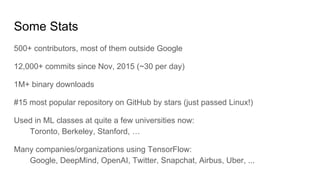

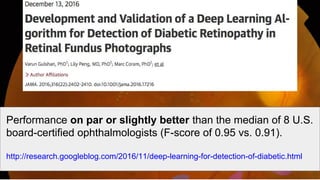

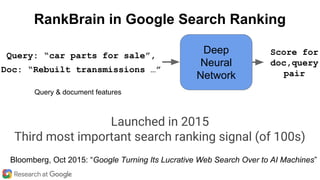

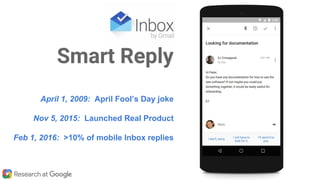

The document discusses advancements in deep learning, particularly through Google's TensorFlow, highlighting its impact on various applications such as search, language translation, and healthcare. It outlines TensorFlow's goals, features, and contributions to machine learning, emphasizing its scalability and flexibility for research and production use. Additionally, it describes the rapid progress and growing adoption of deep learning techniques within Google and beyond, showcasing their effectiveness in improving models across different tasks.

![Sequence-to-Sequence Model: a deep LSTM reads the input sequence A B C D, takes __ X Y Z as decoder inputs, and emits the target sequence X Y Z Q [Sutskever & Vinyals & Le, NIPS 2014]](https://image.slidesharecdn.com/jeffdean-170115012221/85/Jeff-Dean-at-AI-Frontiers-Trends-and-Developments-in-Deep-Learning-Research-49-320.jpg)

![Sequence-to-Sequence Model: Machine Translation. Input sentence: "Quelle est votre taille? <EOS>". Target sentence so far: "How" [Sutskever & Vinyals & Le, NIPS 2014]](https://image.slidesharecdn.com/jeffdean-170115012221/85/Jeff-Dean-at-AI-Frontiers-Trends-and-Developments-in-Deep-Learning-Research-50-320.jpg)

![Sequence-to-Sequence Model: Machine Translation. Input sentence: "Quelle est votre taille? <EOS>", followed by the decoder input "How". Target sentence so far: "How tall" [Sutskever & Vinyals & Le, NIPS 2014]](https://image.slidesharecdn.com/jeffdean-170115012221/85/Jeff-Dean-at-AI-Frontiers-Trends-and-Developments-in-Deep-Learning-Research-51-320.jpg)

![Sequence-to-Sequence Model: Machine Translation. Input sentence: "Quelle est votre taille? <EOS>", followed by the decoder input "How tall". Target sentence so far: "How tall are" [Sutskever & Vinyals & Le, NIPS 2014]](https://image.slidesharecdn.com/jeffdean-170115012221/85/Jeff-Dean-at-AI-Frontiers-Trends-and-Developments-in-Deep-Learning-Research-52-320.jpg)

![Sequence-to-Sequence Model: Machine Translation. Input sentence: "Quelle est votre taille? <EOS>", followed by the decoder input "How tall are". Target sentence: "How tall are you?" [Sutskever & Vinyals & Le, NIPS 2014]](https://image.slidesharecdn.com/jeffdean-170115012221/85/Jeff-Dean-at-AI-Frontiers-Trends-and-Developments-in-Deep-Learning-Research-53-320.jpg)
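The translation slides above build the target sentence one token at a time, feeding each emitted word back in as the next decoder input. A minimal sketch of that loop follows. The lookup table `TOY_NEXT_TOKEN` and the function `greedy_decode` are hypothetical stand-ins for a trained LSTM decoder, not anything taken from the slides; a real model would compute a probability distribution conditioned on the encoded input sentence.

```python
# Hypothetical toy "decoder": maps the tokens emitted so far to the next token.
# It stands in for a trained model conditioned on "Quelle est votre taille? <EOS>".
TOY_NEXT_TOKEN = {
    (): "How",
    ("How",): "tall",
    ("How", "tall"): "are",
    ("How", "tall", "are"): "you?",
    ("How", "tall", "are", "you?"): "<EOS>",
}


def greedy_decode(next_token, max_len=10, eos="<EOS>"):
    """Emit one token per step, feeding the output so far back in as input."""
    output = []
    for _ in range(max_len):
        token = next_token[tuple(output)]  # "predict" from the current prefix
        if token == eos:                   # stop once end-of-sequence is emitted
            break
        output.append(token)
    return output


print(greedy_decode(TOY_NEXT_TOKEN))  # → ['How', 'tall', 'are', 'you?']
```

Each pass through the loop corresponds to one of the slides: the prefix grows from nothing to "How", "How tall", "How tall are", and finally "How tall are you?".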

![Sequence-to-Sequence Model: Machine Translation. Input sentence: "Quelle est votre taille? <EOS>". At inference time: beam search to choose most probable over possible output sequences [Sutskever & Vinyals & Le, NIPS 2014]](https://image.slidesharecdn.com/jeffdean-170115012221/85/Jeff-Dean-at-AI-Frontiers-Trends-and-Developments-in-Deep-Learning-Research-54-320.jpg)
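The slide above names beam search as the inference-time procedure. The sketch below shows one simple way it can be written, assuming a function that returns next-token probabilities for a given prefix. The names `beam_search` and `toy_step_probs` and all the probabilities are illustrative assumptions, not values from the talk, and the scoring is a plain sum of log-probabilities with no length normalization.

```python
import math


def beam_search(step_probs, beam_width=2, max_len=5, eos="<EOS>"):
    """Return (tokens, log_prob) for the best sequence found.

    step_probs(prefix) must return a dict mapping next token -> probability.
    """
    beams = [((), 0.0)]   # (prefix, summed log-probability)
    finished = []         # hypotheses that have emitted eos
    for _ in range(max_len):
        candidates = []
        for prefix, score in beams:
            for token, p in step_probs(prefix).items():
                cand = (prefix + (token,), score + math.log(p))
                (finished if token == eos else candidates).append(cand)
        if not candidates:
            break
        # Keep only the beam_width highest-scoring unfinished prefixes.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
    return max(finished or beams, key=lambda c: c[1])


def toy_step_probs(prefix):
    # Hypothetical probabilities, chosen so the greedy first choice is a trap.
    table = {
        (): {"How": 0.4, "What": 0.6},
        ("What",): {"size": 0.5, "height": 0.5},
        ("How",): {"tall": 0.9, "big": 0.1},
    }
    return table.get(prefix, {"<EOS>": 1.0})


print(beam_search(toy_step_probs, beam_width=1)[0])  # → ('What', 'size', '<EOS>')
print(beam_search(toy_step_probs, beam_width=2)[0])  # → ('How', 'tall', '<EOS>')
```

With a beam width of 1 the search is greedy and commits to "What", ending with total probability 0.3. A width of 2 keeps "How" alive long enough to find the sequence with probability 0.36.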

![Image Captioning: the image (through W) and the tokens "__ A young girl" are the decoder inputs, and the emitted caption is "A young girl asleep" [Vinyals et al., CVPR 2015]](https://image.slidesharecdn.com/jeffdean-170115012221/85/Jeff-Dean-at-AI-Frontiers-Trends-and-Developments-in-Deep-Learning-Research-59-320.jpg)