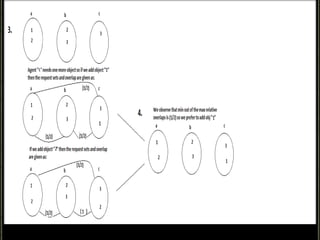

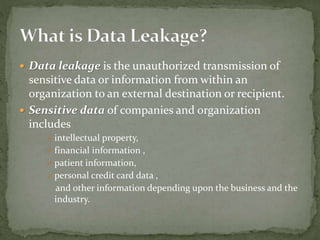

Data leakage occurs when sensitive data leaves an organization and reaches an unauthorized party. In the normal course of business, an organization must often hand sensitive data to trusted third-party agents, and it needs a way to tell whether one of those agents leaked it. The document proposes data allocation strategies that improve the organization's ability to identify which agent leaked the data, should a leak occur. The strategies give agents disjoint or minimally overlapping subsets of the real data and add fake records unique to each agent, so that leaked records point back to their source.
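The allocation-and-detection idea can be sketched in Python. This is a minimal illustration under stated assumptions, not the document's algorithm. Records are assumed to be hashable strings. Real records are split round-robin after a shuffle, so the agents' real subsets are disjoint, and each agent also receives fake records that no other agent gets. The function names `allocate` and `suspects`, the `fake-<agent>-<k>` record format, and the ranking rule (fake-record hits first, then overlap fraction) are all hypothetical.

```python
import random


def allocate(records, agents, fakes_per_agent, seed=0):
    """Give each agent a disjoint share of the real records plus its own fake records."""
    rng = random.Random(seed)
    shuffled = list(records)
    rng.shuffle(shuffled)
    allocation = {agent: set() for agent in agents}
    # Round-robin split: every real record goes to exactly one agent (zero overlap).
    for i, record in enumerate(shuffled):
        allocation[agents[i % len(agents)]].add(record)
    fakes = {}
    for agent in agents:
        # Hypothetical fake-record format; each fake is given to this agent only.
        fakes[agent] = {f"fake-{agent}-{k}" for k in range(fakes_per_agent)}
        allocation[agent] |= fakes[agent]
    return allocation, fakes


def suspects(leaked, allocation, fakes):
    """Rank agents by (fake records found in the leak, fraction of the leak they hold)."""
    leaked = set(leaked)
    scores = {}
    for agent, given in allocation.items():
        overlap = len(leaked & given) / len(leaked) if leaked else 0.0
        fake_hits = len(leaked & fakes[agent])
        scores[agent] = (fake_hits, overlap)
    return sorted(scores, key=scores.get, reverse=True)


# Usage: agent B leaks three of its real records and one of its fake records.
records = [f"customer-{i}" for i in range(10)]
allocation, fakes = allocate(records, ["A", "B", "C"], fakes_per_agent=2)
leaked = set(sorted(allocation["B"])[:3]) | {"fake-B-0"}
print(suspects(leaked, allocation, fakes)[0])  # B
```

Because each fake record was handed to a single agent, one fake appearing in a leak is strong evidence against that agent, and disjoint real subsets mean the overlap fraction separates agents cleanly. This ranking rule is a deliberately simple heuristic; a probabilistic estimate of each agent's guilt would be a natural refinement.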

![Watermarking](https://image.slidesharecdn.com/dataleakagedetection-140410130656-phpapp01/85/Data-leakage-detection-6-320.jpg)

Watermarking

Overview: A unique code is embedded in each distributed copy. If that copy is later discovered in the hands of an unauthorized party, the leaker can be identified.

Mechanism: The main idea is to generate a watermark W(x; y) using a secret key chosen by the sender, such that W(x; y) is indistinguishable from random noise for any entity that does not know the key (i.e., the recipients).
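A keyed watermark of this kind can be sketched in Python. This is an illustration under assumptions, not the scheme from the slide. The slide does not define x and y, so here the watermark is simply a pseudorandom ±1 sequence derived from the sender's secret key and a recipient identifier using HMAC-SHA256 in counter mode. Under the usual assumption that HMAC-SHA256 behaves as a pseudorandom function, the sequence looks like random noise to anyone without the key. The watermark is added to numeric values with a small hypothetical `strength`, and detection (which assumes the sender still holds the original values) picks the recipient whose sequence correlates best with the difference. All function names are hypothetical.

```python
import hashlib
import hmac


def watermark_pattern(key, recipient, n):
    """Keyed pseudorandom +/-1 sequence of length n for one recipient."""
    signs = []
    counter = 0
    while len(signs) < n:
        # HMAC-SHA256 over "recipient:counter" yields 32 bytes of keyed pseudorandom output.
        block = hmac.new(key, f"{recipient}:{counter}".encode(), hashlib.sha256).digest()
        for byte in block:
            for bit in range(8):
                signs.append(1 if (byte >> bit) & 1 else -1)
        counter += 1
    return signs[:n]


def embed(values, key, recipient, strength=0.5):
    """Return a copy of the values with the recipient's watermark added."""
    pattern = watermark_pattern(key, recipient, len(values))
    return [v + strength * s for v, s in zip(values, pattern)]


def detect(suspect_values, original_values, key, recipients):
    """Return the recipient whose watermark best matches the suspect copy."""
    diff = [s - o for s, o in zip(suspect_values, original_values)]

    def correlation(recipient):
        pattern = watermark_pattern(key, recipient, len(diff))
        return sum(d * p for d, p in zip(diff, pattern))

    return max(recipients, key=correlation)


# Usage: each recipient gets a differently watermarked copy; a leaked copy is traced back.
original = [float(i) for i in range(256)]
key = b"sender-secret"  # hypothetical secret key chosen by the sender
copies = {r: embed(original, key, r) for r in ["alice", "bob", "carol"]}
print(detect(copies["bob"], original, key, list(copies)))  # bob
```

The correct recipient's correlation equals `strength * n`, while an unrelated recipient's sequence is independent and its correlation stays near zero, which is what makes the leaker identifiable. Real watermarking schemes must also survive edits such as rounding or partial copying, which this sketch does not attempt.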