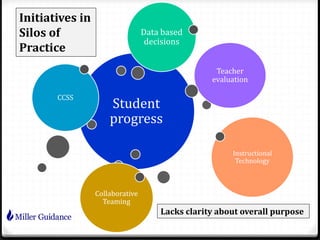

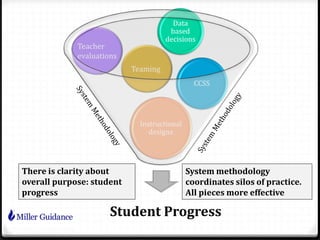

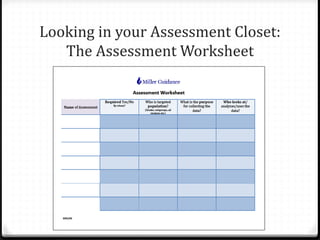

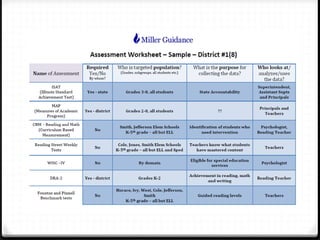

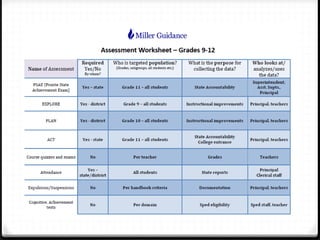

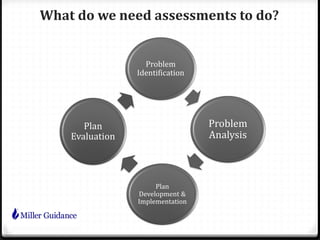

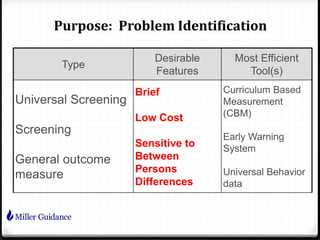

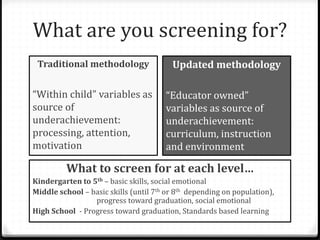

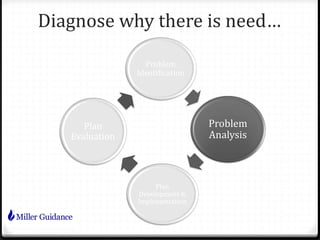

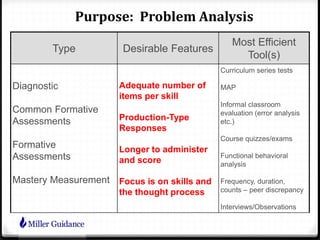

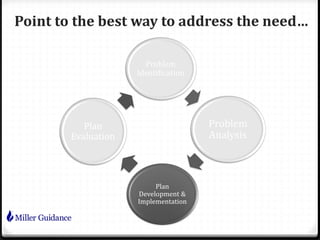

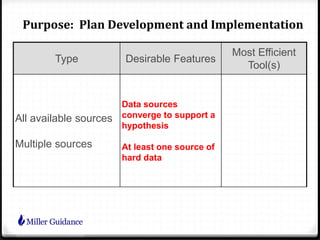

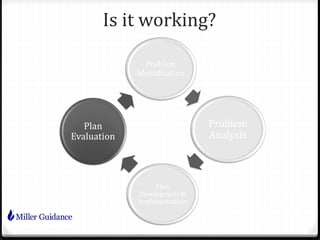

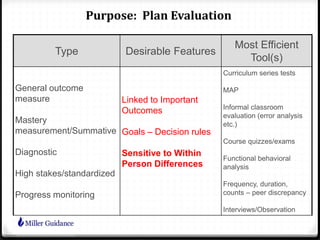

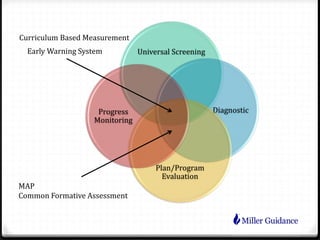

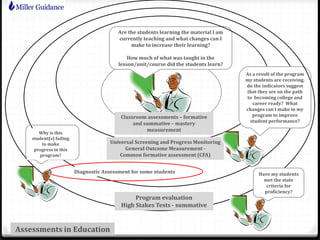

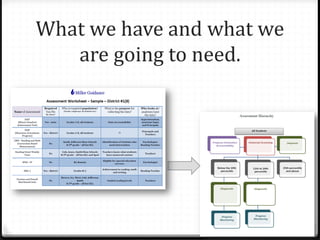

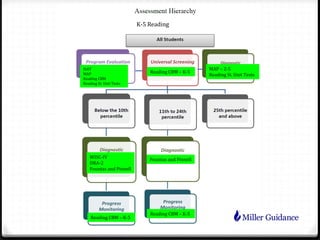

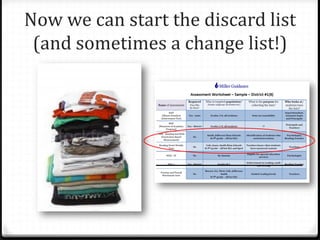

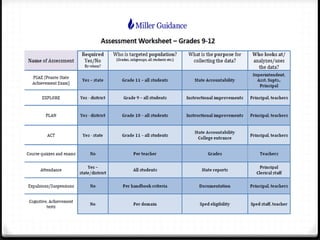

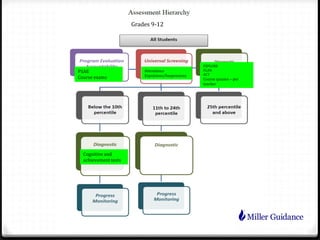

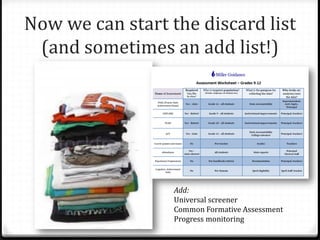

Many assessments are in use, but there is little clarity about their purposes. An organized assessment plan is needed to coordinate the different initiatives and ensure that all elements work together toward the overall goal of student progress. The first step is to examine the assessments currently in use and discard any that do not serve a clear need, which simplifies the system. A valid, reliable, and coordinated assessment approach can then provide the right data to inform instructional decisions at each level, from problem identification to evaluation of plans.