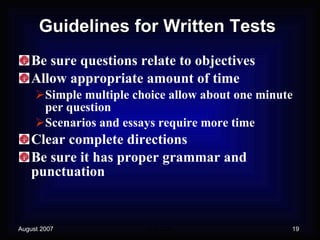

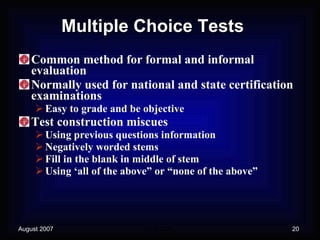

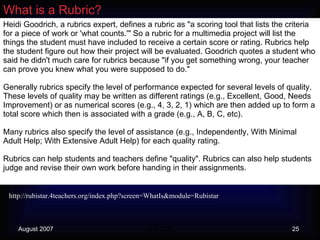

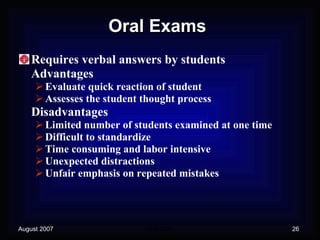

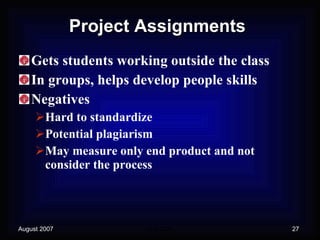

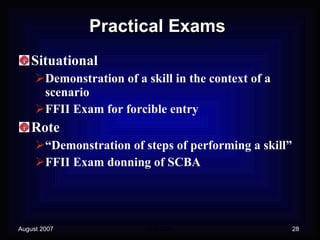

This document discusses techniques for testing and evaluation in fire service training. It covers the four levels of evaluation (reaction, learning, transfer, and business results), the difference between summative and formative evaluation, and the main types of tests: written, oral, practical, and performance evaluations. It provides guidelines for constructing written tests, including multiple-choice, true/false, matching, completion, and essay items, and discusses sources for test materials.