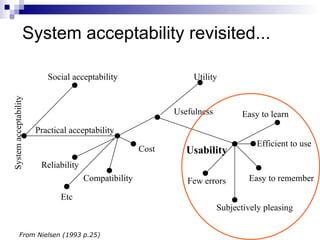

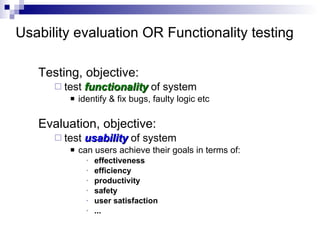

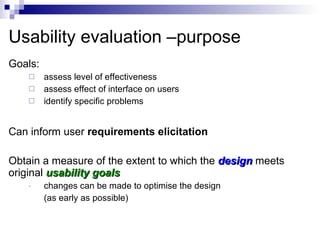

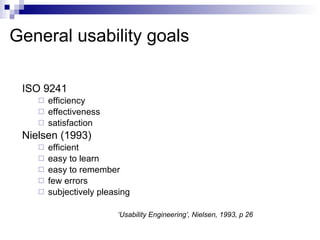

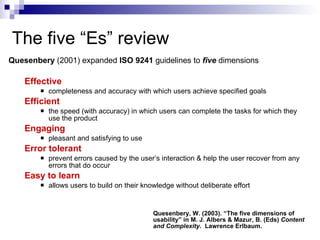

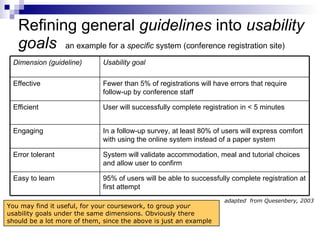

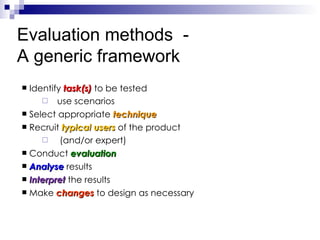

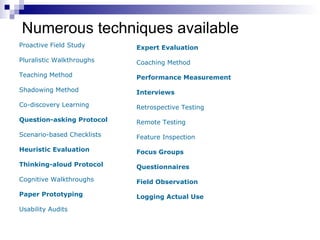

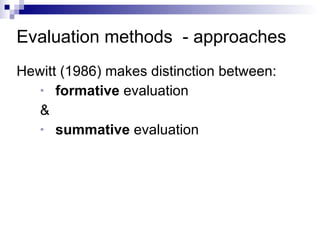

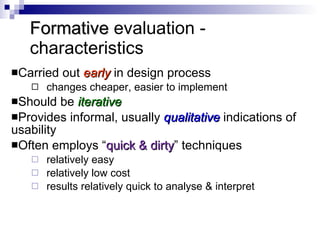

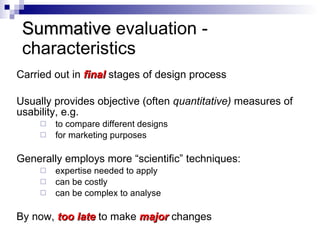

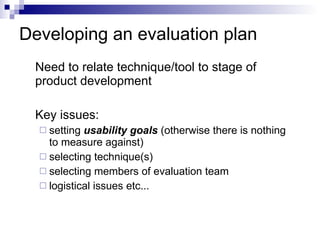

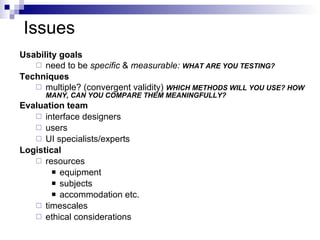

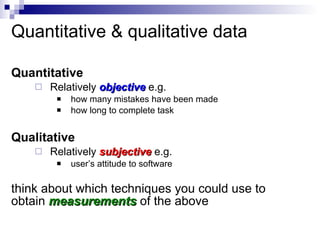

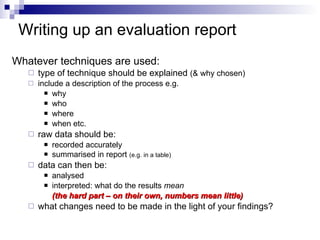

This document gives an overview of usability evaluation techniques for formative testing. It defines usability and explains the purpose of usability evaluation: to identify problems, inform requirements, and optimize the design early. It describes a variety of formative techniques, including thinking aloud, heuristic evaluation, and paper prototyping. The document emphasizes that a usability evaluation should have specific, measurable goals and should yield both qualitative and quantitative data, which are then analyzed and interpreted to improve the design.
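
As a rough illustration of what "specific, measurable goals" combined with qualitative and quantitative data might look like in practice, the sketch below summarizes a handful of test sessions against two targets. Everything in it is an assumption made for illustration and is not taken from the slides: the `Session` record, the `summarize` function, the choice of task completion rate and mean time on task as metrics, and the target values a caller would pass in.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Session:
    """One participant's attempt at a task (hypothetical record layout)."""
    participant: str
    completed: bool   # quantitative: did the participant finish the task?
    seconds: float    # quantitative: time spent on the task
    notes: str = ""   # qualitative: observer notes, e.g. from thinking aloud


def summarize(sessions, target_completion_rate, target_mean_seconds):
    """Compare observed metrics with the stated goals (illustrative only)."""
    if not sessions:
        raise ValueError("at least one session is required")

    completion_rate = sum(s.completed for s in sessions) / len(sessions)

    # Time on task is averaged over successful attempts only.
    completed_times = [s.seconds for s in sessions if s.completed]
    mean_seconds = mean(completed_times) if completed_times else None

    return {
        "completion_rate": completion_rate,
        "mean_seconds": mean_seconds,
        "meets_completion_goal": completion_rate >= target_completion_rate,
        "meets_time_goal": (
            mean_seconds is not None and mean_seconds <= target_mean_seconds
        ),
        # Qualitative observations are kept alongside the numbers so they
        # can be interpreted together.
        "notes": [s.notes for s in sessions if s.notes],
    }
```

A caller would pass a list of `Session` records together with targets agreed before the test, for example `summarize(sessions, target_completion_rate=0.7, target_mean_seconds=60)`. The numbers indicate whether a goal was met, and the notes help explain why.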