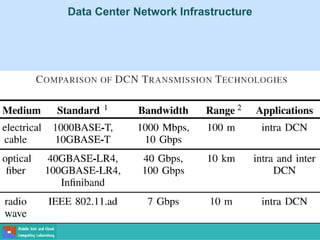

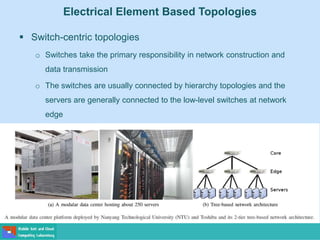

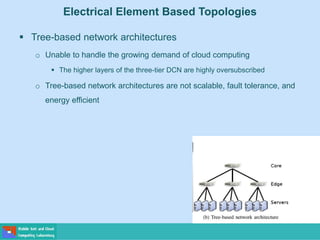

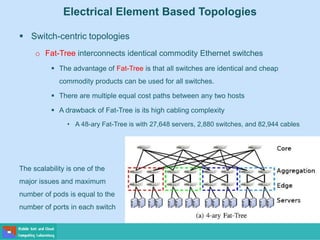

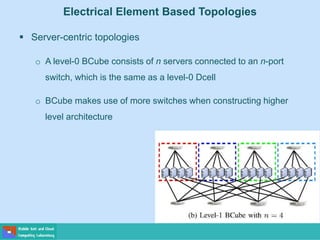

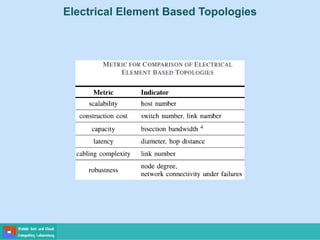

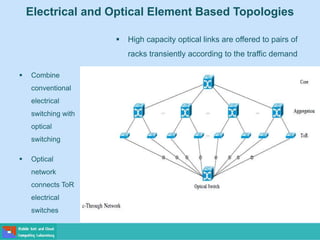

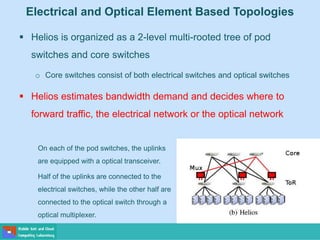

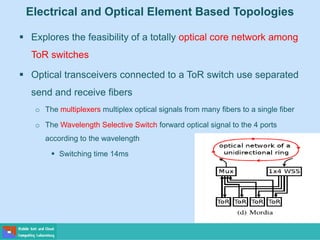

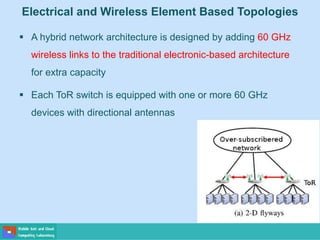

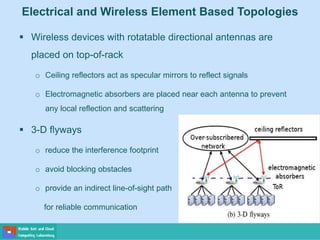

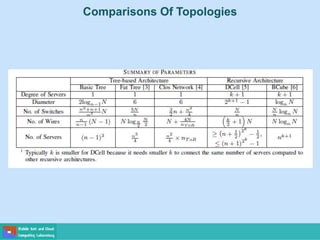

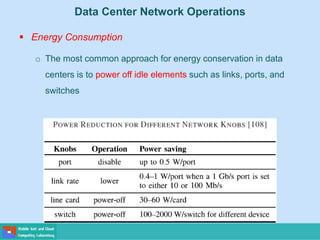

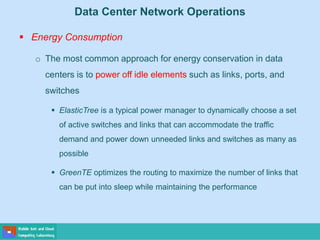

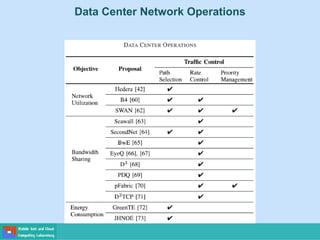

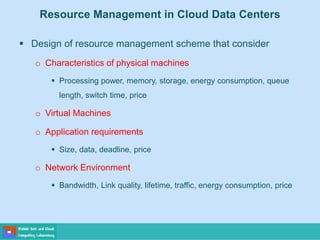

This document discusses data center networks, which interconnect large numbers of computing and storage nodes. It outlines the main challenges in data center network design: large scale, high energy consumption, and strict service requirements. It then describes the types of data center network infrastructure, namely intra-data center and inter-data center networks. For intra-data center networks, it covers electrical, electrical/optical, and electrical/wireless topologies, as well as traffic control methods.