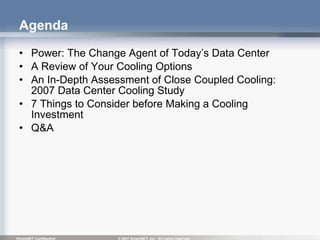

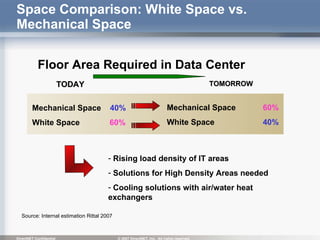

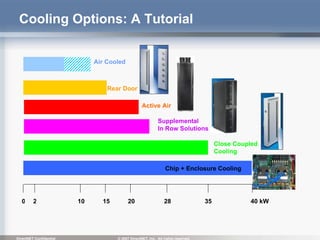

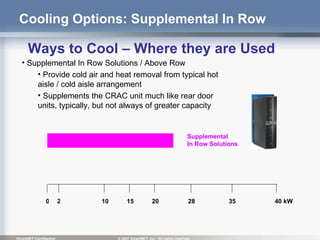

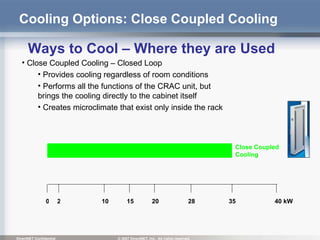

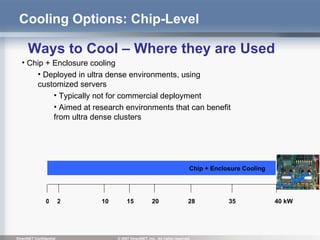

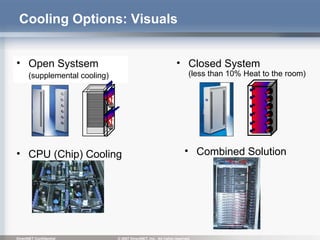

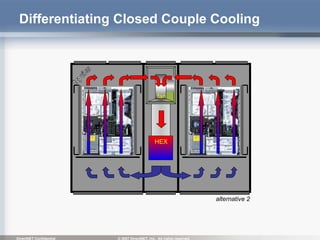

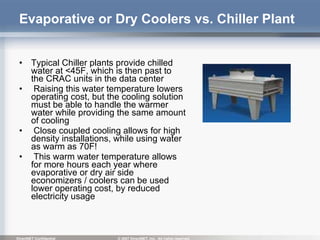

The document presents a 2007 study comparing conventional raised-floor cooling systems with close-coupled cooling technology for data centers. It outlines the advantages of close-coupled cooling, such as improved energy efficiency and the ability to handle high-density IT loads, and discusses the available cooling options and current trends. The study emphasizes cost savings in energy and real estate, as well as the need for adaptive infrastructure in modern data centers.

![Q&A To Receive a Copy of Today’s Presentation: [email_address]](https://image.slidesharecdn.com/lcppresentationfinal-091210114647-phpapp02/85/Data-Center-Cooling-Study-on-Liquid-Cooling-40-320.jpg)