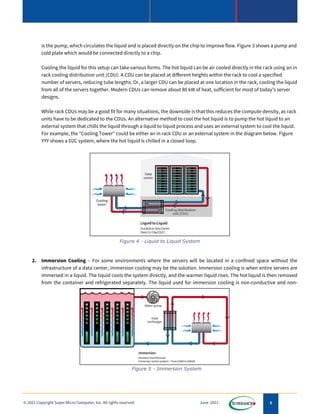

The document discusses server cooling challenges as CPUs and GPUs generate more heat. It presents liquid cooling as a viable alternative to traditional air cooling because it removes heat more efficiently and, by the document's estimate, can reduce data center operating costs by over 40%. It describes several types of liquid cooling systems, including direct-to-chip cooling, immersion cooling, and rear door heat exchangers. Liquid cooling is shown to improve power usage effectiveness (PUE) and to enable higher-performance computing within the same data center footprint. Supermicro offers several server solutions that integrate liquid cooling capabilities.
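To make the power usage effectiveness claim concrete, here is a minimal sketch of how the metric is usually computed: total facility power divided by IT equipment power, where a value closer to 1.0 means less overhead. The function name `pue` and all wattage figures are hypothetical and chosen only for illustration; they are not taken from the document.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


# Hypothetical figures, for illustration only (not from the document).
it_load_kw = 1000.0             # power drawn by servers, storage, and network gear
air_overhead_kw = 600.0         # assumed cooling and other overhead with air cooling
liquid_overhead_kw = 200.0      # assumed overhead with liquid cooling

air_cooled_pue = pue(it_load_kw + air_overhead_kw, it_load_kw)
liquid_cooled_pue = pue(it_load_kw + liquid_overhead_kw, it_load_kw)

print(f"Air-cooled PUE:    {air_cooled_pue:.2f}")
print(f"Liquid-cooled PUE: {liquid_cooled_pue:.2f}")
```

Under these assumed numbers, the same IT load is served with less total facility power, which is what a lower PUE expresses. Real values depend on climate, facility design, and the type of liquid cooling deployed.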