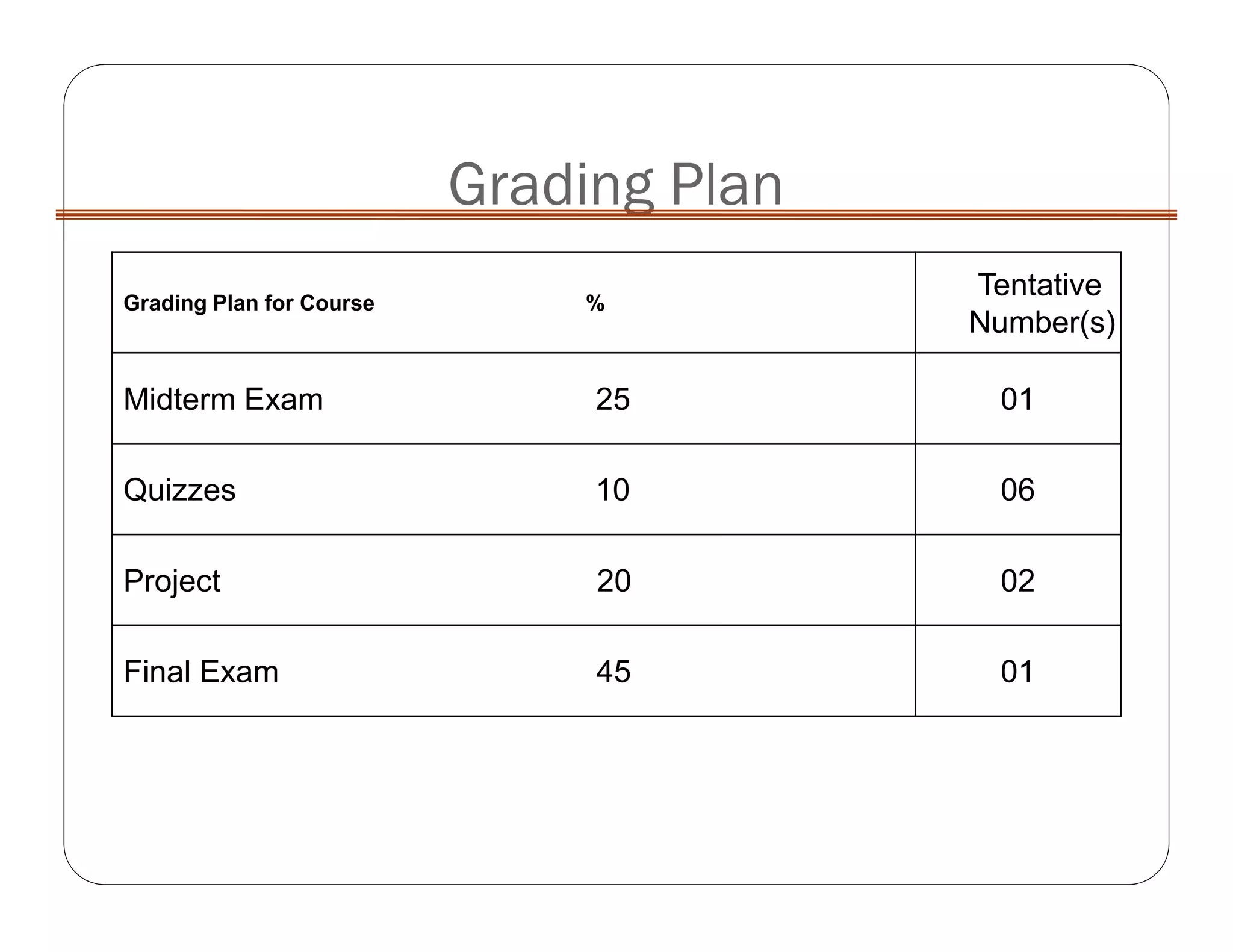

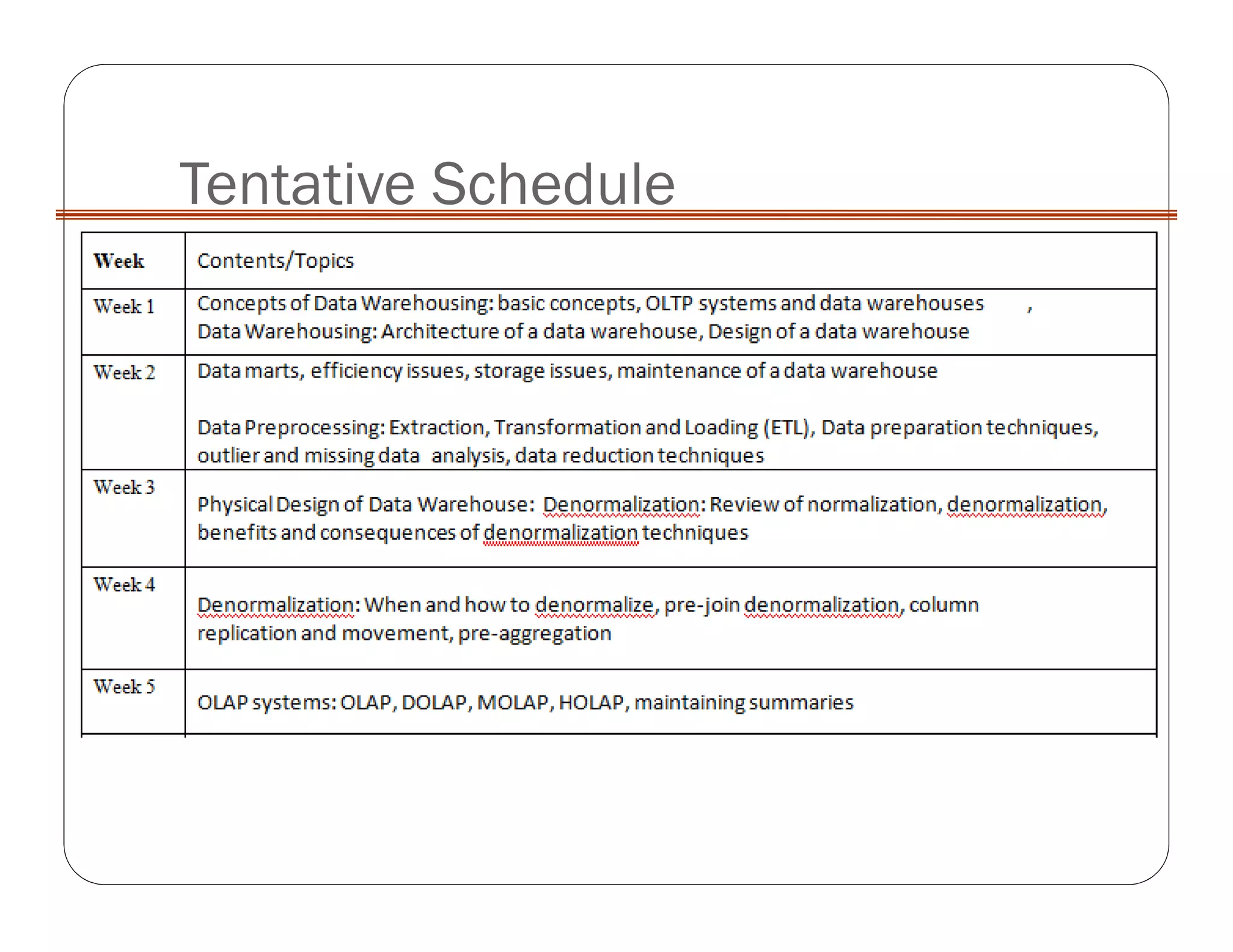

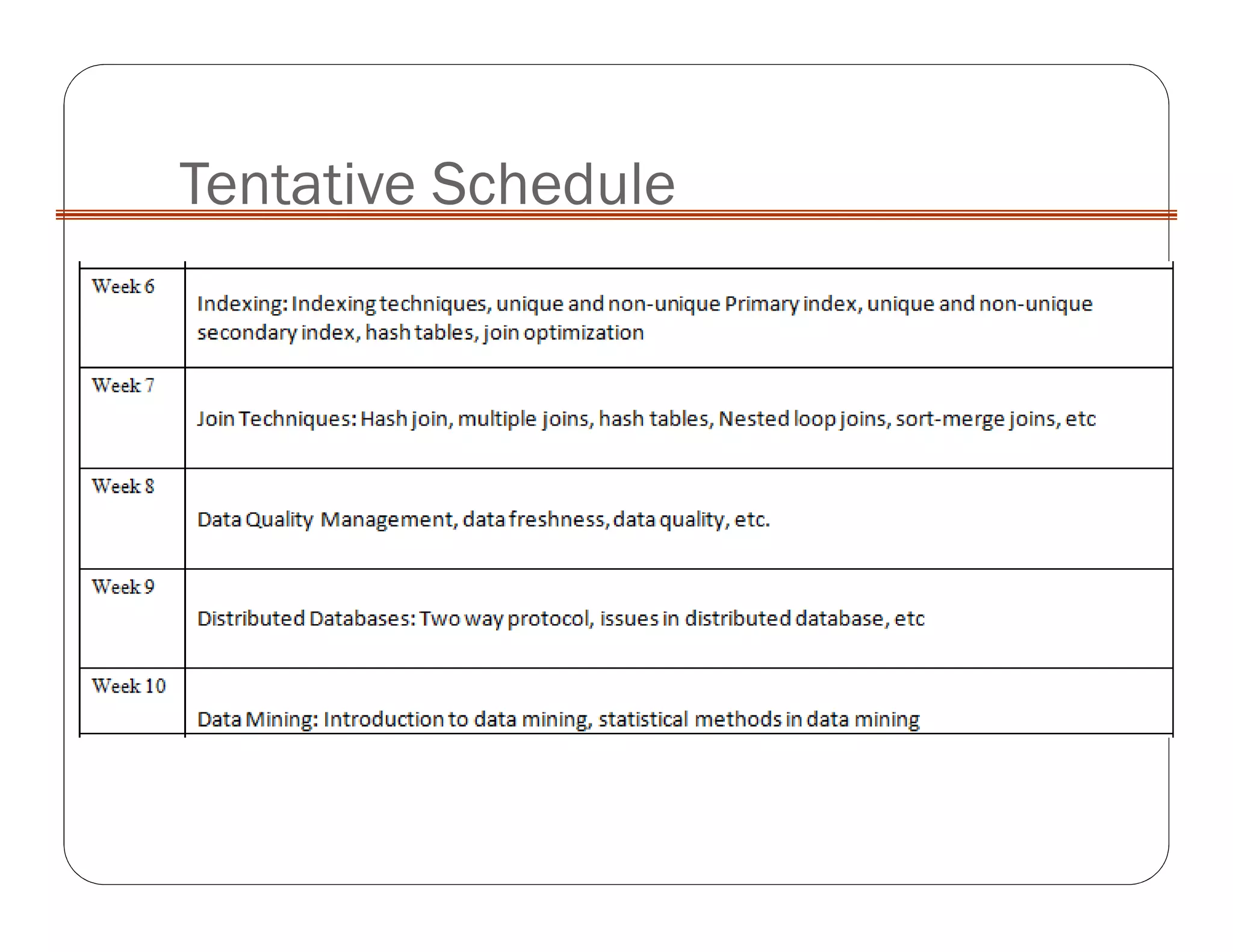

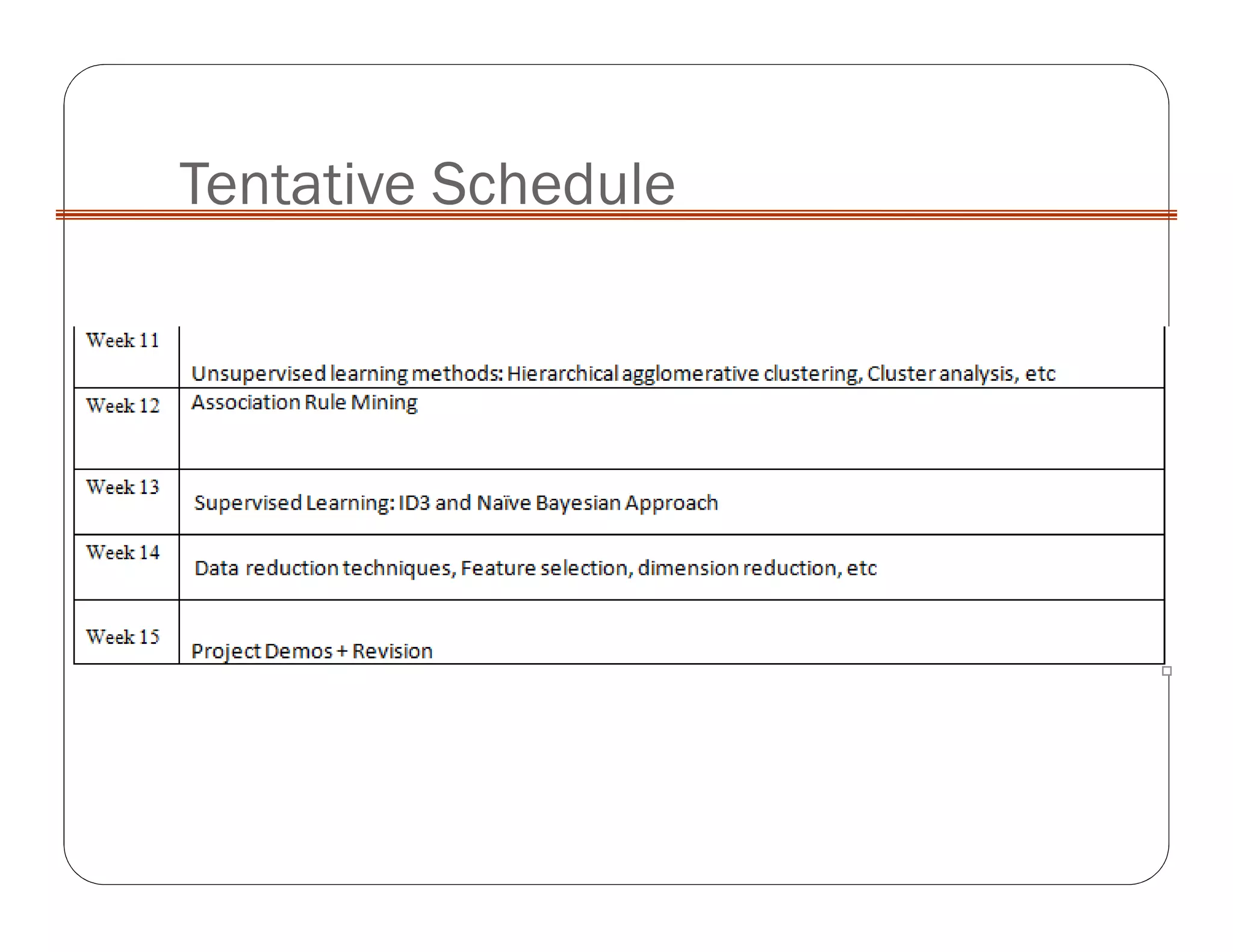

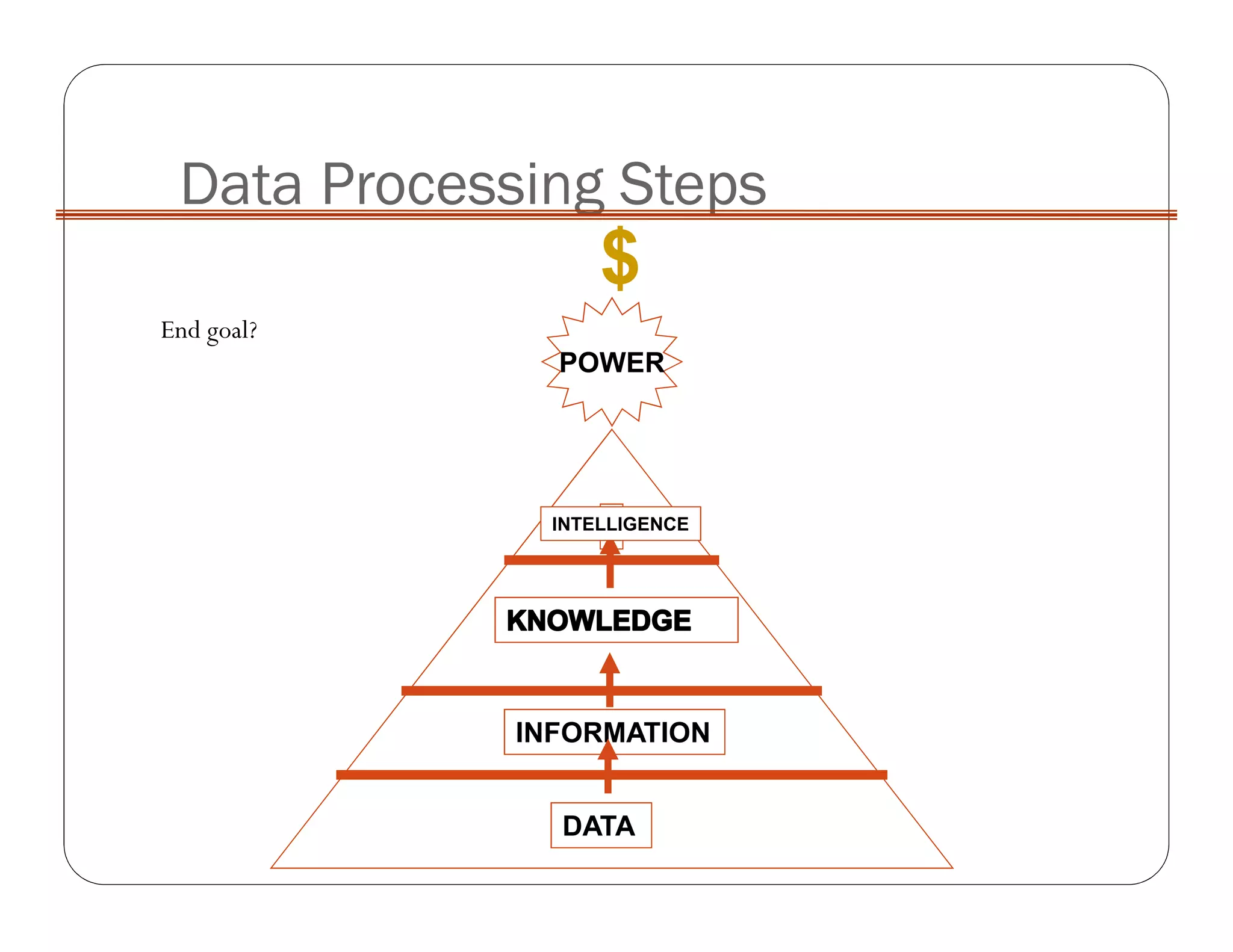

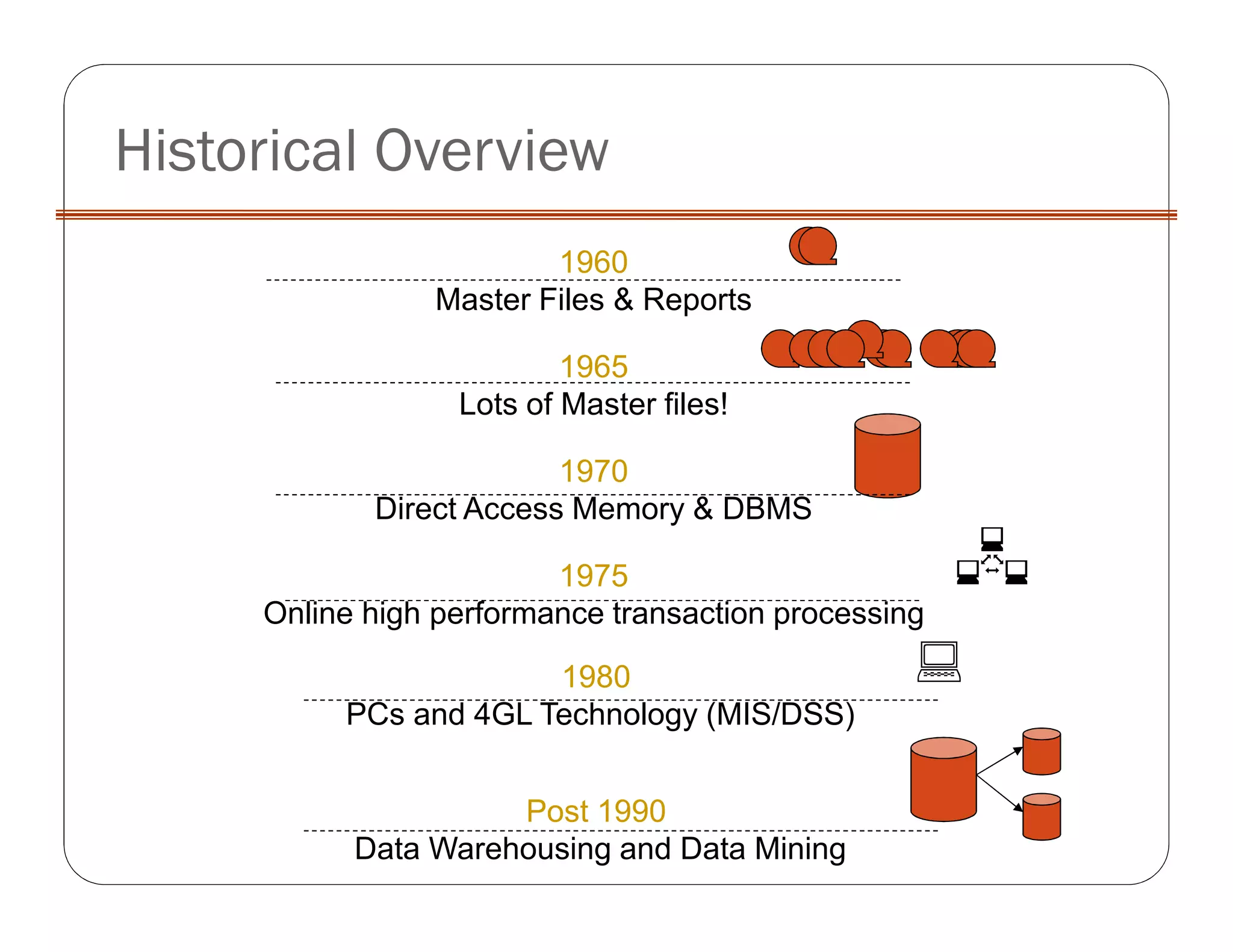

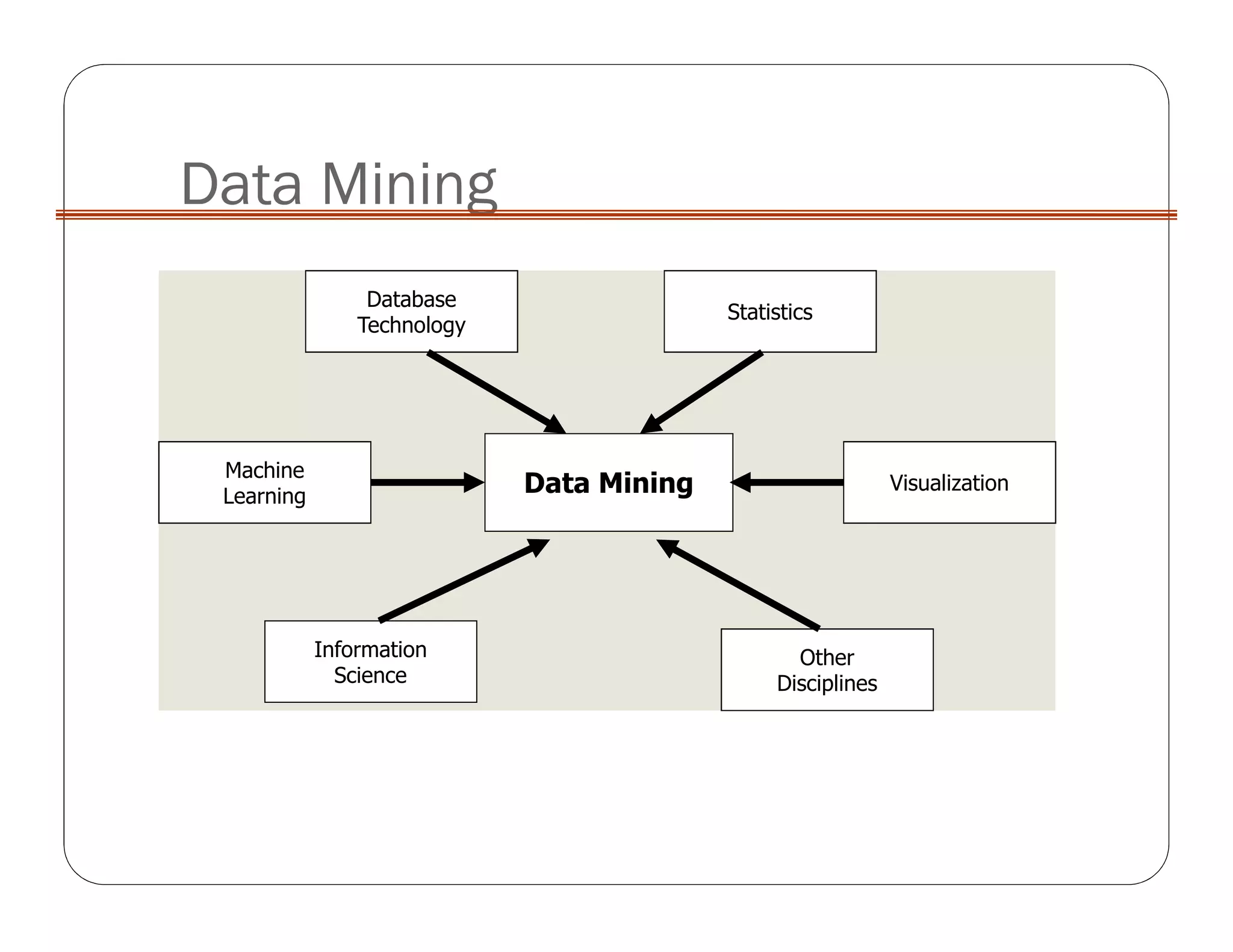

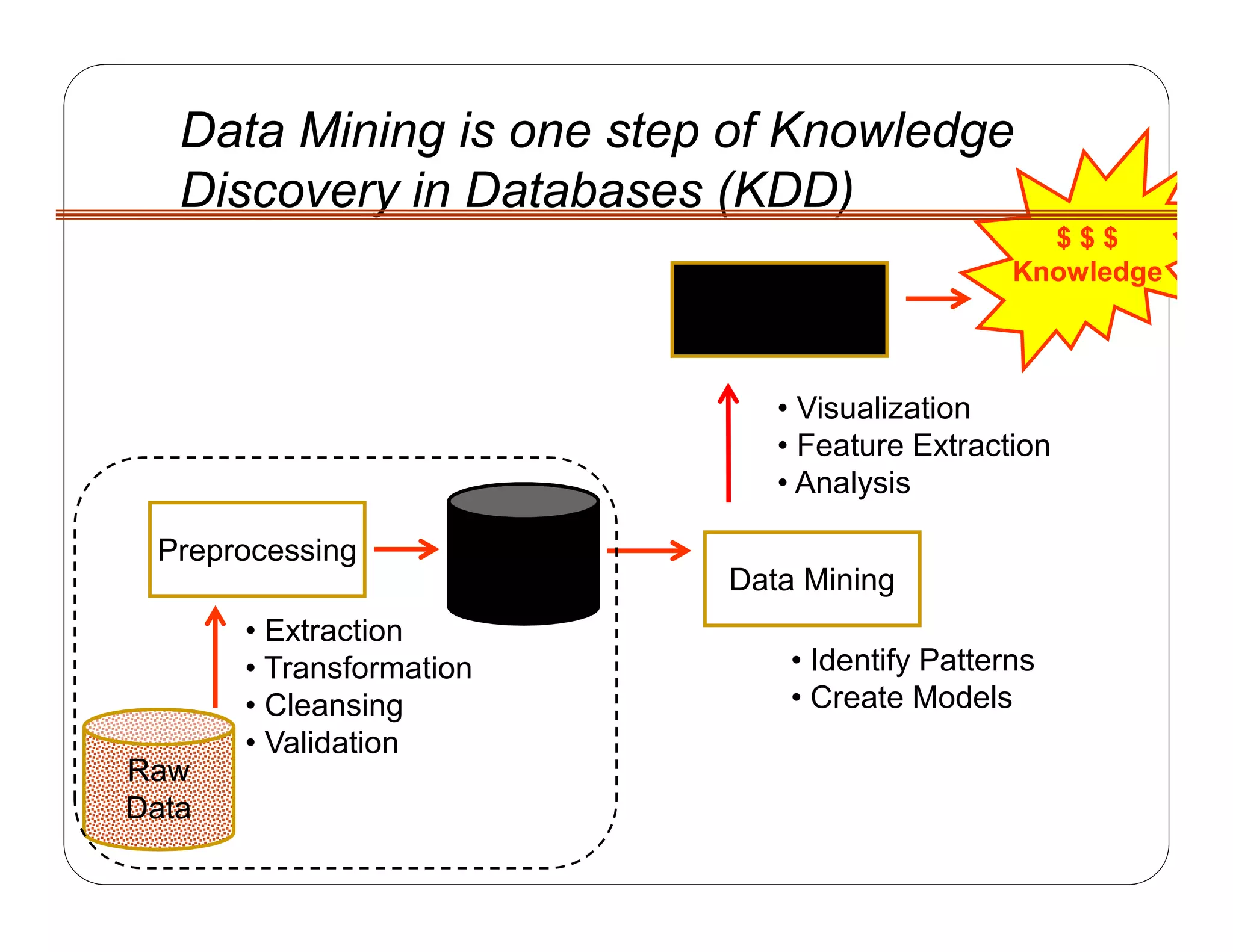

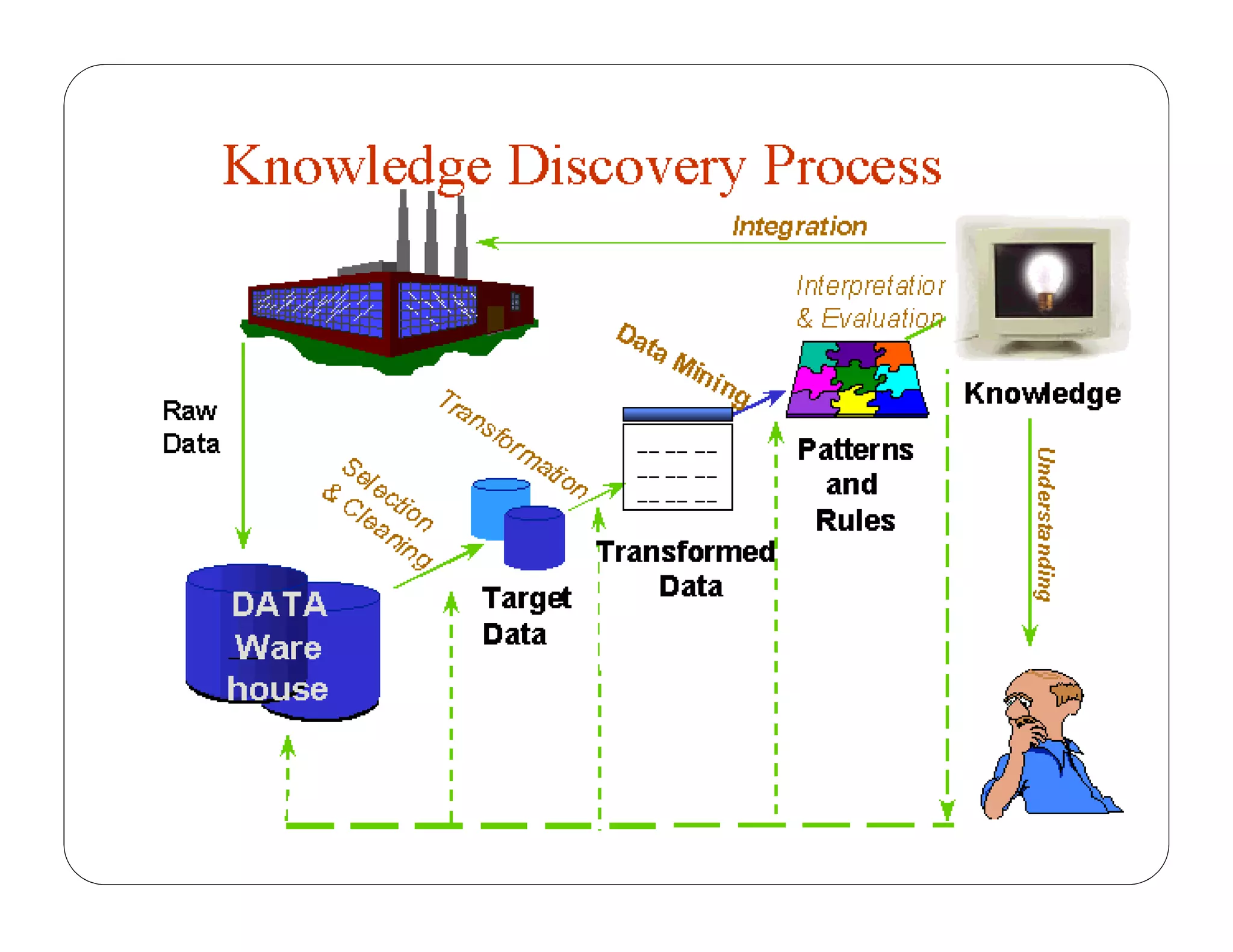

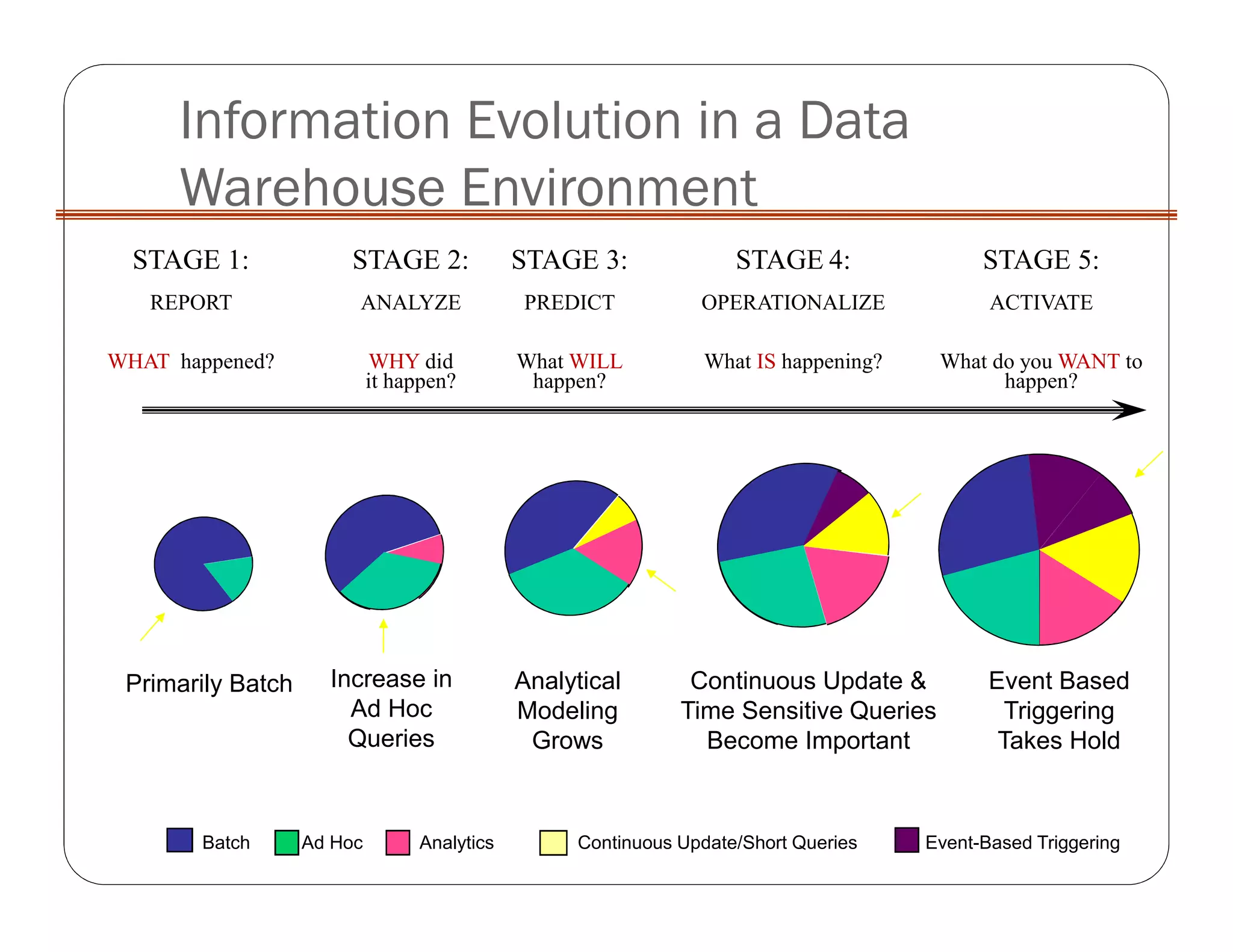

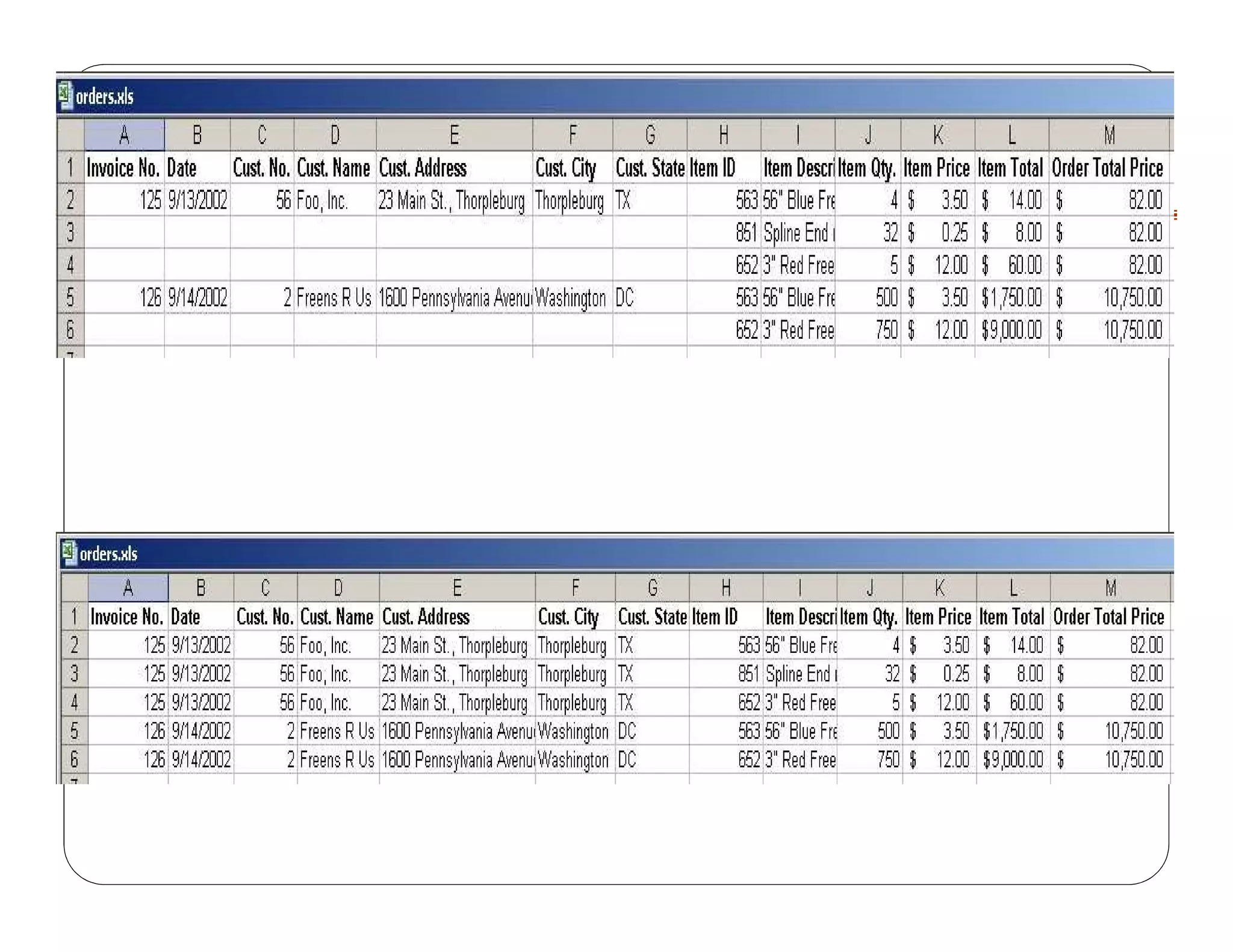

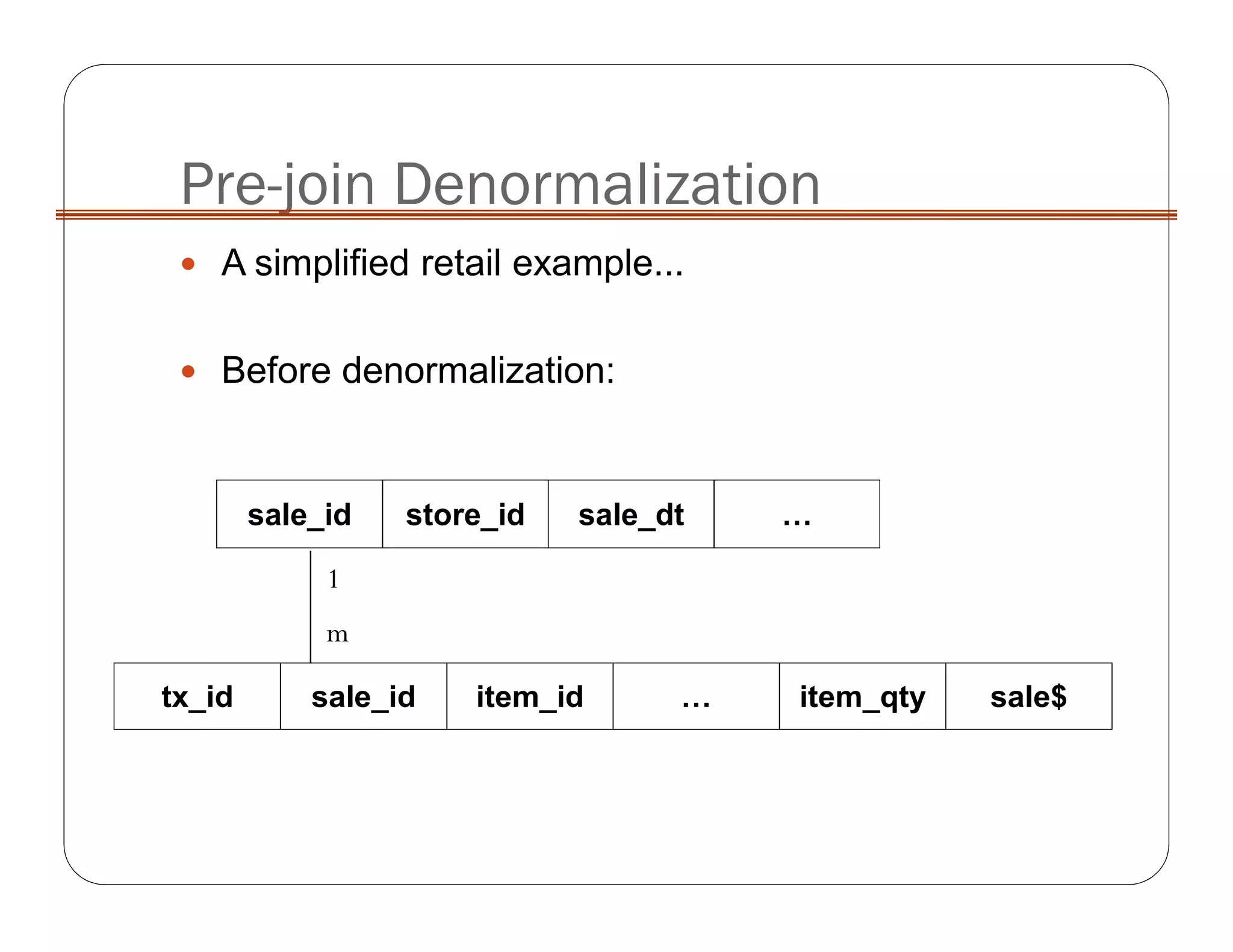

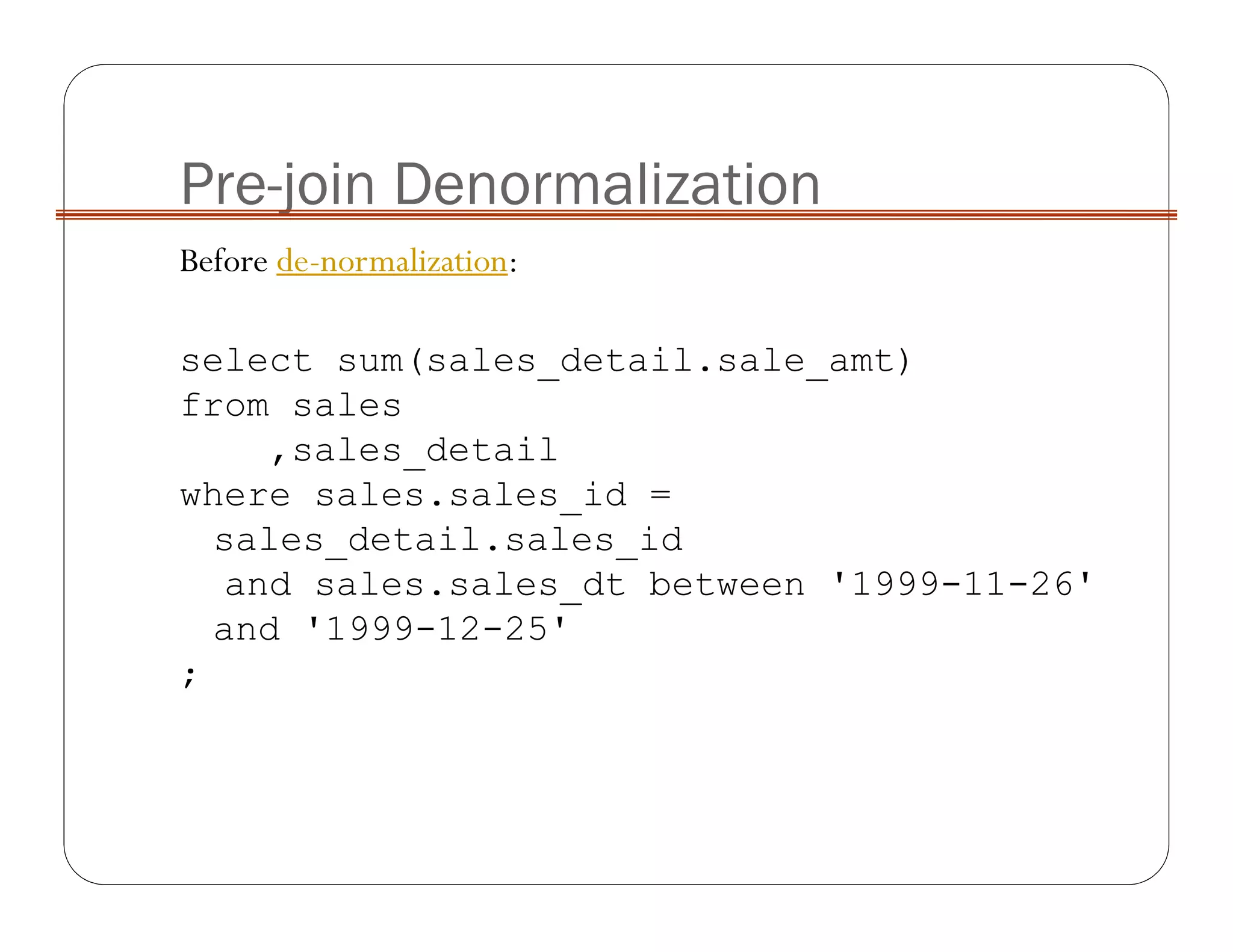

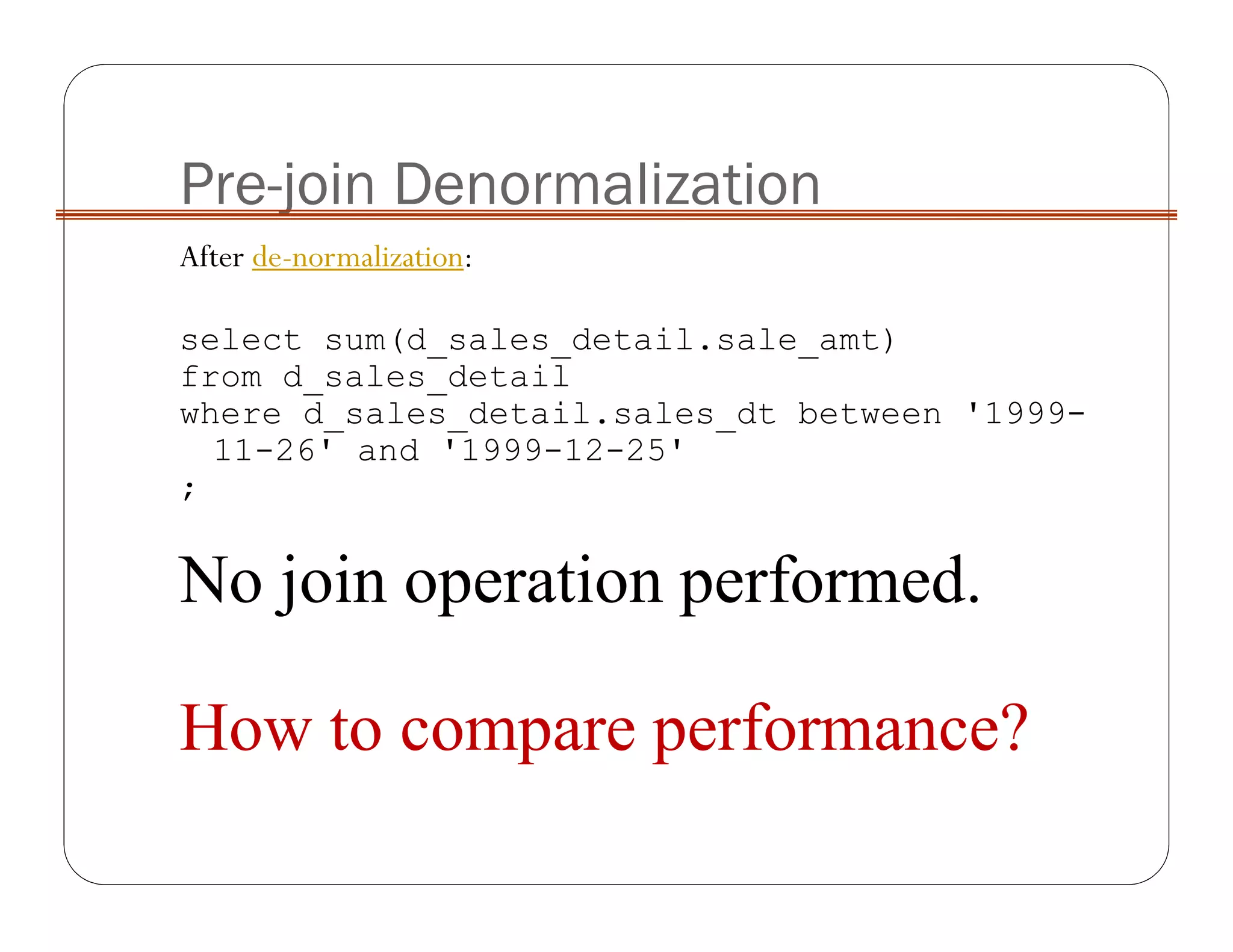

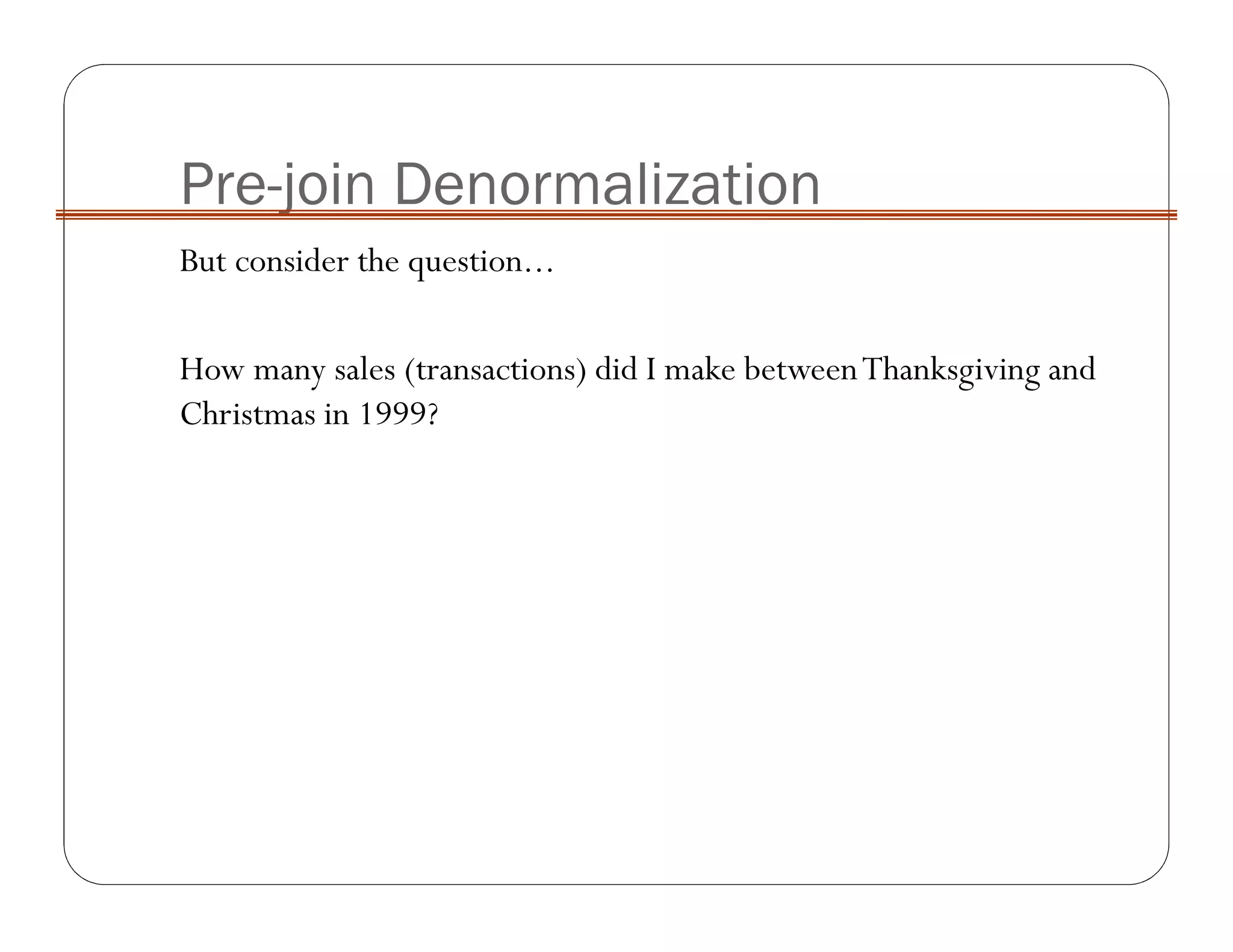

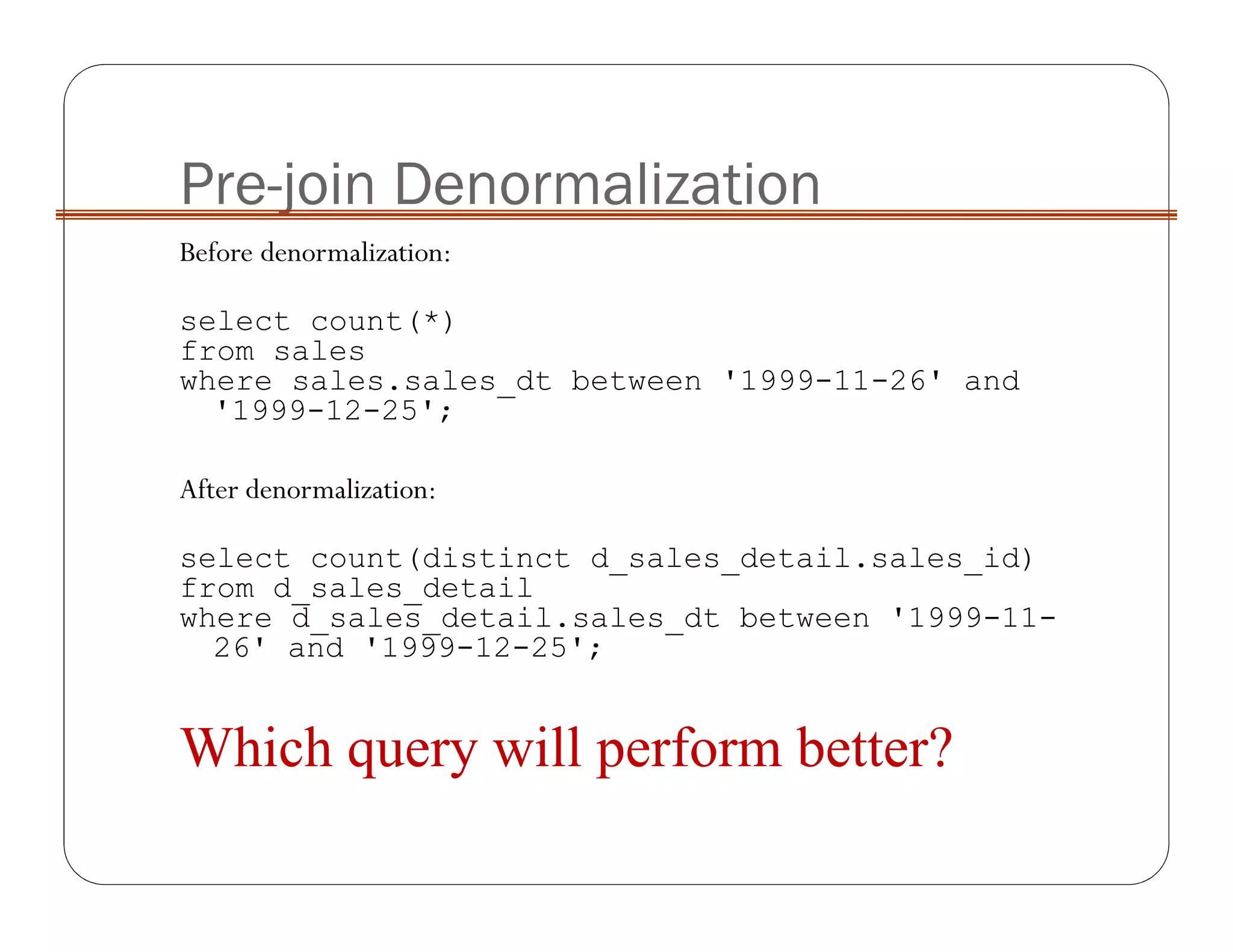

This document provides an overview and tentative schedule for a course on Data Warehousing and Mining. The course covers data warehousing, data cubes, OLAP, data normalization and de-normalization, and various data mining techniques. The tentative schedule includes lectures on the introduction, the motivation for data warehousing, indexing, building warehouses, and mining techniques such as regression, clustering, and decision trees. Textbook references and a grading plan are also outlined.