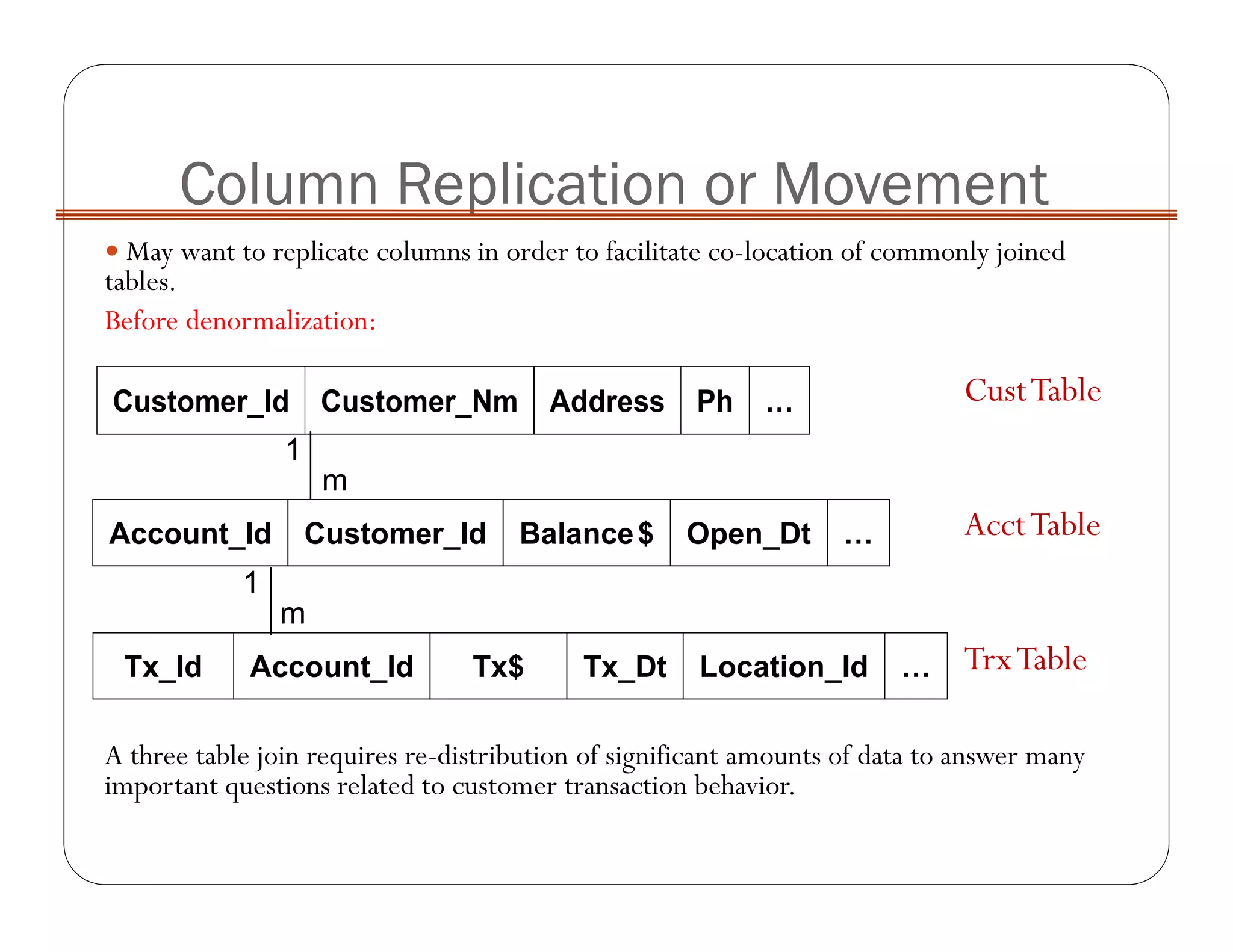

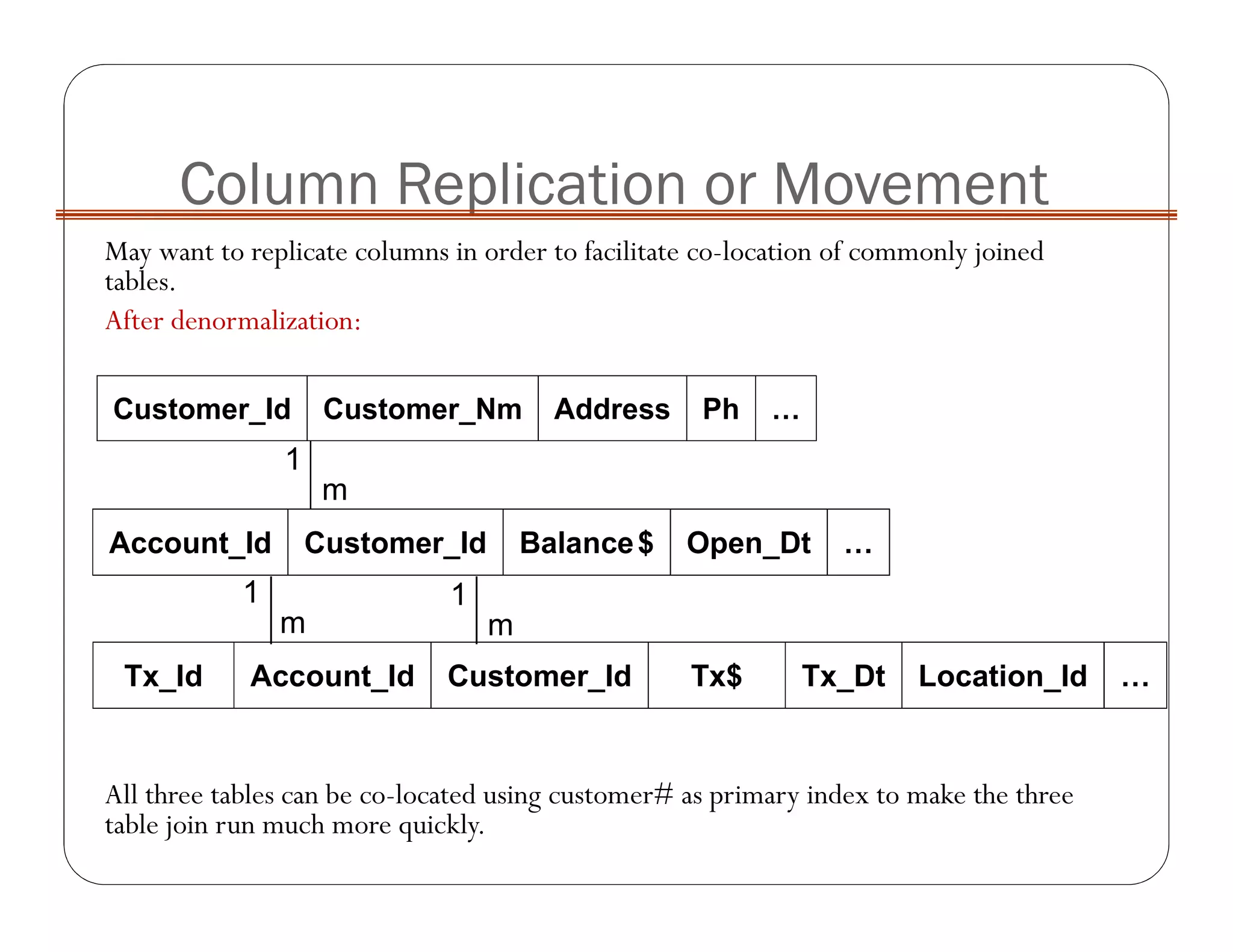

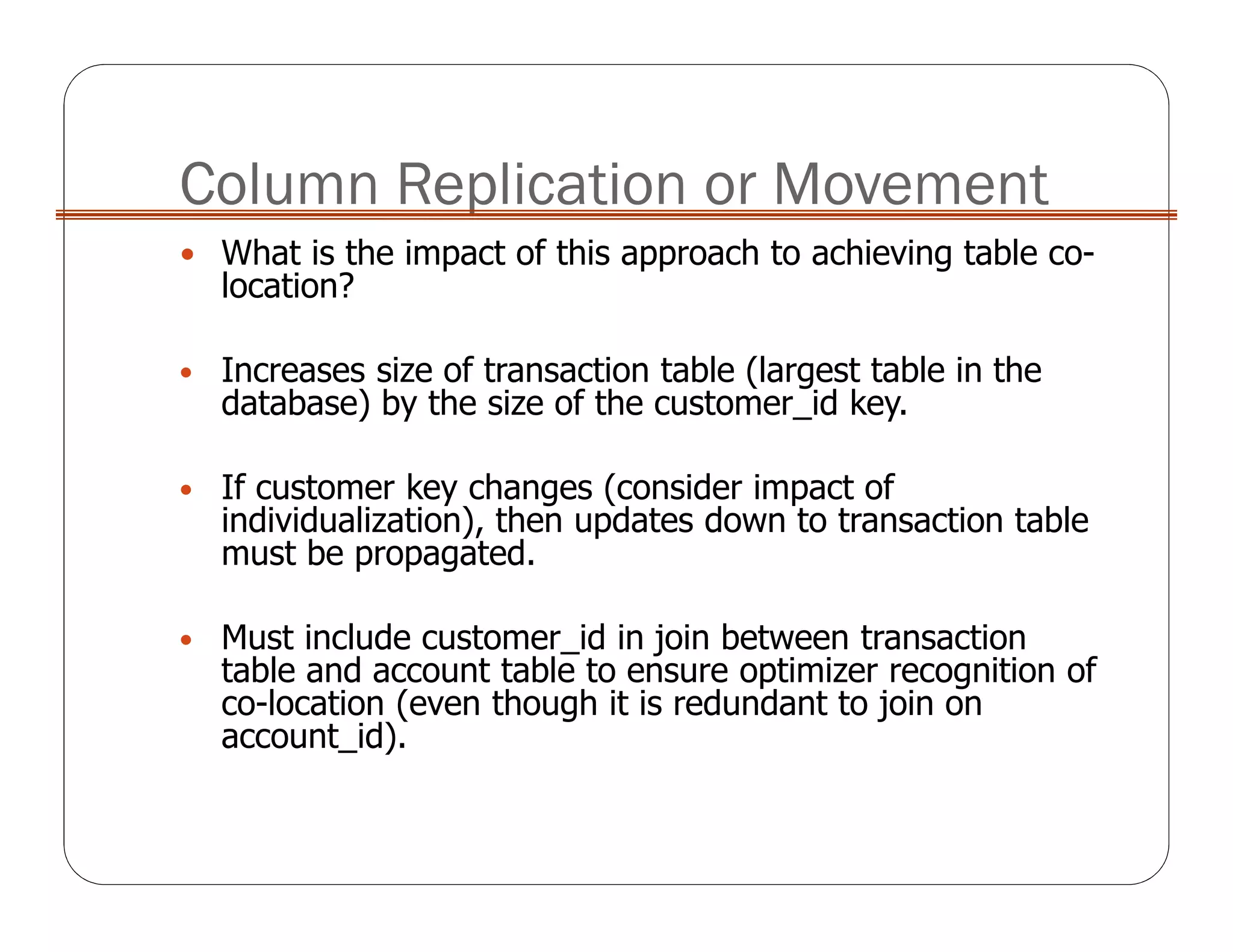

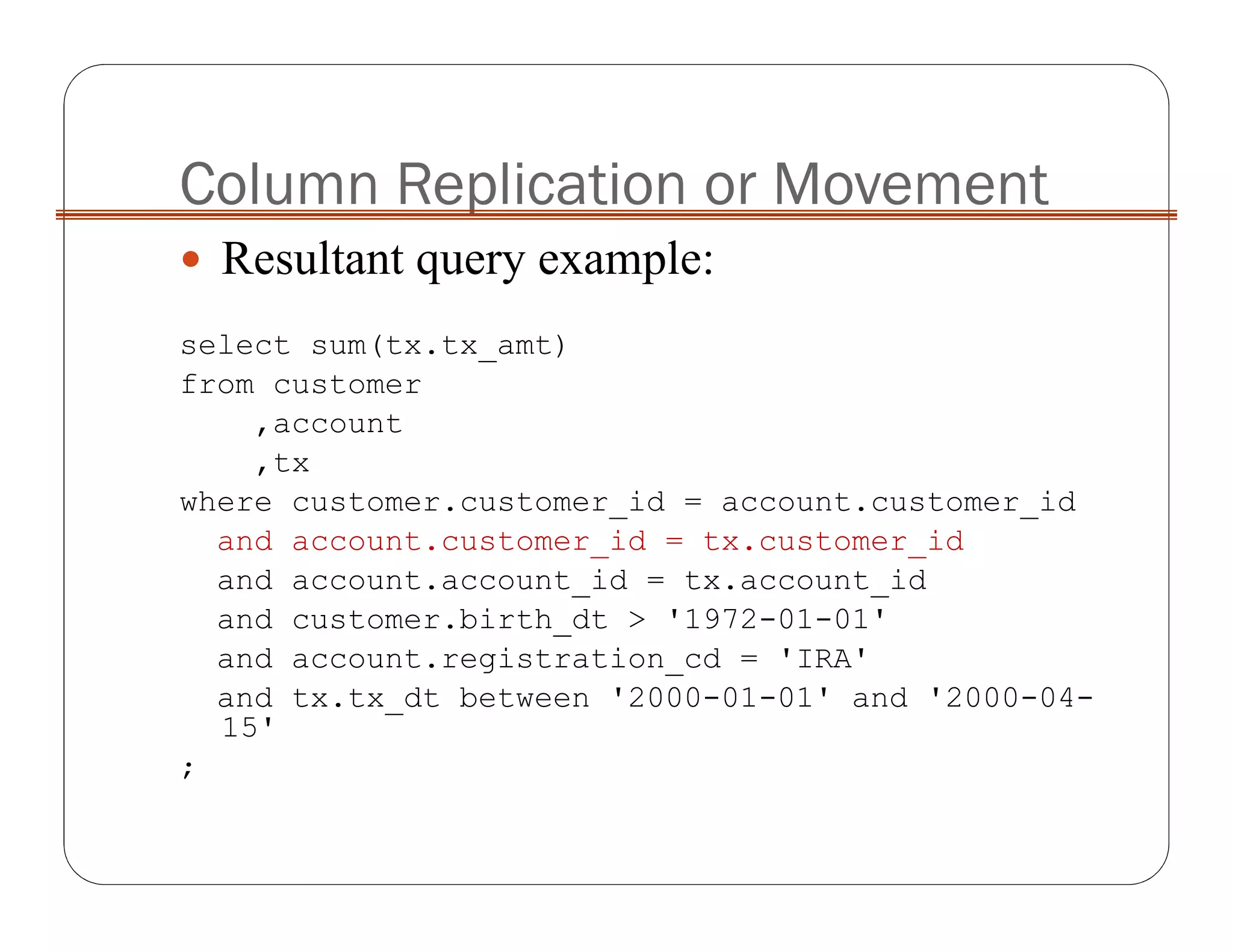

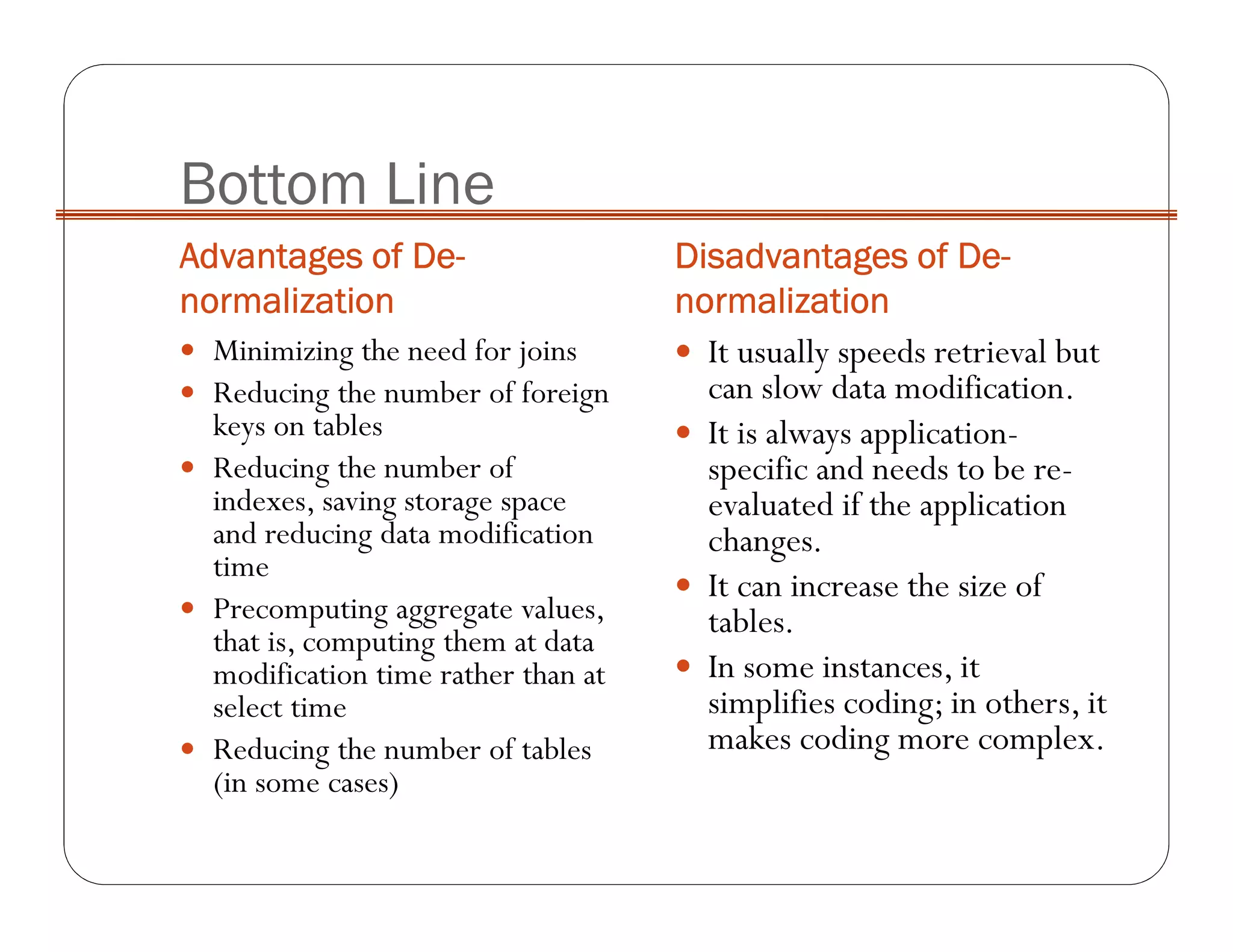

The document discusses denormalizing database tables to improve query performance. It describes replicating columns across tables so that commonly joined data is co-located, which lets three-table joins run without redistributing large amounts of data. In practice this means adding a column such as customer ID to tables that would not otherwise carry it, so that those tables can be co-located with the others at query time. The approach increases storage usage but can significantly speed up queries.
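The effect of replicating a customer ID column can be illustrated with a small simulation. The sketch below is not taken from the document: the table names (`orders`, `order_items`), the four-node cluster, and the modulo-based `node_for` placement function are all assumptions chosen for illustration. It counts how many `order_items` rows sit on a different node than the customer they ultimately join to, first when the table is distributed on `order_id` (normalized) and then when it carries its own copy of `customer_id` and is distributed on that instead (denormalized).

```python
# Hypothetical 4-node cluster; rows are placed by a simple modulo "hash".
NUM_NODES = 4


def node_for(key: int) -> int:
    """Return the node that stores a row with this distribution key (assumed scheme)."""
    return key % NUM_NODES


# orders already carries customer_id, so it can be distributed on it.
orders = [{"order_id": o, "customer_id": o % 7} for o in range(1, 21)]
order_to_customer = {o["order_id"]: o["customer_id"] for o in orders}

# Normalized order_items: only knows its order_id.
items_normalized = [
    {"item_id": i, "order_id": (i % 20) + 1} for i in range(1, 61)
]

# Denormalized order_items: customer_id is replicated from orders.
# This costs one extra column of storage per row.
items_denormalized = [
    dict(item, customer_id=order_to_customer[item["order_id"]])
    for item in items_normalized
]


def rows_to_move(items, distribution_column: str) -> int:
    """Count rows not stored on the node that holds their customer."""
    moved = 0
    for item in items:
        current_node = node_for(item[distribution_column])
        target_node = node_for(order_to_customer[item["order_id"]])
        if current_node != target_node:
            moved += 1
    return moved


normalized_moves = rows_to_move(items_normalized, "order_id")
denormalized_moves = rows_to_move(items_denormalized, "customer_id")

print("rows to redistribute (normalized):  ", normalized_moves)
print("rows to redistribute (denormalized):", denormalized_moves)
```

With the replicated column, every `order_items` row is already on the same node as its customer, so the three-table join needs no data movement in this toy setup. The cost is the extra `customer_id` value stored on every row. A real system would use its own hash function and distribution syntax, which this sketch does not attempt to reproduce.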