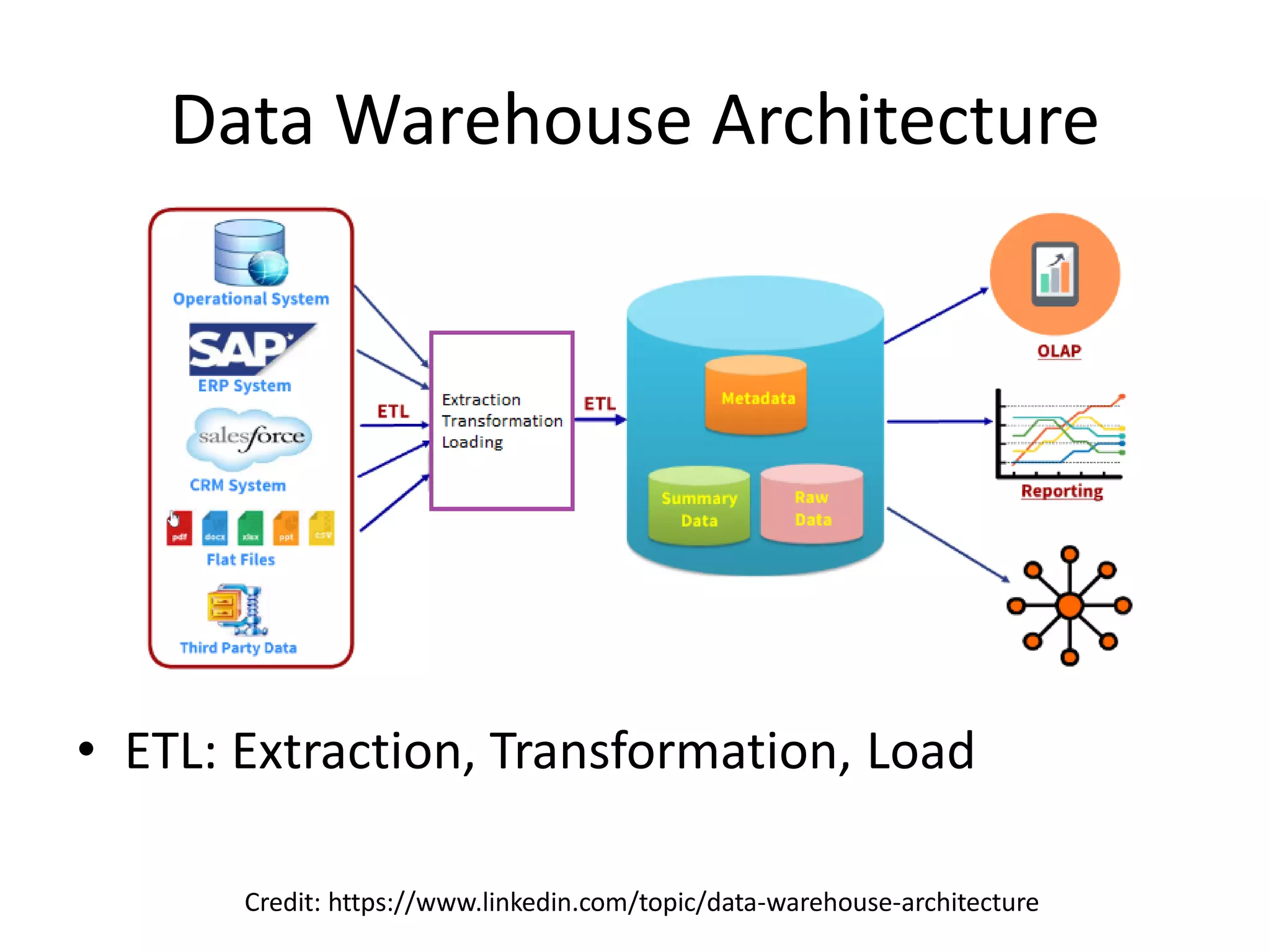

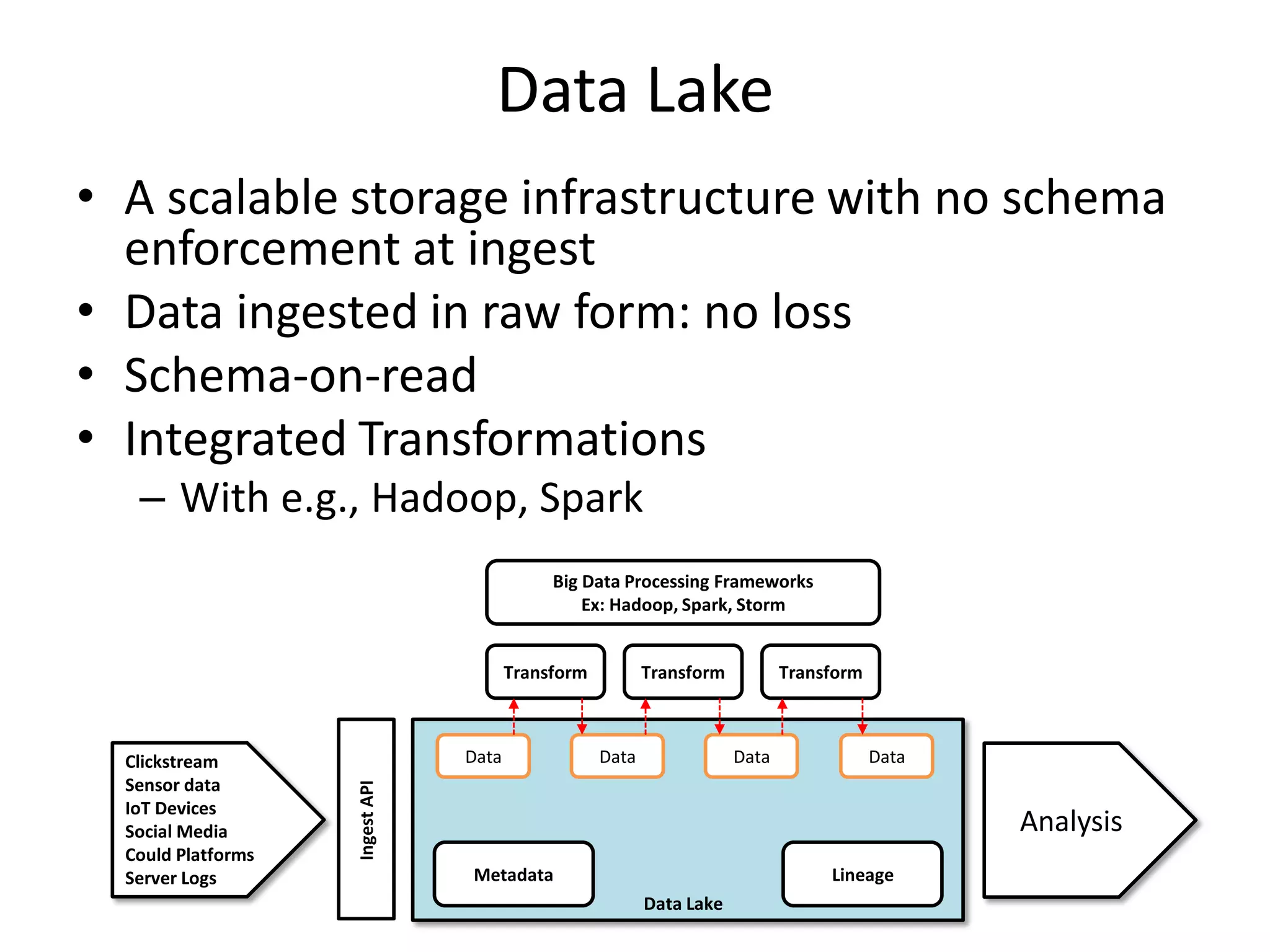

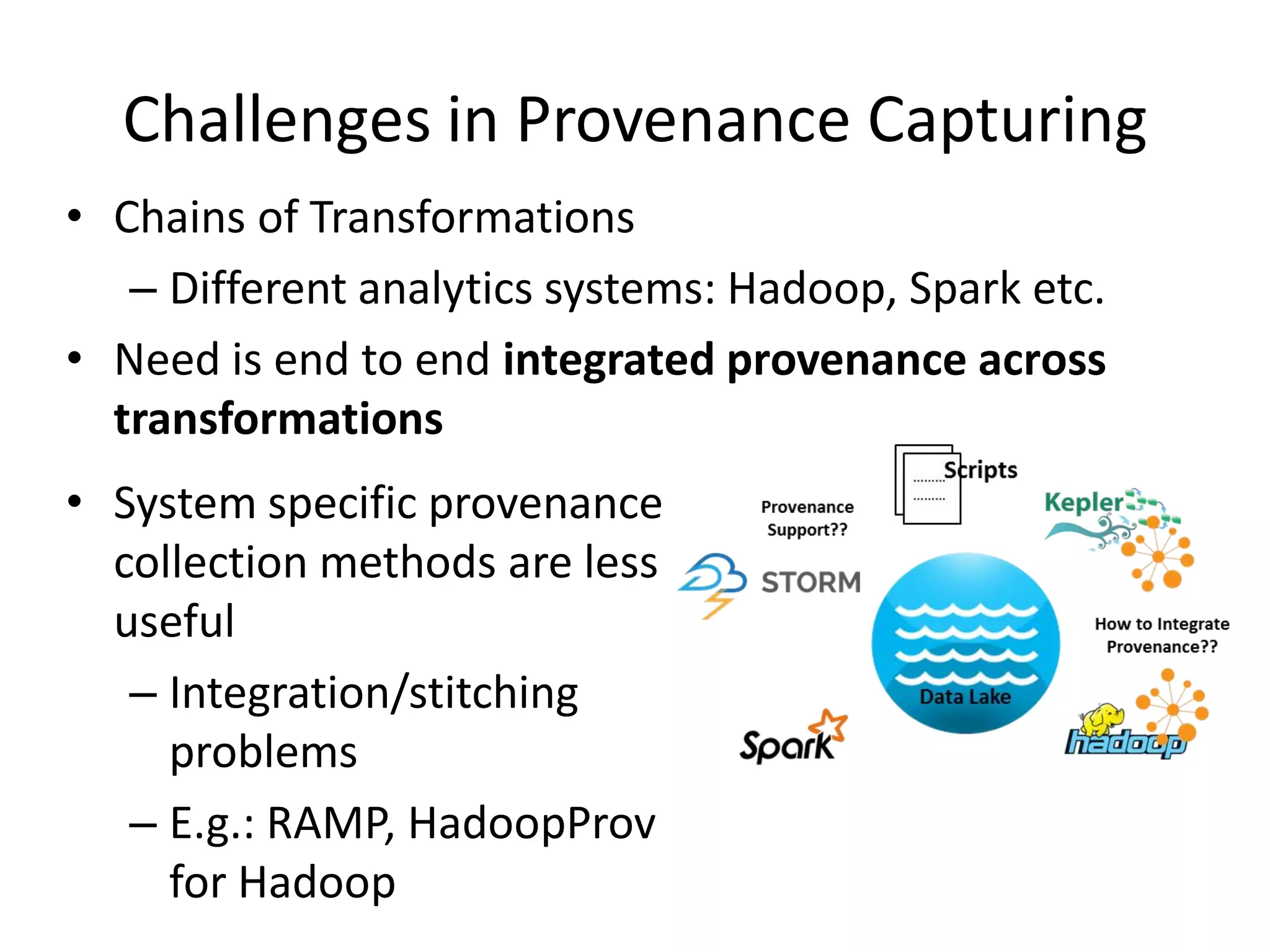

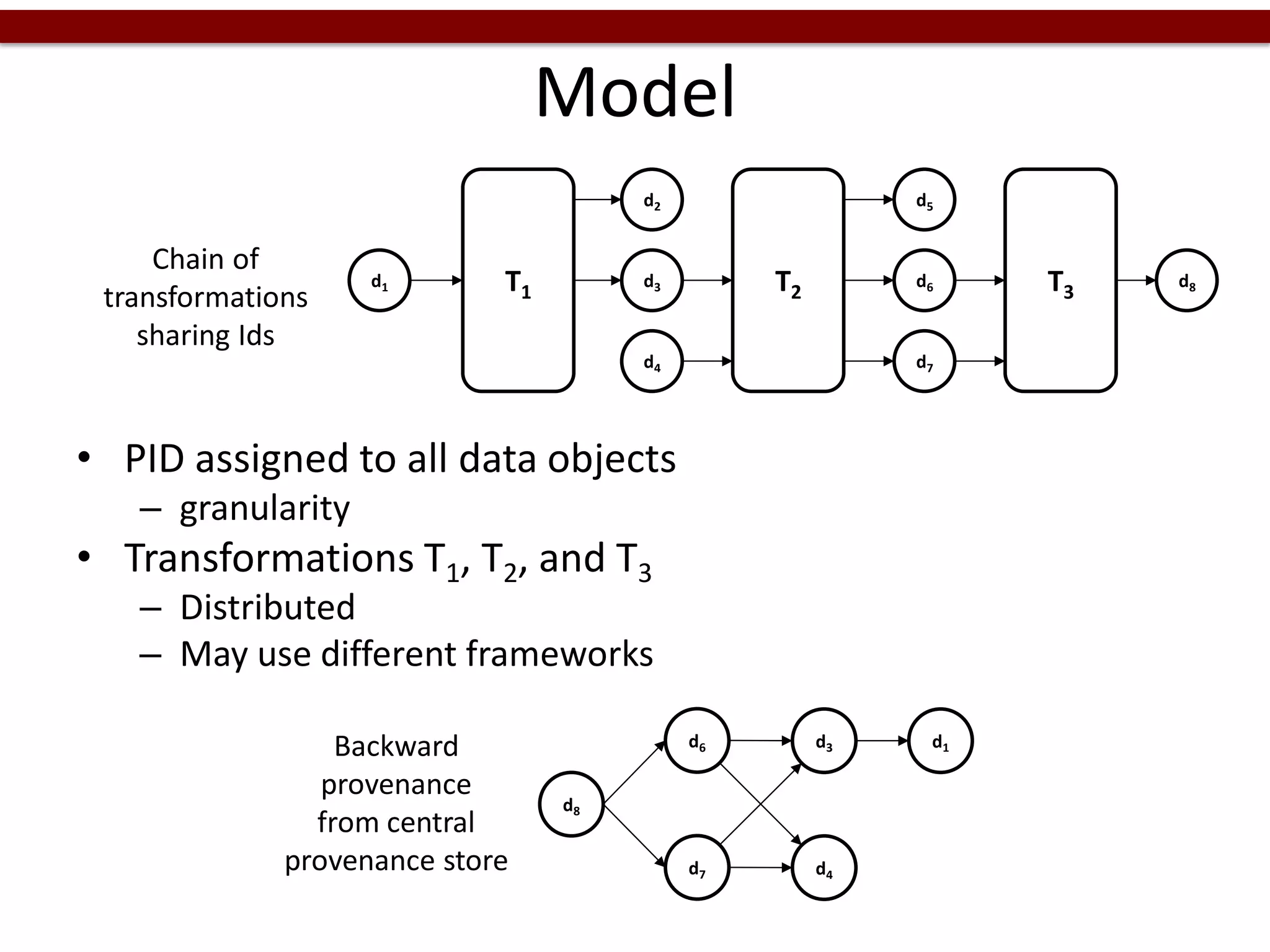

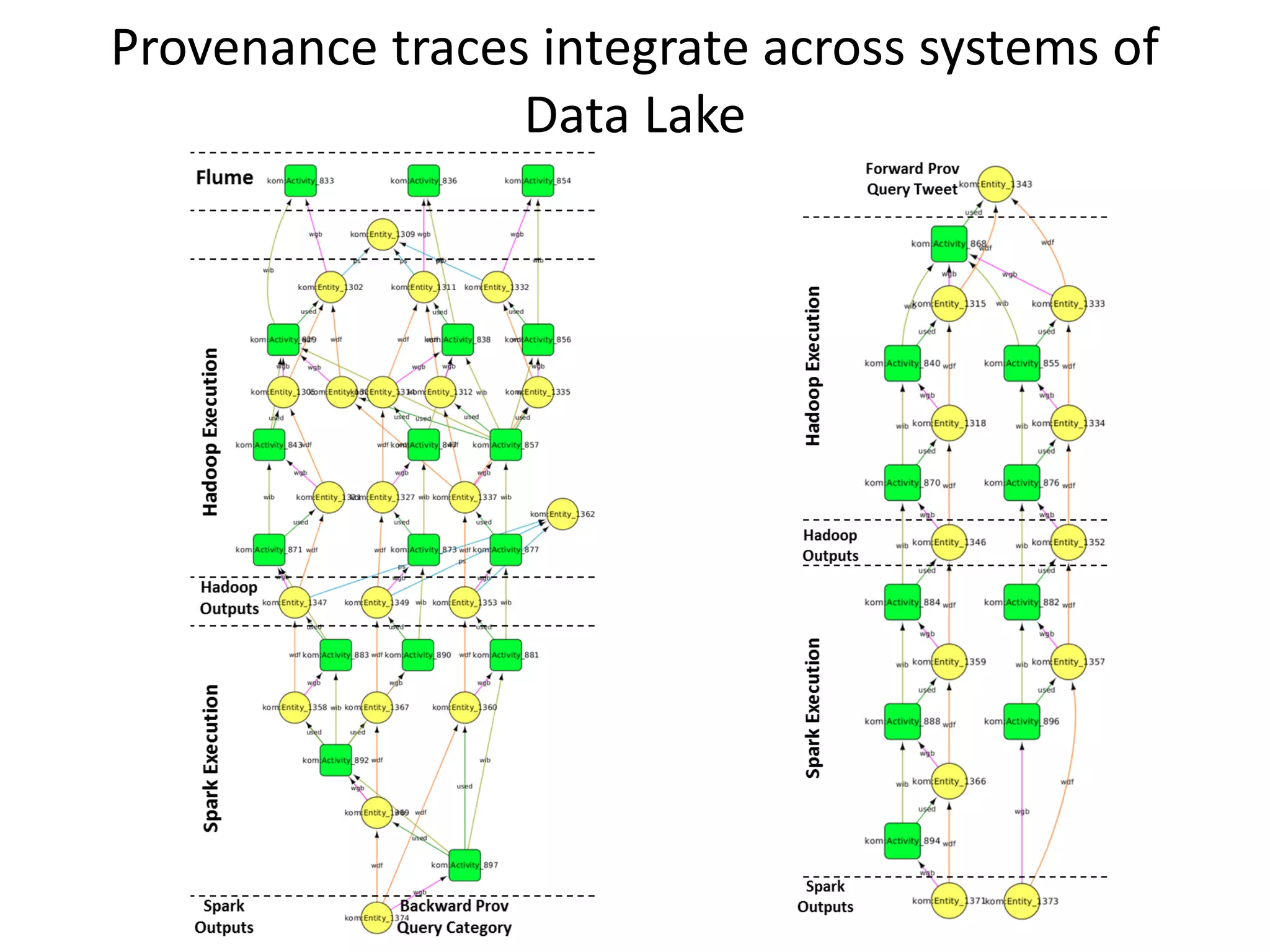

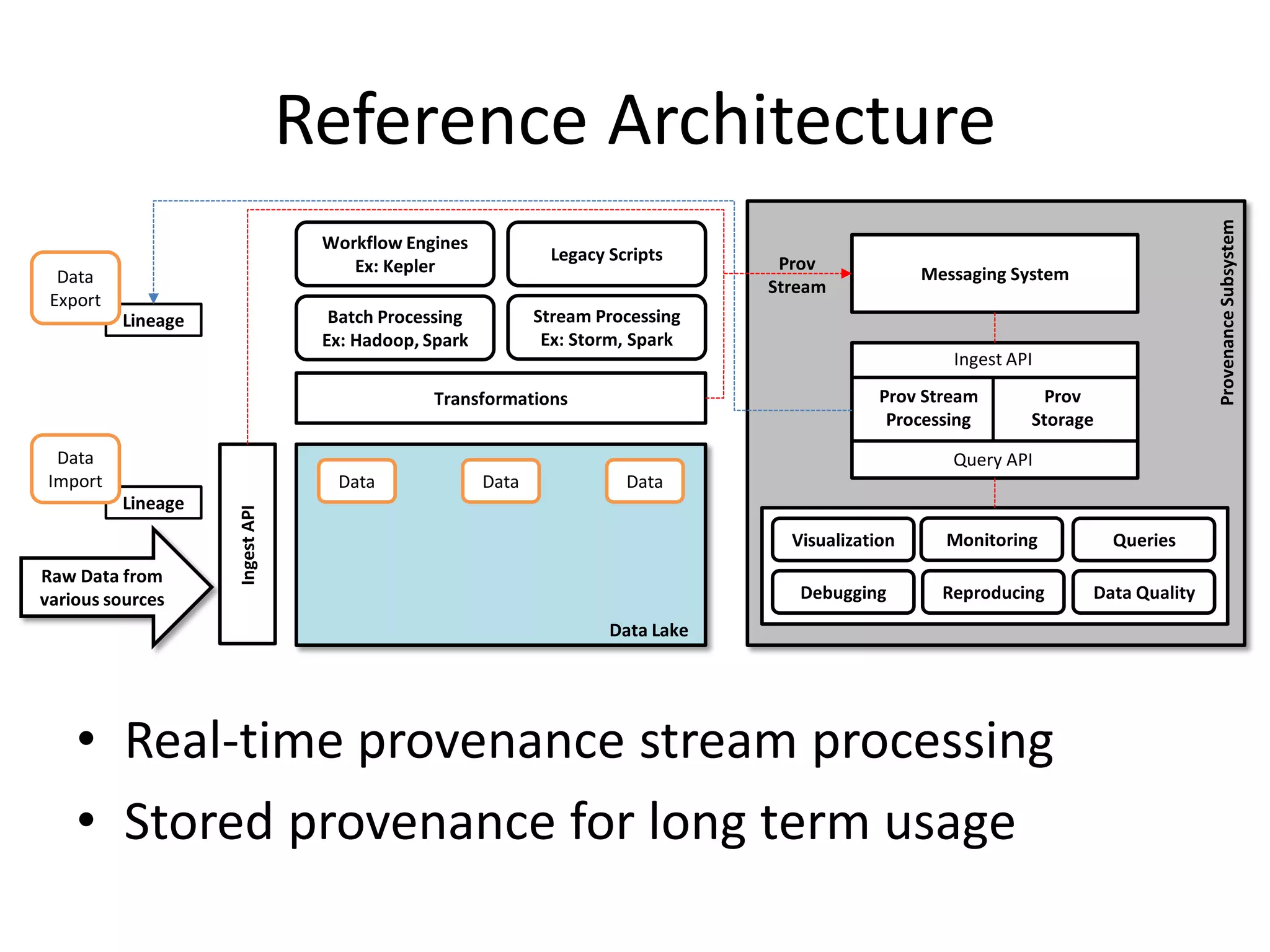

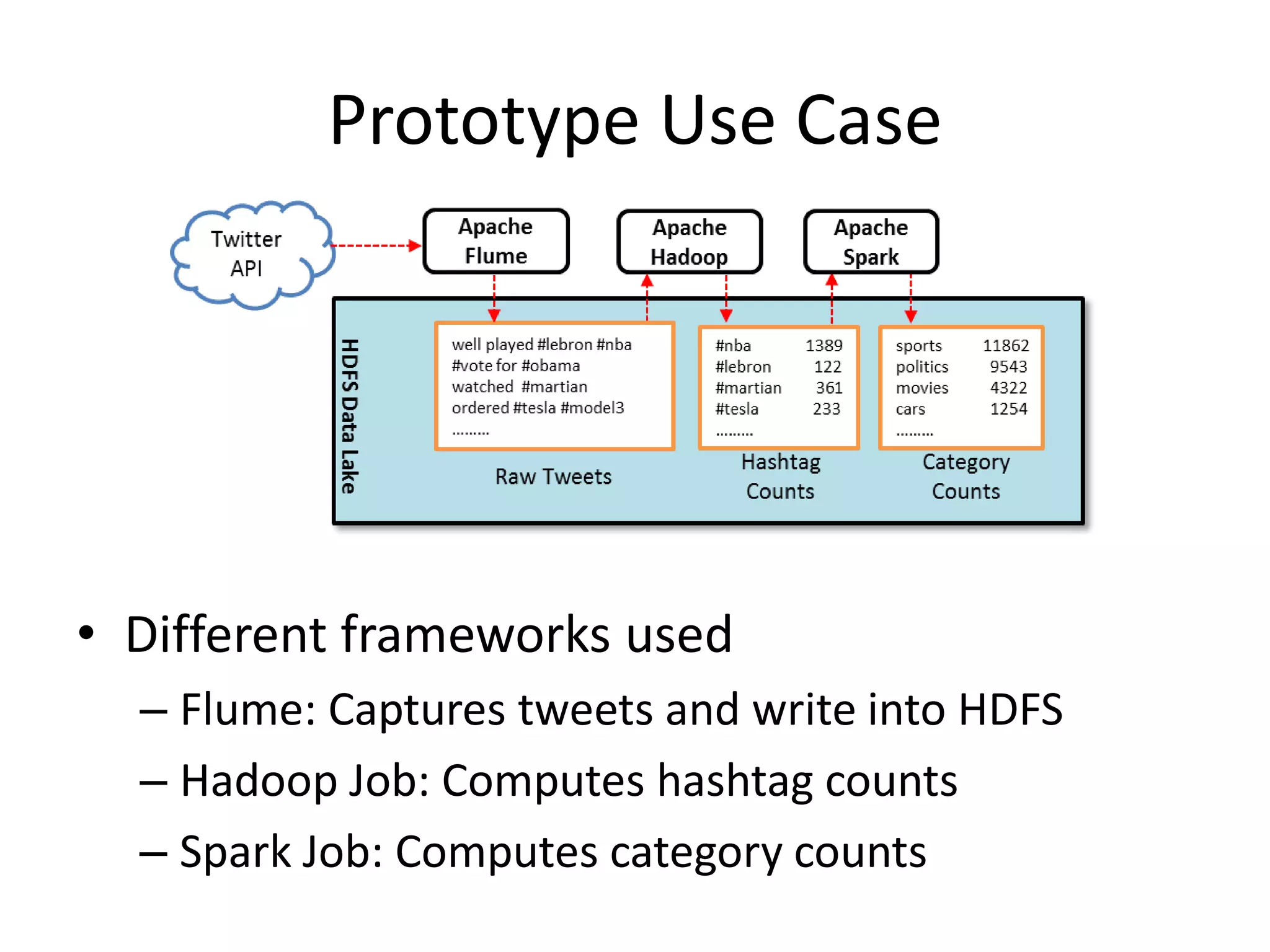

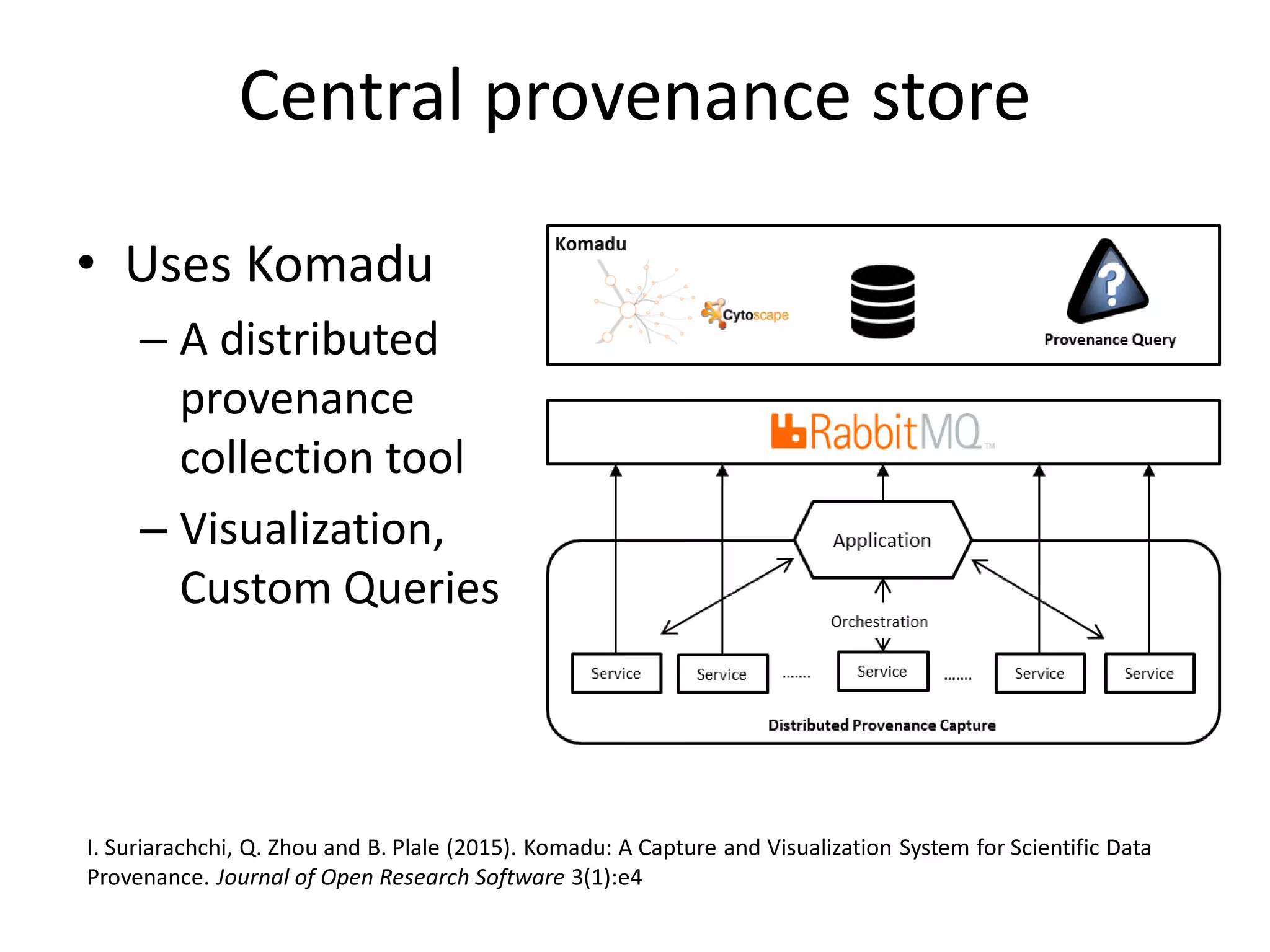

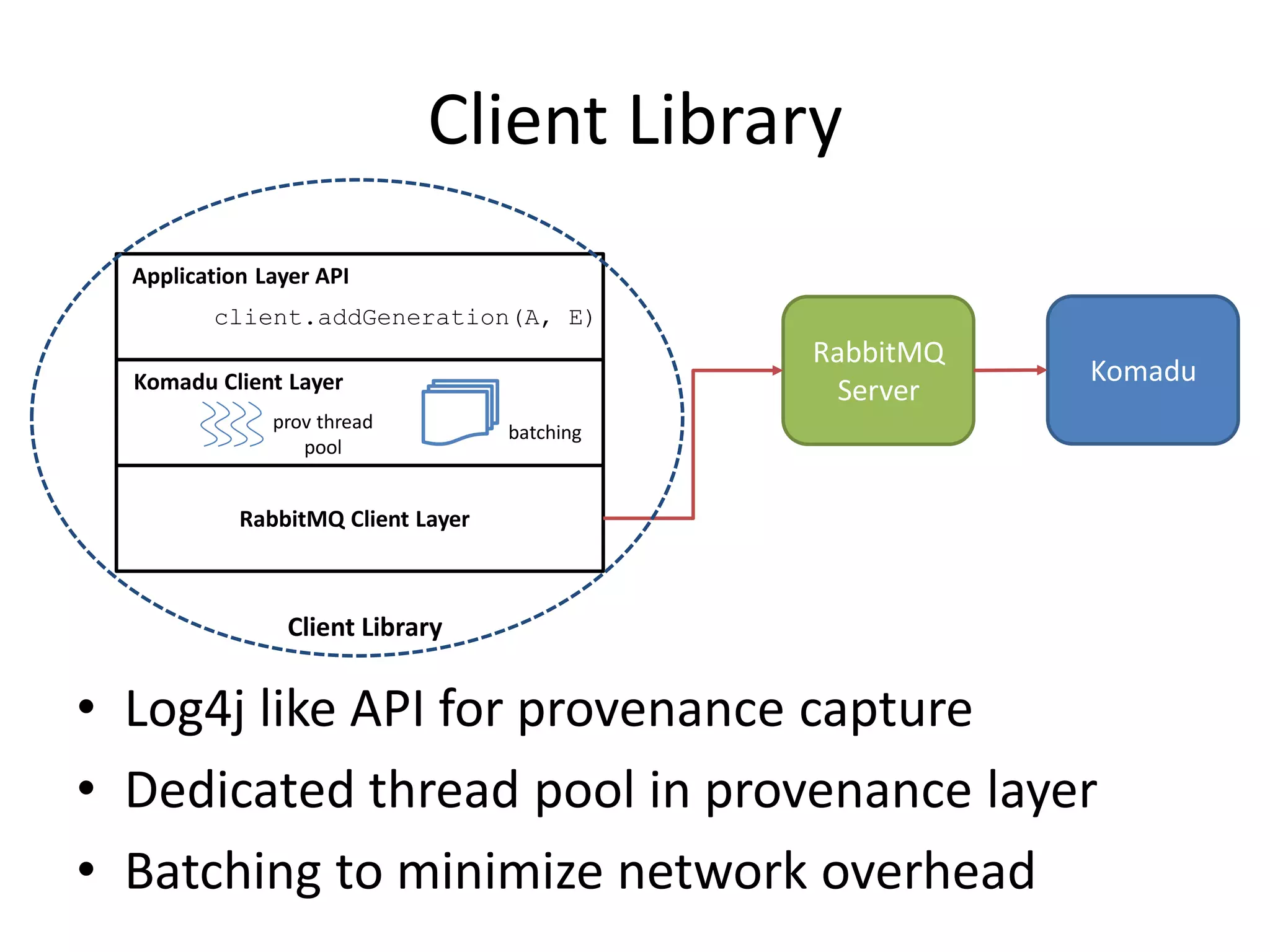

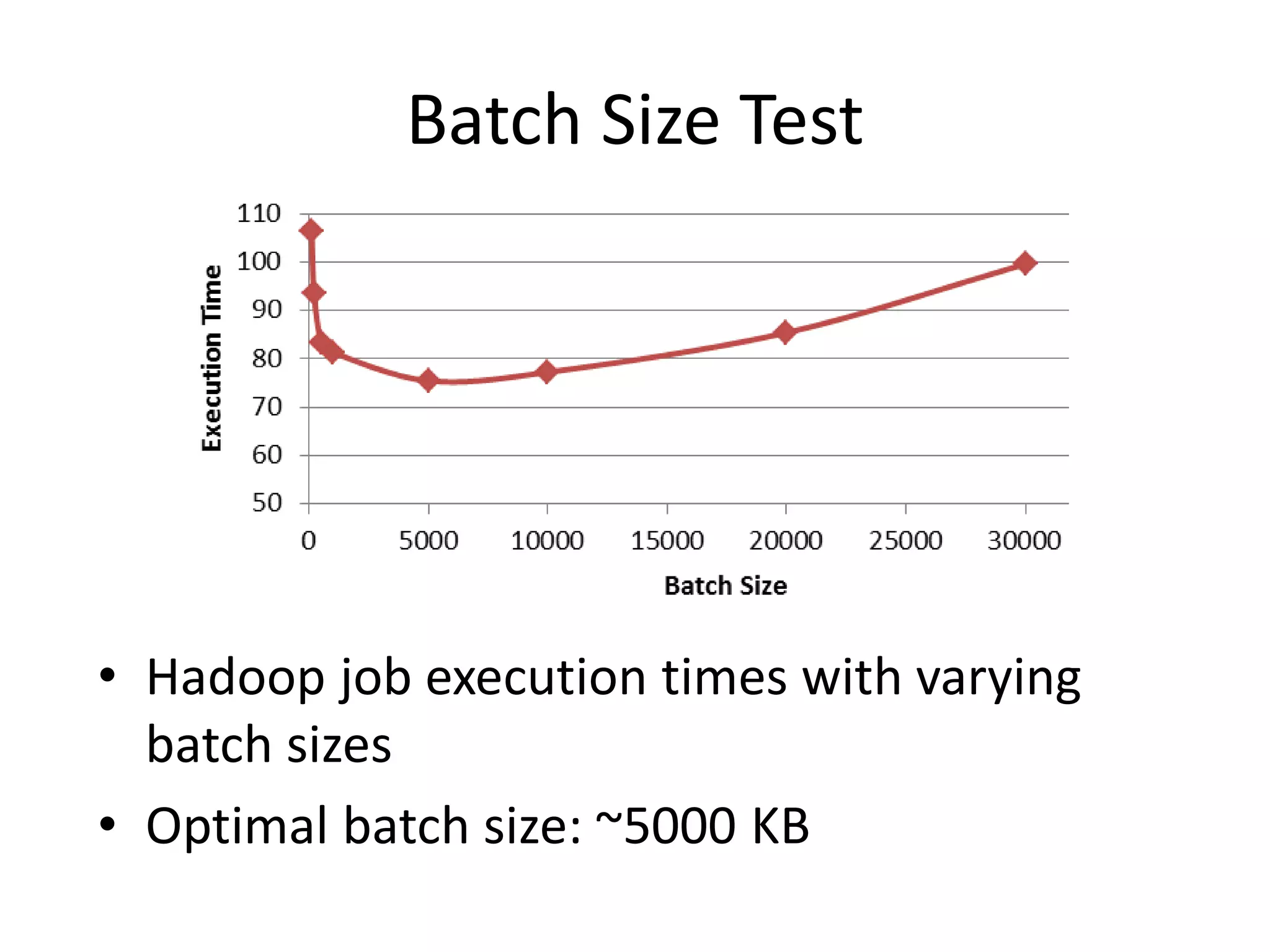

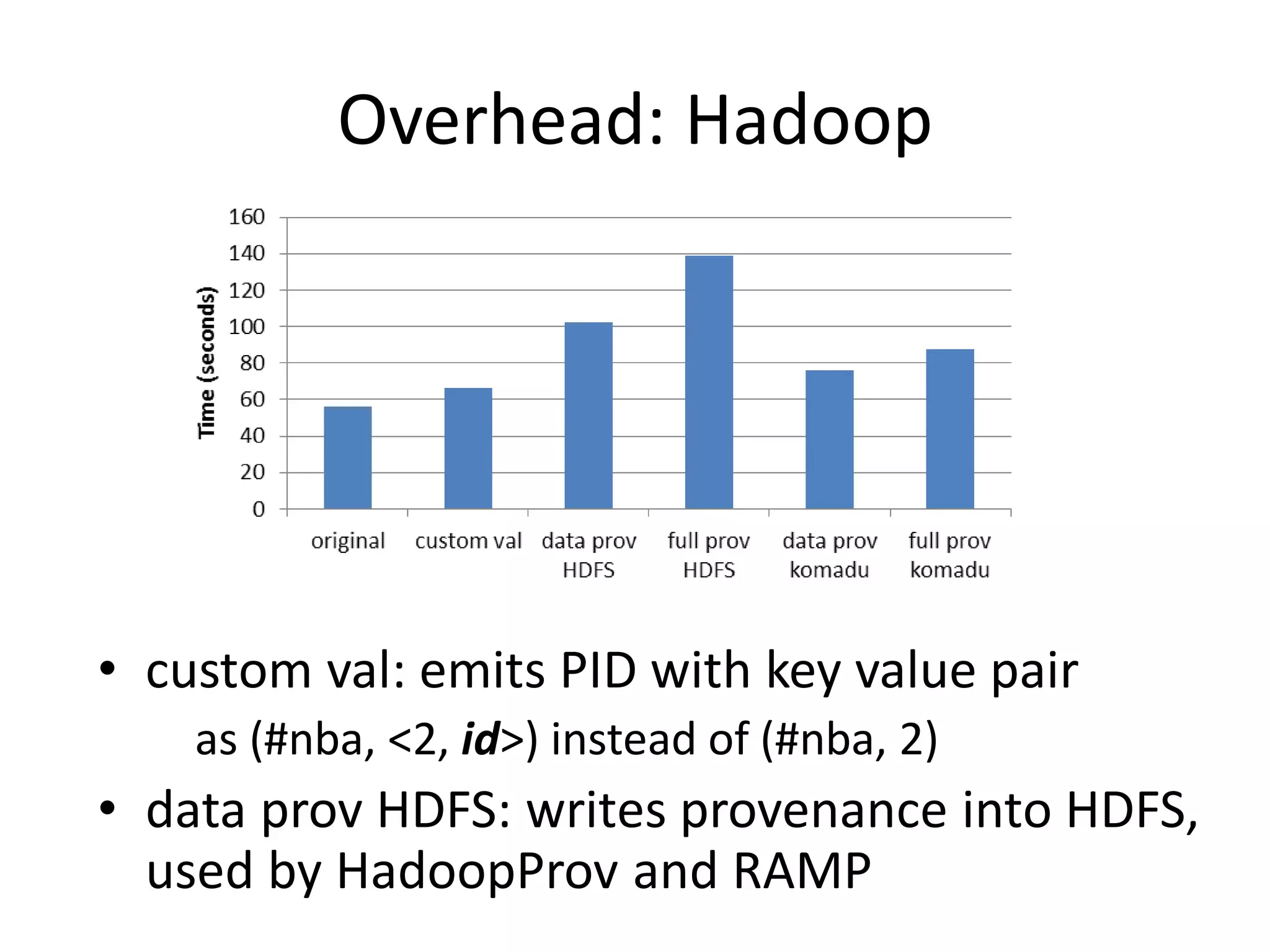

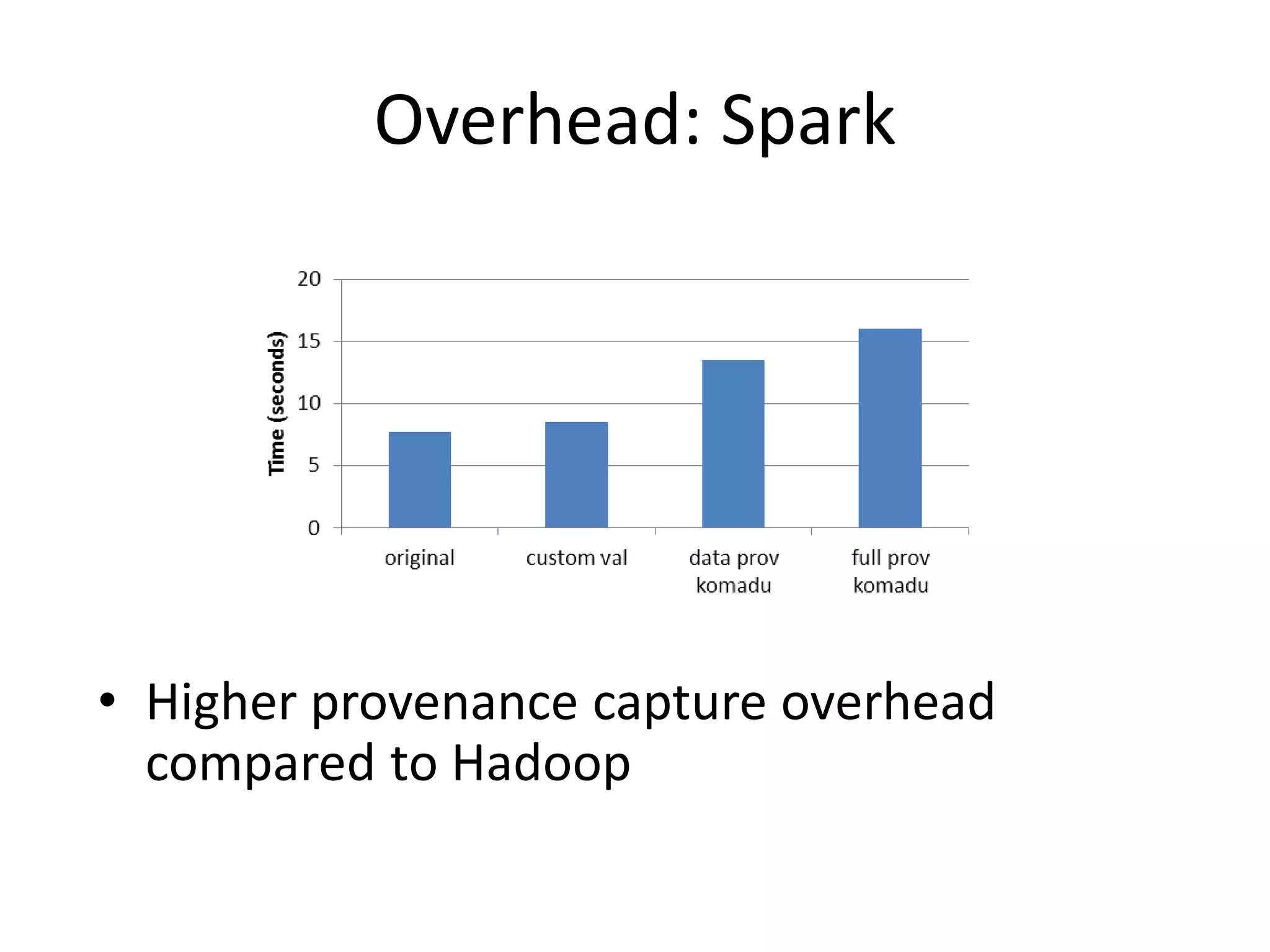

The document argues that data lakes need integrated provenance so that their varied data types can be managed and traced effectively. It highlights challenges that stem from the flexibility of data lakes: they become harder to manage and can degrade into "data swamps." The authors propose a system that captures provenance across different analytics frameworks to improve data traceability and support reliable data processing within the data lake.
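To make the idea of cross-framework provenance capture more concrete, the following is a minimal sketch and not the authors' system. It assumes each analytics job, whatever framework runs it, can report which datasets it read and wrote. The names `ProvenanceEvent`, `ProvenanceStore`, `record`, and `upstream`, as well as the framework labels and dataset paths, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass(frozen=True)
class ProvenanceEvent:
    """One job run: which framework ran it, what it read, what it wrote."""
    framework: str            # hypothetical label, e.g. "spark" or "hive"
    job_id: str
    inputs: Tuple[str, ...]   # dataset identifiers read by the job
    outputs: Tuple[str, ...]  # dataset identifiers written by the job


class ProvenanceStore:
    """Hypothetical in-memory store shared by all frameworks."""

    def __init__(self) -> None:
        # Maps each dataset to the events that produced it.
        self._producers: Dict[str, List[ProvenanceEvent]] = {}

    def record(self, event: ProvenanceEvent) -> None:
        for out in event.outputs:
            self._producers.setdefault(out, []).append(event)

    def upstream(self, dataset: str) -> Set[str]:
        """Return every dataset that the given dataset was derived from."""
        seen: Set[str] = set()
        stack = [dataset]
        while stack:
            current = stack.pop()
            for event in self._producers.get(current, []):
                for src in event.inputs:
                    if src not in seen:  # also guards against cycles
                        seen.add(src)
                        stack.append(src)
        return seen


# Illustrative usage: two jobs in different frameworks form one lineage chain.
store = ProvenanceStore()
store.record(ProvenanceEvent("spark", "job-1", ("raw/clicks",), ("clean/clicks",)))
store.record(ProvenanceEvent("hive", "job-2", ("clean/clicks", "raw/users"), ("reports/daily",)))
lineage = store.upstream("reports/daily")
```

Because both jobs write to the same store, the lineage of `reports/daily` can be traced back through `clean/clicks` to `raw/clicks` even though the two steps ran in different frameworks. A real deployment would need persistent storage and framework-specific hooks, which this sketch leaves out.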