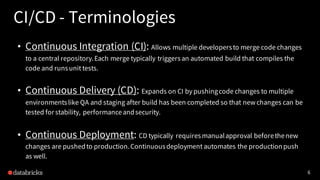

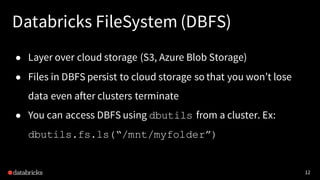

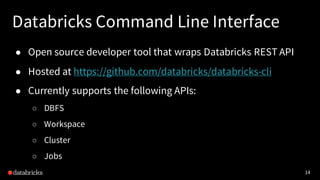

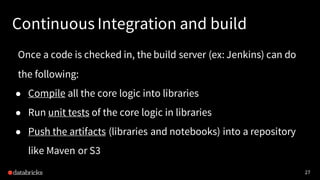

This document covers continuous integration (CI) and continuous delivery (CD) practices for building and deploying data pipelines on Databricks. It outlines the stages of development, best practices for notebook development, and the Databricks tools and features that support CI/CD processes. It also highlights the role of collaboration and optimization in data operations on the Databricks platform.