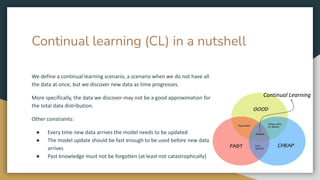

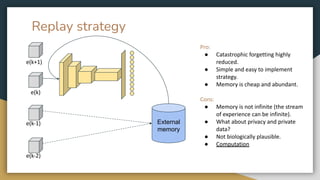

The document discusses continual learning in deep neural networks. It emphasizes the challenge of catastrophic forgetting, in which training a network on new data overwrites what it learned earlier, and the resulting need for models that can learn from new data while retaining previous knowledge. It outlines several strategies for implementing continual learning, including architectural adjustments, regularization, and memory-based approaches such as rehearsal and latent replay. The author also highlights ongoing research directions, stressing the importance of developing more biologically plausible and more efficient models to address these challenges.
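To make the memory-based idea concrete, here is a minimal sketch of rehearsal. It assumes a fixed-size buffer filled by reservoir sampling, which is one common choice and not necessarily the scheme the author describes. `ReservoirReplayBuffer` and `rehearsal_batch` are hypothetical names introduced for illustration. Each training batch for the current task is mixed with examples replayed from earlier tasks. For latent replay, the stored `x` would be activations taken from an intermediate layer instead of raw inputs.

```python
import random
from typing import Any, List, Tuple


class ReservoirReplayBuffer:
    """Hypothetical fixed-size memory of past (input, label) pairs for rehearsal.

    Reservoir sampling gives every example seen so far the same probability
    (capacity / n_seen) of being in memory, without knowing the stream length.
    For latent replay, store intermediate-layer activations as x instead of raw inputs.
    """

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.capacity = capacity
        self.memory: List[Tuple[Any, Any]] = []
        self.n_seen = 0
        self.rng = random.Random(seed)

    def add(self, x: Any, y: Any) -> None:
        self.n_seen += 1
        if len(self.memory) < self.capacity:
            self.memory.append((x, y))
        else:
            # Keep the new example with probability capacity / n_seen,
            # overwriting a uniformly chosen slot.
            j = self.rng.randrange(self.n_seen)
            if j < self.capacity:
                self.memory[j] = (x, y)

    def sample(self, k: int) -> List[Tuple[Any, Any]]:
        # Draw up to k stored examples without replacement.
        k = min(k, len(self.memory))
        return self.rng.sample(self.memory, k)


def rehearsal_batch(new_batch, buffer: ReservoirReplayBuffer, replay_size: int):
    """Mix the current batch with replayed examples from earlier data."""
    # Sample before inserting, so the replayed part comes from older data.
    replayed = buffer.sample(replay_size)
    mixed = list(new_batch) + replayed
    for x, y in new_batch:
        buffer.add(x, y)
    return mixed


if __name__ == "__main__":
    buf = ReservoirReplayBuffer(capacity=100)
    for task_id in range(3):  # three sequential tasks
        for step in range(50):
            batch = [((task_id, step, i), task_id) for i in range(4)]
            train_batch = rehearsal_batch(batch, buf, replay_size=4)
            # train_step(model, train_batch)  # hypothetical training call
    print(len(buf.memory), sorted({y for _, y in buf.memory}))
```

Reservoir sampling is used here only because it keeps the buffer balanced across all data seen so far with O(1) work per example. Class-balanced or herding-based selection are common alternatives, and a real setup would pass `train_batch` to an actual optimizer step.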