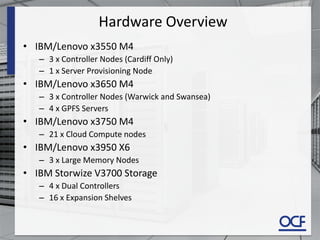

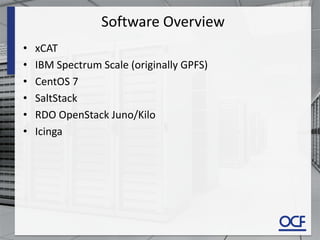

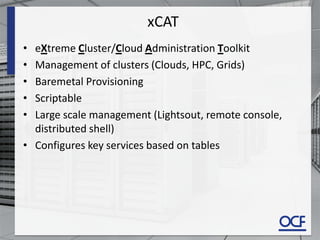

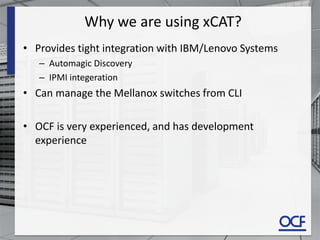

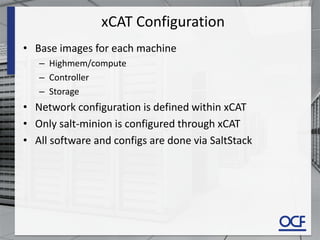

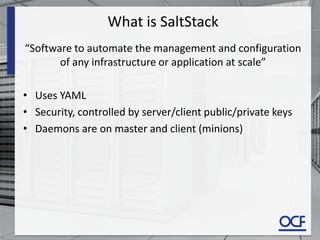

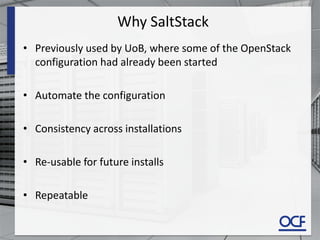

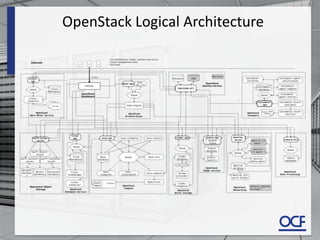

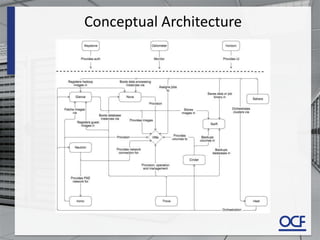

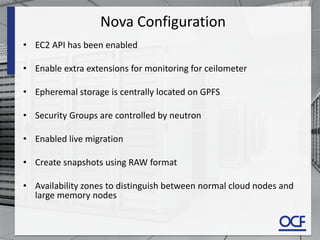

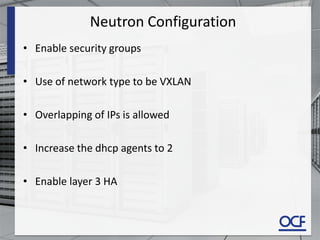

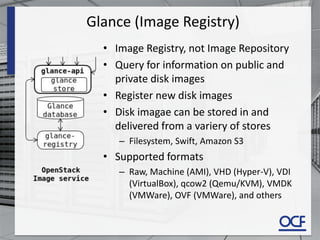

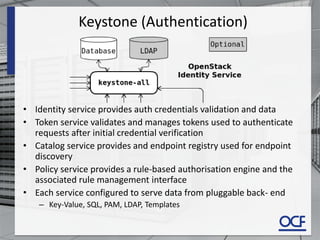

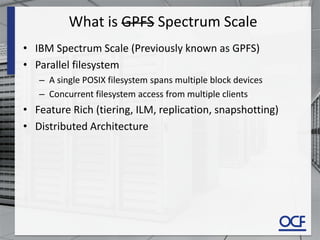

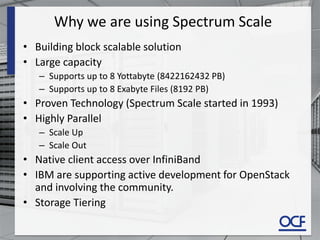

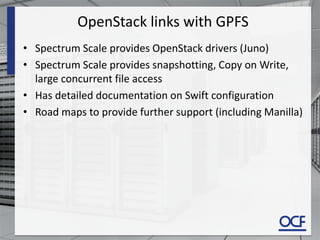

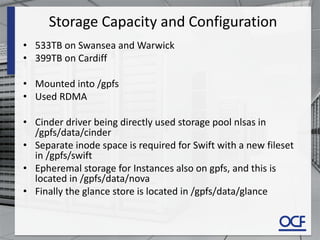

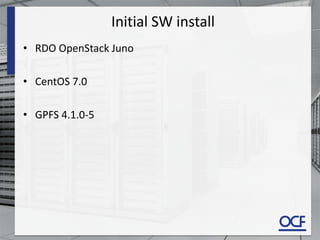

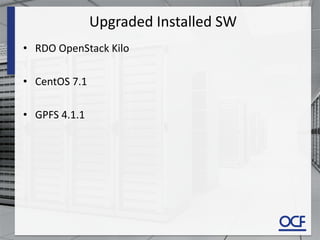

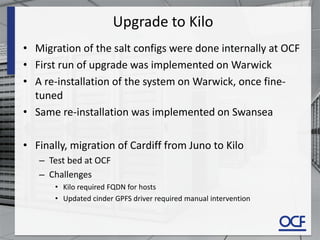

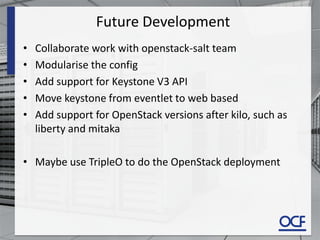

This document gives a technical overview of the CLIMB OpenStack cloud, covering its hardware, software, and configuration. The key components are IBM servers and storage, xCAT for provisioning, SaltStack for configuration management, OpenStack for cloud services, and IBM Spectrum Scale (formerly GPFS) for parallel file storage. Spectrum Scale is integrated with the OpenStack services Cinder, Glance, and Swift to provide scalable block, image, and object storage.
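
As a rough illustration of what the Cinder and Glance side of such an integration can look like, the fragment below is a minimal sketch rather than CLIMB's actual configuration. The option names come from the upstream Cinder GPFS volume driver and the Glance filesystem store; the mount point `/gpfs/openstack` and its subdirectories are hypothetical paths chosen only for this example.

```ini
# cinder.conf (sketch; all paths are assumed, not taken from the CLIMB deployment)
[DEFAULT]
# Upstream Cinder driver for Spectrum Scale / GPFS
volume_driver = cinder.volume.drivers.ibm.gpfs.GPFSDriver
# Directory on the GPFS file system where volume files are created
gpfs_mount_point_base = /gpfs/openstack/cinder/volumes
# Directory where Glance keeps its images, on the same file system
gpfs_images_dir = /gpfs/openstack/glance/images
# Create volumes from images as copy-on-write clones instead of full copies
gpfs_images_share_mode = copy_on_write
# Allocate volume files sparsely
gpfs_sparse_volumes = True

# glance-api.conf (sketch)
[glance_store]
stores = file
default_store = file
# Must match gpfs_images_dir above so that Cinder can clone images in place
filesystem_store_datadir = /gpfs/openstack/glance/images
```

Keeping Glance images and Cinder volumes on the same file system is what allows the copy-on-write mode: a new volume can start as a file clone of the image, so no data has to be copied when the volume is created.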