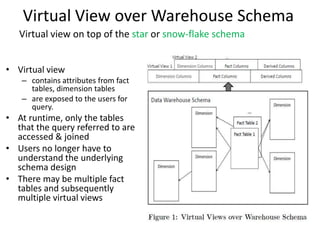

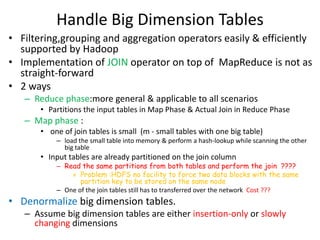

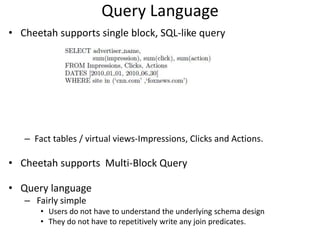

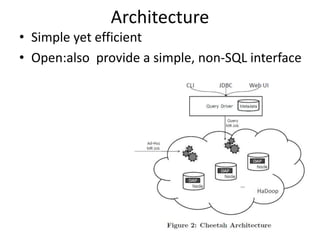

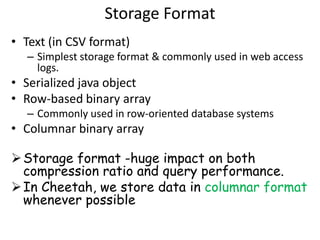

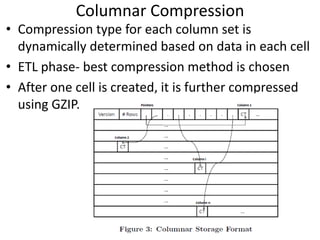

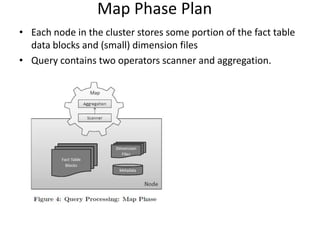

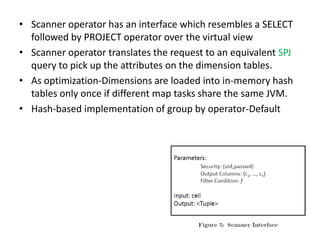

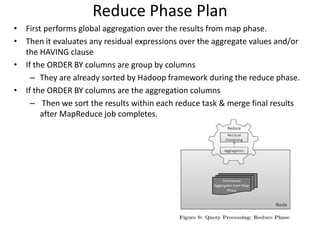

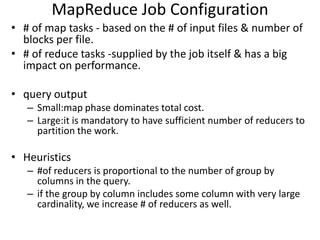

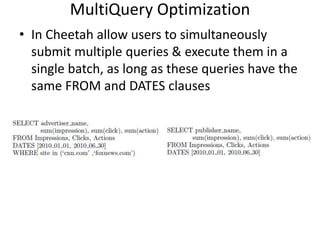

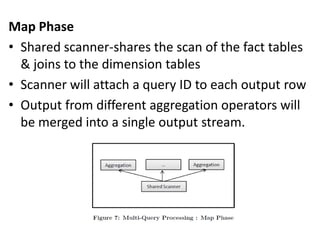

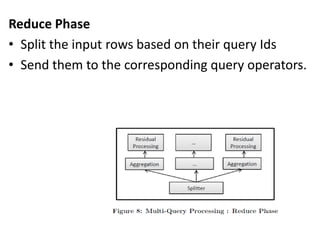

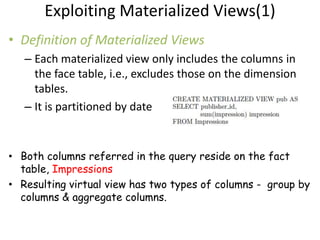

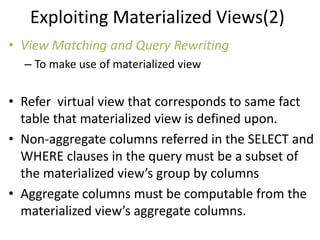

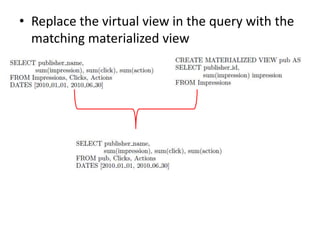

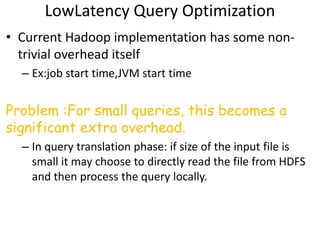

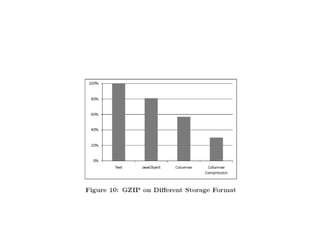

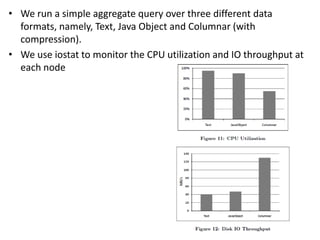

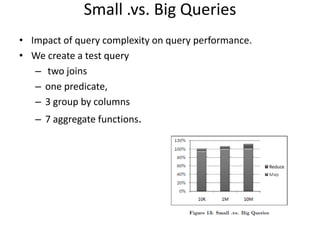

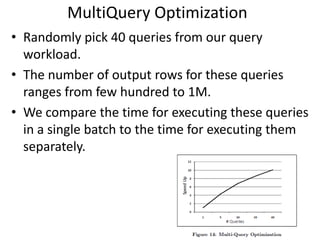

Cheetah is a custom data warehouse system built on top of Hadoop for storing and querying large datasets with high performance. It exposes a virtual view abstraction over star and snowflake schemas, which gives users a simple yet powerful SQL-like query language. The architecture uses MapReduce to parallelize query execution across many nodes. To improve query performance, Cheetah employs columnar data storage and compression, multi-query optimization, and materialized views. In the evaluations, Cheetah handles both small and large queries efficiently, and processing a batch of queries together outperforms executing the same queries one at a time.
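
To make the batch-versus-single-query claim concrete, here is a minimal sketch of one common form of multi-query optimization: a shared scan, in which several aggregate queries are answered in a single pass over the fact data. This is an illustration under stated assumptions, not Cheetah's implementation. The `AggQuery` class, the function names, and the sample columns (`advertiser`, `country`, `impressions`, `clicks`) are all hypothetical, and the code runs in a single process rather than as a MapReduce job.

```python
from collections import defaultdict


class AggQuery:
    """Hypothetical SUM(measure) GROUP BY group_by query with an optional filter."""

    def __init__(self, name, group_by, measure, predicate=None):
        self.name = name
        self.group_by = group_by
        self.measure = measure
        # Default filter accepts every row.
        self.predicate = predicate if predicate is not None else (lambda row: True)


def run_shared_scan(rows, queries):
    """Answer all queries in one pass; returns (results, rows_read)."""
    results = {q.name: defaultdict(int) for q in queries}
    rows_read = 0
    for row in rows:
        rows_read += 1  # each row is read once for the whole batch
        for q in queries:
            if q.predicate(row):
                results[q.name][row[q.group_by]] += row[q.measure]
    return {name: dict(groups) for name, groups in results.items()}, rows_read


def run_one_by_one(rows, queries):
    """Answer each query with its own pass; returns (results, rows_read)."""
    results = {}
    rows_read = 0
    for q in queries:
        single, read = run_shared_scan(rows, [q])
        results.update(single)
        rows_read += read  # the data is re-read for every query
    return results, rows_read


# Illustrative fact rows (assumed data, not taken from the source).
ROWS = [
    {"advertiser": "a1", "country": "US", "impressions": 10, "clicks": 1},
    {"advertiser": "a1", "country": "DE", "impressions": 5, "clicks": 0},
    {"advertiser": "a2", "country": "US", "impressions": 7, "clicks": 2},
]

QUERIES = [
    AggQuery("impressions_by_advertiser", "advertiser", "impressions"),
    AggQuery("us_clicks_by_country", "country", "clicks",
             predicate=lambda row: row["country"] == "US"),
]

if __name__ == "__main__":
    batch_results, batch_reads = run_shared_scan(ROWS, QUERIES)
    single_results, single_reads = run_one_by_one(ROWS, QUERIES)
    print(batch_results)
    print("rows read (batch):", batch_reads, "rows read (one by one):", single_reads)
```

Both strategies return the same answers. The difference is that the shared scan reads each row once regardless of batch size, while the one-by-one strategy reads the data once per query. In a system where scanning dominates cost, that is one plausible reason batched execution can come out ahead.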