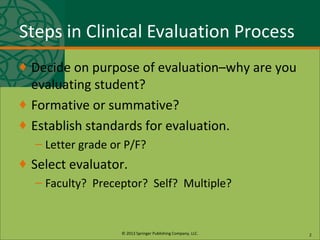

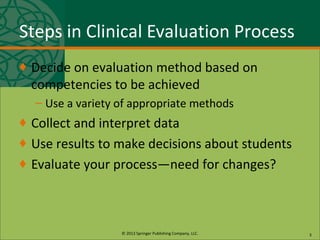

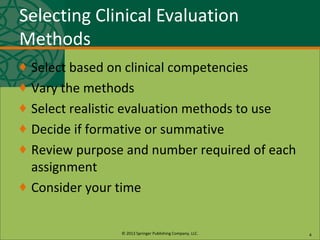

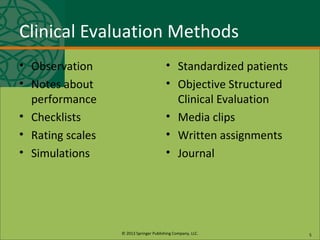

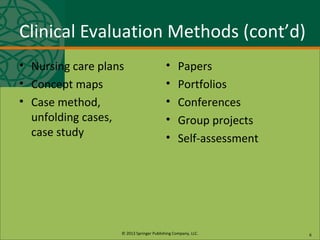

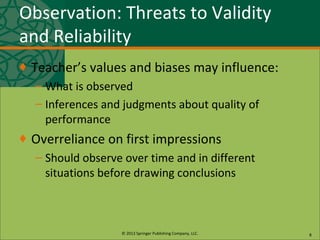

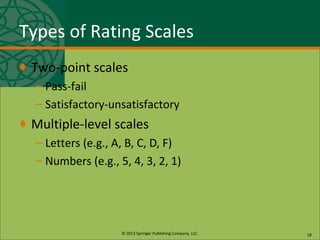

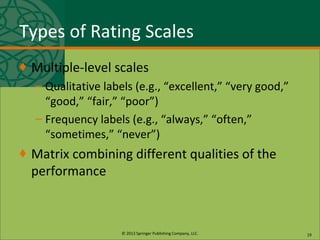

The document surveys methods for evaluating nursing students' clinical performance: observation, notes, checklists, rating scales, simulations, standardized patients, and objective structured clinical examinations (OSCEs). It offers guidance on selecting evaluation methods to match the competencies being assessed, ensuring validity and reliability, and using rating scales in ways that reduce common errors in clinical evaluation.