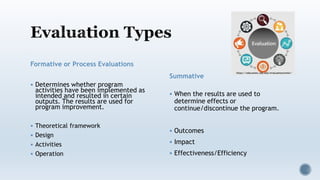

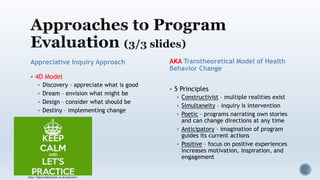

This document discusses program evaluation and outlines its key concepts and approaches. It describes the purposes of program evaluation as determining whether a program's objectives are met and improving decision making. It explains formative and summative evaluation: formative evaluation supports ongoing improvement, while summative evaluation determines a program's effects. Both quantitative and qualitative methods are appropriate, and designs may be experimental, quasi-experimental, or non-experimental. Stakeholder involvement, utilization of results, and attention to ethical considerations are presented as important aspects of program evaluation.