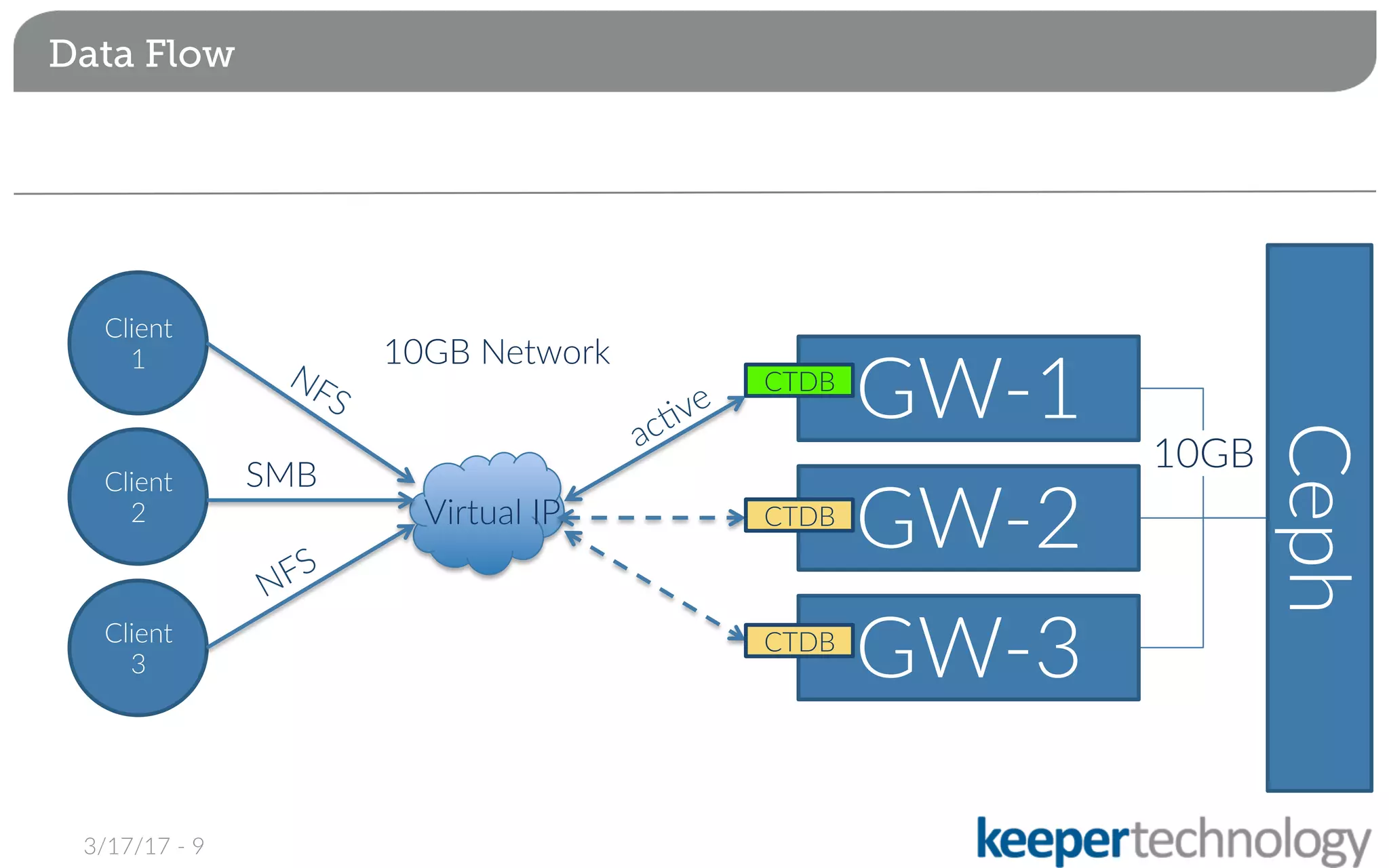

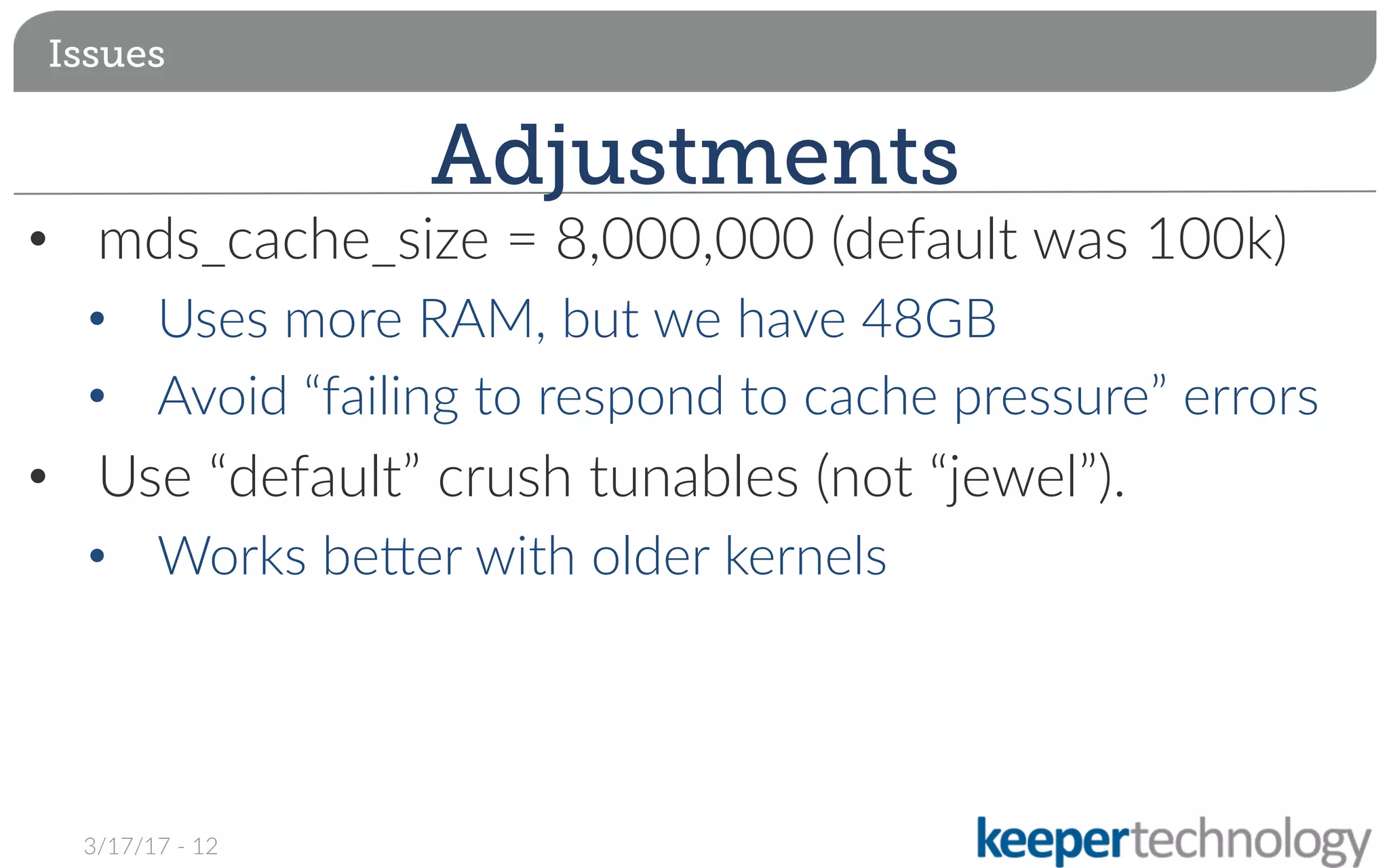

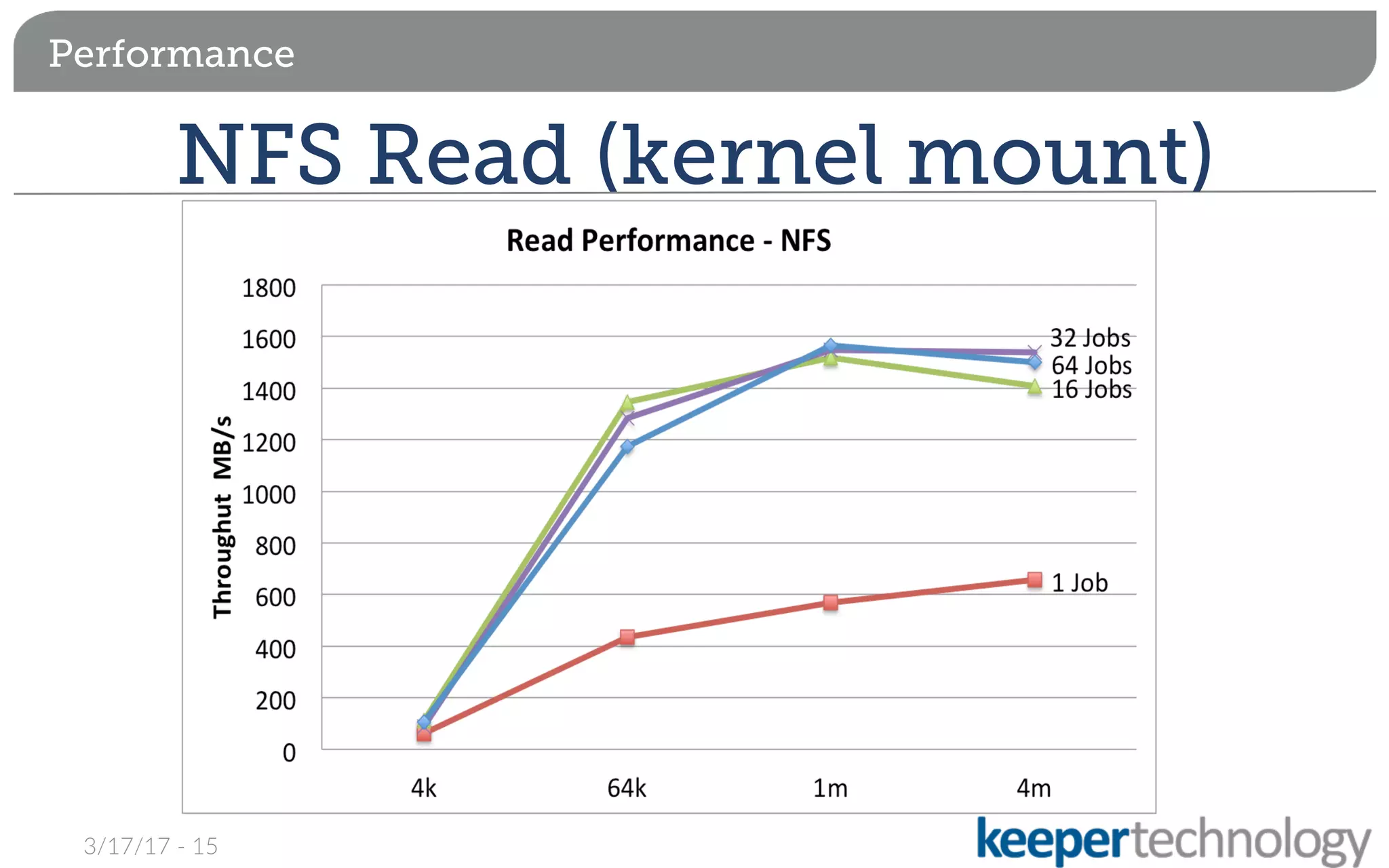

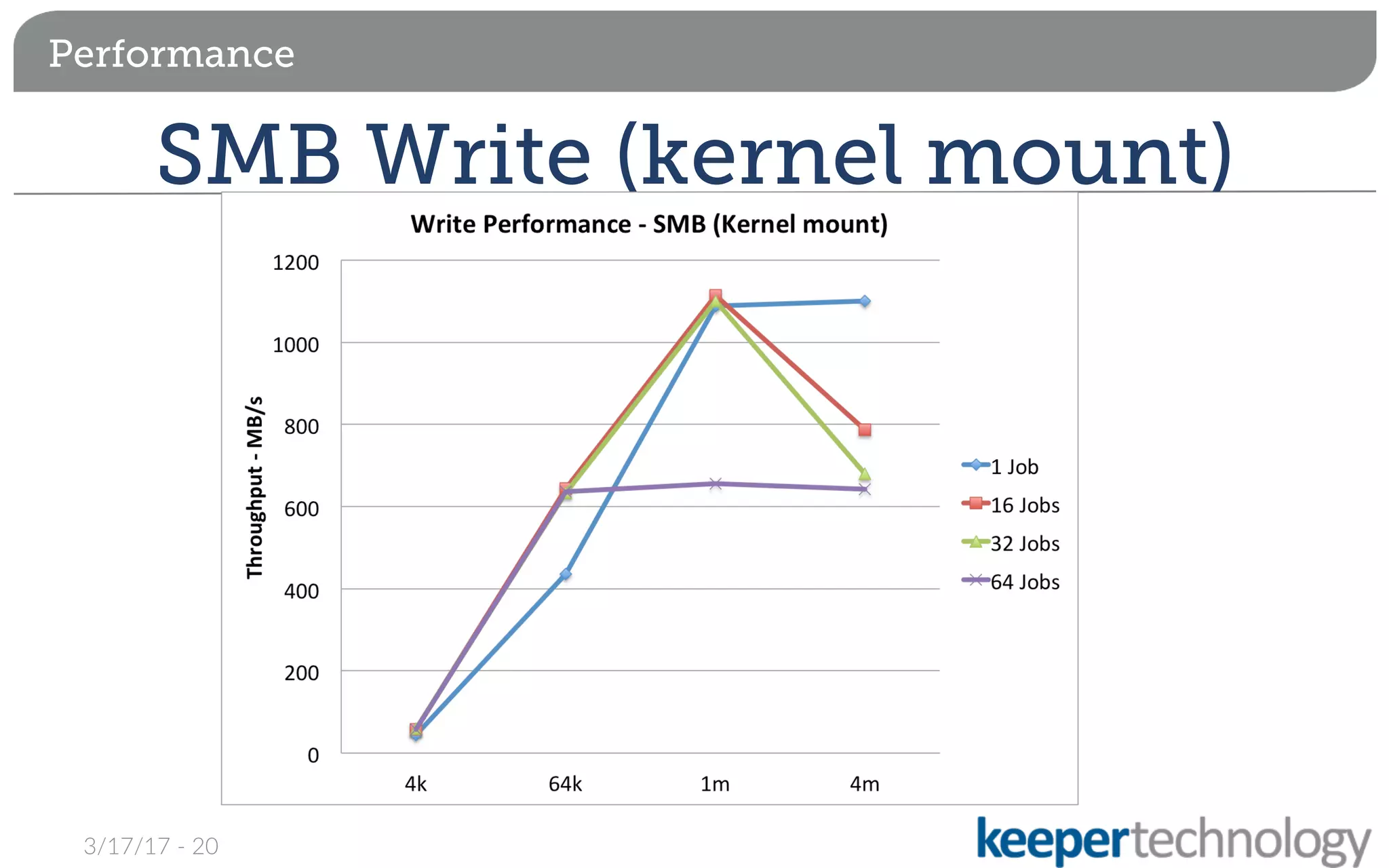

The document discusses implementing a highly available NAS gateway on CephFS. A six-node Ceph cluster with 80 TB of usable storage was configured with two gateway servers running Samba and NFS-Ganesha, which export the CephFS filesystem as SMB and NFS shares. CTDB manages failover between the gateways, and floating IP addresses move to the surviving gateway so that clients keep connecting to the same address. Some issues were encountered with snapshots, quotas, and performance, but the overall solution provides highly available NAS over standard protocols on top of CephFS.
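The floating-IP failover described above can be illustrated with a toy model. This is only a sketch of the general idea, not CTDB's actual allocation algorithm or configuration: the `Gateway` class, the `rebalance` function, the gateway names, and the addresses (taken from the 192.0.2.0/24 documentation range) are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Gateway:
    """Hypothetical stand-in for one Samba/NFS gateway node."""
    name: str
    healthy: bool = True
    ips: list = field(default_factory=list)


def rebalance(gateways, floating_ips):
    """Spread the floating IPs round-robin over the healthy gateways.

    Returns a dict mapping gateway name -> list of IPs it now hosts.
    """
    for gw in gateways:
        gw.ips.clear()
    healthy = [gw for gw in gateways if gw.healthy]
    if healthy:  # with no healthy gateway, the IPs stay unassigned
        for i, ip in enumerate(sorted(floating_ips)):
            healthy[i % len(healthy)].ips.append(ip)
    return {gw.name: list(gw.ips) for gw in gateways}


# Assumed example values, not taken from the deck.
gateways = [Gateway("gw1"), Gateway("gw2")]
floating_ips = ["192.0.2.10", "192.0.2.11"]

before = rebalance(gateways, floating_ips)  # one IP per gateway
gateways[0].healthy = False                 # simulate gw1 failing
after = rebalance(gateways, floating_ips)   # gw2 takes over both IPs
```

Because clients only ever connect to the floating addresses, the move from `gw1` to `gw2` does not require them to learn a new server address; they reconnect to the same IP on the surviving node.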

![Thank You | 21740 Beaumeade Circle | Suite 150 | Ashburn, VA 20147 | P [571] 333 2725 | F [703] 738 7231 | solutions@keepertech.com | www.keepertech.com](https://image.slidesharecdn.com/06-cephday-ha-nas-170320192159/75/Ceph-Day-San-Jose-HA-NAS-with-CephFS-24-2048.jpg)