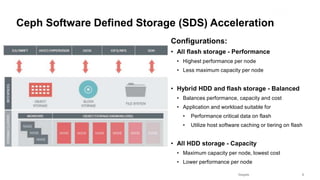

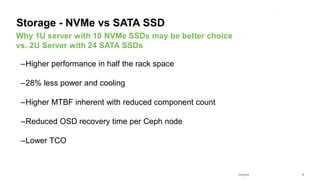

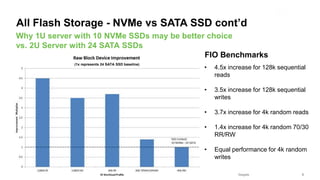

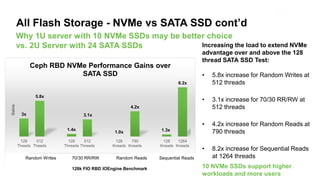

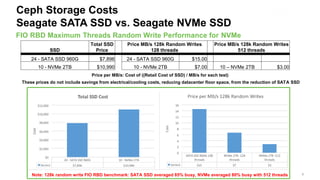

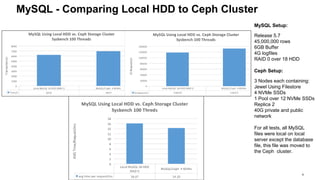

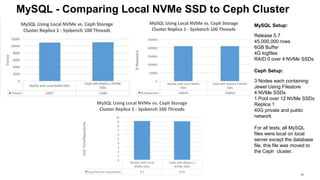

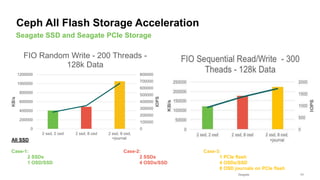

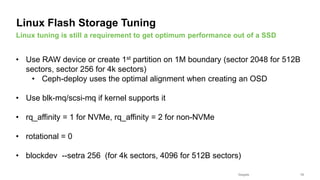

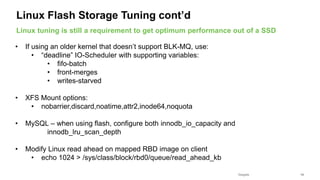

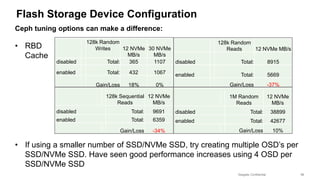

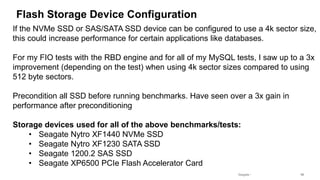

The document discusses accelerating application performance with flash storage on Ceph software-defined storage, and compares three configurations: 1) all-flash storage to maximize performance, 2) a hybrid configuration of flash and HDDs to balance performance and capacity, and 3) all-HDD storage for maximum capacity at the lowest performance. It also compares NVMe SSDs with SATA SSDs, and examines how to tune Linux settings and Ceph configuration to improve flash performance for applications.
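
As a rough illustration of the three configurations, the sketch below labels a Linux host as all-flash, hybrid, or all-HDD. It is not taken from the slides. It assumes the standard sysfs attribute `/sys/block/<device>/queue/rotational`, which the kernel sets to `1` for spinning disks and `0` for non-rotational devices. The function names `read_rotational_flags` and `classify_layout` are hypothetical, and virtual devices such as loop or RAM disks would need to be filtered out before the result means anything for a real Ceph node.

```python
from pathlib import Path


def read_rotational_flags(sys_block="/sys/block"):
    """Map each block device name to True if the kernel reports it as rotational (HDD)."""
    flags = {}
    for dev in sorted(Path(sys_block).iterdir()):
        flag_file = dev / "queue" / "rotational"
        if flag_file.is_file():
            # The file holds "1" for rotational media and "0" otherwise.
            flags[dev.name] = flag_file.read_text().strip() == "1"
    return flags


def classify_layout(flags):
    """Label a set of devices as one of the three configurations in the summary."""
    if not flags:
        raise ValueError("no block devices found")
    hdds = sum(1 for rotational in flags.values() if rotational)
    if hdds == 0:
        return "all-flash"
    if hdds == len(flags):
        return "all-HDD"
    return "hybrid"
```

On a Linux host, `classify_layout(read_rotational_flags())` returns one of the three labels. Passing a dictionary directly, for example `classify_layout({"sda": True, "nvme0n1": False})`, makes it possible to try the classification without touching sysfs.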