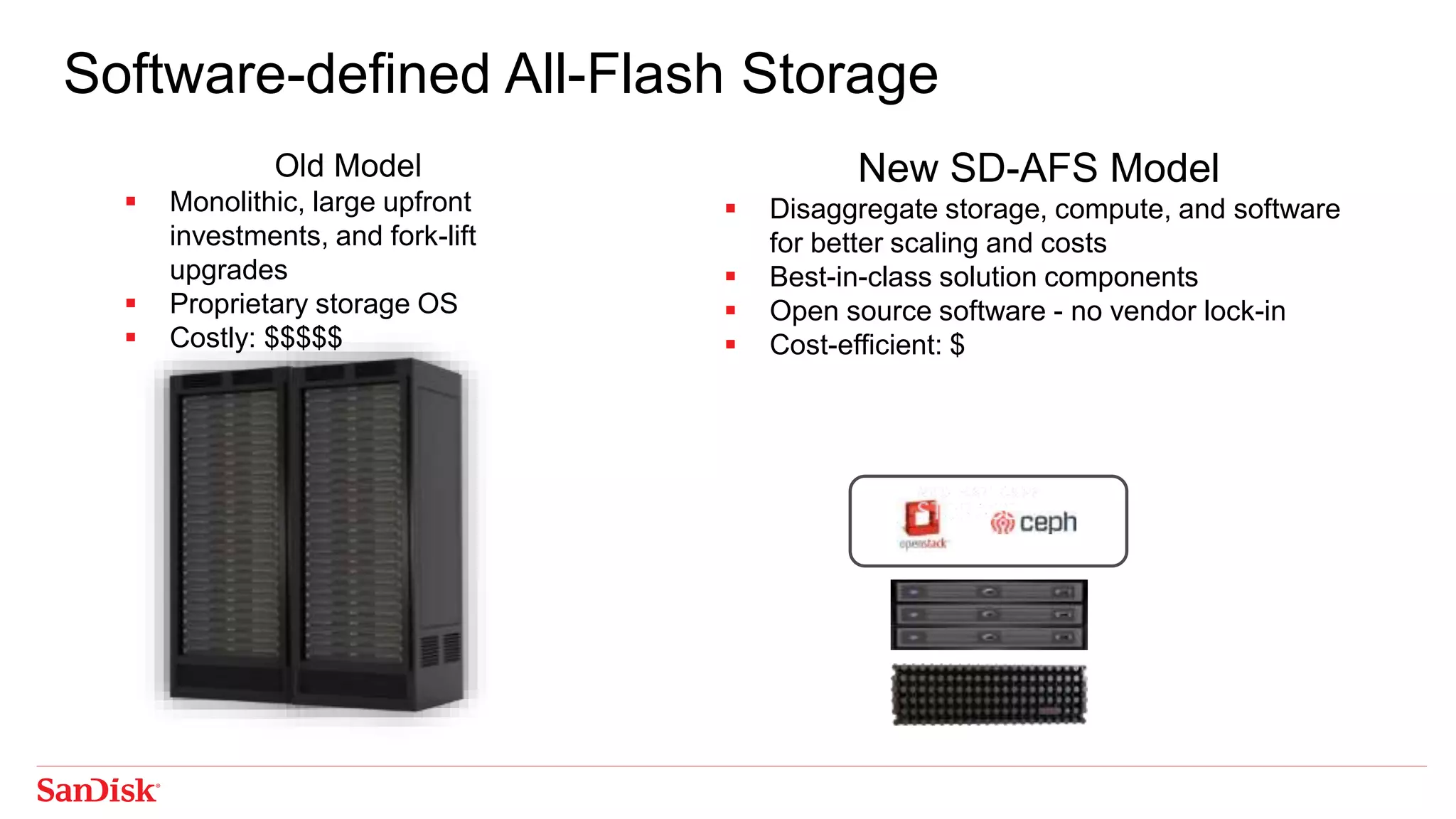

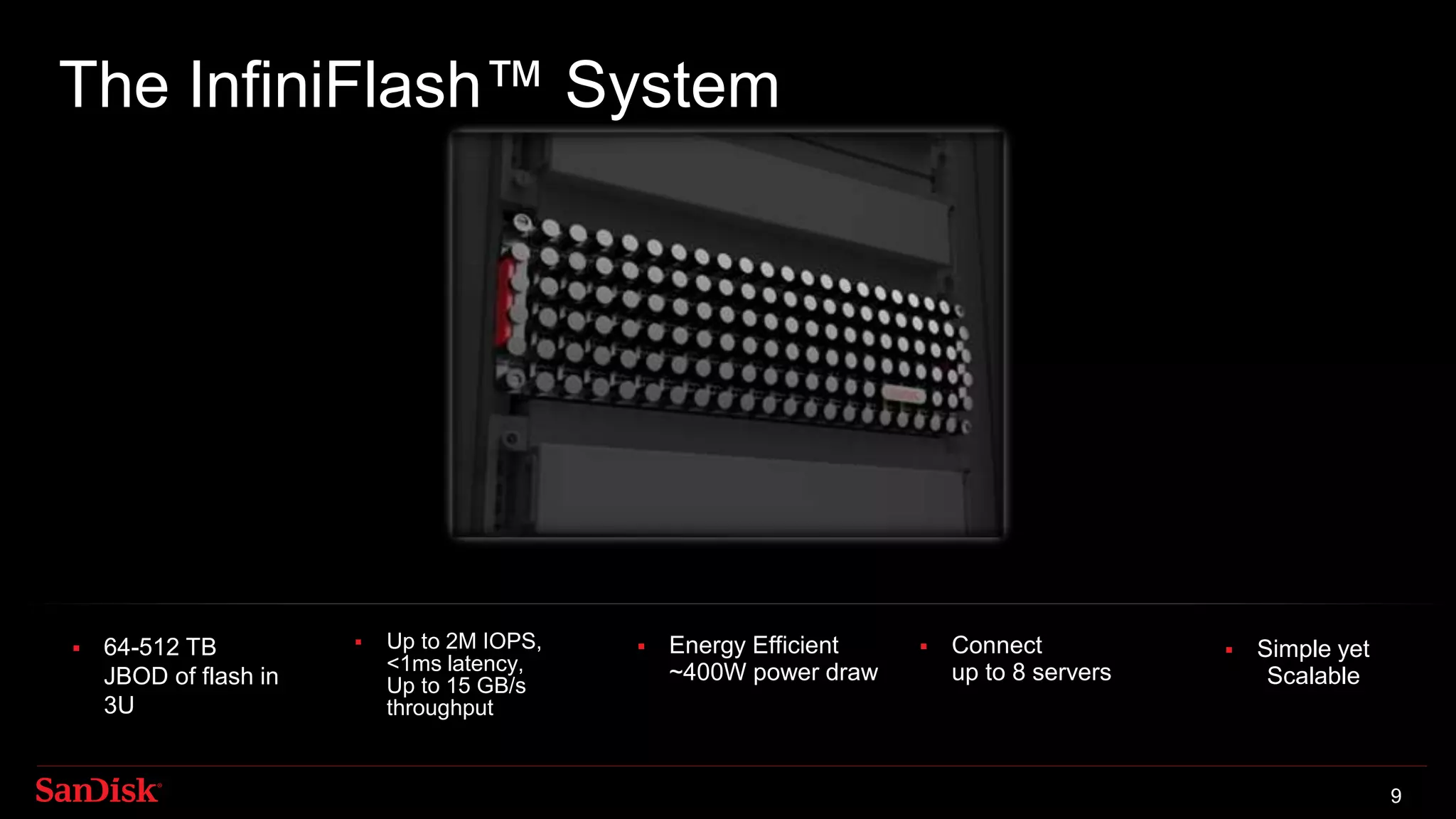

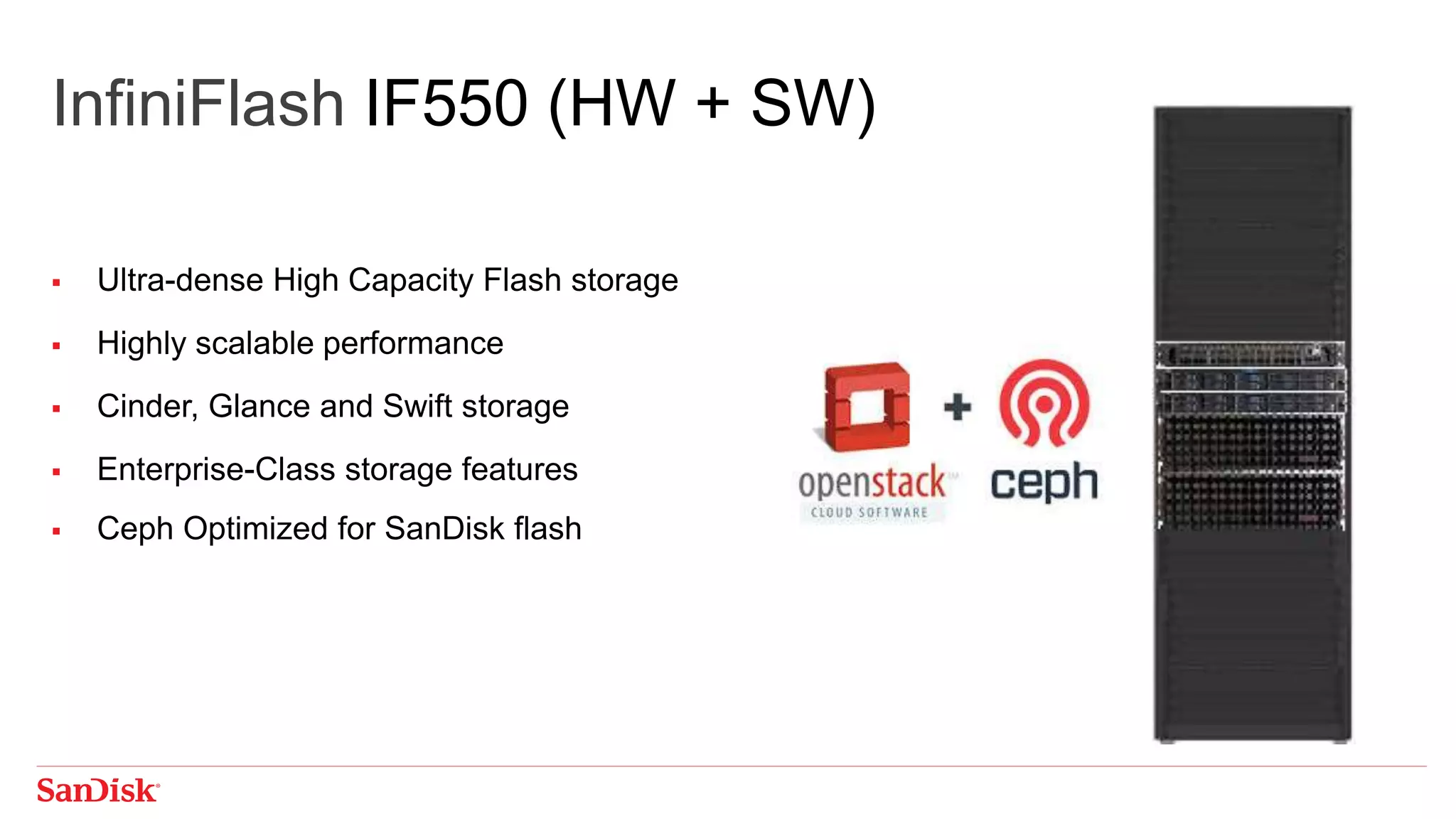

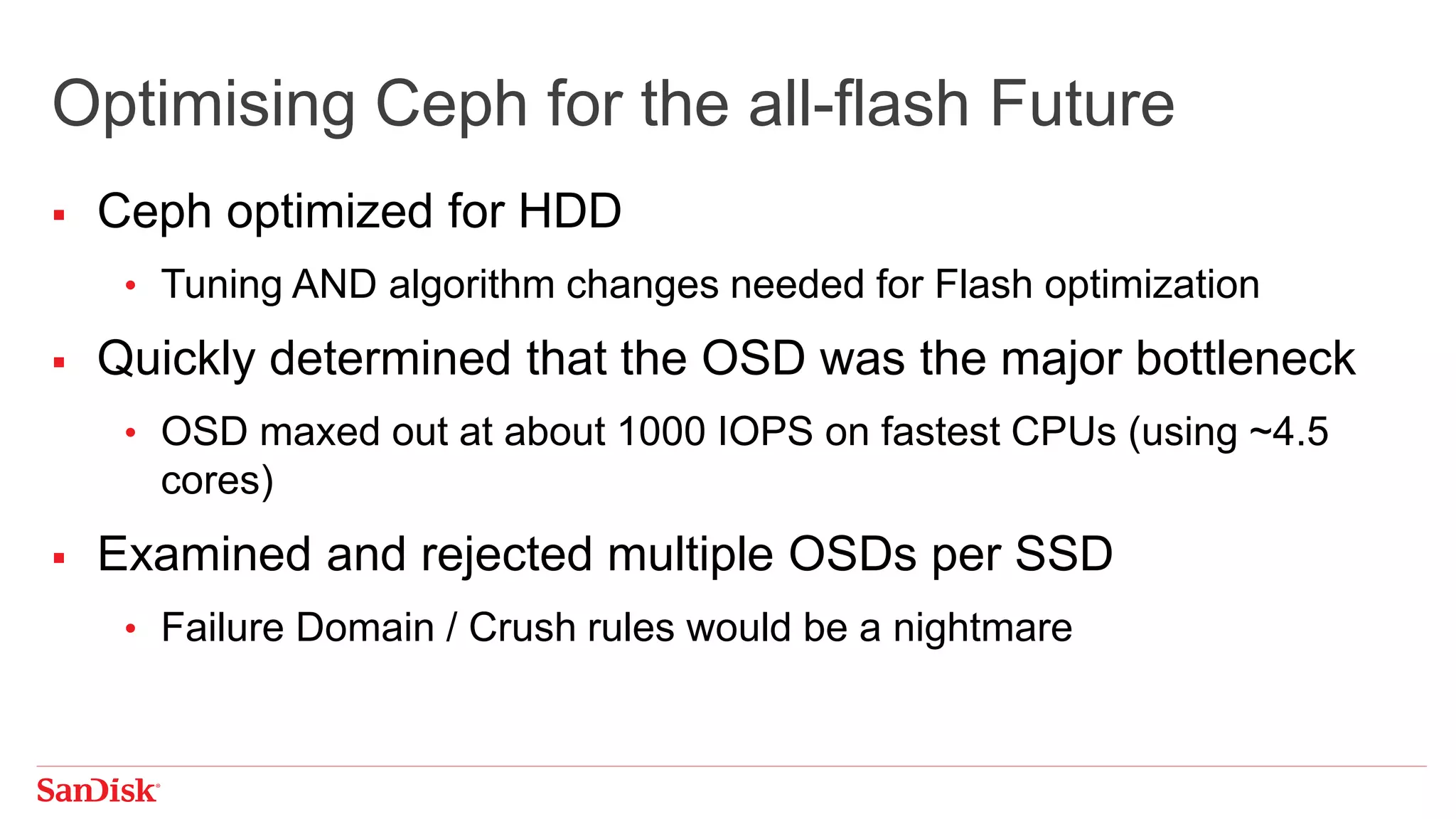

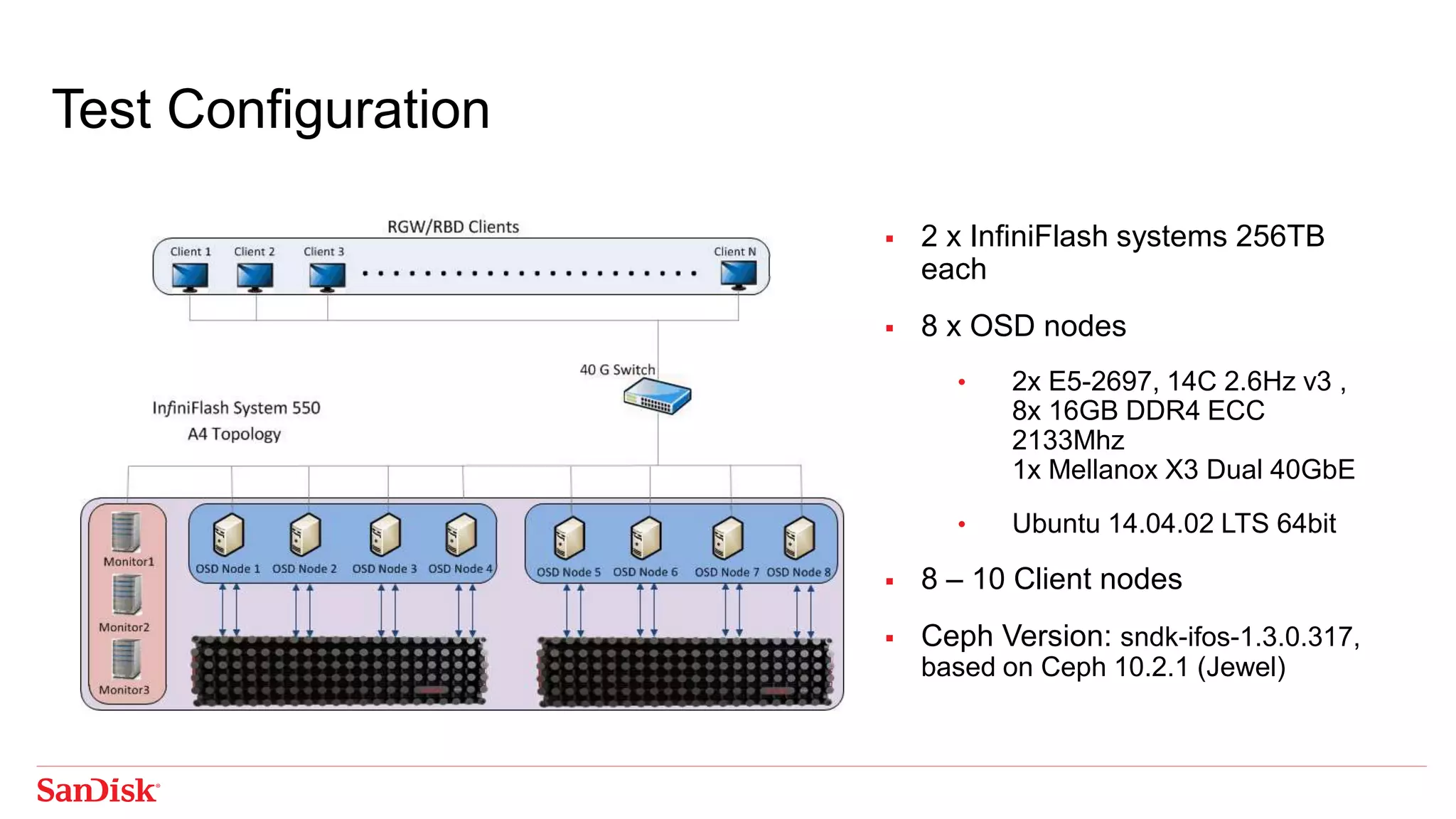

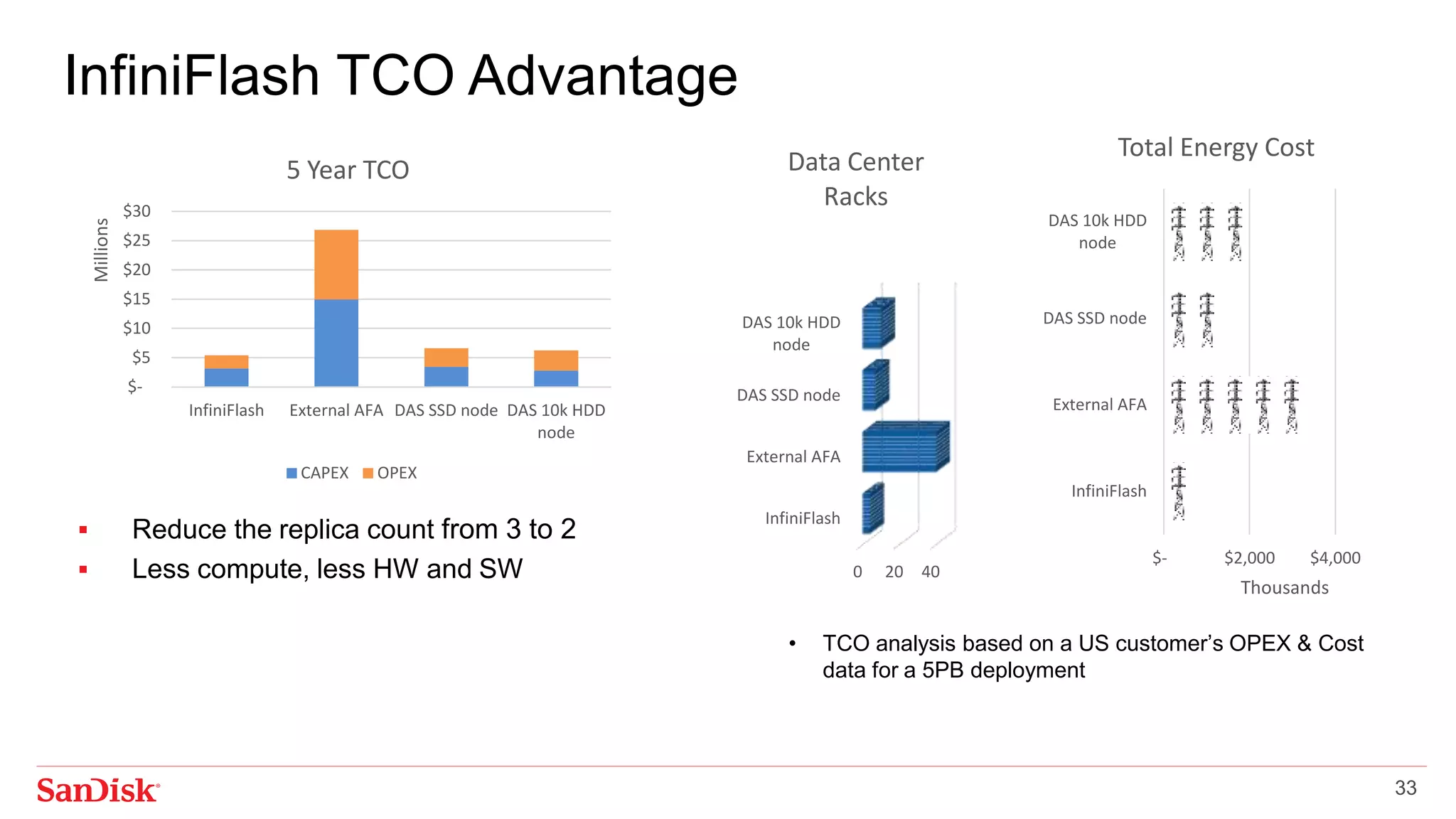

The document covers Ceph storage performance on all-flash storage systems. It describes how SanDisk optimized Ceph for all-flash environments by tuning the OSD (object storage daemon) to keep up with the performance of flash drives. With these optimizations, a single OSD delivered over 200,000 IOPS using 12 CPU cores. Testing on SanDisk's InfiniFlash storage system showed over 1.5 million random read IOPS and 200,000 random write IOPS at a 64 KB block size. Latency was also low, with 99% of read operations completing in under 5 ms. The document also outlines InfiniFlash reference configurations for small, medium, and large workloads.
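
To put the quoted figures in perspective, the short Python sketch below converts them into per-core IOPS and implied bandwidth. It takes the summary's numbers at face value and assumes that "64 KB" means 64 × 1024 bytes. The function names are illustrative and do not come from the original document, and because the source gives "over" values, the results are rough lower bounds rather than measured throughput.

```python
KIB = 1024  # assumption: "64 KB" in the summary means 64 * 1024 bytes


def iops_per_core(iops: float, cores: int) -> float:
    """Average IOPS handled per CPU core."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return iops / cores


def implied_bandwidth(iops: float, block_size_bytes: int) -> float:
    """Bandwidth in bytes per second implied by an IOPS figure at a fixed block size."""
    return iops * block_size_bytes


# Figures quoted in the summary above.
per_core = iops_per_core(200_000, 12)
read_bw = implied_bandwidth(1_500_000, 64 * KIB)
write_bw = implied_bandwidth(200_000, 64 * KIB)

print(f"IOPS per core:   {per_core:,.0f}")
print(f"Read bandwidth:  {read_bw / 1e9:.1f} GB/s")
print(f"Write bandwidth: {write_bw / 1e9:.1f} GB/s")
```

The same two helpers can be reused to compare other block sizes or core counts when sizing a configuration.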